Output factors define what Dendro tries to optimize - they are your business objectives translated into measurable metrics. While input factors control what can change, output factors determine what "better" means.

Key concepts:

This guide explains how to configure output factors to align Dendro's optimization with your business goals. These can be specified in the Output Factors input table in Cosmic Frog models.

Recommended reading prior to diving into this guide: Getting Started with Dendro, a high-level overview of how Dendro works, and Dendro: Genetic Algorithm Guide which explains the inner workings of Dendro in more detail.

An output factor tells Dendro's genetic algorithm:

Imagine you are evaluating employee performance:

Dendro uses the same approach to evaluate inventory policy combinations across your supply chain.

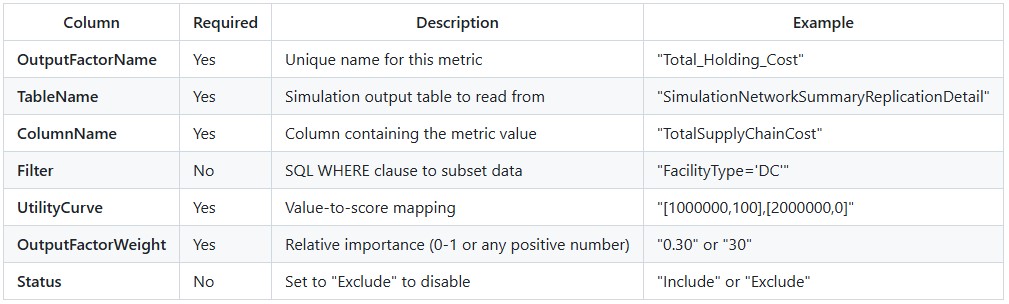

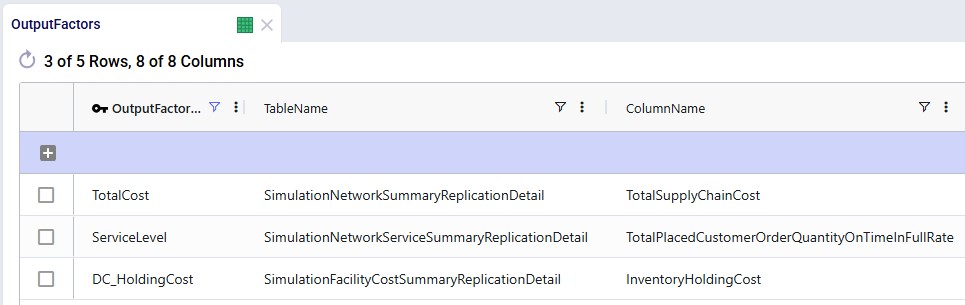

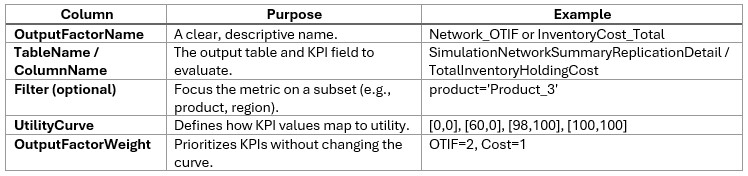

Output factors are configured in the Output Factors input table with the following columns:

Minimize overall supply chain costs:

OutputFactorName: TotalCost

TableName: SimulationNetworkSummaryReplicationDetail

ColumnName: TotalSupplyChainCost

Filter: (leave empty for all data)

UtilityCurve: [1500000,100],[2000000,0]

OutputFactorWeight: 0.40

Status: Include

Interpretation:

Maximize service level performance:

OutputFactorName: ServiceLevel

TableName: SimulationNetworkServiceSummaryReplicationDetail

ColumnName: TotalPlacedCustomerOrderQuantityOnTimeInFullRate

Filter: (leave empty)

UtilityCurve: [0,0],[85,10],[95,99],[100,100]

OutputFactorWeight: 0.35

Status: Include

Interpretation:

Focus on inventory costs at distribution centers only:

OutputFactorName: DC_HoldingCost

TableName: SimulationFacilityCostSummaryReplicationDetail

ColumnName: InventoryHoldingCost

Filter: FacilityType='DC'

UtilityCurve: [50000,100],[150000,0]

OutputFactorWeight: 0.25

Status: Include

Interpretation:

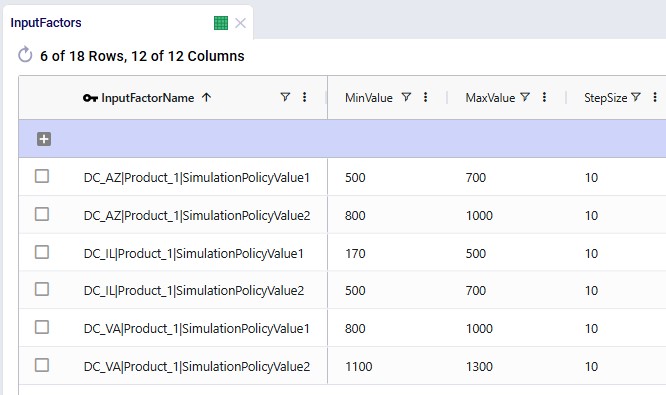

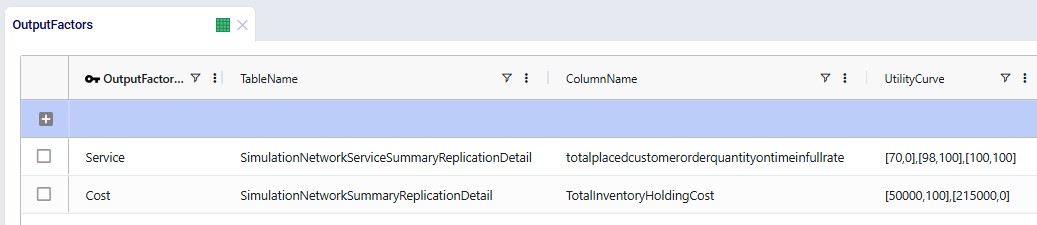

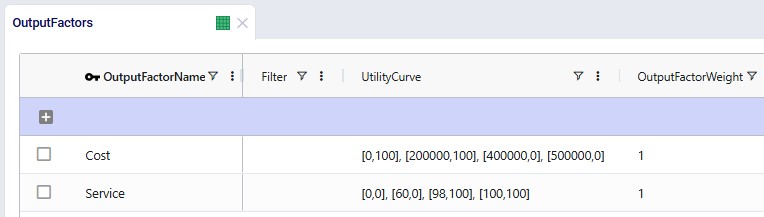

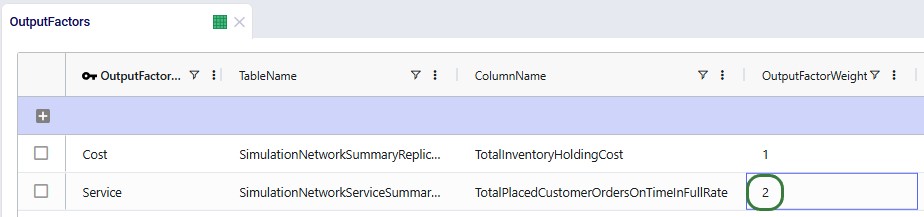

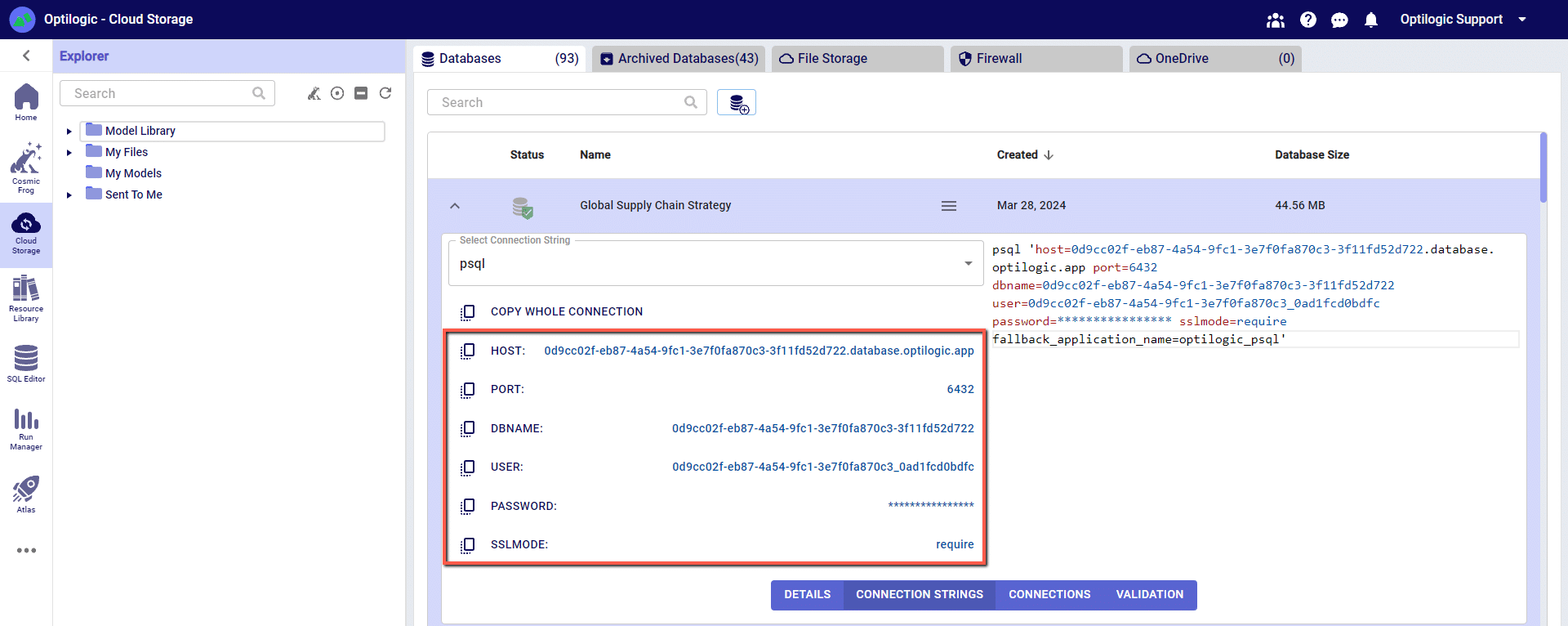

The following 2 screenshots show the Output Factors input table in Cosmic Frog; it contains 3 rows which represent the 3 examples above:

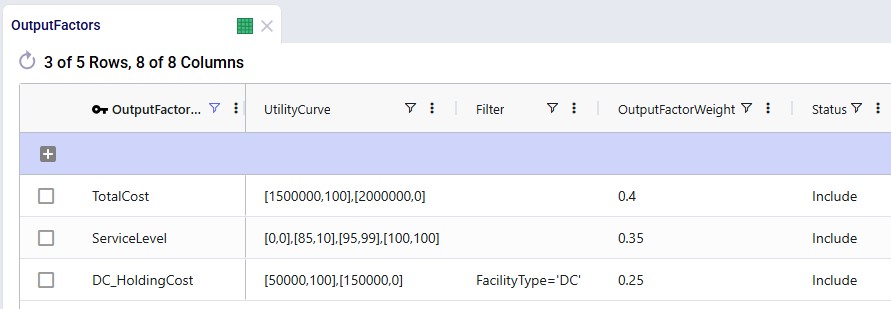

A utility curve translates raw metric values (like dollars or percentages) into standardized quality scores (0-100 points). It answers the question: "How good is this value?".

Format: [value1,score1],[value2,score2],[value3,score3],...

Each [value,score] pair defines a point on the curve:

Dendro connects these points with straight lines to create a piecewise linear curve.
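As a minimal sketch of how such a curve could be evaluated, the Python below parses a curve string and interpolates linearly between neighbouring points. The function names are hypothetical, and clamping values that fall outside the curve to the nearest end point is an assumption, not documented Dendro behavior.

```python
import re


def parse_utility_curve(text):
    """Parse '[v1,s1],[v2,s2],...' into (value, score) tuples sorted by value."""
    pairs = re.findall(r"\[([^\[\]]+)\]", text.replace(" ", ""))
    points = []
    for pair in pairs:
        value, score = pair.split(",")
        points.append((float(value), float(score)))
    return sorted(points)


def utility_score(curve_text, value):
    """Piecewise linear interpolation of `value` on the curve."""
    points = parse_utility_curve(curve_text)
    # Assumption: values outside the curve take the score of the nearest end point.
    if value <= points[0][0]:
        return points[0][1]
    if value >= points[-1][0]:
        return points[-1][1]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x0 <= value <= x1:
            return y0 + (value - x0) / (x1 - x0) * (y1 - y0)


print(utility_score("[1000,100],[2000,0]", 1500))  # 50.0
print(round(utility_score("[0,0],[85,10],[95,99],[100,100]", 92), 1))  # 72.3
```

The second call lands on the steep segment between 85 and 95, which is why a 92% service level scores far above the 10 points awarded at 85%.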

[1000,100],[2000,0]

Meaning:

Visualization:

Best for:

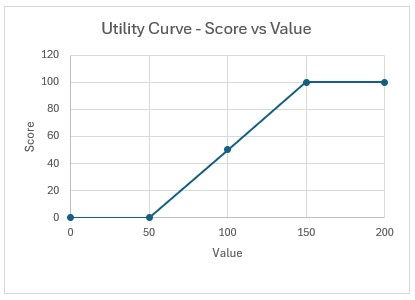

[50,0],[100,50],[150,100]

Meaning:

Visualization:

Best for:

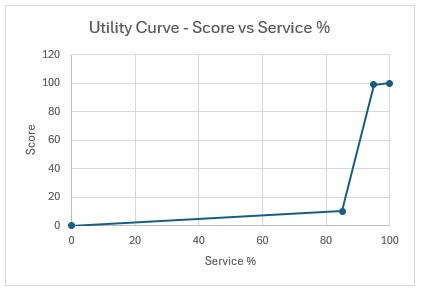

[0,0],[85,10],[95,99],[100,100]

Meaning:

Characteristics:

Visualization:

Best for:

The challenge: Early in optimization, Dendro does not know what the best and worst possible values are.

The solution: Utility curves automatically expand when new extremes are discovered.

Example scenario:

Generation 1:

[1800000,100],[2200000,0]

Generation 5:

[1600000,100],[2200000,0]

Why it matters:

When rescaling occurs:

Weights determine how much each output factor contributes to the overall fitness score.

Common approaches:

Total Cost: Weight = 0.40 (40%)

Service Level: Weight = 0.35 (35%)

Inventory Value: Weight = 0.25 (25%)

Total = 1.00 (100%)

Advantage: Easy to understand - directly represents percentage contribution.

Total Cost: Weight = 40

Service Level: Weight = 35

Inventory Value: Weight = 25

Total = 100 (but could be any sum)

Advantage: Can use any convenient scale; Dendro handles normalization.

Critical Factor: Weight = 10

Important Factor: Weight = 5

Secondary Factor: Weight = 2

Minor Factor: Weight = 1

Advantage: Emphasizes relative priorities clearly.

Example calculation:

Output Factors:

Holding Cost: Raw value = $80,000 → Utility score = 60 → Weight = 0.40

Service Level: Raw value = 94% → Utility score = 85 → Weight = 0.35

Transport Cost: Raw value = $45,000 → Utility score = 70 → Weight = 0.25

Weighted Contributions:

Holding Cost: 60 × 0.40 = 24.0 points

Service Level: 85 × 0.35 = 29.75 points

Transport Cost: 70 × 0.25 = 17.5 points

Overall Fitness Score: 24.0 + 29.75 + 17.5 = 71.25 points

Higher fitness scores are better in Dendro.

The fitness calculation adds these weighted scores, so the formula is:

Fitness = 24.0 + 29.75 + 17.5 = 71.25
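The weighted sum can be sketched in a few lines of Python. The `fitness` function is hypothetical, and dividing each weight by the sum of all weights is one plausible reading of "Dendro handles normalization", not a confirmed implementation detail.

```python
def fitness(factors):
    """factors: list of (utility_score, weight) pairs.

    Assumption: weights are normalized by dividing by their sum, so any
    convenient weight scale yields the same fitness score.
    """
    total_weight = sum(weight for _, weight in factors)
    return sum(score * weight / total_weight for score, weight in factors)


fractional = [(60, 0.40), (85, 0.35), (70, 0.25)]  # weights sum to 1.00
percent = [(60, 40), (85, 35), (70, 25)]           # same priorities on a 0-100 scale

print(round(fitness(fractional), 2))  # 71.25
print(round(fitness(percent), 2))     # 71.25
```

Under this assumption both weight scales give the same result, which is why only the ratios between weights matter.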

Balanced approach:

Cost factors (total): 50%

Service factors (total): 50%

Equal priority to cost reduction and service achievement.

Cost-focused approach:

Cost factors (total): 70%

Service factors (total): 30%

Emphasizes cost minimization; willing to accept moderate service levels.

Service-focused approach:

Cost factors (total): 30%

Service factors (total): 70%

Prioritizes customer service; cost is secondary.

Multi-objective balanced:

Holding cost: 25%

Transport cost: 25%

Service level: 25%

Inventory turns: 25%

Equal consideration to multiple objectives.

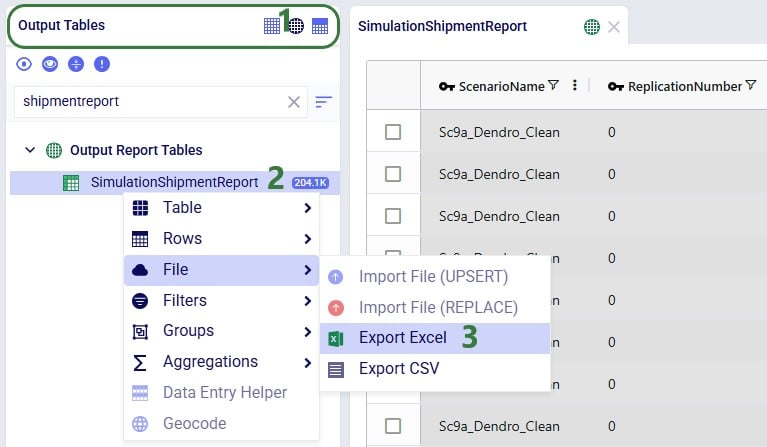

For each output factor, Dendro reads the metric value from the simulation results:

Factor 1 (Total Cost): Reads TotalSupplyChainCost → $1,750,000

Factor 2 (Service Level): Reads OnTimeInFullRate → 92%

Factor 3 (Holding Cost): Reads InventoryHoldingCost → $320,000

Each value is mapped to a raw score using its utility curve:

Factor 1: $1,750,000 → Utility curve [1500000,100],[2000000,0]

Interpolation: (1,750,000 - 1,500,000) / (2,000,000 - 1,500,000) = 0.5

Raw score: 100 - (0.5 × 100) = 50

Factor 2: 92% → Utility curve [0,0],[85,10],[95,99],[100,100]

Falls between 85 and 95: Interpolate

Raw score: 10 + ((92-85)/(95-85)) × (99-10) = 10 + 62.3 = 72.3

Factor 3: $320,000 → Utility curve [200000,100],[400000,0]

Interpolation: (320,000 - 200,000) / (400,000 - 200,000) = 0.6

Raw score: 100 - (0.6 × 100) = 40

Utility scores are scaled to a 0-100 range based on the min/max scores in the curve:

If all curves use 0-100 range → No additional scaling needed

Scores are already normalized: 50, 72.3, 40

Multiply each normalized score by its weight:

Factor 1: 50 × 0.40 = 20.0

Factor 2: 72.3 × 0.35 = 25.3

Factor 3: 40 × 0.25 = 10.0

Combine all weighted scores:

Overall score = 20.0 + 25.3 + 10.0 = 55.3
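The full sequence (read value, interpolate, normalize, weight, sum) can be reproduced with a short Python sketch. The helper names, the clamping outside the curve, and the exact normalization formula are assumptions made for illustration; only the input numbers come from the example.

```python
def interpolate(points, value):
    """Piecewise linear interpolation over (value, score) points, clamped at the ends."""
    points = sorted(points)
    if value <= points[0][0]:
        return float(points[0][1])
    if value >= points[-1][0]:
        return float(points[-1][1])
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x0 <= value <= x1:
            return y0 + (value - x0) / (x1 - x0) * (y1 - y0)


def normalize(points, raw_score):
    """Rescale a raw score to 0-100 using the min/max scores in the curve (assumed formula)."""
    scores = [score for _, score in points]
    low, high = min(scores), max(scores)
    if high == low:
        return raw_score  # flat curve: nothing to rescale
    return (raw_score - low) / (high - low) * 100


def overall_fitness(factors):
    """Sum of normalized utility scores multiplied by their weights."""
    total = 0.0
    for factor in factors:
        raw = interpolate(factor["curve"], factor["value"])
        total += normalize(factor["curve"], raw) * factor["weight"]
    return total


FACTORS = [
    {"value": 1_750_000, "curve": [(1_500_000, 100), (2_000_000, 0)], "weight": 0.40},
    {"value": 92, "curve": [(0, 0), (85, 10), (95, 99), (100, 100)], "weight": 0.35},
    {"value": 320_000, "curve": [(200_000, 100), (400_000, 0)], "weight": 0.25},
]

print(round(overall_fitness(FACTORS), 1))  # 55.3
```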

Chromosome A:

Total Cost: $1,600,000 → Score 80 → Weighted 32.0

Service: 96% → Score 99.2 → Weighted 34.7

Holding: $280,000 → Score 60 → Weighted 15.0

Total: 81.7

Chromosome B:

Total Cost: $1,750,000 → Score 50 → Weighted 20.0

Service: 92% → Score 72.3 → Weighted 25.3

Holding: $320,000 → Score 40 → Weighted 10.0

Total: 55.3

Result: Chromosome A (fitness score of 81.7) is better than Chromosome B (fitness score of 55.3).

Chromosome A achieves better performance across all metrics, resulting in a higher fitness score.

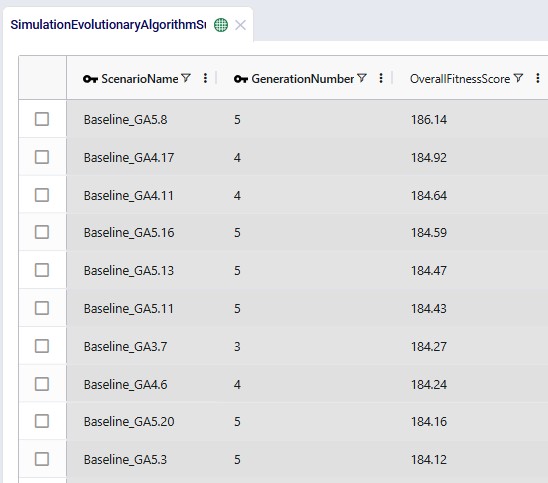

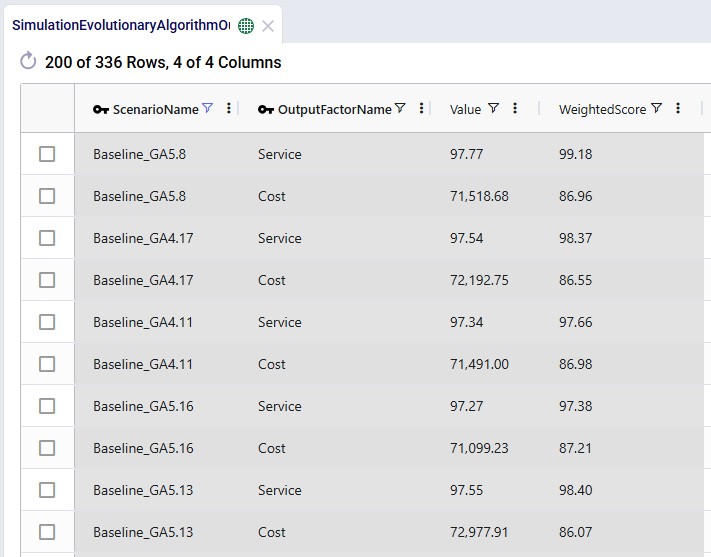

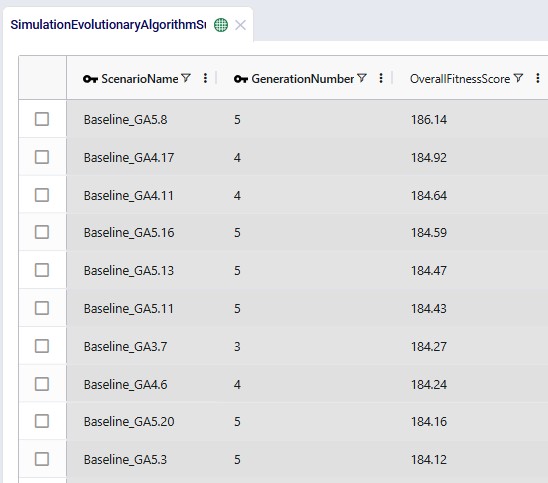

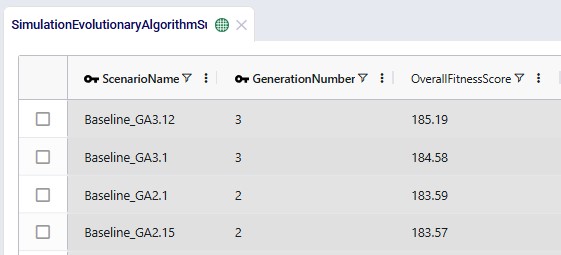

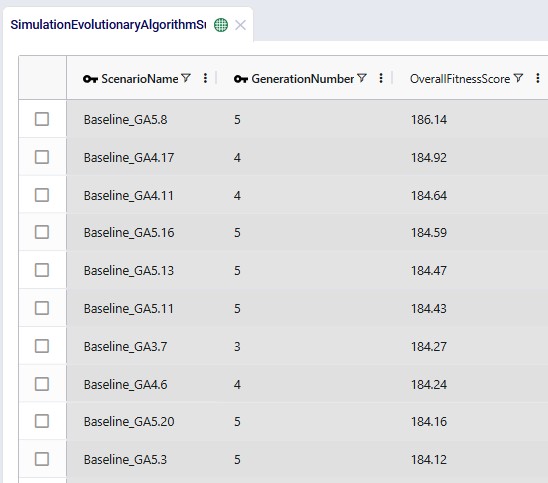

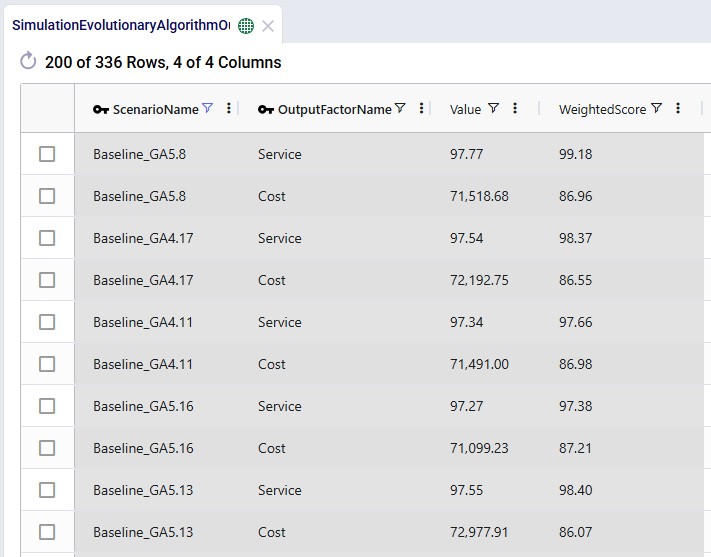

In Cosmic Frog, the Overall Fitness Scores of all scenarios (=chromosomes) run can be assessed in the Simulation Evolutionary Algorithm Summary output table after a Dendro run has completed. Scores of individual output factors can be reviewed in the Simulation Evolutionary Algorithm Output Factor Report output table:

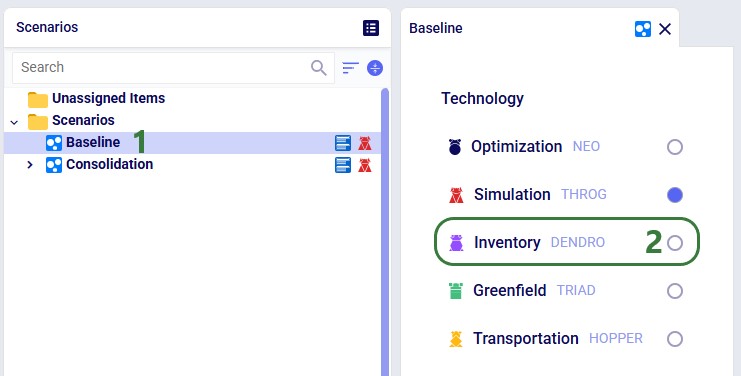

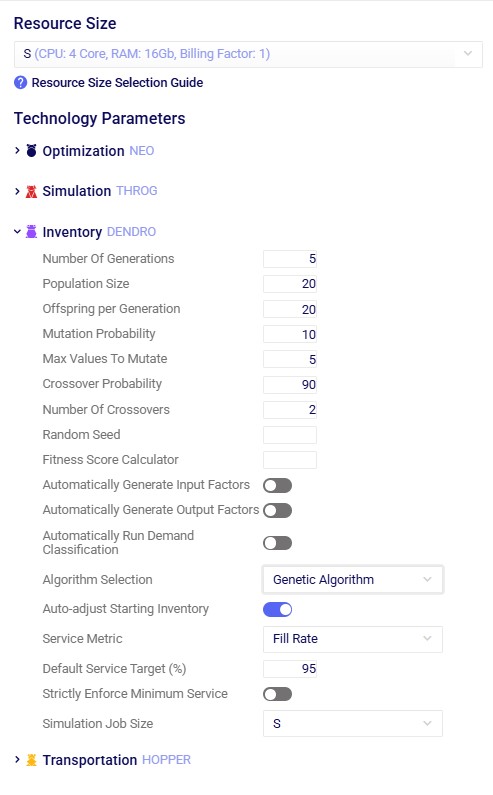

The Automatically Generate Output Factors option automatically creates standard output factor configurations based on your target service levels and baseline simulation results. This option can be set in the Dendro section of the Technology Parameters.

When to use:

What it creates:

Step 1: Run baseline simulation

Execute your current inventory policies to measure actual cost.

Step 2: Establish boundaries

Baseline Cost: $1,800,000

Left Boundary (best): 75% of baseline = $1,350,000

Right Boundary (worst): 110% of baseline = $1,980,000

Step 3: Create utility curve

UtilityCurve: [1350000,100],[1980000,0]

Interpretation:

Step 4: Set weight

OutputFactorWeight: 1 (50% when combined with service factor)

Step 1: Use configured service targets

DefaultTargetService: 95%

LowService: 85%

Step 2: Create multi-point utility curve

UtilityCurve: [0,0],[85,10],[95,99],[100,100]

Interpretation:

Design rationale:

Step 3: Set weight

OutputFactorWeight: 1 (50% when combined with cost factor)
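The two auto-generated curves follow directly from the baseline cost and the configured service targets. The sketch below rebuilds them in Python; the function names are hypothetical, and the 75% / 110% boundaries and the 10 / 99 service scores are taken from the steps described here rather than from Dendro's source code.

```python
def auto_cost_curve(baseline_cost, best_pct=0.75, worst_pct=1.10):
    """Two-point cost curve: 100 points at 75% of baseline, 0 points at 110%."""
    best = round(baseline_cost * best_pct)
    worst = round(baseline_cost * worst_pct)
    return f"[{best},100],[{worst},0]"


def auto_service_curve(target_service, low_service):
    """Four-point service curve with a steep climb between the low and target levels."""
    return f"[0,0],[{low_service},10],[{target_service},99],[100,100]"


print(auto_cost_curve(1_800_000))  # [1350000,100],[1980000,0]
print(auto_service_curve(95, 85))  # [0,0],[85,10],[95,99],[100,100]
```

Because both factors receive a weight of 1, each contributes half of the overall fitness score.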

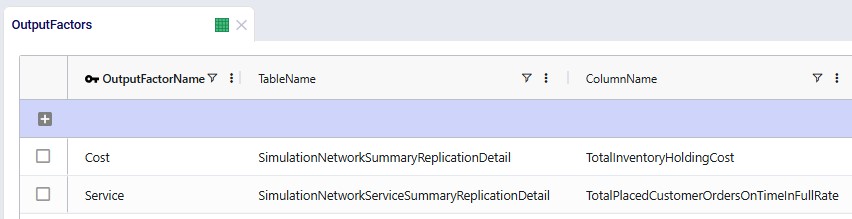

OutputFactors Table:

Row 1:

OutputFactorName: Cost

TableName: SimulationNetworkSummaryReplicationDetail

ColumnName: TotalSupplyChainCost

Filter: NULL

UtilityCurve: [1350000,100],[1980000,0]

OutputFactorWeight: 1

Status: Include

Row 2:

OutputFactorName: Service

TableName: SimulationNetworkServiceSummaryReplicationDetail

ColumnName: TotalPlacedCustomerOrderQuantityOnTimeInFullRate

Filter: NULL

UtilityCurve: [0,0],[85,10],[95,99],[100,100]

OutputFactorWeight: 1

Status: Include

Optimization behavior: Dendro will seek policies that:

Goal: Find the lowest-cost inventory policies without service constraints.

Setup:

OutputFactorName: TotalCost

TableName: SimulationNetworkSummaryReplicationDetail

ColumnName: TotalSupplyChainCost

UtilityCurve: [1000000,100],[3000000,0]

OutputFactorWeight: 1.0

Result: Dendro minimizes cost aggressively; service may suffer.

When to use:

Goal: Maximize service while preventing excessive cost increases.

Setup:

Factor 1 - Service (Primary):

OutputFactorName: Service

TableName: SimulationNetworkSummaryReplicationDetail

ColumnName: OnTimeInFullRate

UtilityCurve: [90,0],[95,50],[98,99],[100,100]

OutputFactorWeight: 0.70

Factor 2 - Cost (Constraint):

OutputFactorName: CostLimit

TableName: SimulationNetworkSummaryReplicationDetail

ColumnName: TotalSupplyChainCost

UtilityCurve: [1500000,100],[2000000,50],[2500000,0]

OutputFactorWeight: 0.30

Result: Dendro prioritizes service but penalizes solutions exceeding cost thresholds.

When to use:

Goal: Optimize multiple objectives with balanced priorities.

Setup:

Factor 1 - Holding Cost:

OutputFactorName: HoldingCost

TableName: SimulationNetworkSummaryReplicationDetail

ColumnName: InventoryHoldingCost

UtilityCurve: [200000,100],[500000,0]

OutputFactorWeight: 0.25

Factor 2 - Transport Cost:

OutputFactorName: TransportCost

TableName: SimulationNetworkSummaryReplicationDetail

ColumnName: TransportationCost

UtilityCurve: [300000,100],[600000,0]

OutputFactorWeight: 0.25

Factor 3 - Service Level:

OutputFactorName: Service

TableName: SimulationNetworkSummaryReplicationDetail

ColumnName: FillRate

UtilityCurve: [85,0],[95,99],[100,100]

OutputFactorWeight: 0.30

Factor 4 - Inventory Turns:

OutputFactorName: InventoryTurns

TableName: SimulationNetworkSummaryReplicationDetail

ColumnName: InventoryTurns

UtilityCurve: [2,0],[6,50],[12,100]

OutputFactorWeight: 0.20

Result: Dendro finds well-rounded solutions balancing cost, service, and efficiency.

When to use:

Goal: Different service targets for different regions.

Setup:

Factor 1 - Premium Region Service:

OutputFactorName: Premium_Service

TableName: SimulationNetworkSummaryReplicationDetail

ColumnName: FillRate

Filter: Region='Premium'

UtilityCurve: [92,0],[98,99],[100,100]

OutputFactorWeight: 0.40

Factor 2 - Standard Region Service:

OutputFactorName: Standard_Service

TableName: SimulationNetworkSummaryReplicationDetail

ColumnName: FillRate

Filter: Region='Standard'

UtilityCurve: [85,0],[92,99],[100,100]

OutputFactorWeight: 0.30

Factor 3 - Overall Cost:

OutputFactorName: TotalCost

TableName: SimulationNetworkSummaryReplicationDetail

ColumnName: TotalSupplyChainCost

UtilityCurve: [2000000,100],[3000000,0]

OutputFactorWeight: 0.30

Result: Premium regions get higher service targets; standard regions have lower thresholds.

When to use:

Some output tables contain multiple rows per scenario (e.g., one row per facility or product). Dendro combines these into a single metric value by using a straight average calculation. More options will be added in future releases.

How it works: Calculates the arithmetic mean of all matching rows.
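To try this averaging outside Cosmic Frog, the sketch below loads a few hypothetical rows into an in-memory SQLite database and averages one column for a single scenario with a filter applied. The simplified table layout and the `FacilityName` column are assumptions for illustration; a real simulation output table has many more columns.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE SimulationFacilityCostSummaryReplicationDetail (
        ScenarioName TEXT,
        FacilityName TEXT,   -- hypothetical column, for readability only
        FacilityType TEXT,
        InventoryHoldingCost REAL
    )
    """
)
rows = [
    ("Gen3_Chr5", "Facility A", "DC", 80_000),
    ("Gen3_Chr5", "Facility B", "DC", 120_000),
    ("Gen3_Chr5", "Facility C", "DC", 100_000),
    ("Gen3_Chr5", "Plant X", "Plant", 500_000),  # excluded by the filter
    ("Baseline", "Facility A", "DC", 95_000),    # excluded by the scenario name
]
conn.executemany(
    "INSERT INTO SimulationFacilityCostSummaryReplicationDetail VALUES (?, ?, ?, ?)",
    rows,
)

(average_cost,) = conn.execute(
    """
    SELECT AVG(InventoryHoldingCost)
    FROM SimulationFacilityCostSummaryReplicationDetail
    WHERE ScenarioName = ? AND FacilityType = 'DC'
    """,
    ("Gen3_Chr5",),
).fetchone()

print(average_cost)  # 100000.0
```

Every matching row counts equally, so a small facility influences the metric as much as a large one.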

SQL equivalent:

SELECT AVG(ColumnName)

FROM TableName

WHERE ScenarioName = 'Gen3_Chr5' AND [Filter]

Example:

Facility A holding cost: $80,000

Facility B holding cost: $120,000

Facility C holding cost: $100,000

SimpleAverage: ($80,000 + $120,000 + $100,000) / 3 = $100,000

Best for:

Do not use:

[0,0],[10,5],[20,15],[30,28],[40,45],[50,65],[60,82],[70,95],[80,98],[90,99],[100,100]

Over-complicated curves are hard to understand and maintain.

Do use:

[0,0],[85,10],[95,99],[100,100]

Simple curves with clear inflection points at meaningful thresholds.

Principle: Use the minimum number of points to capture your business logic.

Cost factors (minimize):

[best_cost, 100], [worst_acceptable_cost, 0]

Lower values → higher scores.

Service factors (maximize):

[minimum_acceptable, 0], [target, 99], [perfect, 100]

Higher values → higher scores.

Efficiency metrics (target range):

[too_low, 0], [optimal, 100], [too_high, 0]

Sweet spot in the middle.

Too narrow:

[1900000,100],[2000000,0]

Only a 5% range - most solutions will score 0 or 100, providing little differentiation.

Too wide:

[500000,100],[5000000,0]

Unrealistic extremes - actual values may all cluster in a small region, reducing curve sensitivity.

Just right:

[1500000,100],[2500000,0]

Reasonable range around baseline (±25%) allows meaningful differentiation.

Avoid extreme imbalances:

Cost: Weight = 0.95

Service: Weight = 0.05

Service becomes nearly irrelevant - Dendro will slash costs with little regard for service.

Prefer balanced or clearly justified ratios:

Cost: Weight = 0.60

Service: Weight = 0.40

Both factors matter; cost slightly more important.

Or equal weighting for exploration:

Cost: Weight = 0.50

Service: Weight = 0.50

Neutral stance - let Dendro find the natural trade-off.

Workflow:

Benefits:

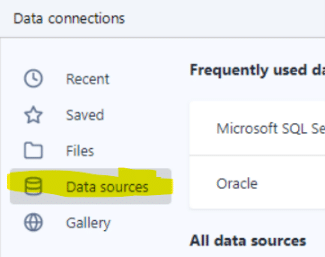

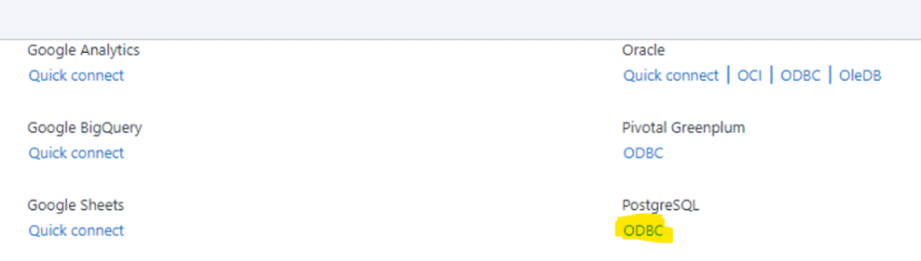

Before running full optimization:

SELECT * FROM SimulationFacilityCostSummaryReplicationDetail

WHERE ScenarioName = 'Baseline' AND FacilityType = 'DC'

[100,0],[200,100] ✓ Valid

[100,0] [200,100] ✗ Invalid (missing comma between pairs)

Simulate scoring: Manually calculate expected fitness for baseline

After the Dendro runs have all completed, review the Overall Fitness Score values in the Simulation Evolutionary Algorithm Summary output table and the scores of the individual output factors in the Simulation Evolutionary Algorithm Output Factor Report output table.

Healthy optimization shows:

Warning signs:

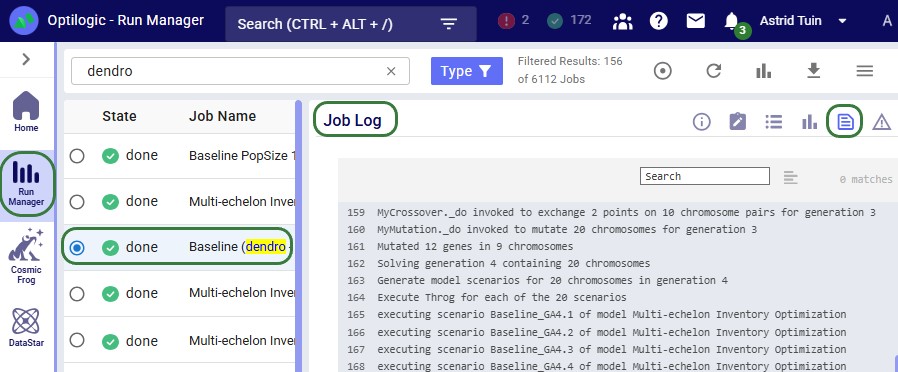

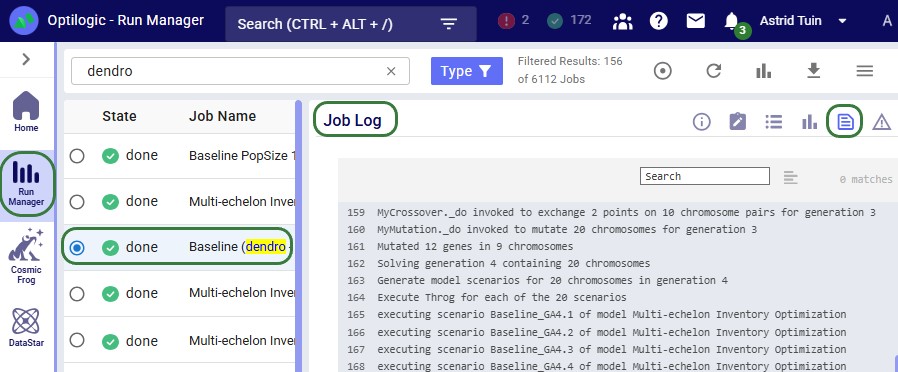

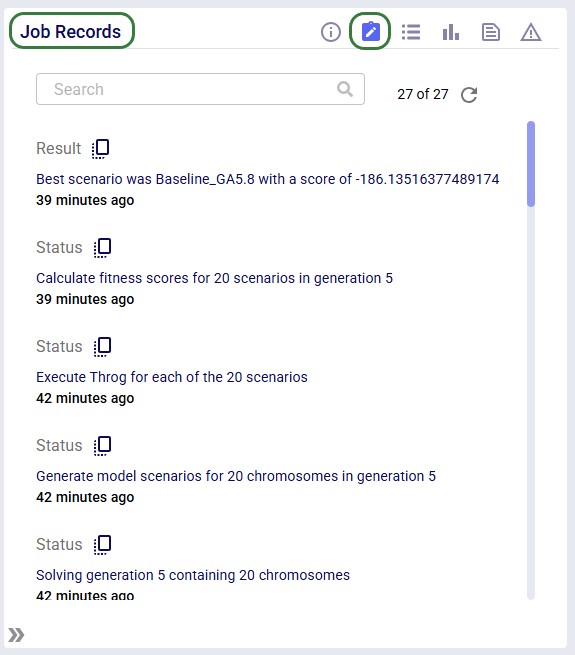

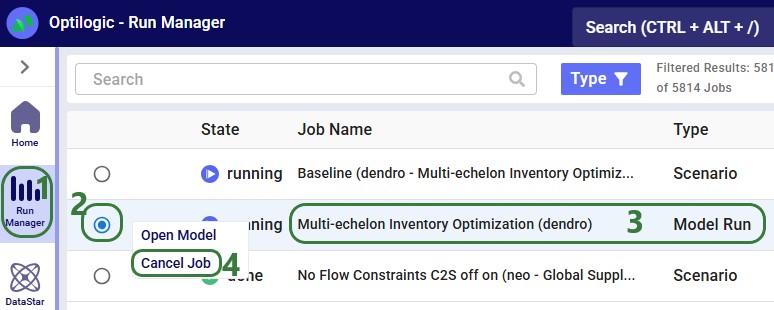

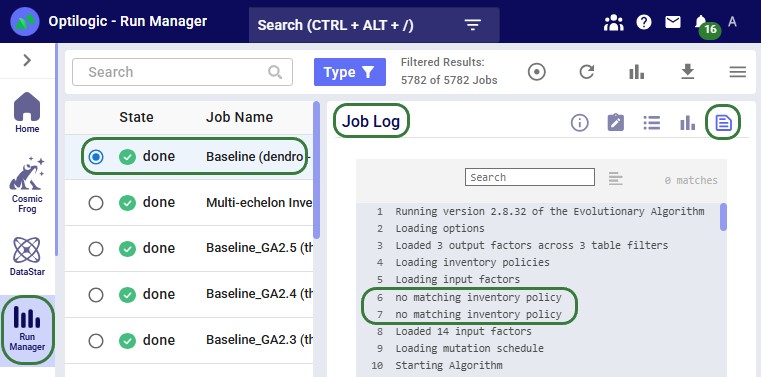

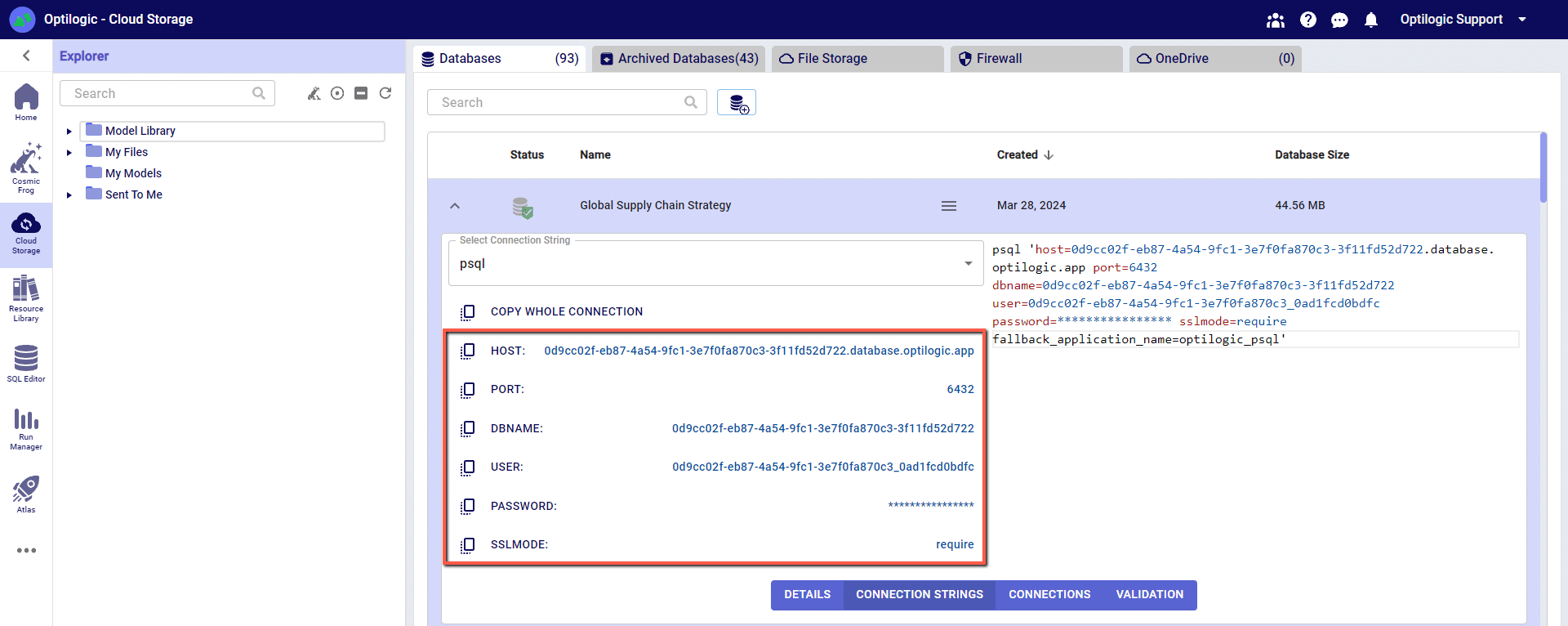

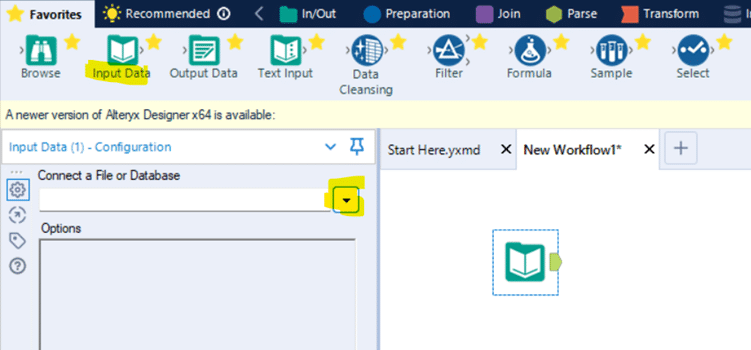

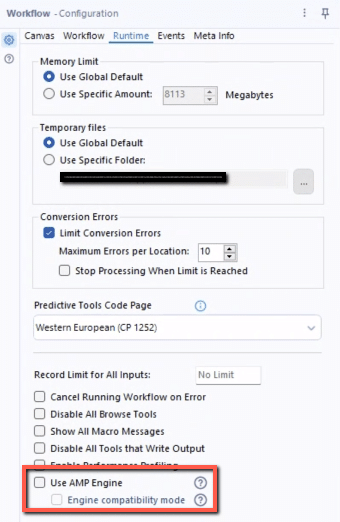

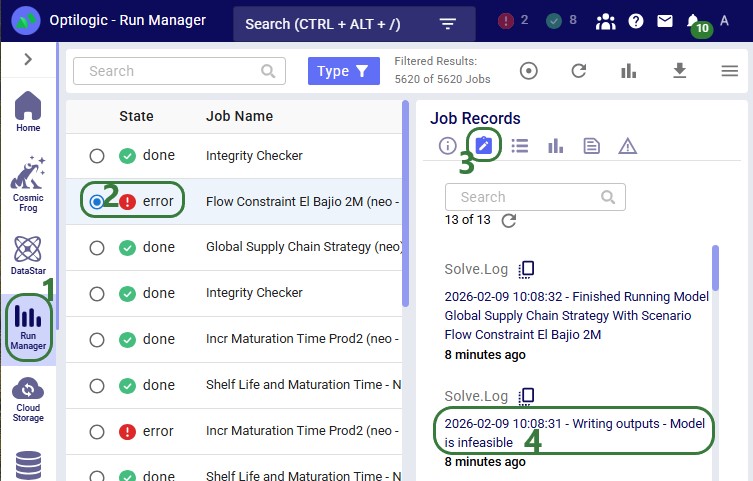

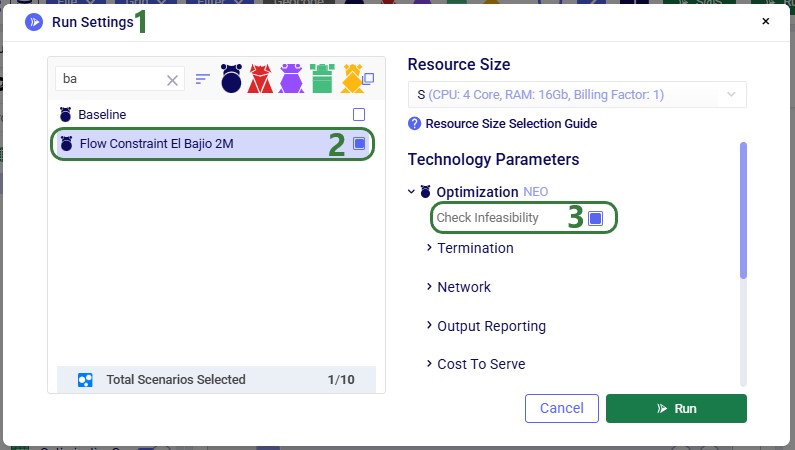

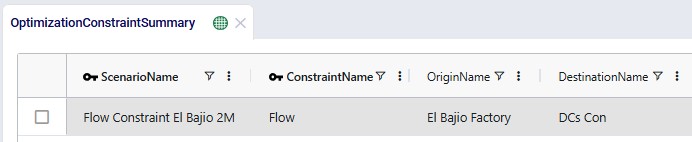

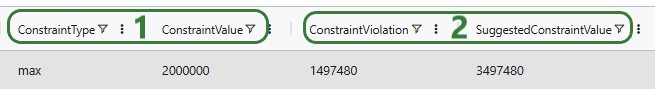

The symptoms of the problems described in this section can generally be seen either in the Cosmic Frog output tables, typically the Simulation Evolutionary Algorithm Summary and/or the Simulation Evolutionary Algorithm Output Factor Report output tables, or in the logs of the base scenario that Dendro was run on. These logs can be reviewed using the Run Manager application. The following screenshot shows part of a Job Log of a Dendro run, which is the most detailed log available:

Besides the Job Log, the Job Records log (second icon in the row of icons at the top right of the logs) can also be helpful; it contains status messages of a run at a more aggregated level. If a run ends in an error, the Job Error Log may provide useful troubleshooting information. This log is accessed by clicking on the last icon in the row of icons at the top right of the logs.

Symptom: Fitness scores are nearly identical across different policy combinations.

Possible causes:

Cause 1: Utility curves too flat

UtilityCurve: [0,100],[10000000,0]

Range is so wide that actual values ($1.5M-$2.5M) all map to ~75-85 points.

Solution: Narrow the utility curve to the realistic range of values.
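A quick way to check whether a curve differentiates solutions is to score a few realistic values and look at the spread. The sketch below does this for two-point cost curves; the helper names are hypothetical, and the three sample costs are illustrative rather than taken from a real run.

```python
def two_point_score(best, worst, value):
    """Score on a two-point cost curve [best,100],[worst,0], clamped to 0-100."""
    fraction = (value - best) / (worst - best)
    return max(0.0, min(100.0, 100.0 * (1.0 - fraction)))


def score_spread(best, worst, values):
    """Difference between the highest and lowest score across the given values."""
    scores = [two_point_score(best, worst, v) for v in values]
    return max(scores) - min(scores)


observed_costs = [1_500_000, 2_000_000, 2_500_000]

# Curve [0,100],[10000000,0]: scores fall in a narrow band of about 10 points.
print(round(score_spread(0, 10_000_000, observed_costs), 1))  # 10.0

# Curve [1500000,100],[2500000,0]: the same costs now span the full 0-100 range.
print(round(score_spread(1_500_000, 2_500_000, observed_costs), 1))  # 100.0
```

A spread of only a few points means the factor barely influences which chromosome wins, regardless of its weight.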

Cause 2: Weights too imbalanced

Dominant factor: Weight = 0.99

Other factors: Weight = 0.01 (total)

One factor overwhelms all others, hiding differentiation.

Solution: Balance weights more evenly (unless extreme prioritization is truly intended).

Cause 3: Input factors do not affect output metrics - optimizing parameters that do not impact measured outcomes.

Solution: Verify that input factor changes influence output metrics via simulation.

Symptom: Cost decreases but service stays low or drops.

Possible causes:

Cause 1: Service weight too low

Cost: Weight = 0.90

Service: Weight = 0.10

Dendro focuses almost entirely on cost reduction.

Solution: Increase service weight to at least 0.30-0.50.

Cause 2: Service utility curve rewards poor performance

UtilityCurve: [80,50],[100,100]

80% service still gets 50 points - not enough penalty.

Solution: Use a steeper curve with lower minimum:

UtilityCurve: [80,0],[95,99],[100,100]

Cause 3: Conflicting objectives

Impossible to improve service without exceeding cost limits defined in utility curves.

Solution: Relax cost constraints or adjust service targets to achievable levels.

Symptom: Frequent rescaling events throughout optimization.

Possible cause: Utility curve bounds are too narrow; Dendro keeps finding values outside the range.

Solution:

Symptom: Error loading output factors or calculating fitness.

Possible causes:

Cause 1: Wrong table name

TableName: NetworkSummary

Actual table is SimulationNetworkSummaryReplicationDetail.

Solution: Use exact table name from database schema.

Cause 2: Wrong column name

ColumnName: TotalCost

Actual column is TotalSupplyChainCost.

Solution: Verify column names match simulation output schema exactly.

Cause 3: Filter returns no rows

Filter: Region='West Coast'

No rows in output table have Region='West Coast'.

Solution: Test filter with SQL query against actual simulation output.

Symptom: "UtilityCurve is None" or parsing failures.

Common syntax errors:

❌ Missing commas between pairs:

[100,0][200,100]

✓ Correct:

[100,0],[200,100]

❌ Spaces inside brackets:

[ 100 , 0 ],[ 200 , 100 ]

✓ Correct (spaces are removed automatically but avoid for clarity):

[100,0],[200,100]

❌ Invalid numbers:

[1,000,0],[2,000,100]

Commas in numbers are invalid.

✓ Correct:

[1000,0],[2000,100]

❌ Single point:

[100,0]

Need at least two points for a curve.

✓ Correct:

[100,0],[200,100]
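The syntax rules above can be checked before a run with a small validator. This is a sketch under stated assumptions: the function names and the regular expression are hypothetical and only mirror the rules listed here (comma-separated pairs, plain numbers, at least two points, whitespace ignored); Dendro's own parser may accept or reject other inputs.

```python
import re

NUMBER = r"-?\d+(?:\.\d+)?"
PAIR = rf"\[{NUMBER},{NUMBER}\]"
# At least two pairs, separated by commas.
CURVE_PATTERN = re.compile(rf"{PAIR}(?:,{PAIR})+")


def validate_utility_curve(text):
    """Return the parsed (value, score) pairs or raise ValueError."""
    compact = re.sub(r"\s+", "", text or "")  # spaces are ignored
    if not CURVE_PATTERN.fullmatch(compact):
        raise ValueError(f"Invalid utility curve: {text!r}")
    return [
        tuple(float(number) for number in pair.split(","))
        for pair in re.findall(r"\[([^\]]+)\]", compact)
    ]


def is_valid_utility_curve(text):
    """Boolean convenience wrapper around validate_utility_curve."""
    try:
        validate_utility_curve(text)
        return True
    except ValueError:
        return False


for candidate in ["[100,0],[200,100]", "[100,0][200,100]", "[1,000,0],[2,000,100]", "[100,0]"]:
    print(candidate, is_valid_utility_curve(candidate))
```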

Optimize performance across different time periods:

Factor 1 - Peak Season Service:

Filter: Month IN (11,12)

Weight: 0.40

Factor 2 - Off-Season Service:

Filter: Month NOT IN (11,12)

Weight: 0.20

Factor 3 - Annual Cost:

Filter: (empty - all months)

Weight: 0.40

Result: Higher service standards during peak season, relaxed otherwise.

Different objectives for different product categories:

Factor 1 - A-Item Service (High priority):

Filter: ProductCategory='A'

UtilityCurve: [95,0],[98,99],[100,100]

Weight: 0.35

Factor 2 - B-Item Service (Medium priority):

Filter: ProductCategory='B'

UtilityCurve: [90,0],[95,99],[100,100]

Weight: 0.25

Factor 3 - C-Item Service (Low priority):

Filter: ProductCategory='C'

UtilityCurve: [85,0],[90,99],[100,100]

Weight: 0.15

Factor 4 - Total Cost (All categories):

Filter: (empty)

Weight: 0.25

Result: ABC-based service differentiation with balanced cost control.

Output factors define success in Dendro optimization:

✓ What to measure: Metrics from simulation output tables

✓ How good is good: Utility curves mapping values to quality scores

✓ How important: Weights defining relative priorities

Key takeaways:

Well-configured output factors ensure Dendro optimizes for what truly matters to your business - whether that is cost minimization, service maximization, or balanced multi-objective optimization.

You may find these links helpful, some of which have already been mentioned above:

Please do not hesitate to contact the Optilogic Support team on support@optilogic.com for any questions or feedback.

Dendro, Optilogic’s simulation-optimization engine, uses a sophisticated Genetic Algorithm (GA) to, for example, optimize inventory policies across your supply chain network. This guide explains how the algorithm works in business-friendly terms, helping you understand what happens when you run Dendro and how to get the best results.

The Big Picture: Dendro's Genetic Algorithm explores thousands of different inventory policy combinations, simulates each one to see how it performs, and gradually evolves toward the best possible solution - much like natural evolution produces better-adapted organisms over time.

Recommended reading prior to diving into this guide: Getting Started with Dendro, which is a higher-level overview of how Dendro works.

Genetic Algorithms are inspired by biological evolution. Just as species evolve to become better adapted to their environment through:

Dendro evolves inventory policies to become better adapted to your business objectives through:

Traditional optimization methods struggle with inventory networks due to:

Genetic Algorithms excel at this type of problem because they:

Dendro's implementation uses three fundamental elements: chromosomes, genes, and fitness score.

A chromosome represents one complete set of inventory policies for your entire supply chain.

Example Chromosome:

Each chromosome is essentially a complete "proposal" for how to manage inventory across your network.

Each gene within a chromosome represents the policy for one facility-product combination.

Example Gene:

Genes can mutate (change their values) to explore different policy settings.

The fitness score measures how good a chromosome is - combining costs, service levels, and other objectives.

Higher scores are better - Dendro displays scores where better solutions have higher values.

A fitness score might combine:

Whenever the text below refers to an option, this is a model run option that can be set in the Dendro section of the Technology Parameters on the right-hand side of the Run Settings screen that comes up after a user clicks on the green Run button in Cosmic Frog.

What happens: Dendro creates the initial population of chromosomes (policy combinations).

Implementation details:

Business perspective: Think of this as Dendro assembling a diverse team of proposals. The first proposal is "keep doing what we are doing", while the others explore variations like "increase safety stock by 10%", "reduce order quantities", etc.

Each generation follows the same four-step cycle:

What happens: Each chromosome is evaluated by:

Implementation details:

Business perspective: Dendro tests each proposal by running it through a realistic simulation of your supply chain over time. It is like running a pilot program for each policy combination to see what would actually happen - but virtually, so you can test thousands of options without risk.

Typical duration:

What happens: Dendro ranks all chromosomes by fitness score and selects the best ones to continue to the next generation.

Implementation details:

Business perspective: After testing all proposals, Dendro keeps the most promising ones and discards the poor performers. This is like a review committee keeping the best ideas and dropping the ones that do not work well.
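As a rough illustration of ranking and selection, the sketch below sorts chromosomes by fitness score and keeps the top few. The function name, the chromosome names, and the number of survivors are hypothetical; how many chromosomes Dendro actually carries forward depends on its run options.

```python
def select_survivors(population, keep):
    """Rank (name, fitness) pairs from best to worst and keep the top `keep`."""
    ranked = sorted(population, key=lambda item: item[1], reverse=True)
    return ranked[:keep]


generation = [
    ("Gen5_Chr1", 71.2),
    ("Gen5_Chr2", 82.0),
    ("Gen5_Chr3", 55.3),
    ("Gen5_Chr4", 78.9),
]

for name, score in select_survivors(generation, keep=2):
    print(name, score)
# Gen5_Chr2 82.0
# Gen5_Chr4 78.9
```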

Example:

Generation 5 Results (20 chromosomes evaluated):

What happens: Dendro creates new chromosomes by combining parts of two successful parent chromosomes.

Implementation details:

Business perspective: Dendro creates new proposals by combining the best parts of successful proposals. If one policy set works well for East Coast facilities and another works well for high-volume products, crossover might create a policy that combines both successful approaches.

Example with 1-Point Crossover:

Parent 1: [Gene_A1, Gene_A2, Gene_A3, Gene_A4, Gene_A5]

Parent 2: [Gene_B1, Gene_B2, Gene_B3, Gene_B4, Gene_B5]

↑ Crossover point

Offspring 1: [Gene_A1, Gene_A2, Gene_A3 | Gene_B4, Gene_B5]

Offspring 2: [Gene_B1, Gene_B2, Gene_B3 | Gene_A4, Gene_A5]
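The same exchange can be written as a short Python function. This is a generic one-point crossover sketch with hypothetical names, not Dendro's internal code; the crossover point is passed in explicitly here, whereas a genetic algorithm would normally pick it at random.

```python
def one_point_crossover(parent1, parent2, point):
    """Swap the gene tails of two equal-length parents after position `point`."""
    if len(parent1) != len(parent2):
        raise ValueError("Parents must have the same number of genes")
    if not 0 < point < len(parent1):
        raise ValueError("Crossover point must fall inside the chromosome")
    offspring1 = parent1[:point] + parent2[point:]
    offspring2 = parent2[:point] + parent1[point:]
    return offspring1, offspring2


parent1 = ["Gene_A1", "Gene_A2", "Gene_A3", "Gene_A4", "Gene_A5"]
parent2 = ["Gene_B1", "Gene_B2", "Gene_B3", "Gene_B4", "Gene_B5"]

offspring1, offspring2 = one_point_crossover(parent1, parent2, point=3)
print(offspring1)  # ['Gene_A1', 'Gene_A2', 'Gene_A3', 'Gene_B4', 'Gene_B5']
print(offspring2)  # ['Gene_B1', 'Gene_B2', 'Gene_B3', 'Gene_A4', 'Gene_A5']
```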

When crossover is most effective:

What happens: Dendro randomly adjusts policy values in the new chromosomes to explore variations.

Implementation details:

Business perspective: Dendro introduces controlled randomness to explore new options. Even the best current policies might not be optimal, so mutation ensures the algorithm does not get stuck. It is like saying "this policy works well at 500 units, but let's try 525 and 475 too".

Example mutations:

Original Gene: Reorder Point = 500, Order-Up-To = 1000

Mutated Gene: Reorder Point = 525, Order-Up-To = 1050
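One way to picture mutation is a small random percentage change applied to a gene's policy values. The sketch below is an assumption-laden illustration: the function name, the ±10% limit, and the choice to scale both values by the same factor (as in the 500 → 525, 1000 → 1050 example) are not taken from Dendro's implementation.

```python
import random


def mutate_gene(gene, rng, probability=1.0, max_change=0.10):
    """Return a copy of `gene` with all values scaled by one random factor.

    gene: dict mapping policy parameter name to its numeric value.
    probability: chance that this gene is mutated at all.
    max_change: largest relative change, e.g. 0.10 for plus or minus 10%.
    """
    if rng.random() >= probability:
        return dict(gene)  # gene left untouched
    factor = 1.0 + rng.uniform(-max_change, max_change)
    return {name: round(value * factor) for name, value in gene.items()}


rng = random.Random(42)  # fixed seed so the sketch is repeatable
original = {"Reorder Point": 500, "Order-Up-To": 1000}
print(mutate_gene(original, rng))
```

With `probability=0.0` the gene is always returned unchanged; with `probability=1.0` it is always mutated.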

Mutation strategies:

Input factors define what Dendro can change. They are the "variables" in your optimization problem. They can be specified in the Input Factors input table in your Cosmic Frog model.

Common input factors:

How they work in chromosomes: Each input factor becomes a position in the chromosome. Dendro explores different values for each position.

Example: If you have 50 facility-product combinations to optimize, and each has two policy values (reorder point and order-up-to), your chromosome has 50 genes, each with 2 values = 100 total parameters being optimized.

Input factors are covered in detail in the Dendro: Input Factors Guide.

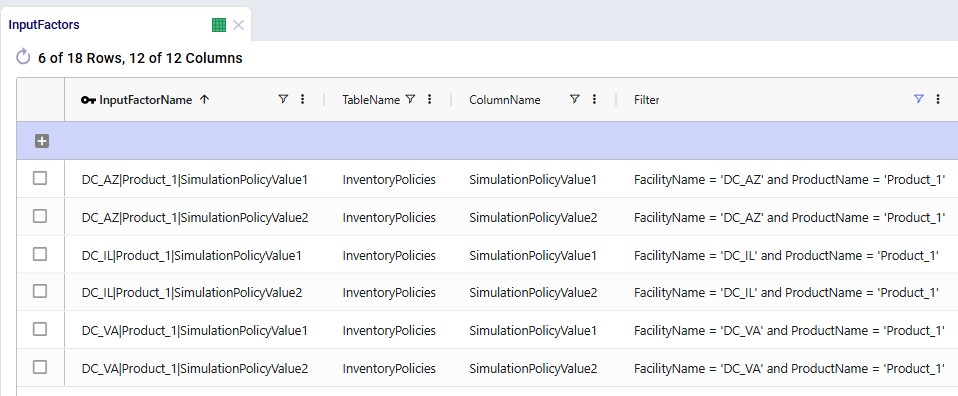

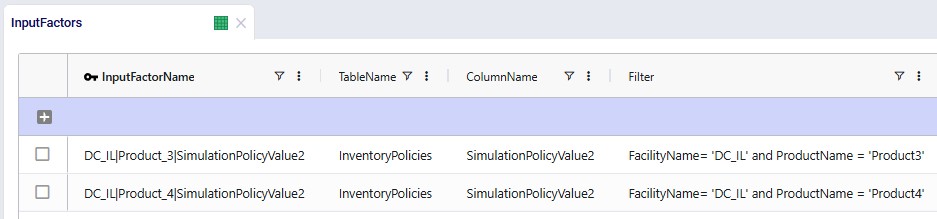

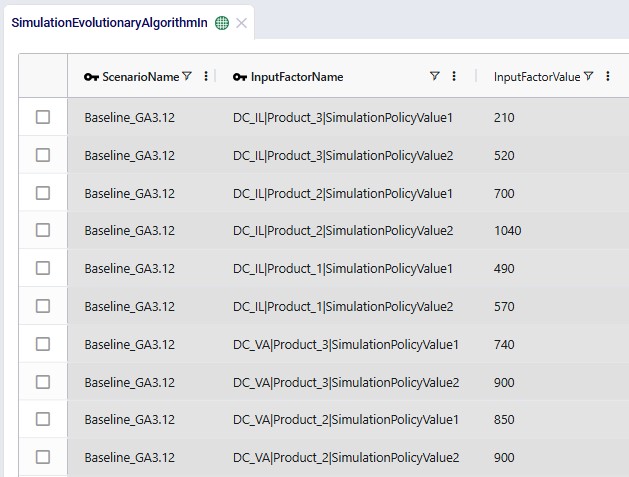

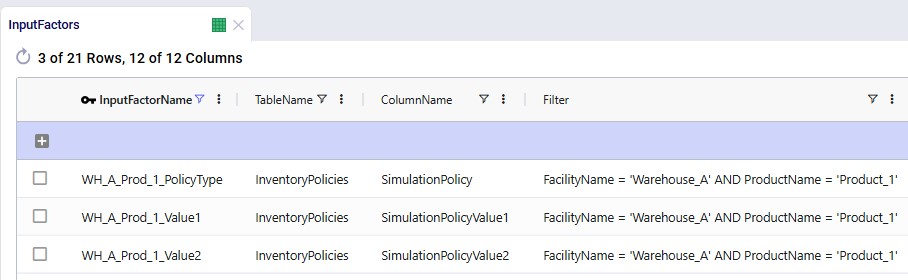

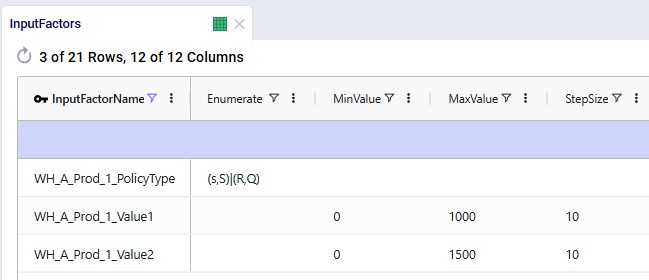

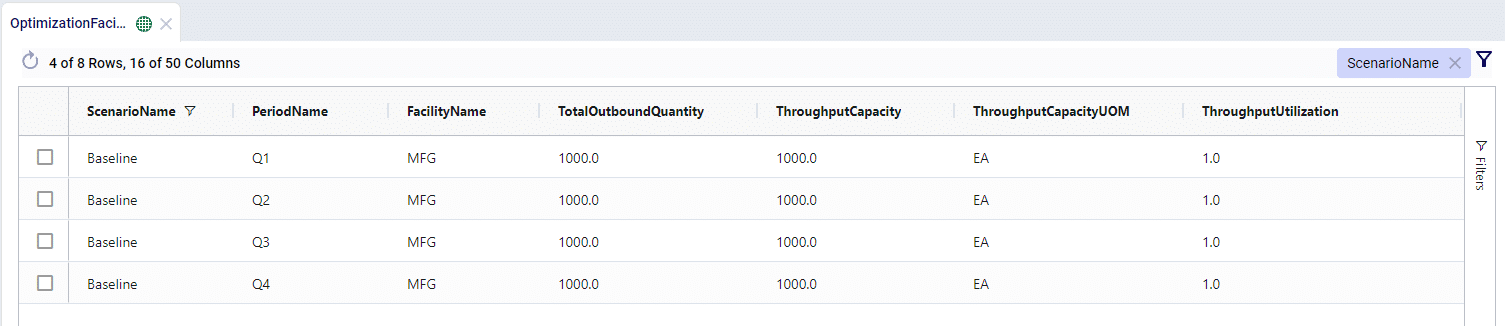

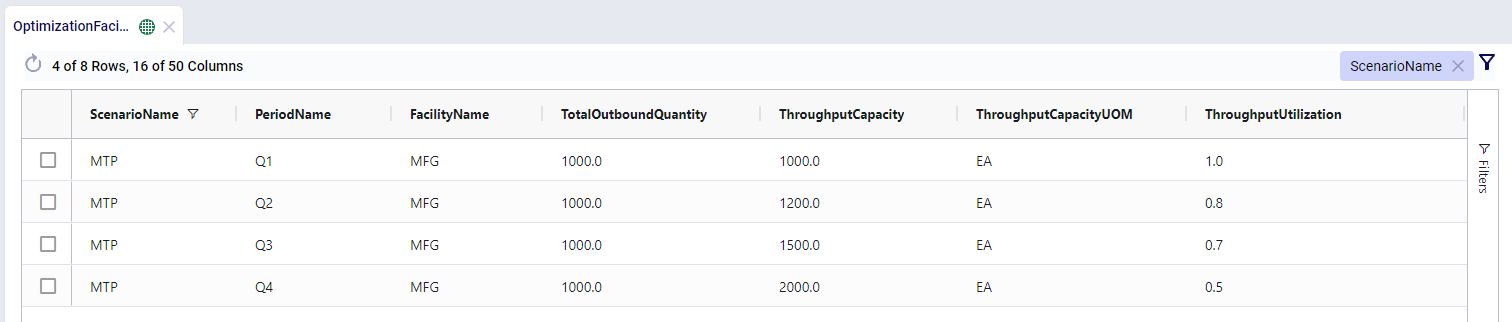

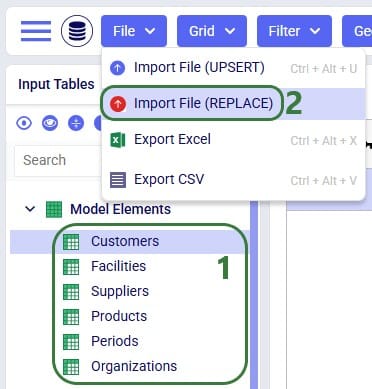

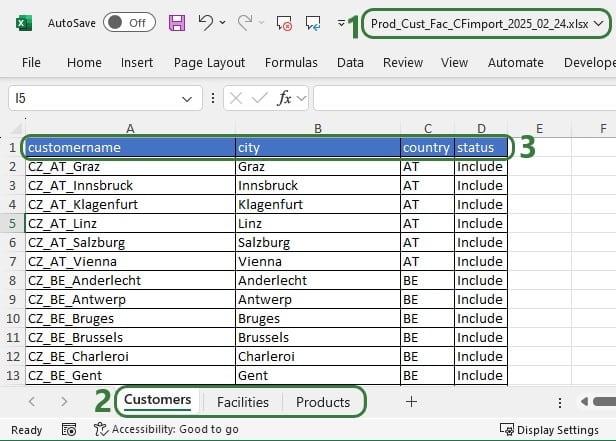

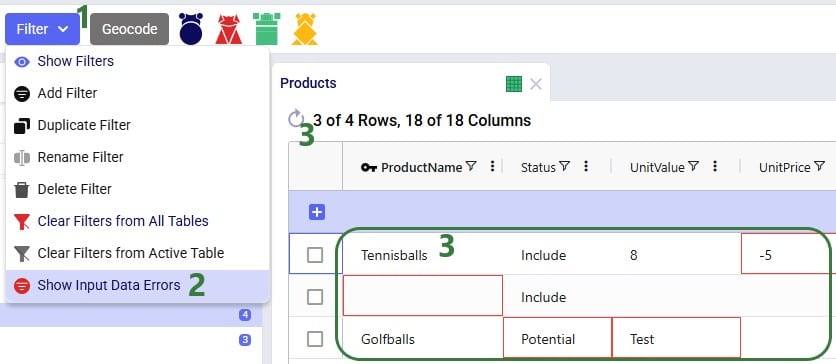

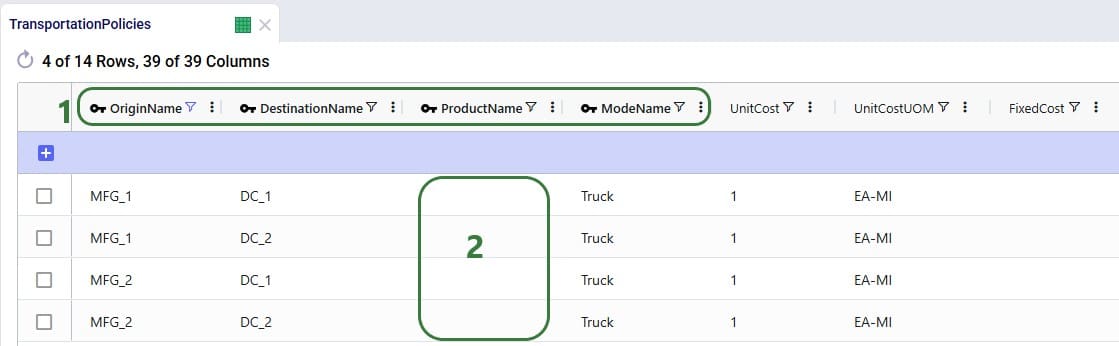

The following 2 screenshots show 6 records in an Input Factors input table in Cosmic Frog. Both simulation policy values for Product_1 at 3 different DCs are specified in these records:

Output factors define what Dendro tries to optimize. They are the "objectives" in your optimization problem. Output factors can be set in the Output Factors input table.

Common output factors:

How they combine - each output factor has a:

The Dendro: Output Factors Guide covers output factors in more detail.

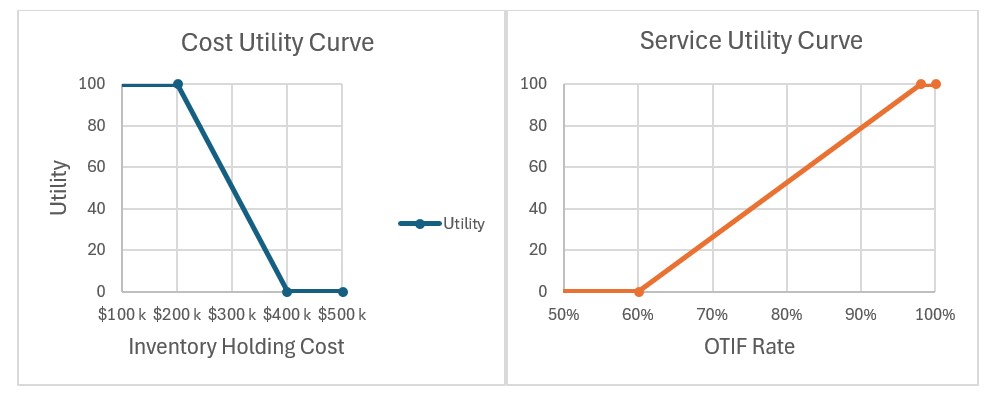

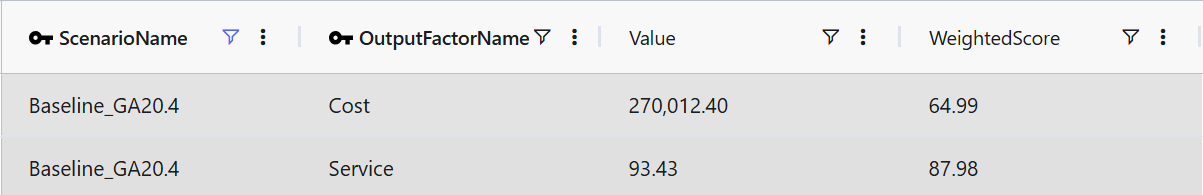

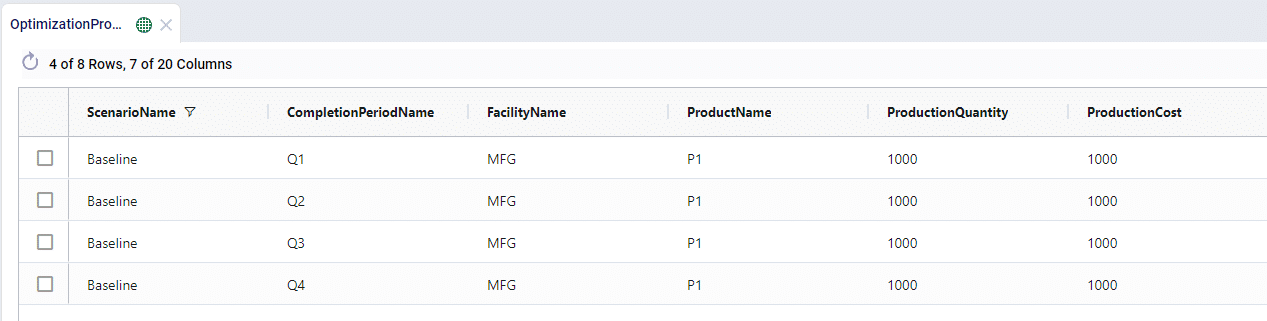

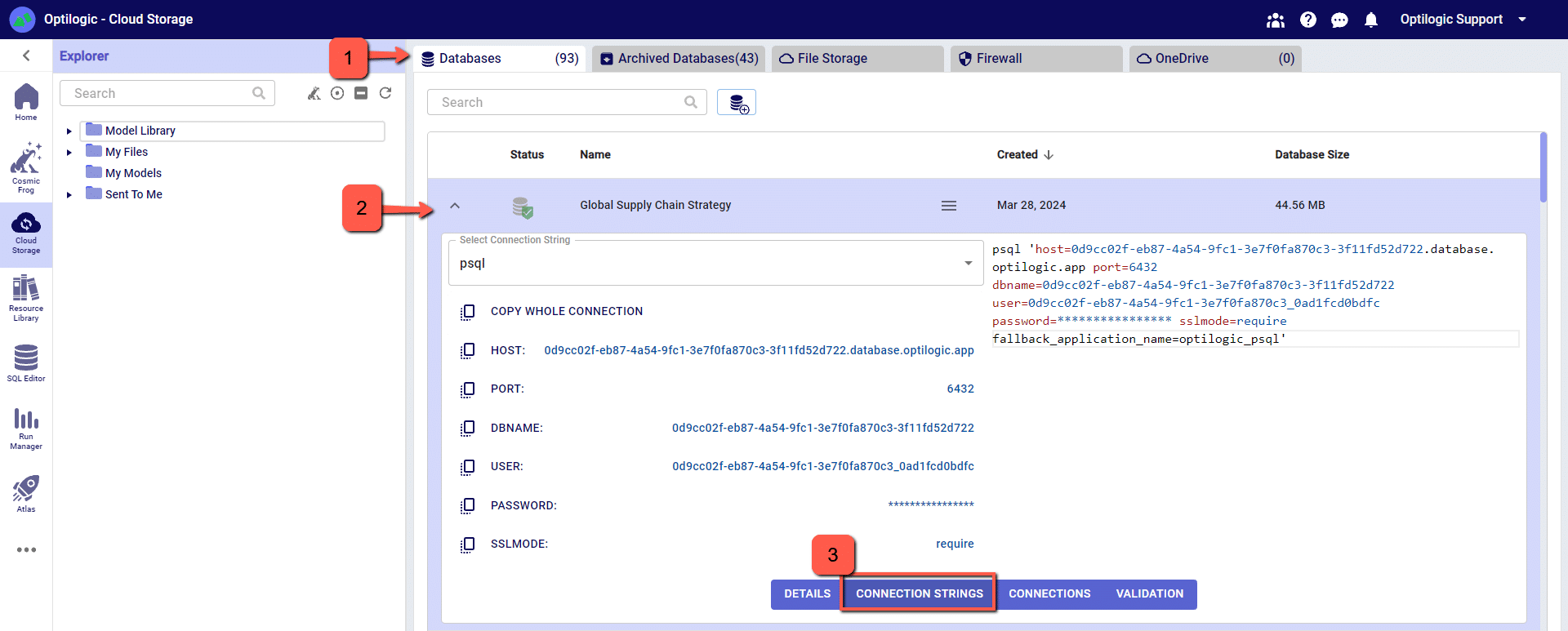

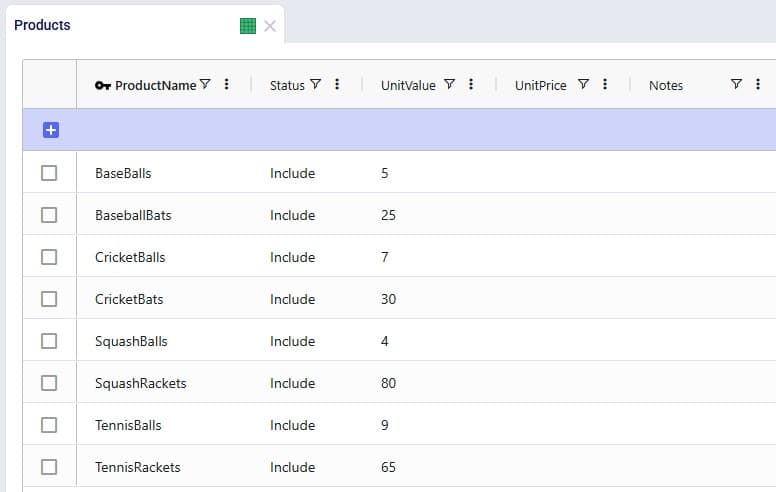

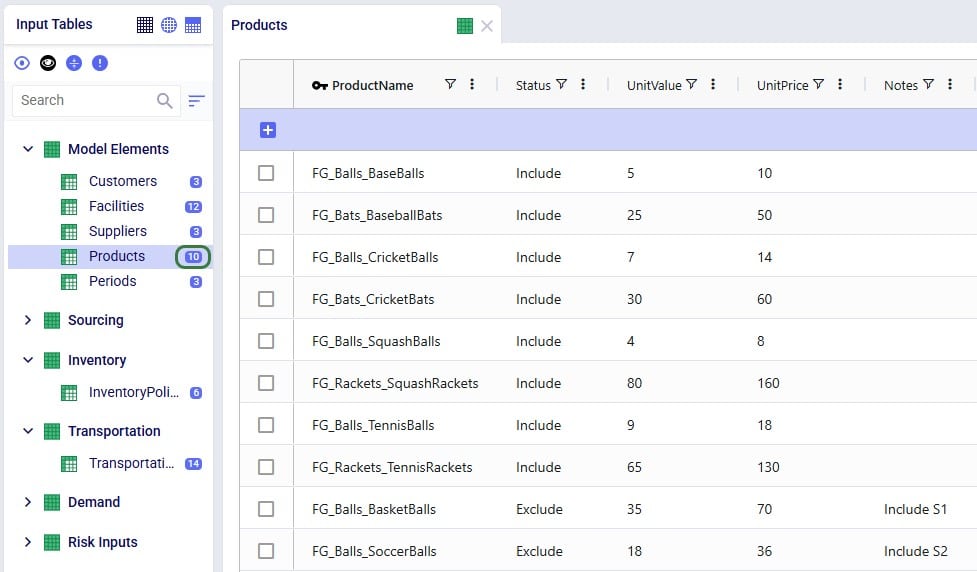

The following screenshot shows an output factors table containing 2 output factors, one measuring service and the other cost:

Utility curves convert simulation outputs into comparable fitness scores.

Why we need them:

How they work:

Example:

Holding Cost Utility Curve:

Business benefit: Utility curves let you define what "good" and "bad" mean for each metric. They translate apples-and-oranges metrics into a single comparable score.

The overall fitness score is the weighted sum of all output factors.

Formula:

Fitness Score = Σ(Weighted Score for each Output Factor)

Where for each factor:

Weighted Score = (Normalized Score from Utility Curve) × (Factor Weight)

Higher is better - Better solutions receive higher fitness scores.

Example calculation:

Output Factors:

1. Holding Cost: $75,000 → Raw Score 75 → Weighted 22.5 (weight 30%)

2. Transport Cost: $40,000 → Raw Score 60 → Weighted 15.0 (weight 25%)

3. Stockout Penalty: $10,000 → Raw Score 80 → Weighted 20.0 (weight 25%)

4. Service Level: 96% → Raw Score 90 → Weighted 18.0 (weight 20%)

Overall Fitness = 22.5 + 15.0 + 20.0 + 18.0 = 75.5 points

Important note: Scores are recalculated when new min/max values are discovered to ensure fair comparison across all generations.

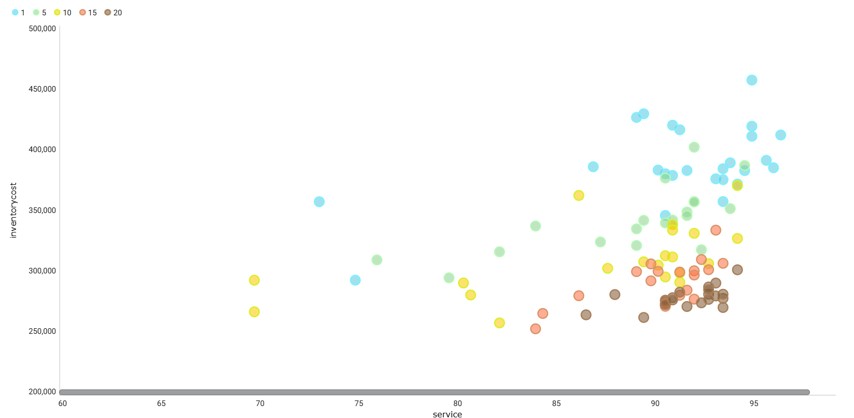

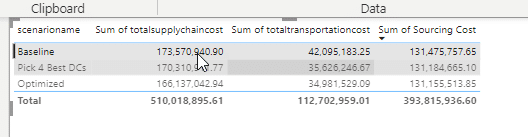

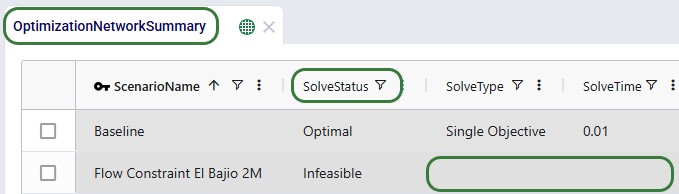

After a Dendro run completes, the fitness scores of all scenarios (=chromosomes) of all generations can be found in the Simulation Evolutionary Algorithm Summary output table (showing the top 10 records here with the highest overall fitness scores):

The challenge: Early in the optimization, Dendro does not know what the best and worst possible values are for each metric. As it explores, it might find:

The solution: Dendro dynamically rescales utility curves when new extremes are discovered.

What happens:

Business perspective: This ensures that a solution that looked "good" in Generation 2 is not unfairly favored if better solutions are discovered later. Everyone is judged by the same standard.
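The idea can be sketched as stretching the end point of a curve when a value falls outside it, then re-scoring every chromosome against the updated curve. Everything in this sketch is an assumption for illustration, including the function names, the rule of moving only the outermost point, and the sample numbers; the exact rescaling rule Dendro applies is not spelled out here.

```python
def rescale_curve(points, observed_value):
    """Stretch the outermost (value, score) point so the curve covers observed_value."""
    points = sorted(points)
    if observed_value < points[0][0]:
        points[0] = (observed_value, points[0][1])
    elif observed_value > points[-1][0]:
        points[-1] = (observed_value, points[-1][1])
    return points


def score(points, value):
    """Piecewise linear score on the curve, clamped at the end points."""
    points = sorted(points)
    if value <= points[0][0]:
        return float(points[0][1])
    if value >= points[-1][0]:
        return float(points[-1][1])
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x0 <= value <= x1:
            return y0 + (value - x0) / (x1 - x0) * (y1 - y0)


curve = [(1_800_000, 100), (2_200_000, 0)]
earlier_best = 1_800_000
print(score(curve, earlier_best))  # 100.0

# A later generation finds a cost of 1,600,000, below the curve's best point.
curve = rescale_curve(curve, 1_600_000)
print(curve)  # [(1600000, 100), (2200000, 0)]

# Re-scoring the earlier solution against the stretched curve lowers its score.
print(round(score(curve, earlier_best), 1))  # 66.7
```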

Keeping the best results:

Small population, many generations:

Characteristics:

Large population, fewer generations:

Characteristics:

Balanced approach (recommended):

High mutation (100% probability):

Low mutation (30-50% probability):

Single-point crossover (default):

Points To Crossover = 1

Multi-point crossover:

Points To Crossover = 2 or more

When to disable crossover:

Key indicators of healthy optimization:

The Simulation Evolutionary Algorithm Summary output table can be used to assess the above 2 points as it contains the fitness scores of all scenarios (=chromosomes) that were run for all generations. See an example screenshot of this table further above in the Fitness Score Calculation section.

This last point can be assessed by reviewing the logs of the base scenario used for the Dendro run.

Logging output: Dendro logs key events. These can be found in the Run Manager application, which is another application on the Optilogic platform. The Job Log of the base scenario that Dendro is run on contains quite a lot of detail on events, such as:

This screenshot shows part of a Job Log for a Dendro run:

The Job Records log, which can be accessed by clicking on the second icon in the row of icons at the top right of the logs, provides a higher-level summary of the Dendro run, just calling out the main events:

The GA excels in the following situations:

The mechanism: Dendro automatically detects and eliminates duplicate chromosomes.

How it works:

Business benefit: Ensures computational resources are used efficiently - never simulating the same policy combination twice.
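Duplicate detection of this kind is commonly done by turning each chromosome into a canonical, hashable key and remembering which keys have already been simulated. The sketch below shows that general technique with hypothetical names and data layout; it is not a description of Dendro's internal mechanism.

```python
def chromosome_key(chromosome):
    """Build an order-independent, hashable key for a chromosome.

    chromosome: dict mapping (facility, product) to a dict of policy values.
    """
    return tuple(
        sorted(
            (gene_id, tuple(sorted(params.items())))
            for gene_id, params in chromosome.items()
        )
    )


def drop_duplicates(candidates, already_simulated):
    """Keep only chromosomes whose key has not been seen; record new keys."""
    unique = []
    for chromosome in candidates:
        key = chromosome_key(chromosome)
        if key not in already_simulated:
            already_simulated.add(key)
            unique.append(chromosome)
    return unique


seen = set()
first = {("DC_1", "Product_1"): {"ReorderPoint": 500, "OrderUpTo": 1000}}
same_policy = {("DC_1", "Product_1"): {"OrderUpTo": 1000, "ReorderPoint": 500}}
different = {("DC_1", "Product_1"): {"ReorderPoint": 525, "OrderUpTo": 1050}}

to_simulate = drop_duplicates([first, same_policy, different], seen)
print(len(to_simulate))  # 2
```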

Challenge: Each chromosome generates a simulation scenario with potentially gigabytes of output data.

Solution:

Business benefit: You can analyze the best solutions in detail without storing data for thousands of unsuccessful experiments.

Network:

Configuration:

Process:

Generation 1:

Generations 2-10:

Generations 11-15:

Generations 16-20:

Results:

Dendro's Genetic Algorithm is a powerful, flexible optimization engine that:

By understanding how the algorithm works, you can:

The GA is not magic – it is a systematic, intelligent search process. Like any tool, it works best when used thoughtfully and configured appropriately for your specific situation.


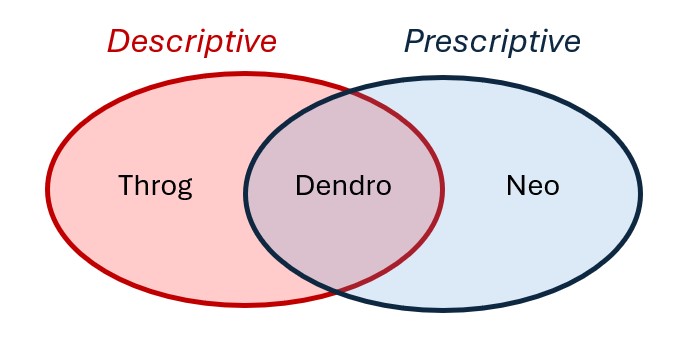

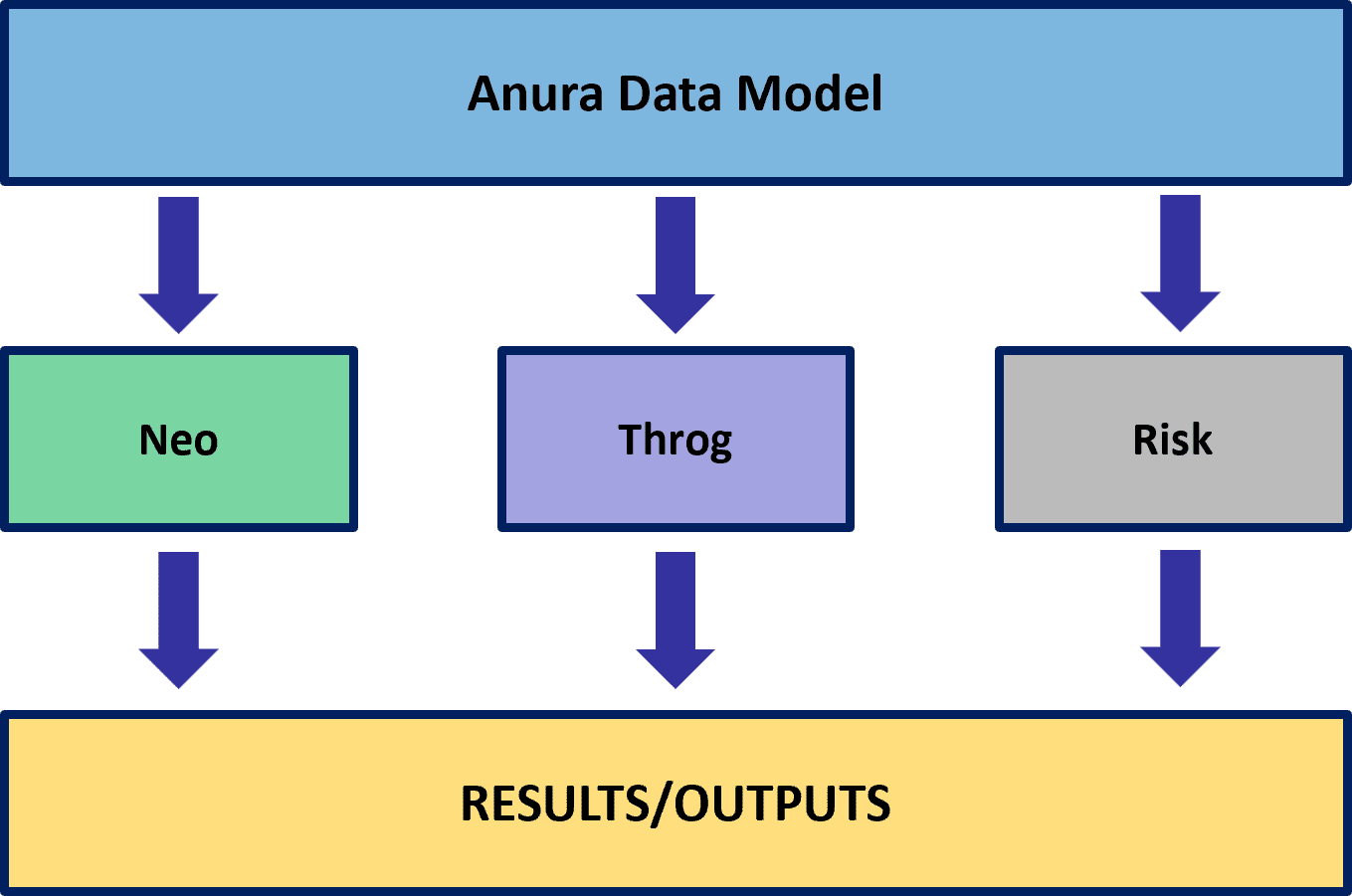

Dendro is Optilogic's simulation-optimization engine. A prime use case for Dendro is inventory policy optimization.

Simulation-optimization is a method in which simulation is leveraged to intelligently explore alternative configurations of a system. Dendro accomplishes this data-driven search by layering a genetic algorithm on top of simulation; simulation is the core of a Dendro model. Before a Dendro study can begin, a simulation model (run with the Throg engine) must be built, verified, and validated.

Simulation-optimization enables us to ask and answer questions that we cannot address in traditional network optimization or simulation alone.

In this article, we will explore:

The modeling methods of network optimization (Neo), discrete event simulation (Throg), and simulation-optimization (Dendro) address different supply chain design use cases.

A prime use case for Dendro is inventory policy optimization: right-sizing inventory levels by changing inventory policy parameters (reorder points, reorder quantities, etc.) with the goal of balancing cost and service. Dendro's foundation in simulation minimizes the abstraction of cost accounting, service metrics, and the business rules surrounding inventory management. Dendro provides actionable inventory policy recommendations and data-driven evidence to support those changes.

Primary Focus: Determining where inventory should be positioned across the network.

Use Case: Network design decisions that include high-level inventory considerations -- such as stocking locations, target turns, and working capital trade-offs.

How It Handles Inventory:

Primary Focus: Testing and observing how specific inventory policies perform under realistic operational dynamics.

Use Case: Evaluate inventory control logic (e.g., reorder point, order quantity, order-up-to level) in a time-based simulation environment.

How It Handles Inventory:

Primary Focus: Finding better inventory policies that balance cost and service, combining Throg's simulation accuracy with an optimization engine.

Use Case: Adjust inventory policy parameters (e.g., reorder point, reorder quantity, policy type) to find configurations that deliver the best cost-service trade-offs.

How It Handles Inventory:

While this comparison highlights how each engine contributes to inventory management decisions, the specific use case covered in this article is inventory policy optimization -- using Dendro to balance total inventory carrying cost and network-level customer service (measured as quantity fill rate).

That said, Dendro's capabilities extend far beyond inventory. The same framework of input factors, output factors, and utility functions can be applied to a wide range of optimization problems -- and its utility components are not limited to just cost and service. Dendro can optimize for any measurable performance metric that matters to your business.

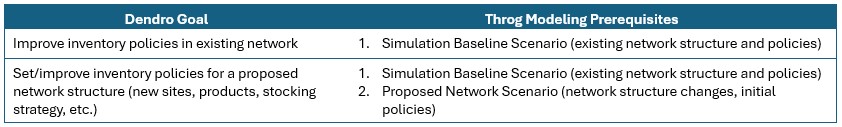

As stated above, a Dendro study cannot be initiated without first establishing a Throg simulation model; a Dendro project should be thought of as a simulation project with an added layer of analysis. Careful Throg model verification and validation are part of a simulation project and are therefore a prerequisite for a Dendro study. To learn how to set up a Throg simulation model, we recommend reviewing the following on-demand training content:

In addition, Throg-specific articles can be found here on the Help Center.

Throg scenario results serve as one piece of input for a Dendro run. Identification of the appropriate Throg scenario to apply Dendro to depends on the goal of the Dendro study. The network structure under which the modeler is seeking to optimize inventory policies must be represented in a Throg scenario.

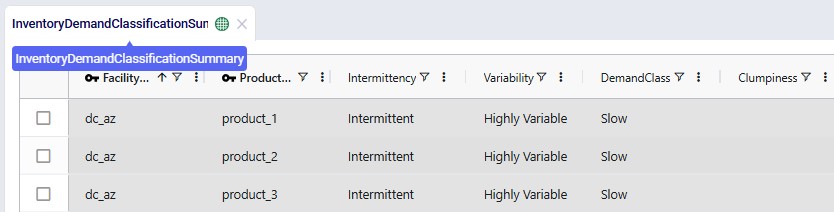

Inventory policies are required to run a simulation scenario. In some cases, a modeler may not have existing inventory policies to utilize in a baseline scenario. Similarly, simulating a proposed network structure requires first setting inventory policies for new site-product combinations. To set policies, we recommend utilizing the Demand Classification utility in Cosmic Frog's Utilities module.

Suggested inventory policies from the Inventory Policy Data Summary output table can then be employed in the Inventory Policies input table. For the sake of testing while the Throg model is being built and verified, users may find it helpful to initially leverage simple placeholder inventory policies where policies are unknown or not yet in existence (e.g., (s,S) = (0,0)).

Note: if the modeler's goal is to set policies for a proposed network structure, it is recommended to first optimize Baseline inventory policies. This enables the modeler to compare potential performance of the existing network structure (i.e., performance under optimized policies) with performance of the proposed network structure. This is especially encouraged if the Demand Classification utility was used to set Baseline inventory policies.

Before running Dendro to optimize inventory policies, the modeler must consider:

The answers to these questions will inform the design of Dendro model inputs.

Every Dendro optimization begins with a well-defined model foundation and input configuration.

This configuration tells Dendro three essential things:

Together, these define the search space and fitness criteria that drive optimization.

Dendro builds directly on your validated Throg simulation model, which serves as the environment for its optimization runs.

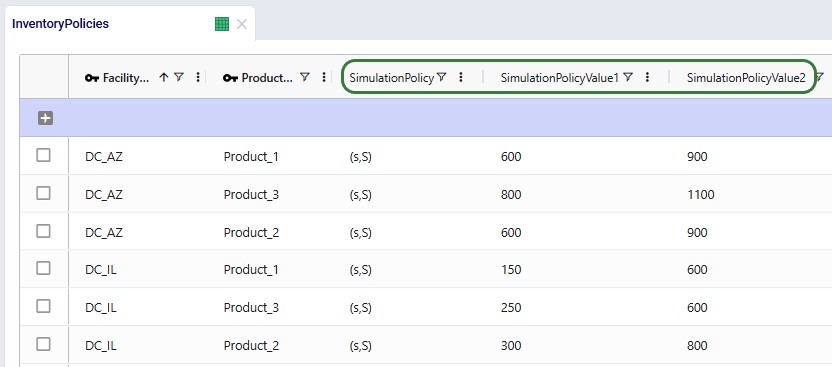

All Throg input tables -- facilities, customers, products, and policies -- are carried into Dendro.

For inventory-focused optimizations, the Inventory Policies input table is most critical.

It defines policy types and parameters such as reorder points and order-up-to levels. These become natural candidates for Dendro input factors.

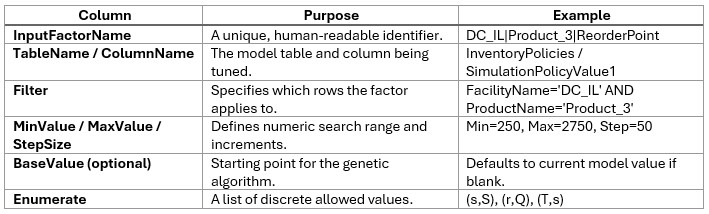

The Input Factors input table tells Dendro which parameters it can adjust during optimization. Each input factor represents a decision lever -- for instance, a reorder point or a policy type -- that Dendro will tune to seek better outcomes.

The search space defines both the breadth (how wide Dendro can explore) and the granularity (how detailed the exploration is).

The goal is a "computationally tractable search space" -- broad enough for Dendro to discover impactful alternatives but focused enough to identify patterns efficiently.

Learn all about input factors in the "Dendro: Input Factors Guide".

Once Dendro knows what it can vary, it needs to know how to judge success. The Output Factors input table defines the KPIs Dendro will use to evaluate each scenario and how they are scored.

Utility curves express your business preferences:

Examples:

Tip: Curves should span the expected simulation range (e.g., 5th-95th percentile of early results). Dendro will stretch the utility curves if results fall outside the range, which can distort scoring.
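One way to choose the endpoints of a utility curve is to compute the 5th and 95th percentiles of a KPI across early simulation results. The sketch below uses only Python's standard library; the function name `curve_span` and the sample cost values are illustrative assumptions, not Dendro outputs or a Dendro API.

```python
import statistics


def curve_span(values):
    """Return the (5th percentile, 95th percentile) of observed KPI values."""
    # quantiles(n=20) returns 19 cut points at 5%, 10%, ..., 95%.
    cuts = statistics.quantiles(values, n=20, method="inclusive")
    return cuts[0], cuts[-1]


# Hypothetical total-cost results from a handful of early scenarios.
early_costs = [
    1_480_000, 1_520_000, 1_560_000, 1_610_000, 1_650_000,
    1_700_000, 1_760_000, 1_820_000, 1_900_000, 2_050_000,
]
low, high = curve_span(early_costs)
print(f"Anchor the utility curve between {low:,.0f} and {high:,.0f}")
```

The two returned values are candidates for the first and last points of the utility curve, so that most observed results fall inside the curve's span.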

Weights dictate how much influence each KPI has in Dendro's overall fitness score calculation.

Learn more about output factors in the "Dendro: Output Factors Guide".

A well-scoped input configuration defines the playing field for Dendro's optimization.

By combining realistic input ranges, balanced KPI scoring, and robust run settings, you enable Dendro to explore intelligently, finding high-performing, data-backed configurations without unnecessary computation.

Dendro is built to explore a wide range of supply chain configurations using your verified and validated Throg simulation scenario(s) as the base. Running a Dendro job is straightforward, but there are a few important steps and best practices to keep in mind to ensure smooth operation.

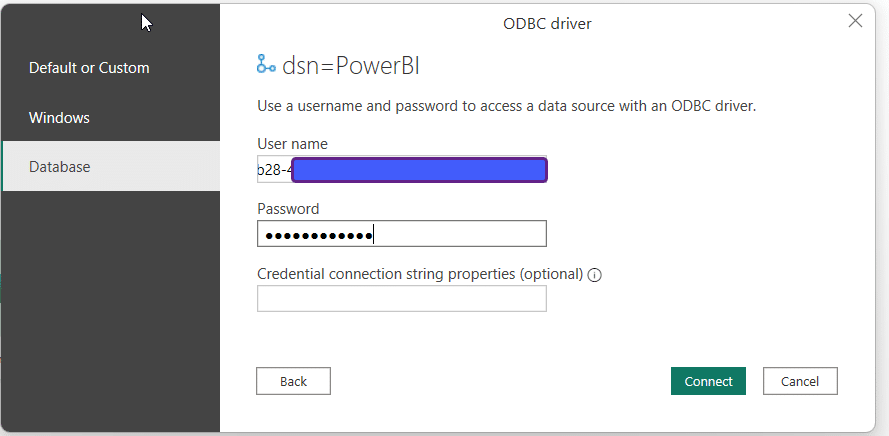

To get started:

    INSERT INTO anura_2_8.modelrunoptions (
        datatype,
        description,
        option,
        status,
        technology,
        uidisplaycategory,
        uidisplayname,
        uidisplayorder,
        uidisplaysubcategory,
        value)
    VALUES (
        'double',
        'Time limit (seconds) on individual Dendro scenarios',
        'DendroTimeout',
        'Include',
        '[DENDRO]',
        'Basic',
        'Dendro Timeout',
        '',
        '',
        '3600');

The technology parameters define how Dendro explores the solution space -- including how many scenarios to generate, how they evolve, and how long each simulation may run. As mentioned above, the default values are typically sufficient for a first run. The following are the main parameters that users may want to update in case their defaults are not suitable.

Key Settings

See also more detailed explanations of all Dendro technology parameters here.

Tuning Guidance

Use these principles to fine-tune your technology parameters:

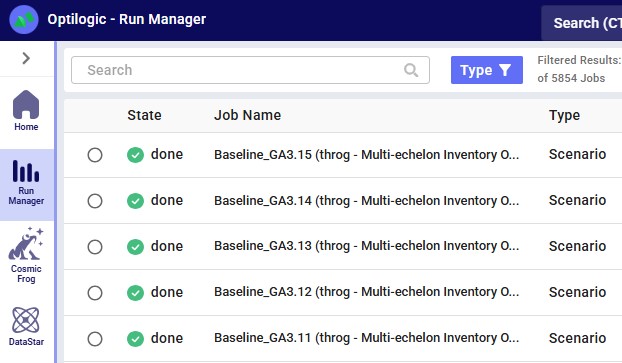

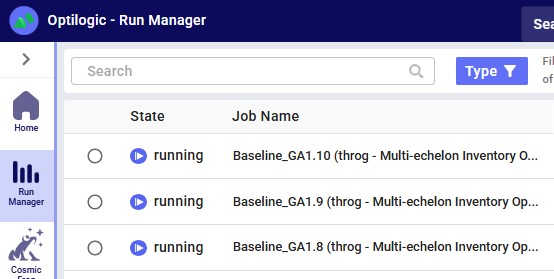

In the course of a Dendro run, the system launches many scenarios for each generation. This is the expected behavior for the genetic algorithm.

Key tips for tracking progress:

Running Dendro is as simple as selecting the engine and starting from a validated Throg model. From there, you can monitor progress, let the algorithm evolve scenarios, and apply best practices for canceling or troubleshooting runs. By following these guidelines, you ensure that Dendro can efficiently search for high-performing supply chain configurations.

To understand how the Dendro genetic algorithm works in detail, please see "Dendro: Genetic Algorithm Guide".

When a Dendro run completes, it produces a rich set of outputs that capture the results of the genetic algorithm's search. These outputs represent the different supply chain configurations that were explored, along with how each one performed according to the objectives you defined (e.g., cost, service level, or a balance of both).

Rather than giving you a single "right" answer, Dendro presents a spectrum of high-performing options. As the modeler, you use these results to evaluate trade-offs and select the scenarios that best align with your business goals.

During a run, Dendro evaluates each candidate scenario by:

Dendro records the input factors and output factors of every scenario it evaluates. However, only the top 20 highest-performing scenarios are saved as named scenarios in your model, making them easier to access and reuse.

After the run, the top 20 scenarios are automatically saved in your model. These represent the best balances of trade-offs Dendro discovered within your defined search space.

Each scenario has an overall fitness score, calculated as the weighted sum of its output factor utility values. A higher score means the scenario performed well according to the priorities you set (e.g., balancing low cost with high service).
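The weighted-sum calculation can be sketched in a few lines of Python. This is a minimal illustration, not Dendro's implementation: it assumes utility is interpolated linearly between curve points and clamps values outside the curve (Dendro itself stretches the curve in that case). The curves, weights, and KPI values are illustrative.

```python
def utility(curve, x):
    """Piecewise-linear utility of KPI value x for a curve of [kpi_value, utility] points."""
    points = sorted(curve)
    # Assumption: clamp outside the curve's span (Dendro itself stretches the curve).
    if x <= points[0][0]:
        return points[0][1]
    if x >= points[-1][0]:
        return points[-1][1]
    for (x0, u0), (x1, u1) in zip(points, points[1:]):
        if x0 <= x <= x1:
            return u0 + (u1 - u0) * (x - x0) / (x1 - x0)


def fitness(output_factors, kpi_values):
    """Weighted sum of utility values across all output factors."""
    return sum(
        f["weight"] * utility(f["curve"], kpi_values[name])
        for name, f in output_factors.items()
    )


# Illustrative output factors: utility curve points and weights.
output_factors = {
    "TotalCost": {"curve": [[1_500_000, 100], [2_000_000, 0]], "weight": 0.40},
    "ServiceLevel": {"curve": [[0, 0], [85, 10], [95, 99], [100, 100]], "weight": 0.35},
    "DC_HoldingCost": {"curve": [[50_000, 100], [150_000, 0]], "weight": 0.25},
}
# Hypothetical KPI results for one scenario.
kpi_values = {"TotalCost": 1_750_000, "ServiceLevel": 95, "DC_HoldingCost": 100_000}
score = fitness(output_factors, kpi_values)
print(round(score, 2))
```

In this example the utilities are 50, 99, and 50, so the score is 0.40 × 50 + 0.35 × 99 + 0.25 × 50, or about 67.15.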

Tip: The "best" scenario numerically may not always be the most practical operationally. Always interpret results in context.

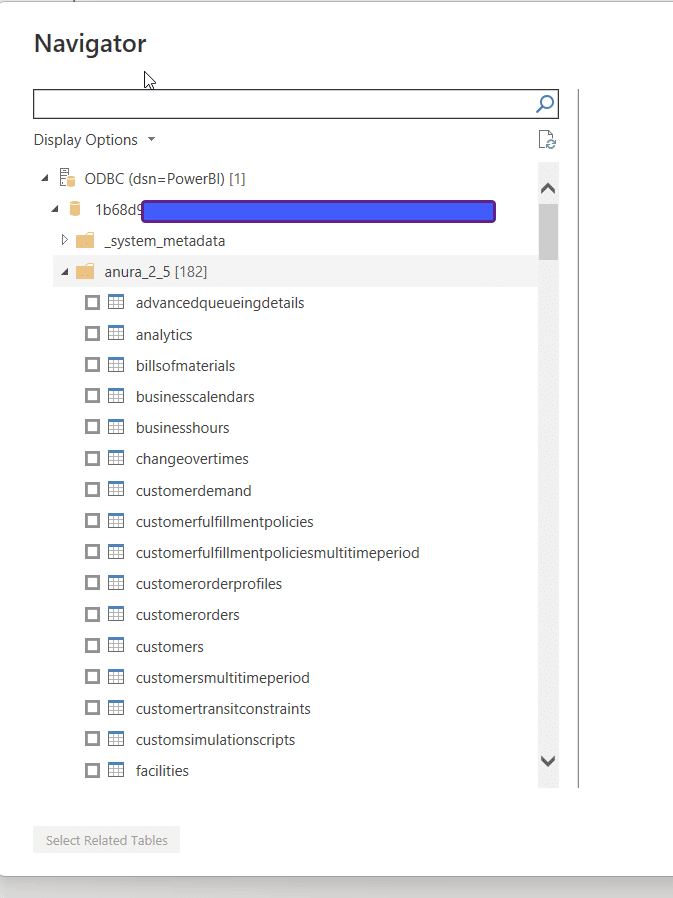

Where to Find Key Results

Tips for Reviewing Results

Dendro generates raw scenario outputs, but actionable recommendations come from your interpretation in the context of business goals.

In summary, Dendro does not hand you a single answer; it gives you a portfolio of high-performing options. By interpreting these scenarios in context, you can make informed decisions, balance trade-offs, and run deeper simulations where needed to guide your supply chain strategy.

You may find these links helpful, some of which have already been mentioned above:

As always, please feel free to reach out to the Optilogic Support team at support@optilogic.com in case of questions or feedback.

Input factors are at the heart of Dendro optimization - they define what Dendro can change to improve your supply chain performance. Think of input factors as the "knobs" Dendro can turn to find better inventory policies.

Key concepts:

This guide explains how to configure input factors to give Dendro the right level of control over your inventory policies. These can be specified in the Input Factors input table in Cosmic Frog.

Recommended reading prior to diving into this guide: Getting Started with Dendro, a high-level overview of how Dendro works, and Dendro: Genetic Algorithm Guide which explains the inner workings of Dendro in more detail.

An input factor tells Dendro's Genetic Algorithm:

Dendro explores these decisions systematically across your entire network to find high-performing combinations.

Dendro supports three types of input factors, each suited for different kinds of optimization variables. These are all specified in the Input Factors input table; see also "The Input Factors Table" section further below.

What they are: Numeric values that can vary within a minimum and maximum range.

Best for:

Configuration fields:

    InputFactorName: WH_A_Prod_1_ReorderPoint
    TableName: InventoryPolicies
    ColumnName: SimulationPolicyValue1
    Filter: FacilityName='Warehouse A' AND ProductName='Product 1'
    MinValue: 100
    MaxValue: 1000
    StepSize: 10
    BaseValue: 500

How it works:

Example:

    Chromosome 1 (baseline): 500
    Chromosome 2 (random): 730
    Chromosome 3 (random): 220
    Chromosome 4 (mutated from 2): 740 (increased by one step)

What they are: Values selected from a predefined list of discrete options.

Best for:

Configuration fields:

    InputFactorName: DC_Policy_Type
    TableName: InventoryPolicies
    ColumnName: SimulationPolicy
    Filter: FacilityName='Distribution Center'
    Enumerate: (s,S)|(R,Q)|(T,S)
    BaseValue: (s,S)

How it works:

Example:

    Chromosome 1 (baseline): (s,S)
    Chromosome 2 (random): (R,Q)
    Chromosome 3 (random): (T,S)
    Chromosome 4 (mutated from 2): (s,S) (switched from R,Q)

What they are: Grouped input factors that manage related inventory policy parameters together as a coordinated set.

Best for:

How they work:

Example configuration:

    Factor 1:
    InputFactorName: WH_A_Prod_1_PolicyType
    TableName: InventoryPolicies
    ColumnName: SimulationPolicy
    Filter: FacilityName='Warehouse A' AND ProductName='Product 1'
    Enumerate: (s,S)|(R,Q)

    Factor 2:
    InputFactorName: WH_A_Prod_1_Value1
    TableName: InventoryPolicies
    ColumnName: SimulationPolicyValue1
    Filter: FacilityName='Warehouse A' AND ProductName='Product 1'
    MinValue: 0
    MaxValue: 1000
    StepSize: 10

    Factor 3:
    InputFactorName: WH_A_Prod_1_Value2
    TableName: InventoryPolicies
    ColumnName: SimulationPolicyValue2
    Filter: FacilityName='Warehouse A' AND ProductName='Product 1'
    MinValue: 0
    MaxValue: 1500
    StepSize: 10

Automatic policy conversion:

When policy type changes from (R,Q) to (s,S):

When changing from (s,S) to (R,Q):

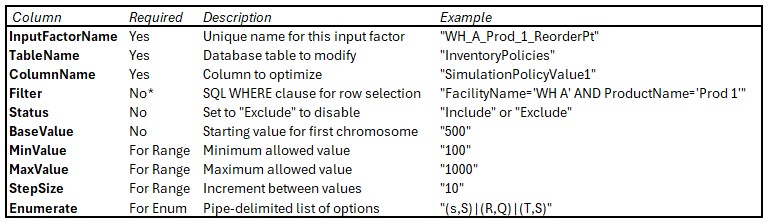

Input factors are configured in the Input Factors input table with the following columns:

*Filter is required for inventory policy input factors to identify the specific facility-product combination.

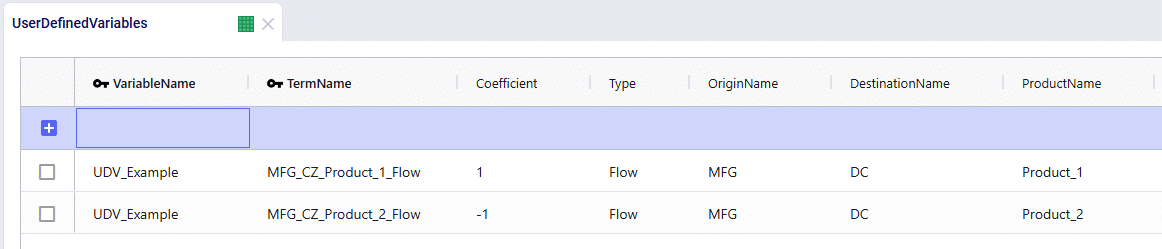

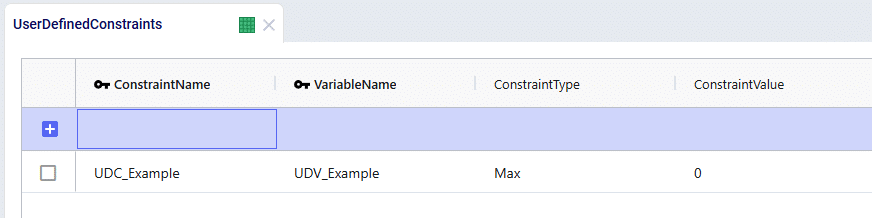

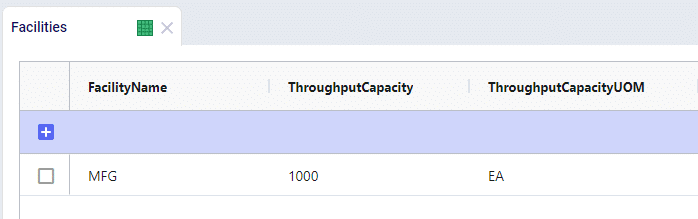

The following 2 screenshots show the Input Factors table in Cosmic Frog. It contains 3 records which are set up the same as the example in the "Inventory Policy Sets (Advanced)" section above:

Optimize the reorder point for a single facility-product:

    InputFactorName: Seattle_Widget_ReorderPoint
    TableName: InventoryPolicies
    ColumnName: SimulationPolicyValue1
    Filter: FacilityName='Seattle DC' AND ProductName='Widget A'
    MinValue: 200
    MaxValue: 800
    StepSize: 20
    BaseValue: 500
    Status: Include

Result: Dendro will explore reorder points from 200 to 800 in steps of 20 (200, 220, 240, ..., 800).

Optimize order quantity with small increments:

    InputFactorName: Boston_Gadget_OrderQty
    TableName: InventoryPolicies
    ColumnName: SimulationPolicyValue2
    Filter: FacilityName='Boston WH' AND ProductName='Gadget B'
    MinValue: 50
    MaxValue: 500
    StepSize: 5
    BaseValue: 200
    Status: Include

Result: Dendro will explore 91 different values (50, 55, 60, ..., 500).
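The number of values a range factor can take follows directly from MinValue, MaxValue, and StepSize. A minimal sketch (the function name `range_values` is an assumption for illustration, not part of Dendro):

```python
def range_values(min_value, max_value, step_size):
    """List every value a range input factor can take, from min to max inclusive."""
    count = (max_value - min_value) // step_size + 1
    return [min_value + i * step_size for i in range(count)]


order_quantities = range_values(50, 500, 5)
reorder_points = range_values(200, 800, 20)
print(len(order_quantities), len(reorder_points))
```

For the two range examples above, this gives 91 order quantities (50 to 500 in steps of 5) and 31 reorder points (200 to 800 in steps of 20).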

Let Dendro choose between different inventory policies:

    InputFactorName: Chicago_Part_PolicyType
    TableName: InventoryPolicies
    ColumnName: SimulationPolicy
    Filter: FacilityName='Chicago Plant' AND ProductName='Part C'
    Enumerate: (s,S)|(R,Q)|(T,S)
    BaseValue: (s,S)
    Status: Include

Result: Dendro will test (s,S), (R,Q), and (T,S) policies to see which performs best.

Optimize both policy type and parameters:

    # Policy Type
    InputFactorName: LA_Motor_PolicyType
    TableName: InventoryPolicies
    ColumnName: SimulationPolicy
    Filter: FacilityName='LA Warehouse' AND ProductName='Motor D'
    Enumerate: (s,S)|(R,Q)
    BaseValue: (s,S)

    # Parameter 1 (reorder point or 's')
    InputFactorName: LA_Motor_Value1
    TableName: InventoryPolicies
    ColumnName: SimulationPolicyValue1
    Filter: FacilityName='LA Warehouse' AND ProductName='Motor D'
    MinValue: 0
    MaxValue: 500
    StepSize: 10
    BaseValue: 100

    # Parameter 2 (order-up-to or order quantity)
    InputFactorName: LA_Motor_Value2
    TableName: InventoryPolicies
    ColumnName: SimulationPolicyValue2
    Filter: FacilityName='LA Warehouse' AND ProductName='Motor D'
    MinValue: 0
    MaxValue: 800
    StepSize: 10
    BaseValue: 300

Result: Dendro will:

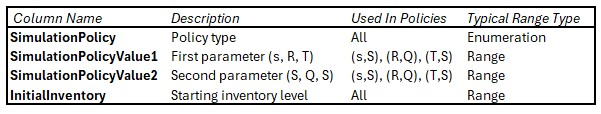

The most common input factors for inventory optimization target these columns in the Inventory Policies input table:

Example:

Input Factors:

    [0] WH_A_Prod_1_ReorderPoint (Range: 100-1000, Step: 10, Base: 500)
    [1] WH_A_Prod_1_ReorderPoint (Range: 200-800, Step: 20, Base: 400)
    [2] WH_A_Prod_1_PolicyType (Enum: (s,S)|(R,Q), Base: (s,S))

Results in:

    Chromosome 1 (baseline): [500, 400, 0] (0 = first enum value = (s,S))
    Chromosome 2 (random): [730, 560, 1] (1 = second enum value = (R,Q))
    Chromosome 3 (random): [220, 640, 0] (0 = first enum value = (s,S))

When Dendro mutates a chromosome, it changes one or more input factor values:

For Range factors:

For Enumeration factors:

Example mutation:

    Original: [500, 400, 0]
    Mutated: [500, 420, 0] (Position 1 changed: 400 to 420)

When Dendro creates a scenario to simulate using the Throg engine:

Result: Each chromosome becomes a complete, runnable supply chain scenario with its specific inventory policy settings.
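The chromosome encoding and single-gene mutation shown in the examples above can be sketched as follows. This is a simplified illustration under stated assumptions, not Dendro's actual mutation logic: the `FACTORS` layout and the `decode` and `mutate` helpers are hypothetical, a mutation changes exactly one gene, range genes move by one step, and enumeration genes switch to a different option.

```python
import random

# Factor definitions mirroring the three-factor example; the dict layout is hypothetical.
FACTORS = [
    {"kind": "range", "min": 100, "max": 1000, "step": 10},
    {"kind": "range", "min": 200, "max": 800, "step": 20},
    {"kind": "enum", "options": ["(s,S)", "(R,Q)"]},
]


def decode(chromosome):
    """Translate gene values into the values written to the scenario's input tables."""
    return [
        f["options"][gene] if f["kind"] == "enum" else gene
        for f, gene in zip(FACTORS, chromosome)
    ]


def mutate(chromosome, rng):
    """Change one randomly chosen gene (assumed rule: one step, or a different option)."""
    child = list(chromosome)
    pos = rng.randrange(len(child))
    factor = FACTORS[pos]
    if factor["kind"] == "range":
        # Move one step up or down, staying inside [min, max].
        candidates = [child[pos] - factor["step"], child[pos] + factor["step"]]
        candidates = [v for v in candidates if factor["min"] <= v <= factor["max"]]
        child[pos] = rng.choice(candidates)
    else:
        # Switch to any other option index.
        others = [i for i in range(len(factor["options"])) if i != child[pos]]
        child[pos] = rng.choice(others)
    return child


baseline = [500, 400, 0]
child = mutate(baseline, random.Random(0))
print(decode(baseline), "->", decode(child))
```

Enumeration genes are stored as option indexes, so `[730, 560, 1]` decodes to reorder points 730 and 560 with policy type (R,Q).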

When turned on, the Automatically Generate Input Factors option (a model run option in the Dendro section of the Technology Parameters) automatically creates input factor configurations based on your inventory policies and service targets.

When to use:

How it works:

The automatic builder uses intelligent rules to set min/max ranges based on current values and service performance:

    MinValue: 0
    MaxValue: value + 5 (or adjusted based on service)
    StepSize: 1

Rationale: Small values need fine-grained control and room to explore modest increases.

    MinValue: max(1, rounded_value - 15)
    MaxValue: rounded_value + 15
    StepSize: 5

Values are rounded to base 5.

Rationale: Medium values can use larger steps (5) while still providing good resolution.

    MinValue: max(1, rounded_value - 50)
    MaxValue: rounded_value + 50
    StepSize: 10

Values are rounded to base 10.

Rationale: Larger inventories need wider exploration ranges with proportionally larger steps.

    MinValue: max(1, rounded_value - 200)
    MaxValue: rounded_value + 200
    StepSize: 20

Values are rounded to base 20.

Rationale: Very large inventories benefit from broad exploration with coarse granularity.
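The "round, then add and subtract a buffer" rule shared by the medium, large, and very large tiers can be sketched as below. The function name `tier_range` is hypothetical, and the sketch assumes "rounded to base N" means rounding to the nearest multiple of N. Because the value thresholds that select a tier are not restated here, the buffer and step are passed in explicitly.

```python
def tier_range(value, buffer, step):
    """Apply the 'round, then +/- buffer' rule for one magnitude tier."""
    # Assumption: round to the nearest multiple of `step`.
    # Note that Python's round() sends exact ties to the even neighbor.
    rounded = step * round(value / step)
    return {
        "MinValue": max(1, rounded - buffer),
        "MaxValue": rounded + buffer,
        "StepSize": step,
    }


print(tier_range(42, buffer=15, step=5))    # buffer 15, step 5
print(tier_range(137, buffer=50, step=10))  # buffer 50, step 10
```

A current value of 42 rounds to 40 and yields a 25 to 55 range in steps of 5; a current value of 137 rounds to 140 and yields a 90 to 190 range in steps of 10.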

The builder adjusts ranges based on current service performance:

When fill rate < target service level: The ratio = target_service / current_fill_rate is used to expand the maximum:

MaxValue = max(value + buffer, ratio * value)

Example:

When fill rate >= target service level: The builder is more conservative, allowing exploration below current value:

MaxValue = min(value + buffer, quantity_bounds)

Rationale: If service is already good, there may be opportunity to reduce inventory without harming service.

When fill rate <= 85% (poor service): MinValue is set to current value - does not explore lower values that would worsen service further.
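The three service-based rules above can be combined into one small function. This is a sketch under assumptions, not the builder's actual code: the function name, the argument names, and the use of percentages for fill rate are illustrative; `tier_min` stands for the MinValue produced by the magnitude rules, and `quantity_bound` stands for the `quantity_bounds` term, whose derivation is not described here.

```python
def service_adjusted_bounds(value, buffer, fill_rate, target, tier_min, quantity_bound):
    """Return (MinValue, MaxValue) after the service-based adjustments.

    fill_rate and target are percentages (e.g. 80 and 95); fill_rate must be > 0.
    """
    if fill_rate < target:
        # Under-serving: expand the maximum by the service ratio.
        ratio = target / fill_rate
        max_value = max(value + buffer, ratio * value)
    else:
        # Target met: stay conservative on the maximum.
        max_value = min(value + buffer, quantity_bound)
    # Poor service (fill rate <= 85%): do not explore below the current value.
    min_value = value if fill_rate <= 85 else tier_min
    return min_value, max_value


print(service_adjusted_bounds(1000, 50, fill_rate=80, target=96, tier_min=950, quantity_bound=1030))
print(service_adjusted_bounds(1000, 50, fill_rate=98, target=95, tier_min=950, quantity_bound=1030))
```

In the first call the fill rate of 80% is below the 96% target, so the maximum grows to about 1.2 × 1000 = 1200 and the minimum is held at the current value. In the second call service already exceeds the target, so the maximum is capped at 1030 and values down to 950 can be explored.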

For each inventory policy, the automatic generator typically creates:

Result: A complete Input Factors table ready for Dendro optimization.

Too narrow:

    MinValue: 490
    MaxValue: 510
    StepSize: 1

Problem: Dendro cannot explore significantly different solutions. Only 21 values to test.

Too wide:

    MinValue: 0
    MaxValue: 100000
    StepSize: 1

Problem: With over 100,000 possible values, Dendro will likely miss the optimal value in the haystack.

Just right:

    MinValue: 200
    MaxValue: 800
    StepSize: 20

Why: Reasonable range around current value (500), coarse enough for efficient exploration (31 values), fine enough for precision.

Rule of thumb: Step size should be 1-5% of the expected optimal value.

For value of approximately 100:

For value of approximately 1000:

Why it matters:

Bad:

    MinValue: 100
    MaxValue: 1000
    StepSize: 40

Problem: (1000-100) / 40 = 22 remainder 20; the max might not be reachable exactly.

Good:

    MinValue: 100
    MaxValue: 1000
    StepSize: 30

Check: (1000-100) / 30 = 30 remainder 0; the max can be reached exactly.

Dendro automatically adjusts MaxValue if needed to ensure even division.
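The divisibility check is easy to run before loading a configuration. The helper below (a hypothetical name, not a Dendro function) reports whether the step divides the range evenly and which value is the highest one reachable from MinValue; it does not attempt to reproduce how Dendro adjusts MaxValue.

```python
def check_step(min_value, max_value, step_size):
    """Return (divides_evenly, highest_reachable_value) for a range factor."""
    steps, remainder = divmod(max_value - min_value, step_size)
    highest_reachable = min_value + steps * step_size
    return remainder == 0, highest_reachable


print(check_step(100, 1000, 40))  # step 40 leaves a remainder of 20
print(check_step(100, 1000, 30))  # step 30 divides the range evenly
```

With a step of 40 the highest reachable value is 980, so 1000 is never tested; with a step of 30 the maximum of 1000 is reached exactly.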

Best practice: Set BaseValue to your current policy value.

Why:

When BaseValue is null:

For inventory policies, always configure factors for the same facility-product together:

Good - Coordinated filters:

    # All three factors have identical Filter
    WH_A_Prod_1_PolicyType  Filter: FacilityName='WH A' AND ProductName='Prod 1'
    WH_A_Prod_1_Value1      Filter: FacilityName='WH A' AND ProductName='Prod 1'
    WH_A_Prod_1_Value2      Filter: FacilityName='WH A' AND ProductName='Prod 1'

Bad - Mismatched filters:

    WH_A_Prod_1_PolicyType  Filter: FacilityName='WH A' AND ProductName='Prod 1'
    WH_A_Prod_1_Value1      Filter: FacilityName='WH A' AND ProductName='Prod 1'
    WH_A_AllProds_Value2    Filter: FacilityName='WH A'  # WRONG - does not match!

Why: Dendro groups factors by filter. Mismatched filters create separate uncoordinated genes.

Guideline: More input factors = larger search space = longer optimization

Example calculation:

Search space size: 50^200 = enormous!
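To get a feel for the scale, the search space size is the product of the number of values each factor can take. A short sketch, reading 50^200 as 200 factors with 50 possible values each (the helper name is illustrative):

```python
import math


def search_space_size(value_counts):
    """Number of distinct chromosomes: the product of each factor's value count."""
    return math.prod(value_counts)


size = search_space_size([50] * 200)
print(f"about 10^{len(str(size)) - 1} combinations")
```

The result has 340 digits, so exhaustive evaluation is out of the question. Halving the number of factors or coarsening step sizes shrinks the space by many orders of magnitude.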

Best practice:

Use the Status column: Set Status = 'Exclude' for factors you want to temporarily disable without deleting.

Common mistakes:

Wrong - Missing quotes:

Filter: FacilityName=Warehouse A AND ProductName=Widget

Correct - Proper quotes:

Filter: FacilityName='Warehouse A' AND ProductName='Widget'

Wrong - Incorrect column names:

Filter: Facility='WH A' AND Product='Widget'

Correct - Match actual table column names:

Filter: FacilityName='WH A' AND ProductName='Widget'

Testing tip: Run your filter as a SQL WHERE clause to verify it returns exactly one row (you can use the SQL Editor application on the Optilogic platform for this):

    SELECT * FROM InventoryPolicies
    WHERE FacilityName='WH A' AND ProductName='Widget';

Goal: Keep current policy types and order quantities; optimize reorder points only.

Configuration:

For each facility-product:

    InputFactorName: {Facility}_{Product}_ReorderPt
    TableName: InventoryPolicies
    ColumnName: SimulationPolicyValue1
    Filter: FacilityName='{Facility}' AND ProductName='{Product}'
    MinValue: [calculated]
    MaxValue: [calculated]
    StepSize: [appropriate for value scale]
    BaseValue: [current value]

Result: Dendro explores different reorder points while keeping other policy parameters fixed.

Goal: Let Dendro choose policy types and all parameters.

Configuration:

For each facility-product:

    # Policy type
    InputFactorName: {Facility}_{Product}_Policy
    TableName: InventoryPolicies
    ColumnName: SimulationPolicy
    Filter: FacilityName='{Facility}' AND ProductName='{Product}'
    Enumerate: (s,S)|(R,Q)

    # Parameter 1
    InputFactorName: {Facility}_{Product}_Value1
    TableName: InventoryPolicies
    ColumnName: SimulationPolicyValue1
    Filter: FacilityName='{Facility}' AND ProductName='{Product}'
    [Range configuration]

    # Parameter 2
    InputFactorName: {Facility}_{Product}_Value2
    TableName: InventoryPolicies
    ColumnName: SimulationPolicyValue2
    Filter: FacilityName='{Facility}' AND ProductName='{Product}'
    [Range configuration]

Result: Maximum flexibility - Dendro explores different policy types and parameter values.

Goal: Optimize top 20% of items by revenue; leave others unchanged.

Approach:

SQL example for selective generation - Create Input Factors for top revenue items only:

    INSERT INTO InputFactors (...)
    SELECT ... FROM InventoryPolicies ip
    JOIN ProductRevenue pr ON ip.ProductName = pr.ProductName
    WHERE pr.AnnualRevenue > [threshold]

Result: Focused optimization on items that matter most, faster runtime.
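The same selection can be prototyped outside the database. The Python sketch below picks the top 20% of products by revenue and builds one reorder-point factor row per selected facility-product. The revenue figures, facility name, and helper names are hypothetical; the row keys follow the Input Factors columns used in the examples in this guide, and the range fields are left out because they depend on each item's current policy value.

```python
def top_revenue_products(revenue_by_product, share=0.20):
    """Return the top `share` of products ranked by annual revenue."""
    ranked = sorted(revenue_by_product, key=revenue_by_product.get, reverse=True)
    keep = max(1, round(len(ranked) * share))
    return ranked[:keep]


def reorder_point_factor(facility, product):
    """Build one Input Factors row (as a dict) for a facility-product reorder point."""
    name = f"{facility}_{product}_ReorderPt".replace(" ", "_")
    return {
        "InputFactorName": name,
        "TableName": "InventoryPolicies",
        "ColumnName": "SimulationPolicyValue1",
        "Filter": f"FacilityName='{facility}' AND ProductName='{product}'",
        "Status": "Include",
    }


# Hypothetical annual revenue per product.
revenue = {
    "P01": 900_000, "P02": 120_000, "P03": 640_000, "P04": 75_000, "P05": 310_000,
    "P06": 45_000, "P07": 210_000, "P08": 30_000, "P09": 18_000, "P10": 95_000,
}
selected = top_revenue_products(revenue)
rows = [reorder_point_factor("Seattle DC", p) for p in selected]
print(selected)
```

With ten products, two are selected (P01 and P03), and only those receive input factors; every other policy stays at its current value.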

Goal: Use wider ranges for variable-demand products, tighter for stable products.

Configuration:

Stable, predictable products:

    MinValue: current_value * 0.8
    MaxValue: current_value * 1.2
    StepSize: small (fine control)

Variable, unpredictable products:

    MinValue: current_value * 0.5
    MaxValue: current_value * 2.0
    StepSize: larger (broader exploration)

Result: Appropriate exploration ranges based on demand variability.
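As a small illustration, the two multiplier pairs can be applied with a helper like the one below. How a product is classified as stable or variable is left to the modeler; the boolean flag and the function name are assumptions for this sketch.

```python
def exploration_range(current_value, demand_is_stable):
    """Return (MinValue, MaxValue) using the stable or variable multipliers."""
    low, high = (0.8, 1.2) if demand_is_stable else (0.5, 2.0)
    return current_value * low, current_value * high


print(exploration_range(500, demand_is_stable=True))
print(exploration_range(500, demand_is_stable=False))
```

For a current value of 500, a stable product explores roughly 400 to 600, while a variable product explores roughly 250 to 1000. The resulting bounds may still need to be aligned with the chosen step size.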

Symptom: During loading, you see "no matching inventory policy" in the Job Log.

Cause: The Filter does not match any row in the InventoryPolicies table.

Solutions:

SELECT * FROM InventoryPolicies WHERE [your filter]
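The row-count check can also be scripted. The sketch below uses an in-memory SQLite table as a stand-in for the model database, purely so the example is self-contained; the table contents and the `count_matches` helper are hypothetical, and in practice you would run the query against your model in the SQL Editor.

```python
import sqlite3


def count_matches(conn, filter_text):
    """Count InventoryPolicies rows matched by a filter used as a WHERE clause."""
    # The filter is trusted model configuration here; do not build SQL this way
    # from untrusted input.
    query = f"SELECT COUNT(*) FROM InventoryPolicies WHERE {filter_text}"
    return conn.execute(query).fetchone()[0]


# Stand-in table with two hypothetical policies.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE InventoryPolicies (FacilityName TEXT, ProductName TEXT, SimulationPolicy TEXT)"
)
conn.executemany(
    "INSERT INTO InventoryPolicies VALUES (?, ?, ?)",
    [("WH A", "Widget", "(s,S)"), ("WH A", "Gadget", "(R,Q)")],
)

for filter_text in (
    "FacilityName='WH A' AND ProductName='Widget'",  # expected: exactly one row
    "FacilityName='WH B' AND ProductName='Widget'",  # no match: facility name is wrong
    "FacilityName='WH A'",                           # too broad: matches several rows
):
    print(count_matches(conn, filter_text), filter_text)
```

A count of 0 corresponds to the "no matching inventory policy" symptom, while a count above 1 means the filter does not identify a single facility-product combination.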

Symptom: Multiple separate genes instead of one coordinated policy set.

Cause: Filters do not match exactly across related factors.

Example problem:

    Factor 1 Filter: FacilityName='WH A' AND ProductName='Widget'
    Factor 2 Filter: FacilityName='WH A' and ProductName='Widget'  # lowercase 'and'!

Solution: Ensure identical filter text across all factors for the same facility-product.
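A quick pre-run check can catch filters that differ only in case or spacing. The sketch below groups factors by exact filter text, which is how grouping is described above, and then flags filters that become identical once case and whitespace are normalized. The helper names and the factor list are hypothetical.

```python
from collections import defaultdict


def group_by_filter(factors):
    """Group factor names by their exact filter text."""
    groups = defaultdict(list)
    for name, filter_text in factors:
        groups[filter_text].append(name)
    return dict(groups)


def near_duplicates(factors):
    """Return sets of filters that differ only in letter case or spacing."""
    canonical = defaultdict(set)
    for _, filter_text in factors:
        # Heuristic only: lowercasing also folds the quoted values.
        canonical[" ".join(filter_text.lower().split())].add(filter_text)
    return [sorted(variants) for variants in canonical.values() if len(variants) > 1]


factors = [
    ("WH_A_Widget_PolicyType", "FacilityName='WH A' AND ProductName='Widget'"),
    ("WH_A_Widget_Value1", "FacilityName='WH A' and ProductName='Widget'"),
]
print(len(group_by_filter(factors)), "groups")
print(near_duplicates(factors))
```

Here the two factors land in separate groups even though they target the same facility-product, and `near_duplicates` reports the pair so the filter text can be made identical.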

Symptom: Dendro tests impractical inventory levels (too high or too low).

Cause: Min/Max ranges too wide or poorly set.

Solutions:

Symptom: Fitness scores are not getting better across generations.

Possible causes:

Cause 1: Ranges too narrow

Cause 2: Step size too coarse

Cause 3: Wrong factors optimized

Cause 4: Conflicting objectives

Overall Fitness Scores can be assessed in the Simulation Evolutionary Algorithm Summary output table after a Dendro run has completed, while scores of individual output factors can be reviewed in the Simulation Evolutionary Algorithm Output Factor Report output table:

Symptom: Warnings or errors about order-up-to levels below reorder points.

Cause: Ranges configured without considering logical constraints.

Solution: Dendro handles this automatically through special mutation logic, but you can help by:

Note: Dendro automatically enforces S >= s during gene creation and mutation.
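Before a run, you can check whether the configured ranges even allow an order-up-to level below the reorder point. The helper below is a hypothetical configuration check, not Dendro's enforcement logic, and it applies only when the policy type is (s,S).

```python
def ranges_allow_violation(s_range, S_range):
    """True if some (s, S) pair drawn from the two ranges would have S < s.

    Each range is a (MinValue, MaxValue) tuple.
    """
    s_max = s_range[1]
    S_min = S_range[0]
    return S_min < s_max


print(ranges_allow_violation((0, 500), (0, 800)))    # overlapping ranges
print(ranges_allow_violation((0, 500), (500, 800)))  # S starts where s ends
```

Overlapping ranges such as 0 to 500 for s and 0 to 800 for S can produce invalid pairs that must then be repaired, whereas starting the S range at or above the top of the s range avoids them by construction.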

Input factors are the foundation of Dendro optimization:

Key takeaways:

Well-configured input factors give Dendro the right level of control to find optimal solutions efficiently. Too much freedom creates an intractably large search space; too little prevents discovering improvements. The art is finding the right balance for your specific supply chain.

You may find these links helpful, some of which have already been mentioned above:

Please do not hesitate to contact the Optilogic Support team at support@optilogic.com with any questions or feedback.

Non-alphanumeric characters can cause data issues when running scenarios. The only special characters that are officially supported are periods, dashes, parentheses, and underscores.

Please note that while other special characters in input data or scenario names can still function as expected, we cannot guarantee that they will always work. If you encounter any issues or have questions about the data being used, please feel free to contact support at support@optilogic.com.

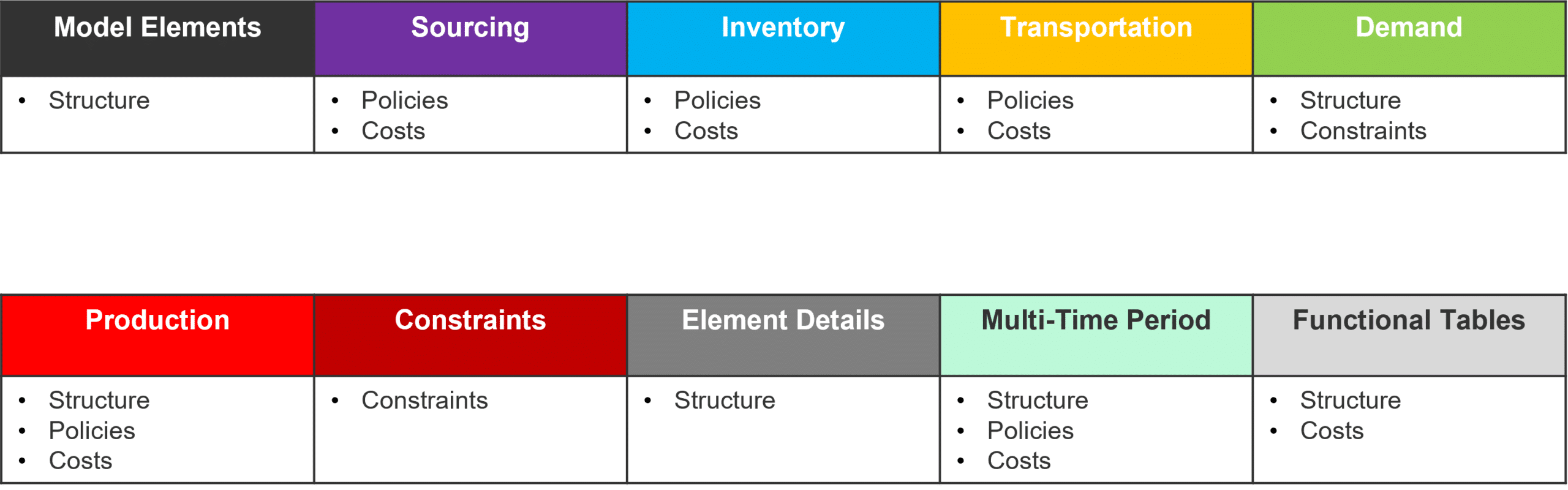

The Anura data schema enables design in the Optilogic platform. It sits above the design algorithms to create a consistent modeling paradigm for our algorithms:

Anura is composed of the modeling categories required to build a model. Each modeling category contains tables to add pertinent detail and/or complexity to your model as well as identify structure, policies, costs, and constraints.

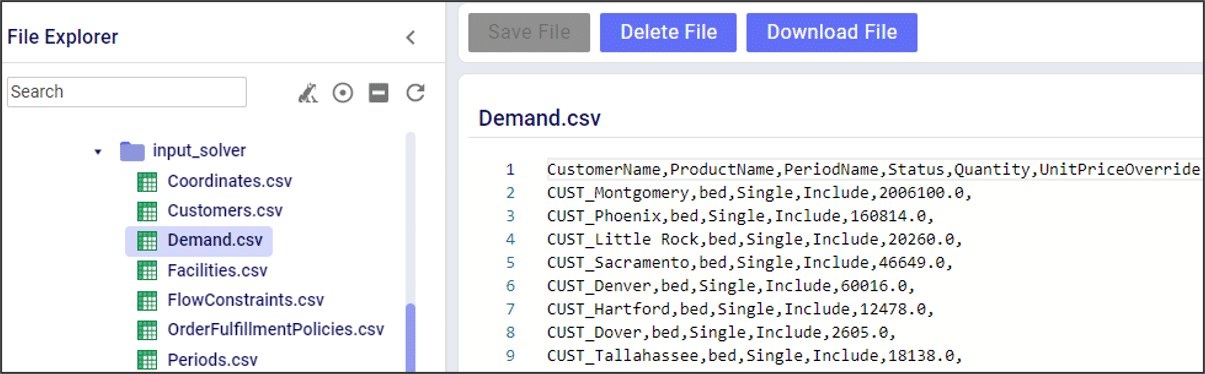

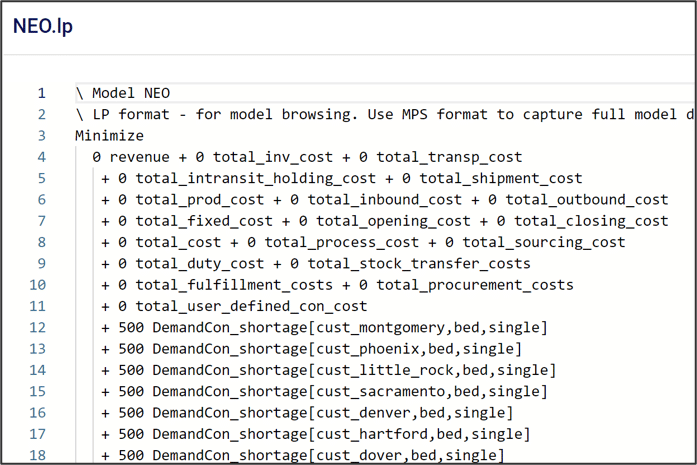

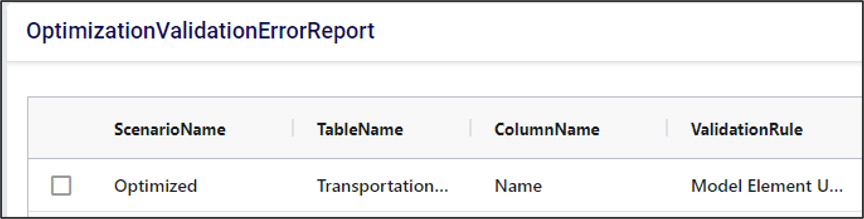

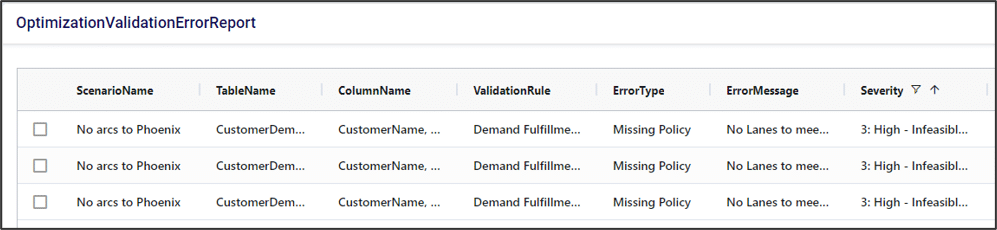

A complete list of the tables and fields within each table can be downloaded locally here.

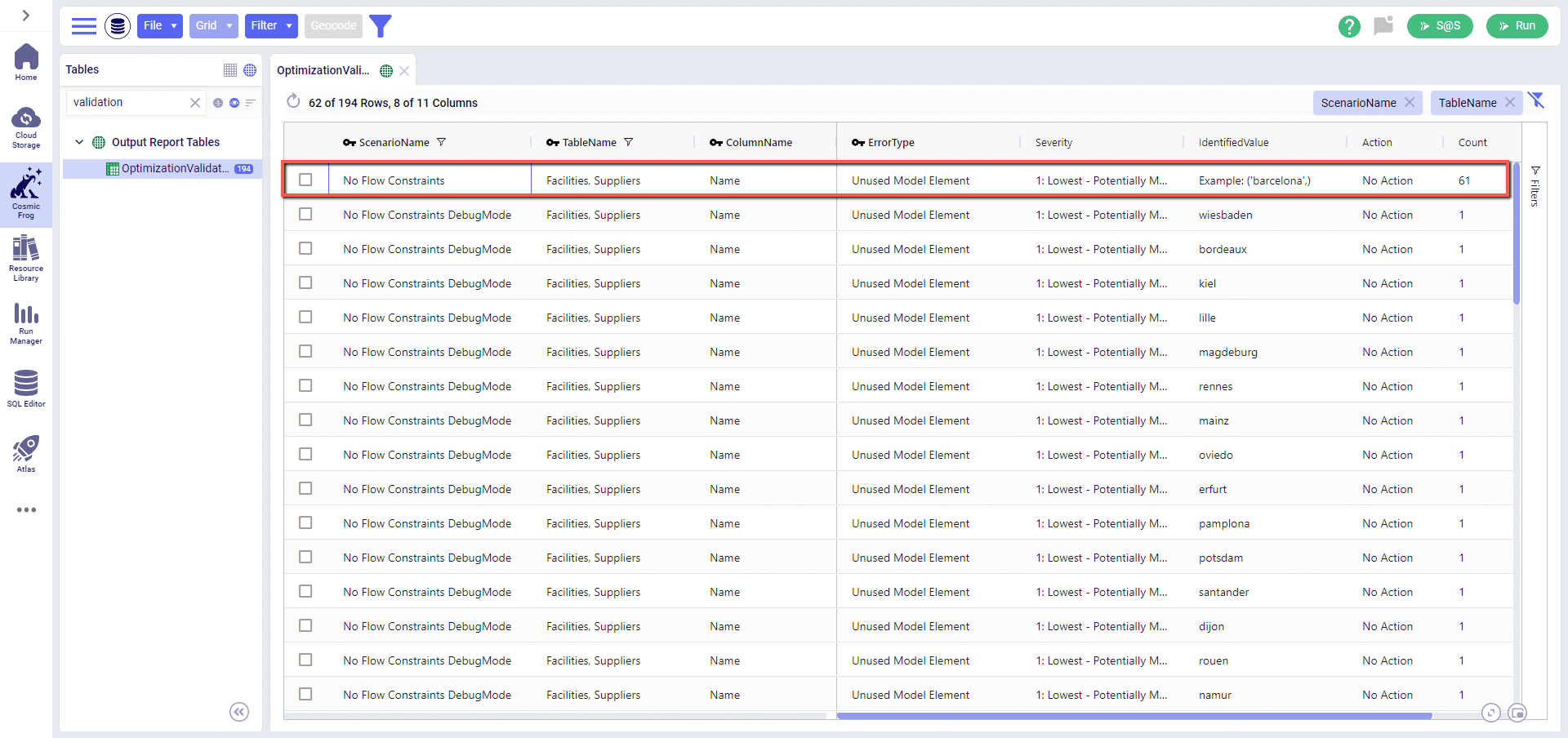

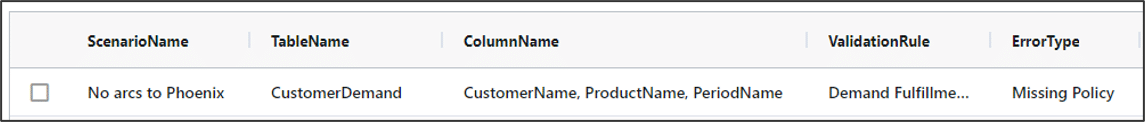

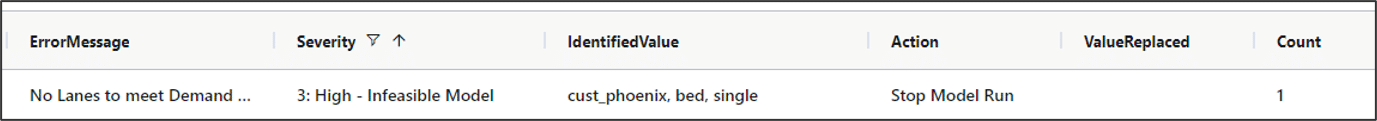

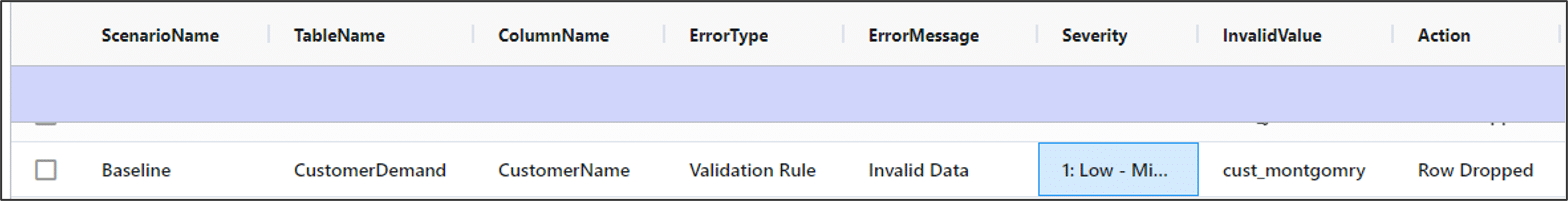

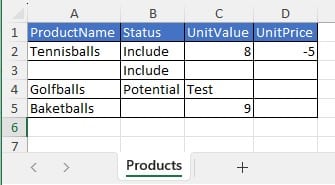

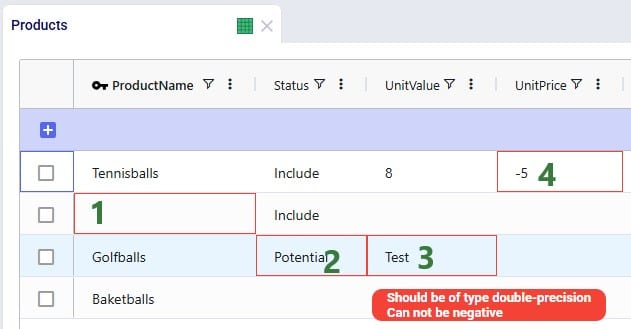

The validation error report table will be populated for all model solves and contains a lot of helpful information for both reviewing and troubleshooting models. A set of results will be saved for each scenario and every record will report the table and column where the issue has been identified, a description of the error, the value that was identified as the problem along with the action taken by the solver. In the instance where multiple records are generated for the same type of error, you will see an example record populated as the identified value and a count of the errors will be displayed – detailed records can be printed using debug mode. Each record will also be rated on the severity of its impact on a scale from 0 – 3 with 3 being the highest.

The validation error report can serve as a great tool to help troubleshoot an infeasible model. The best approach for utilizing this table is to first sort on the Severity column so that the highest level issues are displayed. If severity 3 issues are present, they must be addressed. If no severity 3 issues exist, the next steps would be to review any severity 2 issues to consider policy impact. It is also helpful to search for any instances where rows are being dropped as these dropped rows will likely influence other errors. To do this, filter the Action field for ‘Row Dropped’.
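The triage order described above (highest severity first, then a separate look at dropped rows) can be sketched as follows. The `Severity` and `Action` keys follow the columns named in this article; the other keys, the sample records, and the action values other than 'Row Dropped' are hypothetical.

```python
def triage(records):
    """Sort records by Severity (highest first) and pull out dropped rows."""
    by_severity = sorted(records, key=lambda r: r["Severity"], reverse=True)
    dropped = [r for r in records if r["Action"] == "Row Dropped"]
    return by_severity, dropped


# Hypothetical validation error report records.
records = [
    {"Severity": 1, "Table": "CustomerDemand", "Action": "Row Dropped"},
    {"Severity": 3, "Table": "Facilities", "Action": "Solve Cancelled"},
    {"Severity": 0, "Table": "ProductionPolicies", "Action": "Row Skipped"},
    {"Severity": 2, "Table": "TransportationPolicies", "Action": "None"},
]
by_severity, dropped = triage(records)
print([r["Severity"] for r in by_severity])
print([r["Table"] for r in dropped])
```

Reviewing the sorted list from the top surfaces severity 3 issues first, and the dropped-row list highlights records that may be the root cause of other errors.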

While the report can be helpful in trying to proactively diagnose infeasible models, it won’t always have all of the answers. To learn more about the dedicated engine for infeasibility diagnosis please check out this article: Troubleshooting With The Infeasibility Diagnostic Engine

Severity 3 records capture instances of syntax issues that are preventing the model from being built properly. This can be presented as 2 types:

Severity 3 records can also be instances where model infeasibility can be detected before the model solve begins. When a severity 3 error is detected, the model solve is cancelled immediately. There are 3 common instances of these Severity 3 errors:

Severity 2 records capture sources of potential infeasibility and while not always a definitive reason for a problem being infeasible, they will highlight potential issues with the policy structure of a model. There are 2 common instances of Severity 2 errors:

These severity 2 errors don’t necessarily indicate a problem; they can often be caused by group-based policies whose members overlap from one group to another. It is still a good idea to review these gaps in policies and make sure that all of the lanes which should be considered in your solve are in fact being read into the solver.

Severity 1 records will capture issues in model data that can cause rows from input tables to be dropped when writing the solver files for a model. These can be issues with allowed numerical ranges, for example a negative quantity for customer demand. Detailed policy linkages that do not align can also cause errors, for example a process which uses a specific workcenter that doesn’t exist at the assigned facility. There are other causes of severity 1 errors, and it is important to review these errors for any changes that are made to your input data. Be sure to check the Action column to see if a default value was applied and note the new value that was used, or if a row was dropped.

Severity 1 records will also note other issues that have been identified in the model input data. These can be unused model elements such as a customer which is included but has no demand, or transportation modes that exist in the model but aren’t assigned to any lanes. There can also be records generated for policies that have no cost charged against them to let you know that a cost is missing. Duplicate data records will also be flagged as severity 1 errors – the last row read in by the solver will be persisted. If you have duplicated data with different detailed information these duplicates should be addressed in your input tables so that you can make sure the correct information is read in by the solver.

A point to look out for is any severity 1 issue that is related to an invalid UOM entry. These will cause records to be dropped, and while the dropped records don’t always directly cause model infeasibilities themselves, they can often be at the root of other issues identified as higher severity or through infeasibility diagnosis runs.

Severity 0 records are for informational purposes only and are generated when records have been excluded upstream, either in the input tables directly or by other corrective actions that the solver has taken. These records are letting you know that the solver read in the input records but they did not correspond to anything that was in the current solve. An example of this would be if you only included a subset of your facilities for a scenario, but still had included records for an out-of-scope MFG location. If the MFG is excluded in the facilities table but its production policies are still included, a record will be written to the table to let you know that these production policy rows were skipped.

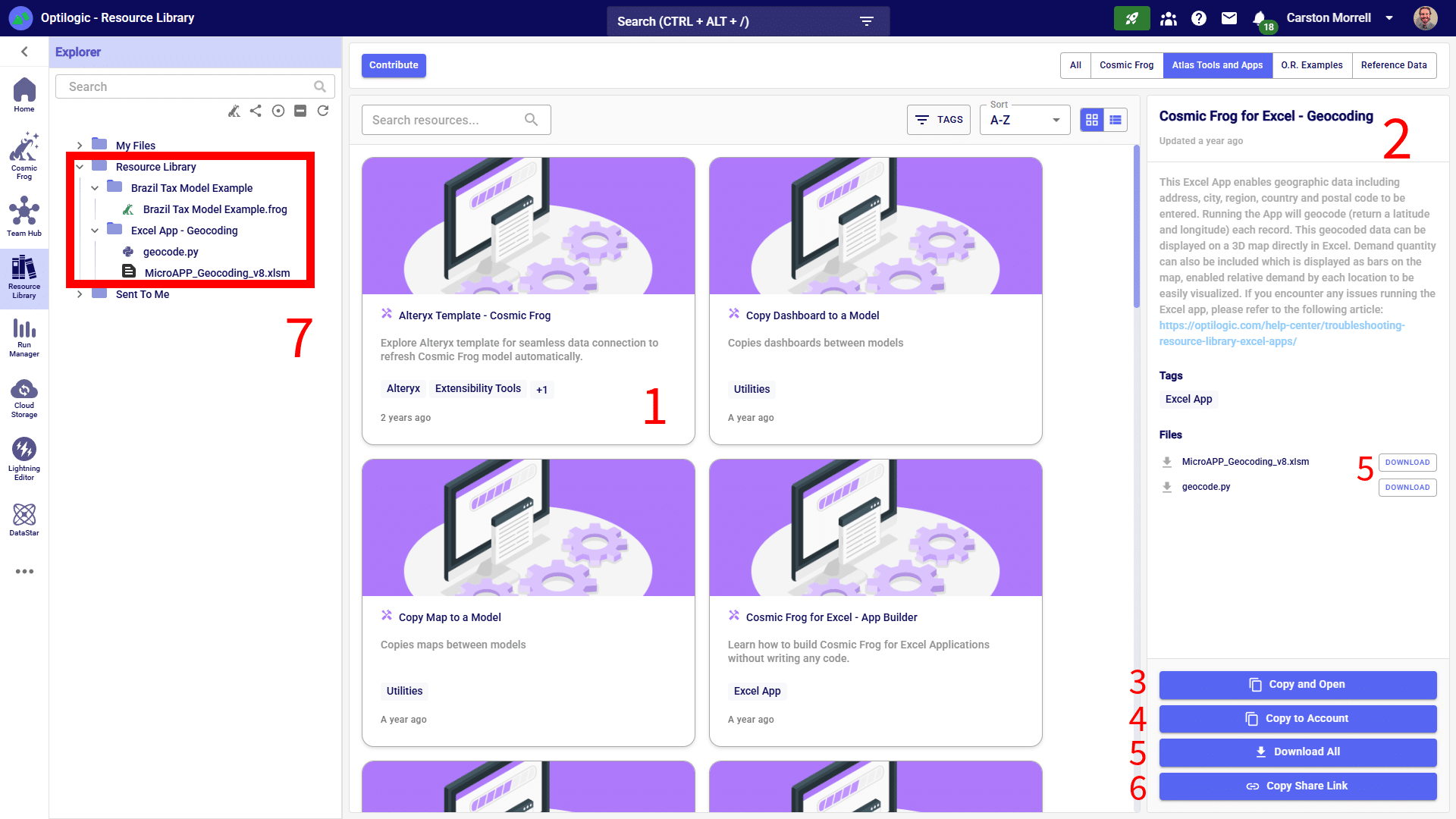

Utilities enable powerful modelling capabilities for use cases like integration to other services or data sources, repeatable data transformation, or anything that can be supported by Python! System Utilities are available as a core capability in Cosmic Frog for use cases like LTL rate lookups, TransitMatrix time & distance generation, and copying items like Maps and Dashboards from one model to another. More useful System Utilities will become available in Cosmic Frog over time. Some of these System Utilities are also available in the Resource Library, where they can be downloaded, customized, and made available to modelers for specific projects or models. In this Help Article we will cover both how to use System Utilities and how to customize and deploy Custom Utilities.

The “Using and Customizing Utilities” resource in the Resource Library includes a helpful 15-minute video on Cosmic Frog Model Utilities and users are encouraged to watch this.

In this Help Article, System Utilities will be covered first, before discussing the specifics of creating one’s own Utilities. Finally, how to use and share Custom Utilities will be explained as well.

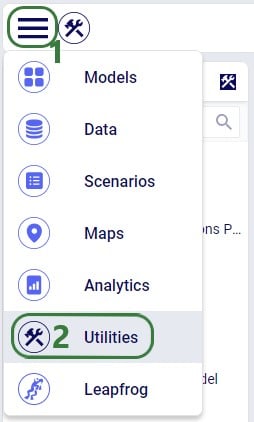

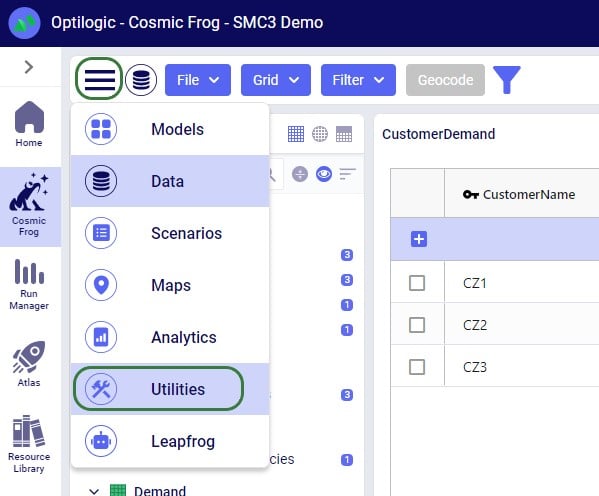

Users can access utilities within Cosmic Frog by going to the Utilities section via the Module Menu drop-down:

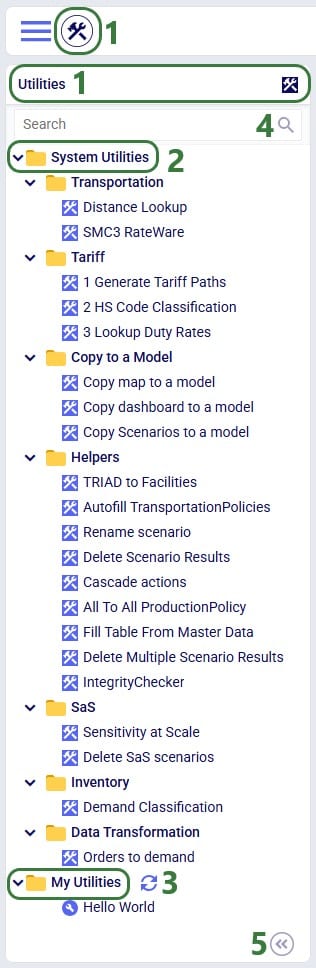

Once in the Utilities section, users will see the list of available utilities:

The appendix of this Help Article contains a table of all System Utilities and their descriptions.

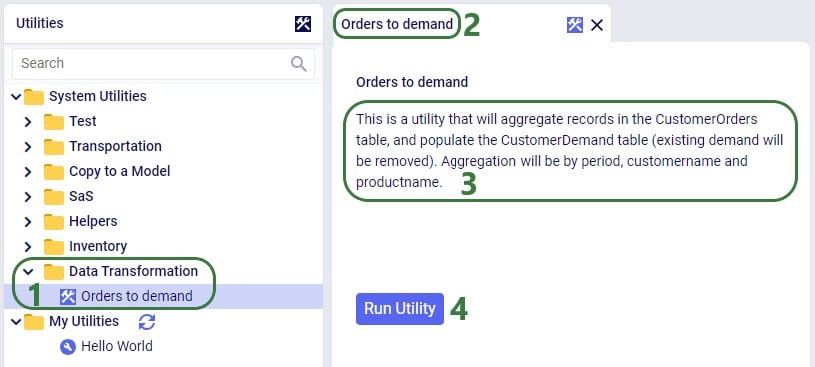

Utilities vary in complexity by how many input parameters a user can configure, ranging from those where no parameters need to be set at all to those where many can be set. The following screenshot shows the Orders to Demand utility, which does not require any input parameters to be set by the user:

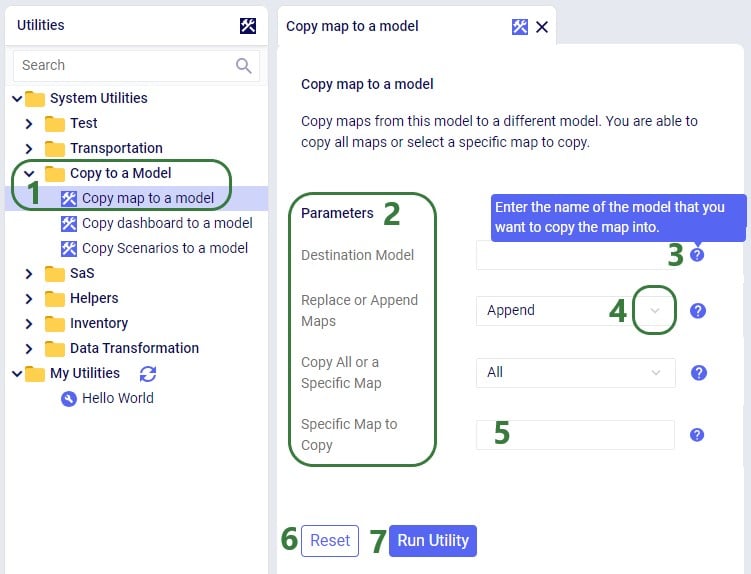

The Copy map to a model utility shown in the next screenshot does require several parameters to be set by the user:

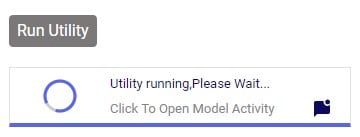

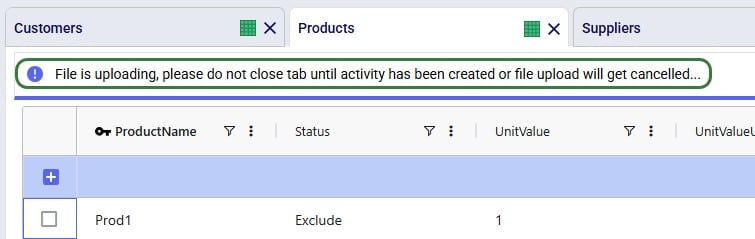

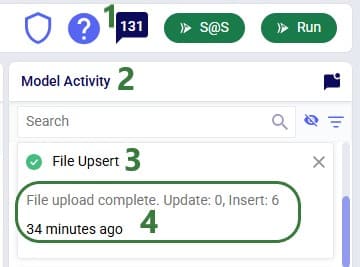

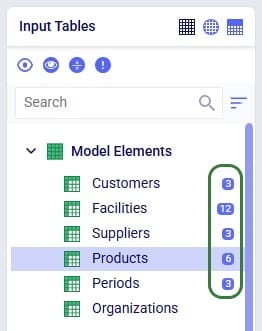

When the Run Utility button has been clicked, a message appears beneath it briefly:

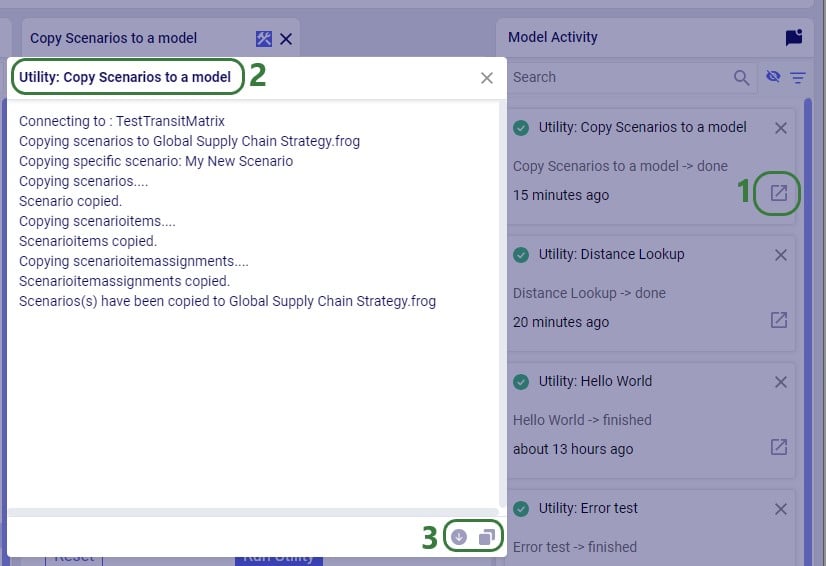

Clicking on this message will open the Model Activity pane to the right of the tab(s) with open utilities:

The Model Activity list does not only show activities related to running utilities. Other actions executed within Cosmic Frog are listed here too, for example when a user has geocoded locations by using the Geocode tool on the Customers / Facilities / Suppliers tables, or when a user makes a change in a master table and chooses to cascade these changes to other tables.

Please note that the following System Utilities have separate Help Articles where they are explained in more detail:

The utilities that are available in the Resource Library can be downloaded by users and then customized to fit the user’s specific needs. Examples are to change the logic of a data transformation, apply similar logic but to a different table, etc. Or users may even build their own utilities entirely. If a user updates a utility or creates a new one, they can share these back with other users so they can benefit from them as well.

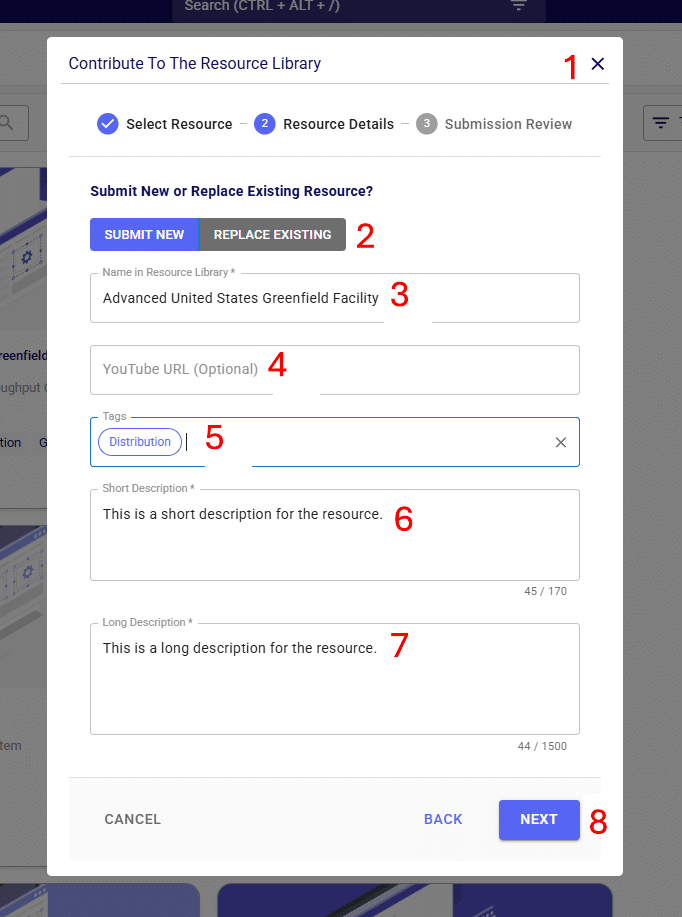

Utilities are Python scripts with a specific structure, which is explained in this section. They can be edited directly in the Atlas application on the Optilogic platform, or users can download the Python file that is being used as a starting point and edit it using an IDE (Integrated Development Environment) installed on their computer. A feature-rich text editor geared towards coding, for example Visual Studio Code, will work fine too for most users. An advantage of working locally is that users can take advantage of code completion features (auto-completion while typing, showing what arguments functions need, catching incorrect syntax/names, etc.) by installing an extension package, for example IntelliSense (for Visual Studio Code). The screenshots of the Python files underlying the utilities that follow in this documentation were taken while working with them locally in Visual Studio Code on a machine that has the IntelliSense extension package installed.

A great resource on how to write Python scripts for Cosmic Frog models is this “Scripting with Cosmic Frog” video. In this video, the cosmicfrog Python library, which adds specific functionality to the existing Python features to work with Cosmic Frog models, is covered in some detail.

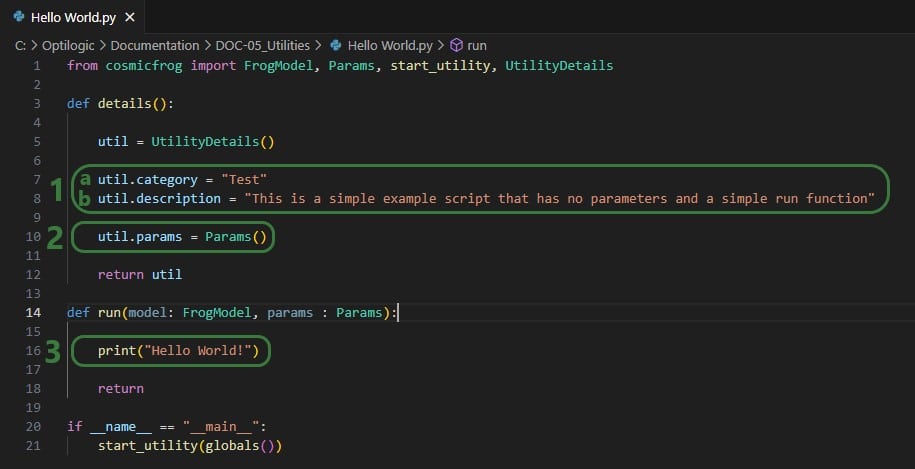

We will start by looking at the Python file of the very simple Hello World utility. In this first screenshot, the parts that can stay the same for all utilities are outlined in green:

Next, onto the parts of the utility’s Python script that users will want to update when customizing / creating their own scripts:

Now, we will discuss how input parameters, which users can then set in Cosmic Frog, can be added to the details function. After that we will cover different actions that can be added to the run function.

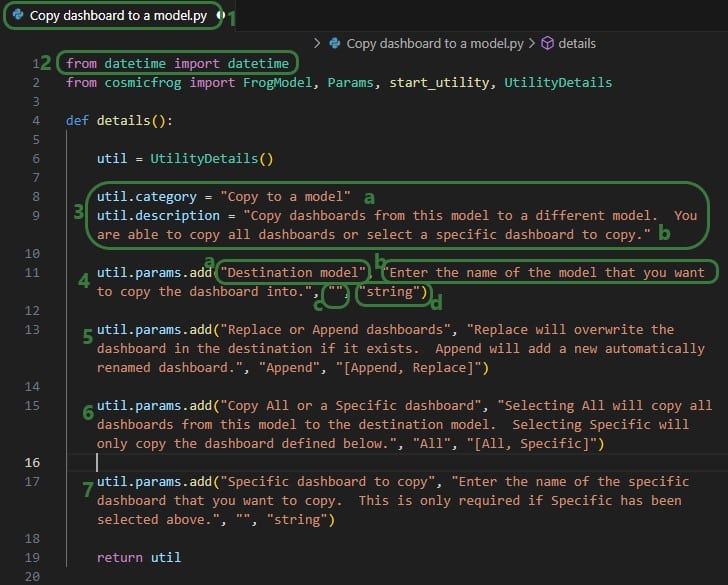

If a utility needs to be able to take any inputs from a user before running it, these are created by adding parameters in the details function of the utility’s Python script:

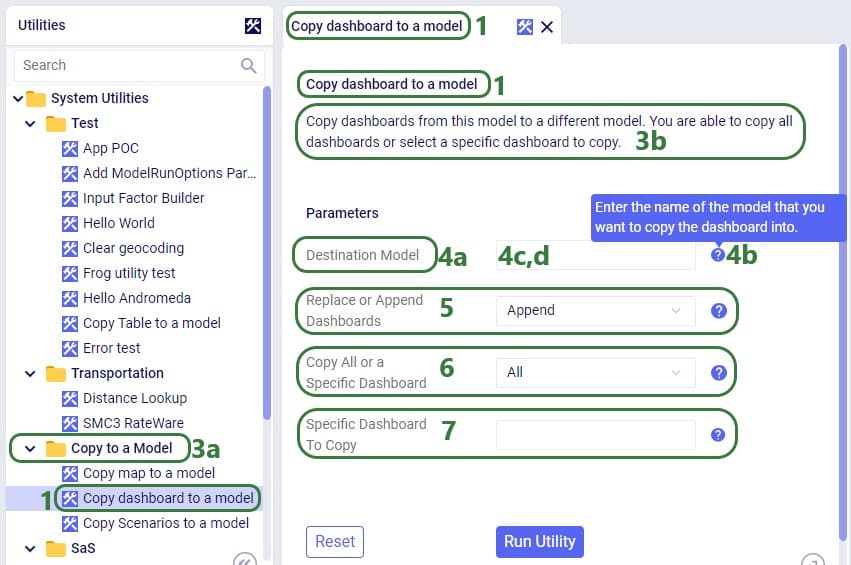

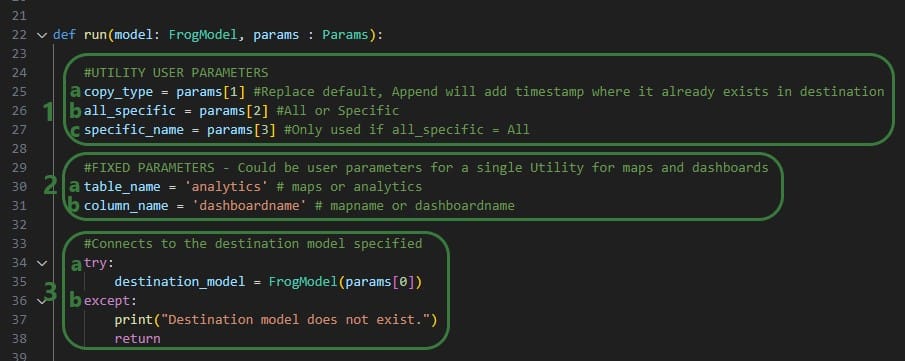

We will take a closer look at a utility that uses parameters and map the arguments of the parameters back to what the user sees when the utility is open in Cosmic Frog, see the next 2 screenshots: the numbers in the script screenshot are matched to those in the Cosmic Frog screenshot to indicate what code leads to what part of the utility when looking at it in Cosmic Frog. These screenshots use the Copy dashboard to a model utility of which the Python script (Copy dashboard to a model.py) was downloaded from the Resource Library.

Note that Python lists are 0-indexed, meaning that the first parameter (Destination Model in this example) is referenced by typing params[0], the second parameter (Replace or Append dashboards) by typing params[1], etc. We will also see this in the code when adding actions to the run function below.
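As a minimal sketch of this 0-based indexing, the snippet below reads two parameters from a plain Python list. It does not use the cosmicfrog library: the function name run_sketch and the example values are hypothetical stand-ins, and the real utility receives its parameters from Cosmic Frog when the user runs it.

```python
def run_sketch(params):
    """Hypothetical stand-in for the part of a run function that reads parameters."""
    destination_model = params[0]  # first parameter: Destination Model
    replace_or_append = params[1]  # second parameter: Replace or Append dashboards
    return f"{replace_or_append} dashboards in model '{destination_model}'"


# Hypothetical values a user might have entered in the utility's form.
example_params = ["My Destination Model", "Replace"]
message = run_sketch(example_params)
```

Assigning each params entry to a descriptively named variable at the top of the function keeps the rest of the code readable and makes it easier to spot an index that is off by one.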

Now let’s have a look at how the code in the script screenshot translates to what a user sees in the Cosmic Frog user interface for the Copy dashboard to a model System Utility (note that the numbers in this screenshot match those in the script screenshot):

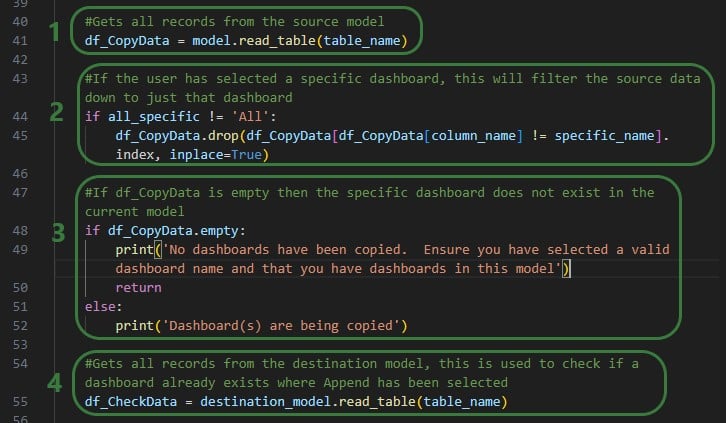

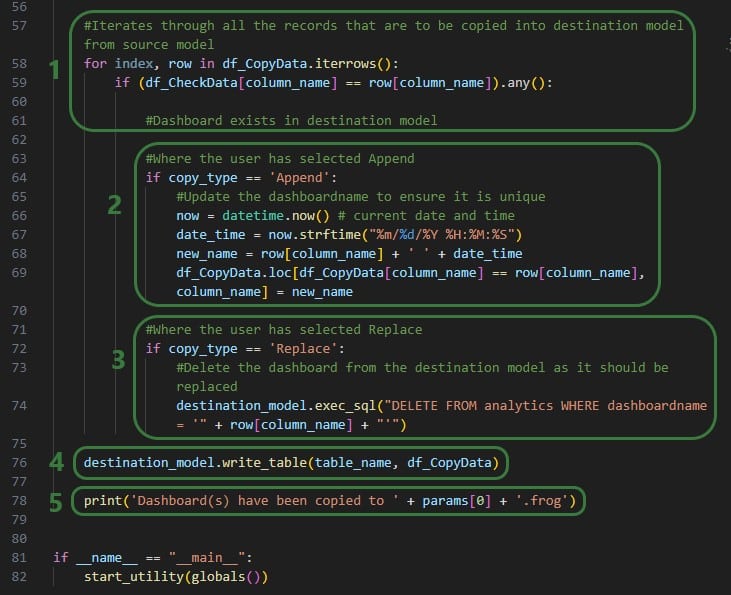

The actions a utility needs to perform are added to the run function of the Python script. These will be different for different types of utilities. We will cover the actions the Copy dashboard to a model utility uses at a high level and refer users to the Python documentation if they are interested in understanding all the details. There are a lot of helpful resources and communities online where users can learn about using and writing Python code. A great place to start is the Python for Beginners page on python.org. This page also mentions how more experienced coders can get started with Python. Also note that text in green font that follows a hash sign is a comment that adds context to the code.

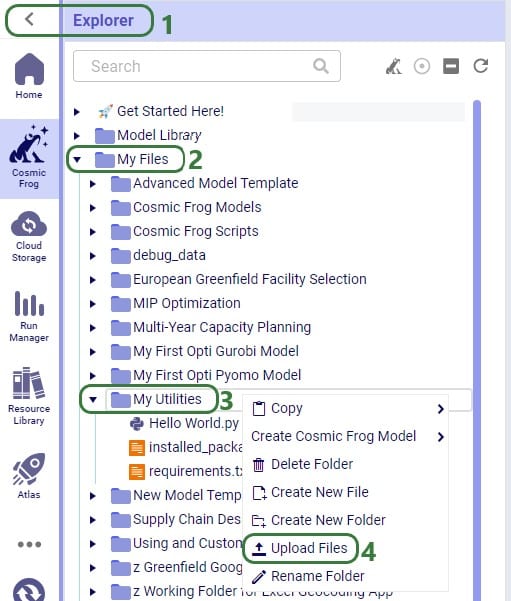

For a custom utility to show in the My Utilities category of the utilities list in Cosmic Frog, it needs to be saved under My Files > My Utilities in the user’s Optilogic account:

Note that if a Python utility file is already in the user’s Optilogic account, but in a different folder, the user can click on it and drag it to the My Utilities folder.

For utilities to work, a requirements.txt file which only contains the text cosmicfrog needs to be placed in the same My Files > My Utilities folder (if not there already):

A customized version of the Copy dashboard to a model utility was uploaded here, and a requirements.txt file is present in the same folder too.
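If you prepare utility files in a local folder before uploading them, a quick check like the sketch below can confirm that the folder holds a requirements.txt file containing only the text cosmicfrog. The function name and the temporary demo folder are hypothetical, and the check runs on a local copy only; it does not connect to your Optilogic account.

```python
from pathlib import Path
import tempfile


def requirements_ok(folder):
    """Return True if folder has a requirements.txt whose only entry is 'cosmicfrog'."""
    req_file = Path(folder) / "requirements.txt"
    if not req_file.is_file():
        return False
    # Ignore blank lines and surrounding whitespace.
    entries = [line.strip() for line in req_file.read_text().splitlines() if line.strip()]
    return entries == ["cosmicfrog"]


# Demonstration on a temporary folder standing in for a local copy of My Utilities.
with tempfile.TemporaryDirectory() as tmp:
    missing_before = requirements_ok(tmp)
    (Path(tmp) / "requirements.txt").write_text("cosmicfrog\n")
    present_after = requirements_ok(tmp)
```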

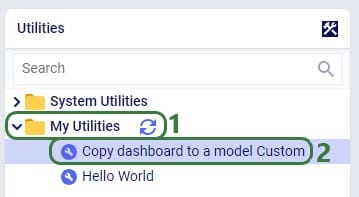

Once a Python utility file is uploaded to My Files > My Utilities, it can be accessed from within Cosmic Frog:

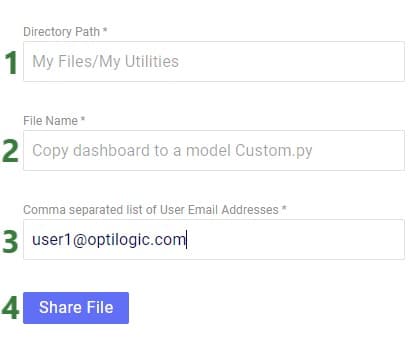

If users want to share custom utilities with other users, they can do so by right-clicking on it and choosing the “Send Copy of File” option:

The following form then opens:

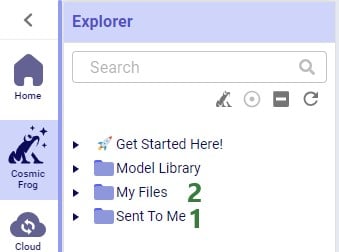

When a custom utility has been shared with you by another user, it will be saved under the Sent To Me folder in your Optilogic account:

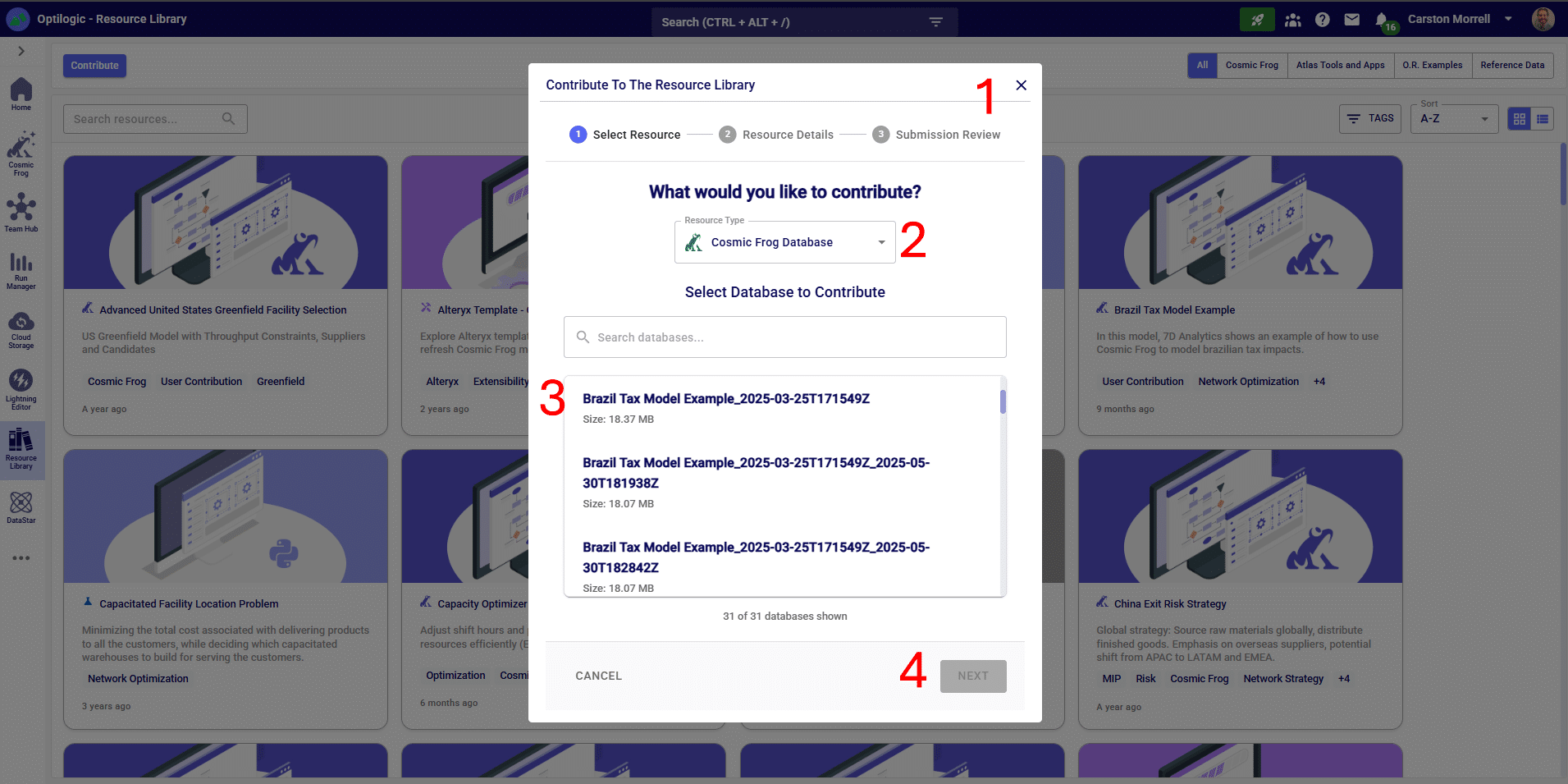

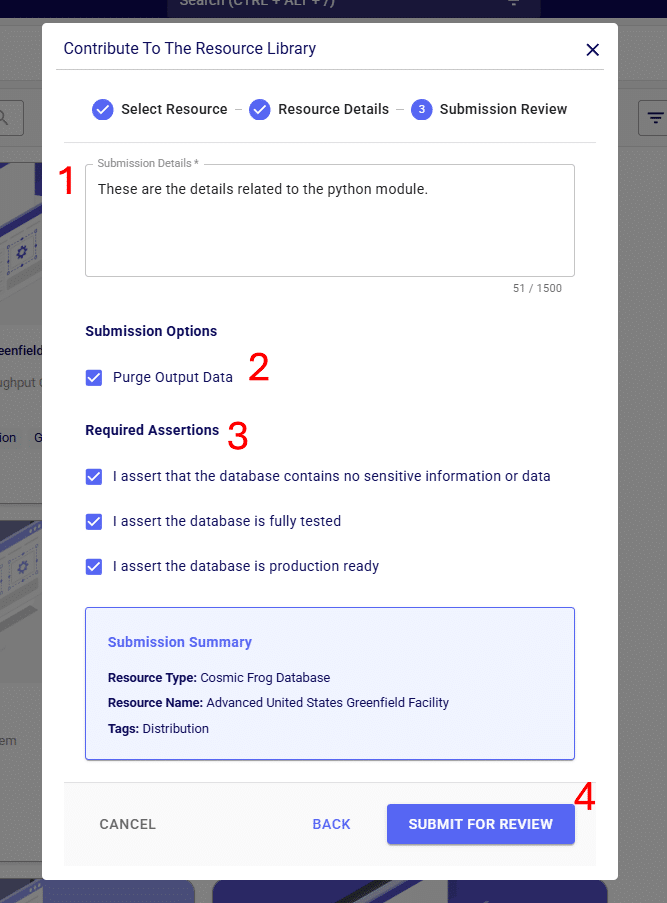

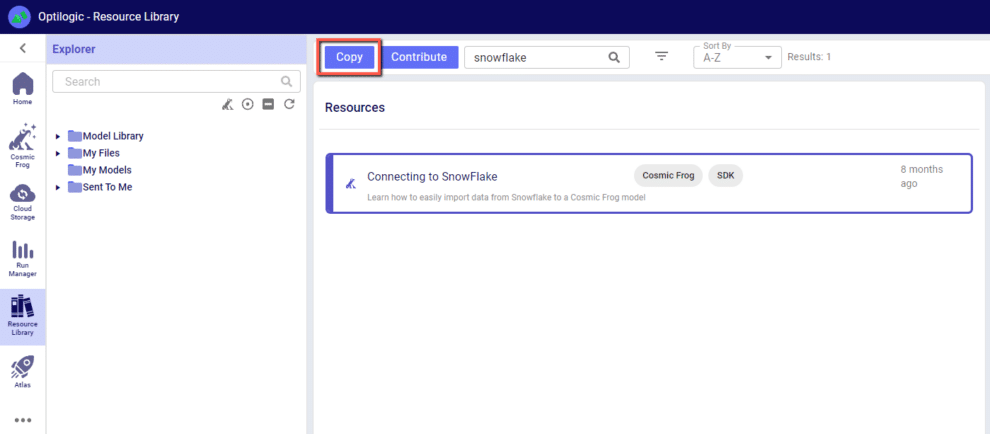

If you have created a custom utility that you feel a lot of other users can benefit from, and you are allowed to share it outside of your organization, then we encourage you to submit it to Optilogic’s Resource Library. Click on the Contribute button at the top left of the Resource Library and then follow the steps as outlined in the “How can I add Python Modules to the Resource Library?” section towards the end of the “How to use the Resource Library” help article.

Utility names and descriptions by category:

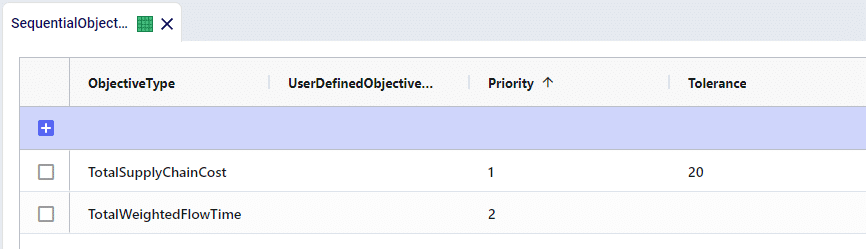

Sequential Objectives allow you to set multiple tiers of objectives for the optimization solve to consider, where each tier of objectives can be relaxed by a defined percentage when solving for the next tier.

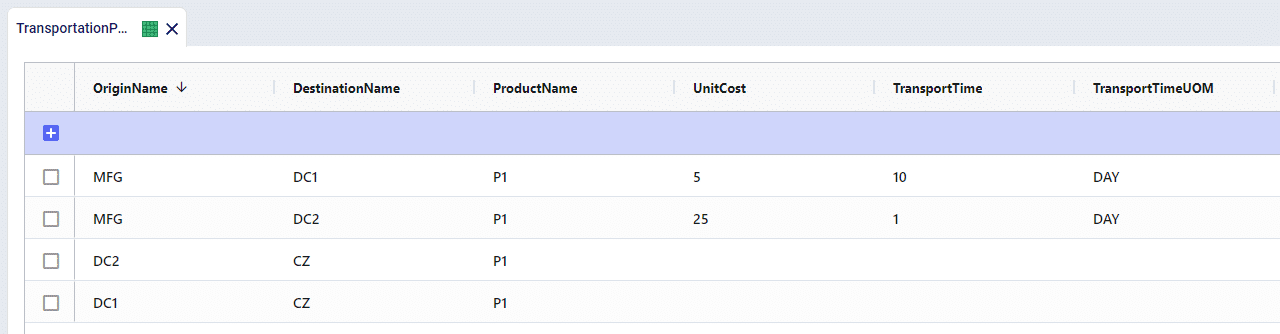

Here is a basic example of how Sequential Objectives can be used.

100 units of demand for P1 at CZ.

Available pathways for flow are as follows:

Find a solution that will first minimize total costs, but then will work to minimize the total amount of travel time in the solution while only relaxing the Total Cost solution by 20%.

When just solving with the standard objective of Profit Maximization, the cheapest path will be utilized. All flows will come from MFG > DC1 > CZ and the total cost will be $500.

We’ve built the Sequential Objectives so that we will first optimize over the Total Supply Chain Cost as Priority 1. We have also set the Tolerance to be 20 which will allow for a 20% relaxation in the solution to solve for the secondary objective – Total Weighted Flow Time.

We’ll now see that the more expensive pathway of MFG > DC2 > CZ is used as it requires less travel time. Because the initial cost was $500, we will send as many units as possible through DC2 up until the total cost reaches $600, a 20% deviation from the initial cost. This results in 5 units flowing via DC2, while 95 units remain on the DC1 pathway, for a total cost of $600.
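The arithmetic behind this result can be sketched in a few lines of Python. This is a simplified two-pathway calculation, not the solver's actual method. The per-unit costs are back-calculated from the totals stated in this example ($500 / 100 units = $5 per unit via DC1, and $25 per unit via DC2 so that 95 x $5 + 5 x $25 = $600); they are assumptions rather than values read from the model, and the function name is hypothetical.

```python
def max_units_on_faster_path(demand, cheap_unit_cost, fast_unit_cost, tolerance_pct):
    """Units that can move to the faster, costlier path within the cost tolerance."""
    baseline_cost = demand * cheap_unit_cost          # Priority 1 optimum
    allowed_extra = baseline_cost * tolerance_pct / 100
    extra_per_unit = fast_unit_cost - cheap_unit_cost  # cost of shifting one unit
    units_fast = min(demand, allowed_extra / extra_per_unit)
    total_cost = units_fast * fast_unit_cost + (demand - units_fast) * cheap_unit_cost
    return units_fast, total_cost


# Assumed inputs: 100 units of demand, $5/unit via DC1, $25/unit via DC2, 20% tolerance.
units_via_dc2, total_cost = max_units_on_faster_path(100, 5, 25, 20)
units_via_dc1 = 100 - units_via_dc2
```

With these assumed costs, every unit moved to DC2 adds $20 to the total, so the $100 of allowed relaxation covers 5 units.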

This example model can be found in the Resource Library listed under the name of Sequential Objectives Demo.

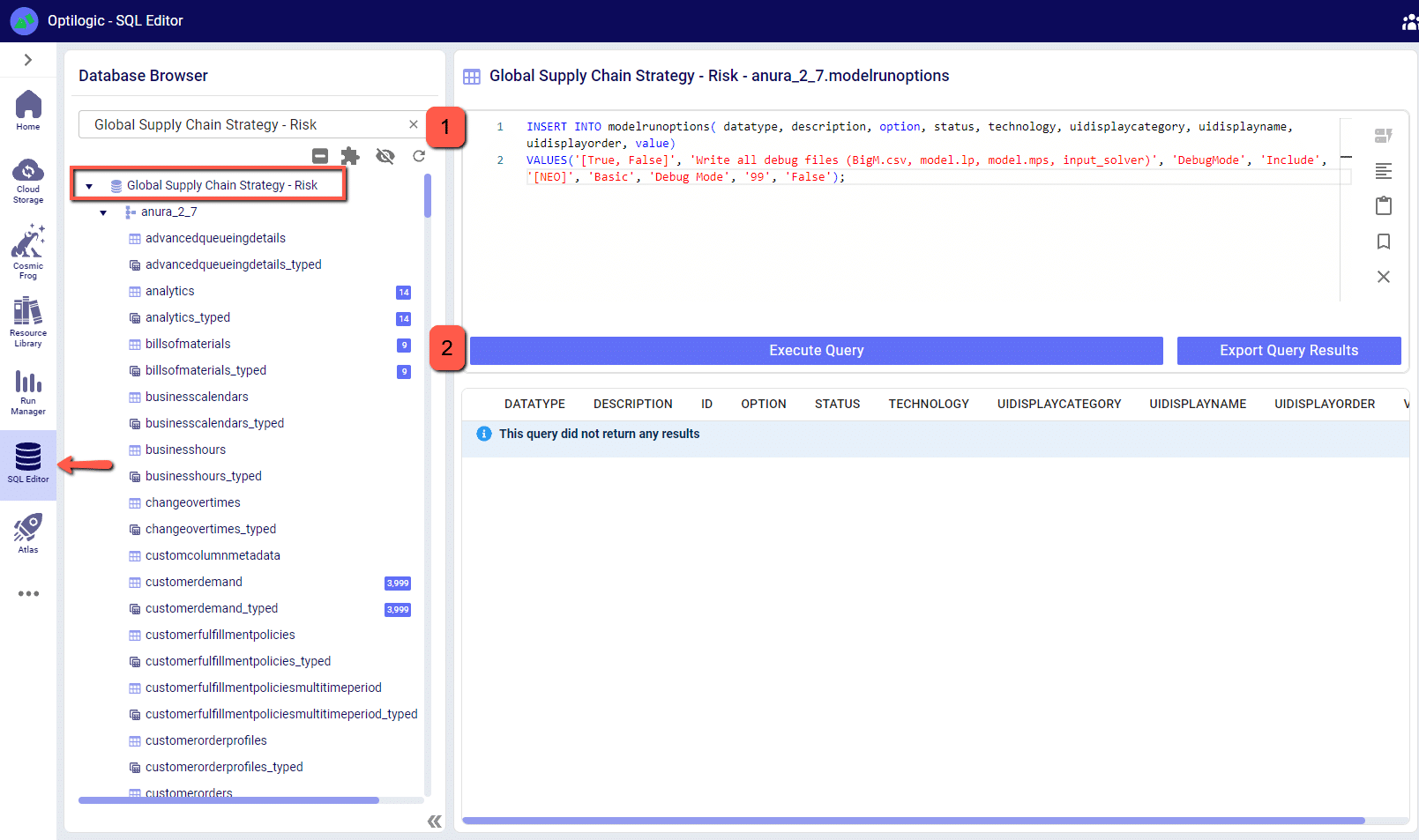

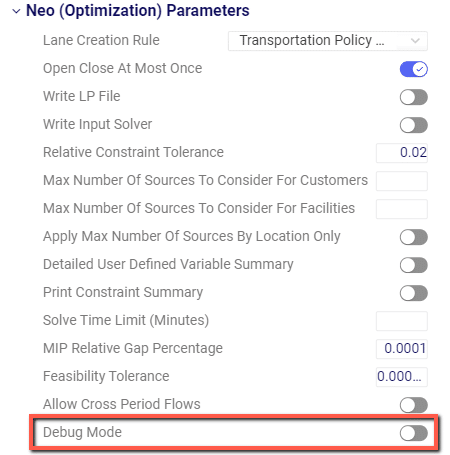

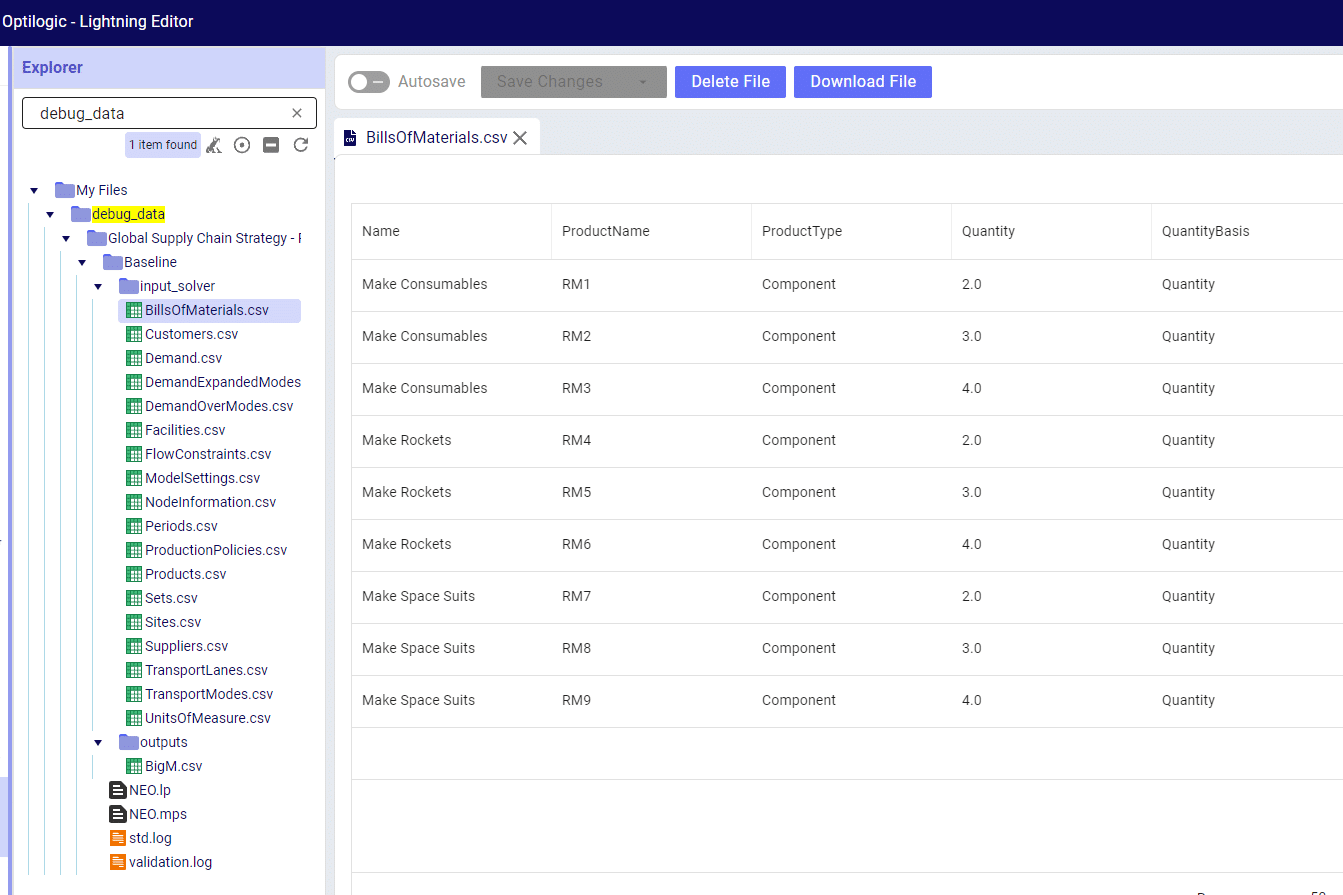

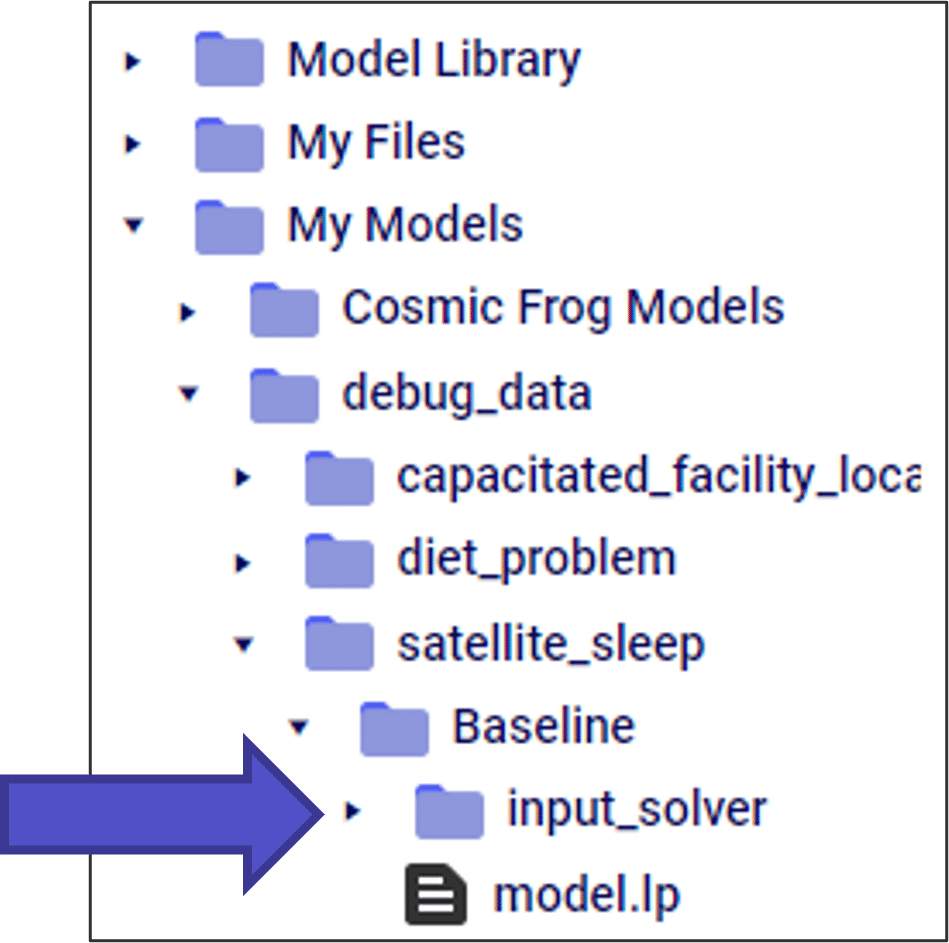

Running a model in debug mode can be a helpful troubleshooting tool as it will print more detailed reports of model issues.

The run option for Debug Mode is not included as a default in models but it can be added via the SQL Editor. Please copy and paste the following SQL query and run it against the model database you wish to add the option to.

Copy SQL Query Here: Add Debug Mode Model Run Option SQL Statement

Now, if you open the model again in Cosmic Frog you will see that Debug Mode is an available option in the run screen.

When set to True, Debug Mode will show all instances of validation errors instead of displaying an example of an error and the count of occurrences. You can see the difference in the following screenshot, where 1 row with 61 instances of the same error is turned into 61 individual rows.

Debug Mode will also print and save the input files that are passed to the solver – these will be saved in your File Explorer at My Files > debug_data > ModelName > ScenarioName. For each scenario run with debug mode enabled you will have the following:

Please note that this data is saved to your File Explorer and for larger models can take up quite a bit of disk space. If you reach 100% disk space utilization you will be unable to run any new jobs as they won’t have any space to write their required data to. The debug_data folder is almost always the cause of this disk space utilization issue; clearing its contents will allow jobs to start again.

If you have any questions or concerns about how this might impact your models, please don’t hesitate to reach out to support@optilogic.com.

The nature of LTL freight rating is complex and multi-faceted. The RateWare® XL LTL rating engine of SMC3 enables customers to manage parcel pricing and LTL rating complexity, for both class and density rates, with comprehensive rating solutions.

Cosmic Frog users that hold a license to the RateWare XL LTL Rating Engine of SMC3 can easily use it within Cosmic Frog to look up LTL rates and use them in their models. In this documentation we will explain where to find the SMC3 RateWare utility within Cosmic Frog and how to use it. First, we will cover the basic inputs needed in Cosmic Frog models, then how to configure and run the SMC3 RateWare Utility to look up LTL rates, and finally how to add those rates for usage in the model.

Before running the SMC3 RateWare Utility to retrieve LTL rates, we need to set up our model, including the origin-destination pairs for which we want to look up the LTL rates. Here, we will cover the inputs of a basic demo model showing how to use the SMC3 RateWare Utility. Of course, models using this utility may be much more complex in setup as compared to what is shown here.

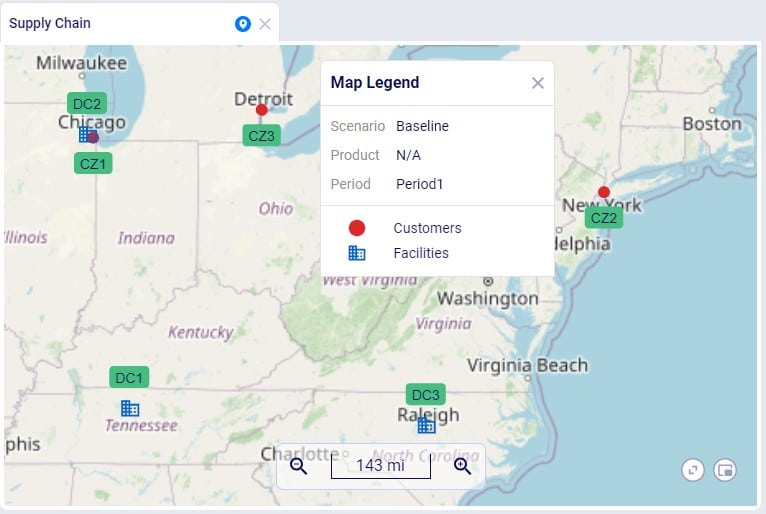

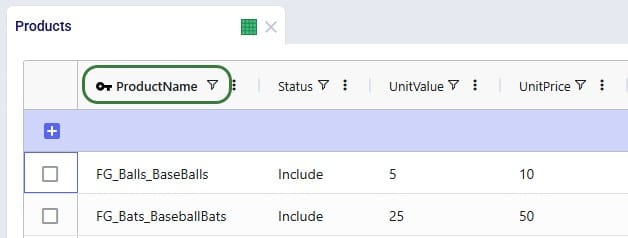

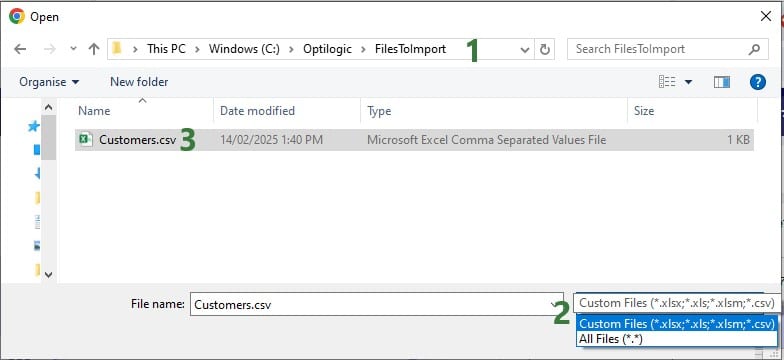

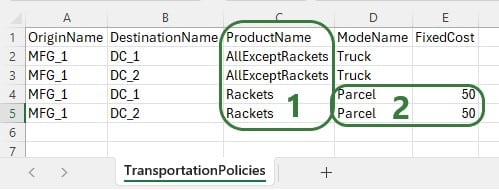

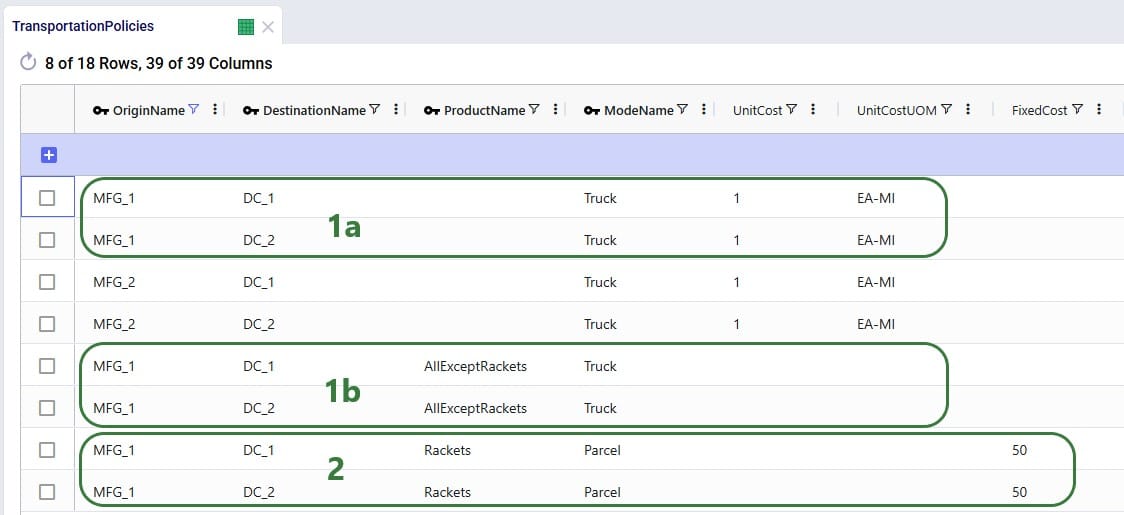

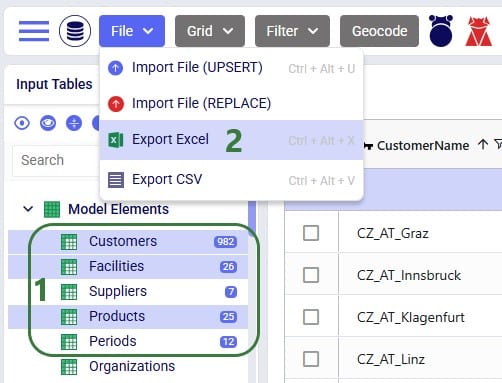

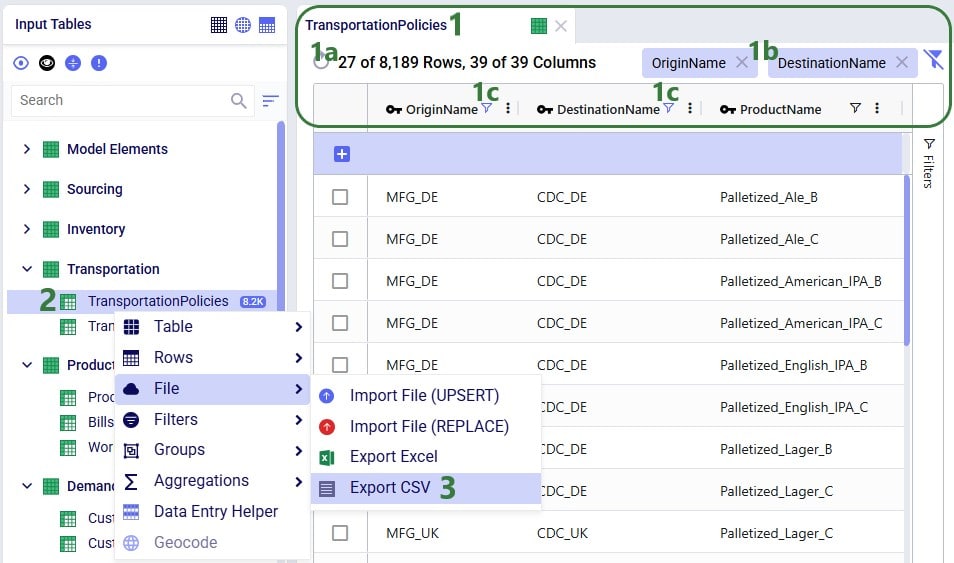

The next 3 screenshots show:

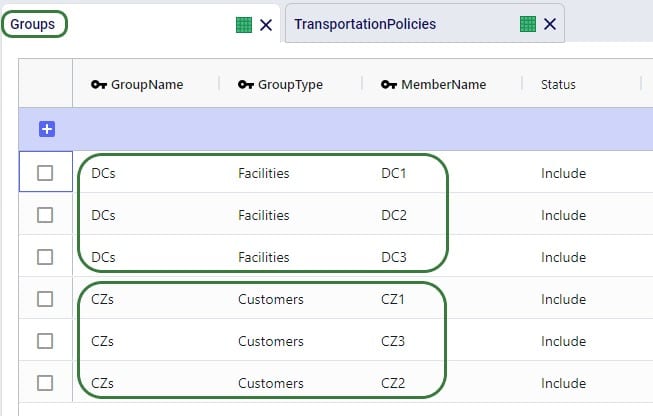

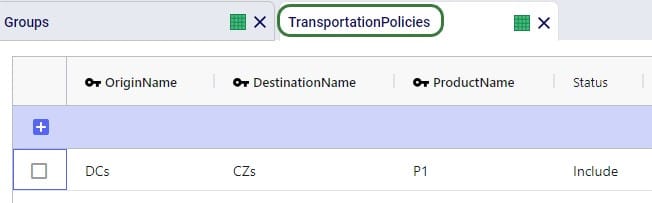

To facilitate model building, this model uses groups for Customers and DCs, as shown in the next screenshot. All DCs are members of the DCs group and all CZs are members of the Customers group:

Using these groups, the Transportation Policies table is easily set up with 1 record from the DCs group to the CZs group as shown in the next screenshot. At runtime this 1 record is expanded into all possible OriginName-DestinationName combinations of the group members. So, this is an all DCs to all Customers policy that covers 9 possible origin-destination combinations.
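The expansion of one group-to-group record into individual lanes can be pictured as a Cartesian product of the group members. The sketch below is an illustration only, not how Cosmic Frog performs the expansion internally. The member names are hypothetical, and a split of 3 DCs and 3 customers is assumed because it yields the 9 combinations mentioned.

```python
from itertools import product


def expand_group_policy(origin_members, destination_members):
    """Expand one group-to-group record into every origin-destination pair."""
    return list(product(origin_members, destination_members))


# Hypothetical group members (assumed 3 x 3 split).
dcs = ["DC_1", "DC_2", "DC_3"]
customers = ["CZ_1", "CZ_2", "CZ_3"]
lanes = expand_group_policy(dcs, customers)
lane_count = len(lanes)
```

Each resulting tuple corresponds to one OriginName-DestinationName combination covered by the single group-level policy record.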

Besides these tables, this simple model also has several other tables that are populated:

The SMC3 RateWare Utility is available by default from the Utilities module in Cosmic Frog, see next screenshot. Click on the Module Menu icon with 3 horizontal bars at the top left in Cosmic Frog, then click on Utilities in the menu that pops up:

You will now see a list of Utilities, similar to the one shown in this next screenshot (your Utilities are likely all expanded, whereas most are collapsed in this screenshot):

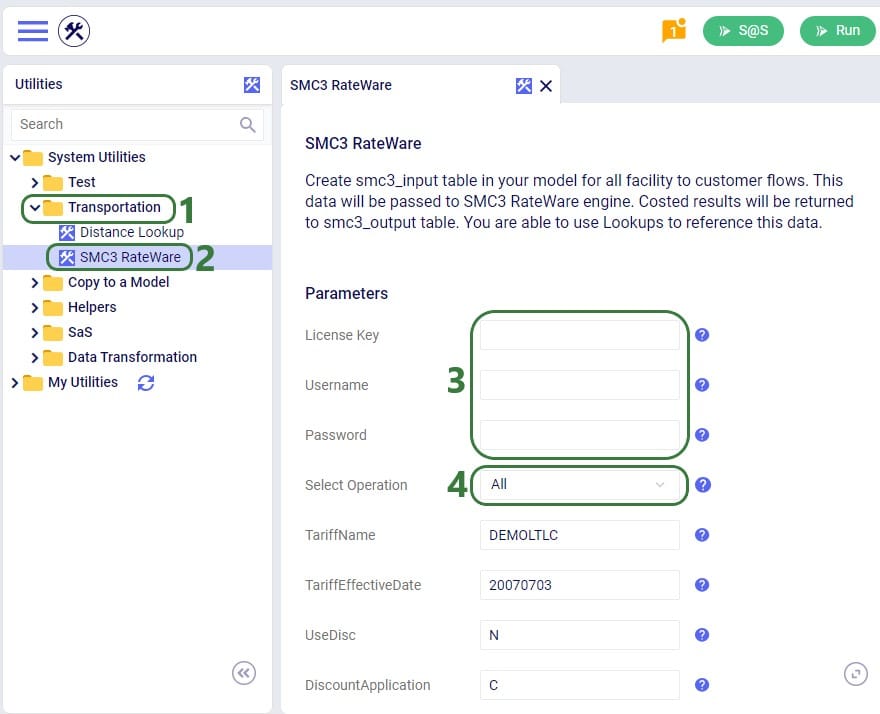

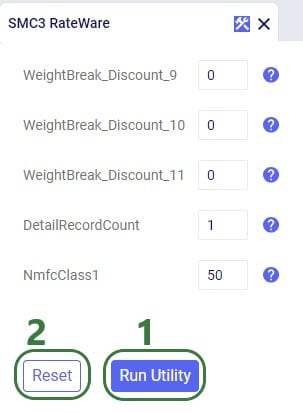

The rest of the inputs that can be configured are specific to the RateWare XL LTL Rating Engine, and we refer users to SMC3’s documentation for the configuration of these settings.

When the utility is finished running, we can have a look at the smc3_inputs and smc3_outputs tables (if the option of All was used for Select Operation). First, here is a screenshot of the smc3_inputs table:

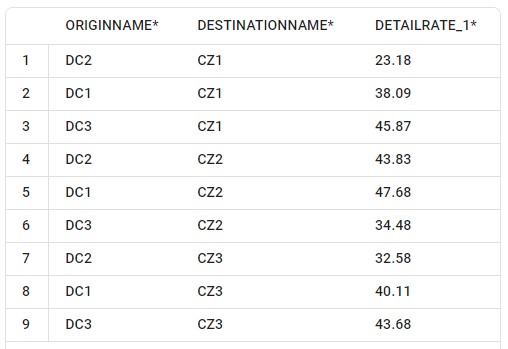

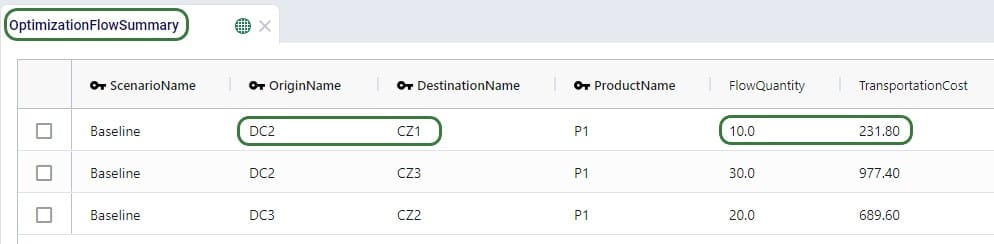

The next screenshot shows the smc3_outputs table in Optilogic’s SQL Editor. This is also a table with many columns as it contains origin and destination information, repeats the inputs of the utility, and contains details of the retrieved rates. Here, we are only showing the 3 most relevant columns: originname (the source DC), destinationname (the customer), and detailrate_1 which is the retrieved LTL rate:

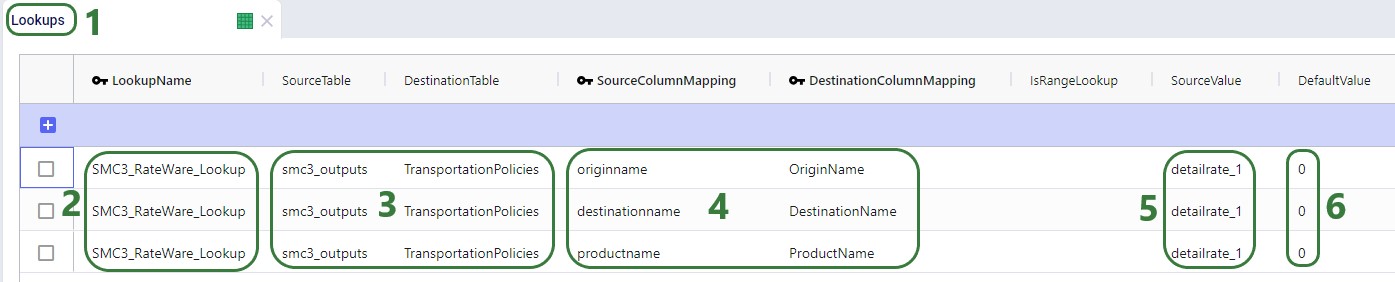

Now that we have used the SMC3 RateWare Utility to retrieve the LTL rates for our 9 origin-destination pairs, we need to configure the model to use them as they are currently only listed in the smc3_outputs custom table. We use the Lookups table (an Input table in the Functional Tables section) to create a lookup link between the smc3_outputs custom table and the Transportation Policies input table, as follows: