This video guides you through creating your free account and introduces the features of the Optilogic Cosmic Frog supply chain design platform.

If you are running into issues receiving your account confirmation email, please see the troubleshooting article linked here.

Greenfield analysis (GF) is a method for determining the optimal location of facilities in a supply chain network. The Greenfield engine in Cosmic Frog is called Triad and this name comes from the oldest known species of frogs – Triadobatrachus. You can think of it as the starting point for the evolution of all frogs, and it serves as a great starting point for modeling projects too! We can use Triad to identify 3 key parameters:

GF is a great starting point for network design—it solves quickly and can reduce the number of candidate site locations in complicated design problems. However, a standard GF requires some assumptions to solve (e.g. single time period, single product). As a result, the output of a Triad model is best suited as initial information for building a more robust Cosmic Frog optimization (Neo) or simulation (Throg) model.
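To build intuition for what a greenfield analysis computes, the following minimal sketch places a single facility at the demand-weighted centroid of a few customers. This is a textbook center-of-gravity calculation, not Triad's algorithm; the `Customer` class, the customer names, coordinates, and demand quantities are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    # Hypothetical record; field names are illustrative, not Cosmic Frog columns.
    name: str
    latitude: float
    longitude: float
    demand: float


def demand_weighted_centroid(customers):
    """Return (latitude, longitude) of the demand-weighted average location."""
    total = sum(c.demand for c in customers)
    if total <= 0:
        raise ValueError("total demand must be positive")
    lat = sum(c.latitude * c.demand for c in customers) / total
    lon = sum(c.longitude * c.demand for c in customers) / total
    return lat, lon


customers = [
    Customer("A", 40.0, -75.0, 100.0),
    Customer("B", 42.0, -71.0, 300.0),
]
site = demand_weighted_centroid(customers)
print(site)
```

Because customer B carries three times the demand of customer A, the candidate site is pulled toward B. Averaging raw latitudes and longitudes is only a rough approximation over small regions, which is one more reason to treat this as intuition rather than a solver.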

You can run GF in any Cosmic Frog model. Running a GF model only requires two input tables to be populated:

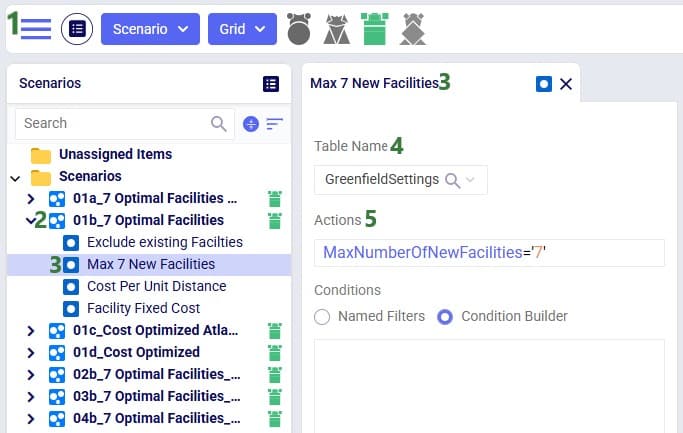

A third important table for running GF is the Greenfield Settings table in the Functional Tables section of the input tables. We call our GF approach “Intelligent Greenfield” because of the different parameters available by configuring this settings table. The Greenfield Settings table is always populated with defaults and users can change these as needed. See the Greenfield Setting Explained help article for an explanation of the fields in this table.

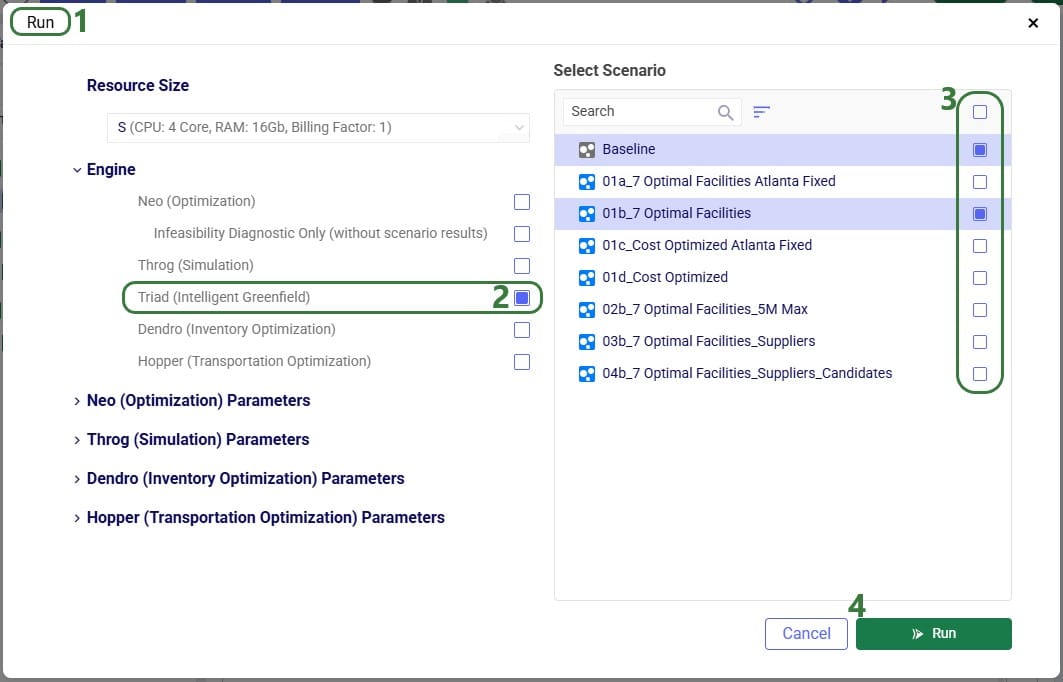

A greenfield analysis starts with clicking the “Run” button at the top right of the Cosmic Frog application, just like a Neo or Throg model.

After clicking on the Run button, the Run screen comes up:

Besides making changes to values in the Customers and/or Customer Demand tables, GF scenarios often make changes to 1 or multiple settings on the Greenfield Settings table. The next screenshot shows an example of this:

To improve the solve speed of a Triad model, we can use customer clustering. Customer clustering reduces the size of the supply chain by grouping customers within a given geographic range into a single customer. We can set the clustering radius (in miles) in the Greenfield Settings table in the Customer Cluster Radius column.

Clustering is optional, and leaving this column blank is the same as turning off clustering.

While grouping customers can significantly improve the run time of the model, clustering may result in a loss of optimality. However, Greenfield is typically used as a starting point for a future Neo optimization model, so small losses in optimality at this phase are typically manageable.
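The idea of radius-based clustering can be sketched as follows. This is a simple greedy grouping chosen for illustration and is not necessarily how Triad clusters customers; the function names, the tuple layout, and the sample demand values are assumptions. Passing `None` as the radius mimics leaving the Customer Cluster Radius column blank.

```python
import math

EARTH_RADIUS_MILES = 3958.8


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in miles."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def cluster_customers(customers, radius_miles):
    """Greedily merge customers that lie within radius_miles of a cluster seed.

    customers: list of (name, latitude, longitude, demand) tuples.
    radius_miles: None disables clustering (every customer stays separate).
    """
    clusters = []
    for name, lat, lon, demand in customers:
        target = None
        if radius_miles is not None:
            for cluster in clusters:
                dist = haversine_miles(lat, lon, cluster["latitude"], cluster["longitude"])
                if dist <= radius_miles:
                    target = cluster
                    break
        if target is None:
            # This customer becomes the seed of a new cluster.
            clusters.append({"latitude": lat, "longitude": lon,
                             "demand": demand, "members": [name]})
        else:
            # Fold the customer's demand into the existing cluster.
            target["demand"] += demand
            target["members"].append(name)
    return clusters


customers = [
    ("Philadelphia", 39.9526, -75.1652, 100.0),
    ("Camden", 39.9259, -75.1196, 50.0),
    ("Boston", 42.3601, -71.0589, 200.0),
]
clustered = cluster_customers(customers, radius_miles=50)
unclustered = cluster_customers(customers, radius_miles=None)
print(len(clustered), len(unclustered))
```

With a 50-mile radius, Philadelphia and Camden collapse into one customer while Boston stays separate, so the model has fewer demand points to consider. The merged cluster keeps the seed's location, which illustrates where a small loss of optimality can come from.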

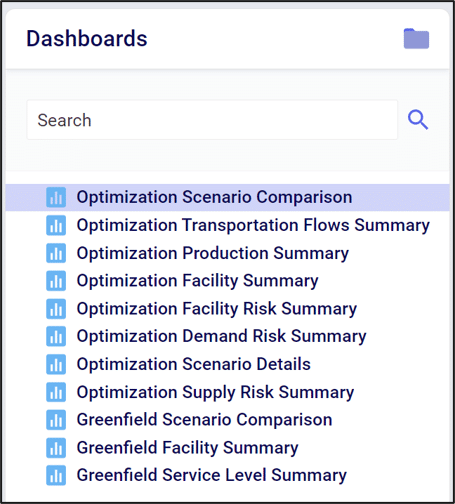

Once you have run a model, you can visualize your results using the Analytics tab.

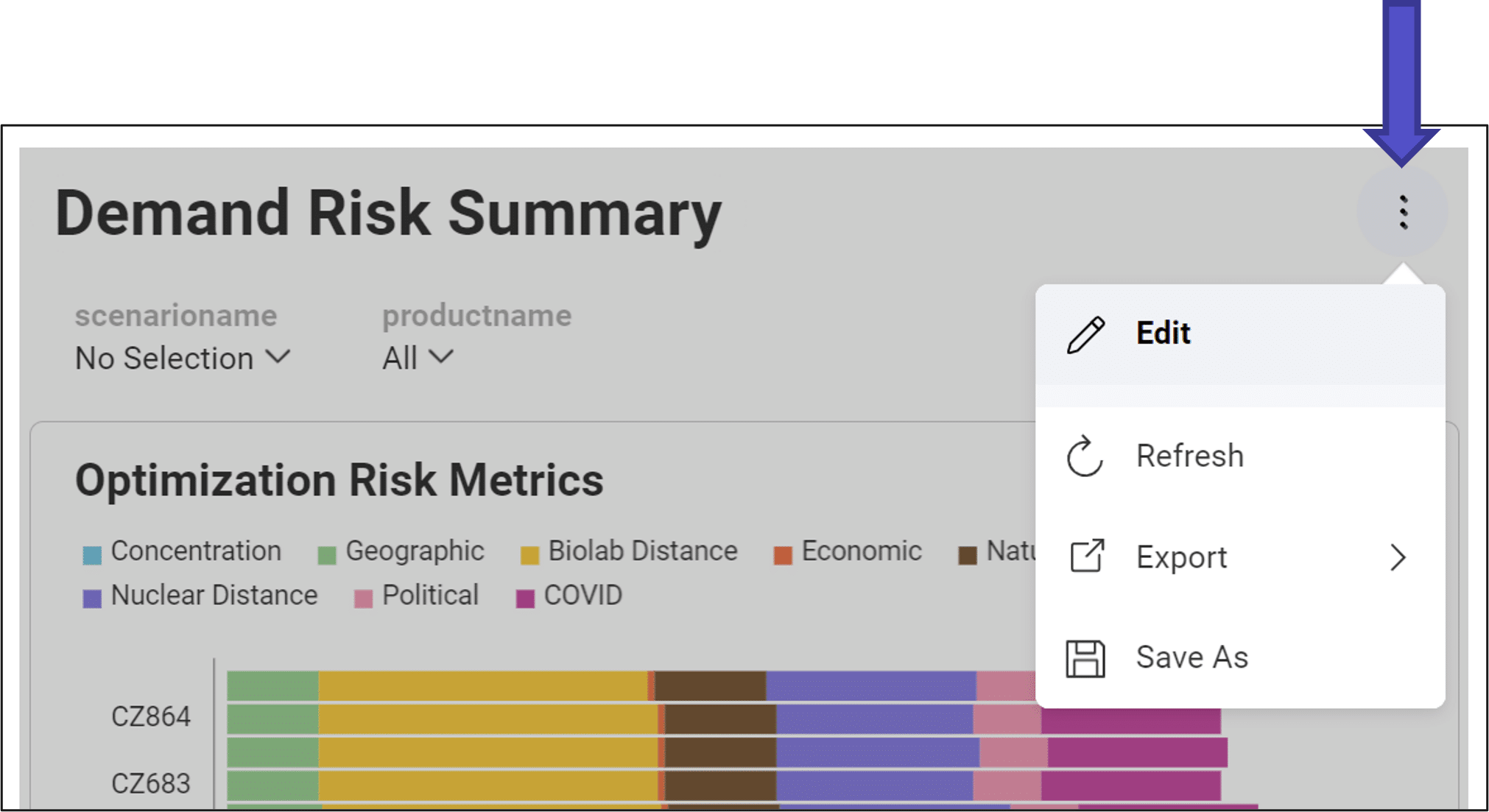

In Analytics, a dashboard is a collection of visualizations. Visualizations can take on many forms, such as charts, tables or maps.

In Cosmic Frog, there are default dashboards available to help you analyze your model results.

The default dashboards highlight some common analytics and metrics. They are designed to be interacted with through a set of filters.

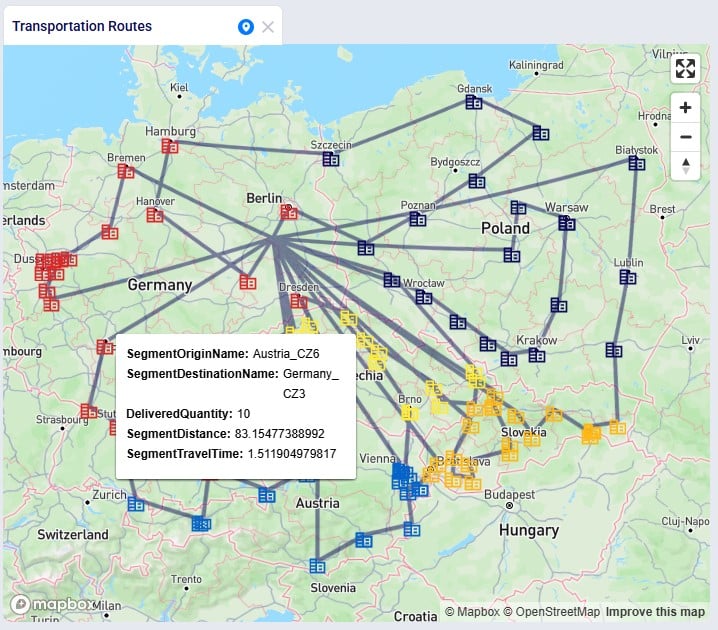

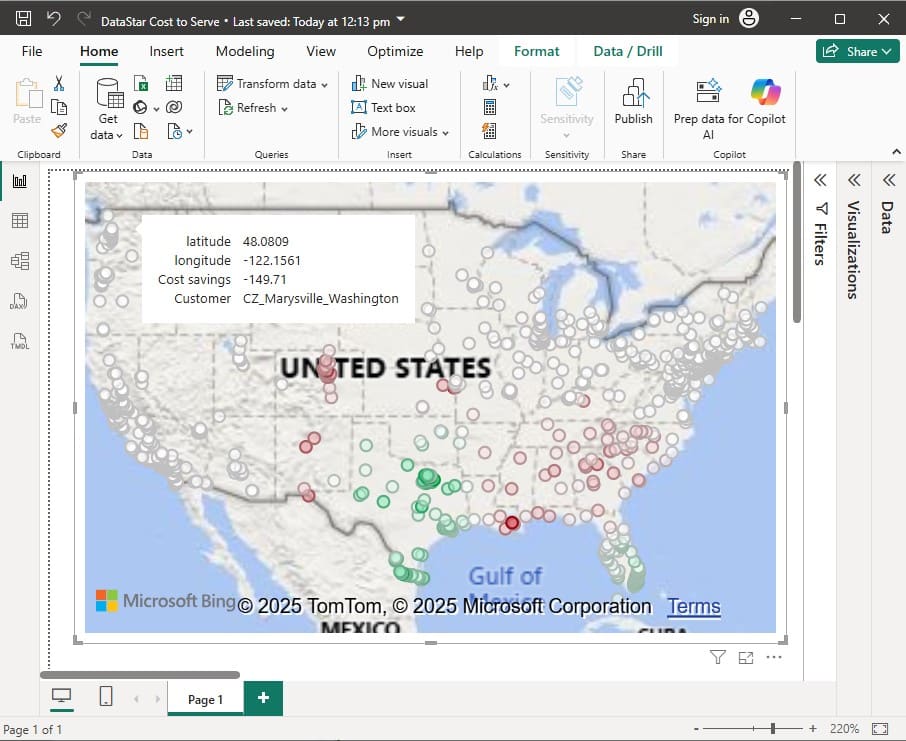

We can hover over visualization elements to get more information. This floating card of information is called a “Tooltip”.

We can customize existing dashboards to fit our needs. For more information see Editing Dashboards and Visualizations.

Showing supply chains on maps is a great way to visualize them, to understand differences between scenarios, and to show how they evolve over time. Cosmic Frog offers users many configuration options to customize maps to their exact needs and compare them side-by-side. In this documentation we will cover how to create and configure maps in Cosmic Frog.

In Cosmic Frog, a map represents a single geographic visualization composed of different layers. A layer is an individual supply chain element such as a customer, product flow, or facility. To show locations on a map, these need to exist in the master tables (e.g. Customers, Facilities, and Suppliers) and they need to have been geocoded (see also the How to Geocode Locations section in this help center article). Flow based layers are based on output tables, such as the OptimizationFlowSummary or SimulationFlowSummary and to draw these, the model needs to have been run so outputs are present in these output tables.
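Since only geocoded locations can be drawn, it can help to check which records lack coordinates before building a layer. The sketch below assumes the table rows have been exported to plain dictionaries with hypothetical keys `name`, `latitude`, and `longitude`; these keys and the sample records other than CZ_Philadelphia are illustrative only.

```python
def ungeocoded(rows):
    """Return the names of rows that lack a latitude or a longitude."""
    missing = []
    for row in rows:
        lat, lon = row.get("latitude"), row.get("longitude")
        # Treat both None and empty strings as "not geocoded".
        if lat in (None, "") or lon in (None, ""):
            missing.append(row["name"])
    return missing


rows = [
    {"name": "CZ_Philadelphia", "latitude": 39.95, "longitude": -75.17},
    {"name": "DC_1", "latitude": None, "longitude": None},
    {"name": "SUP_1", "latitude": "", "longitude": 12.5},
]
not_drawn = ungeocoded(rows)
print(not_drawn)
```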

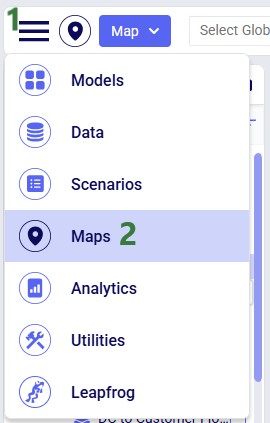

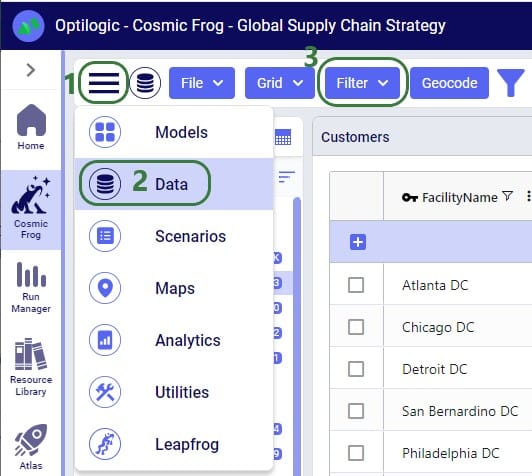

Maps can be accessed through the Maps module in Cosmic Frog:

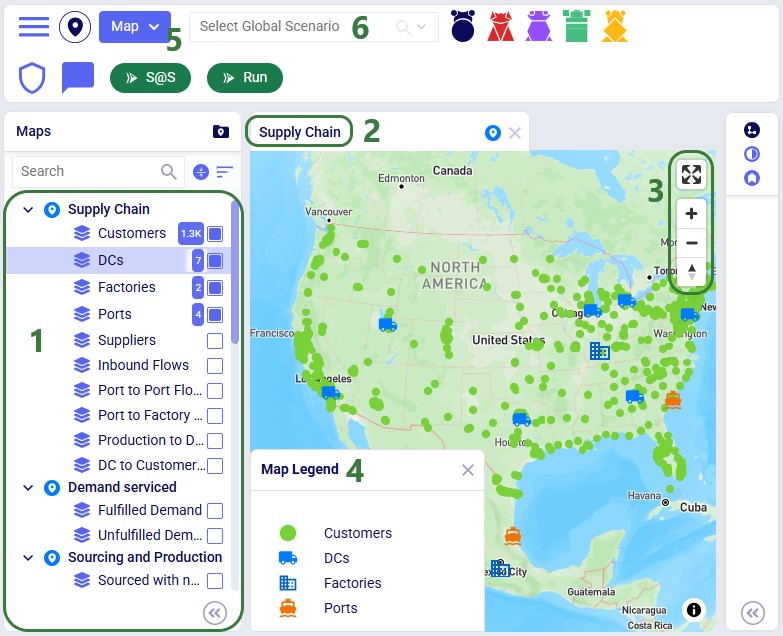

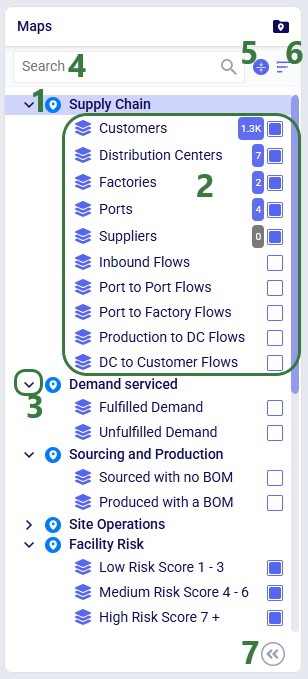

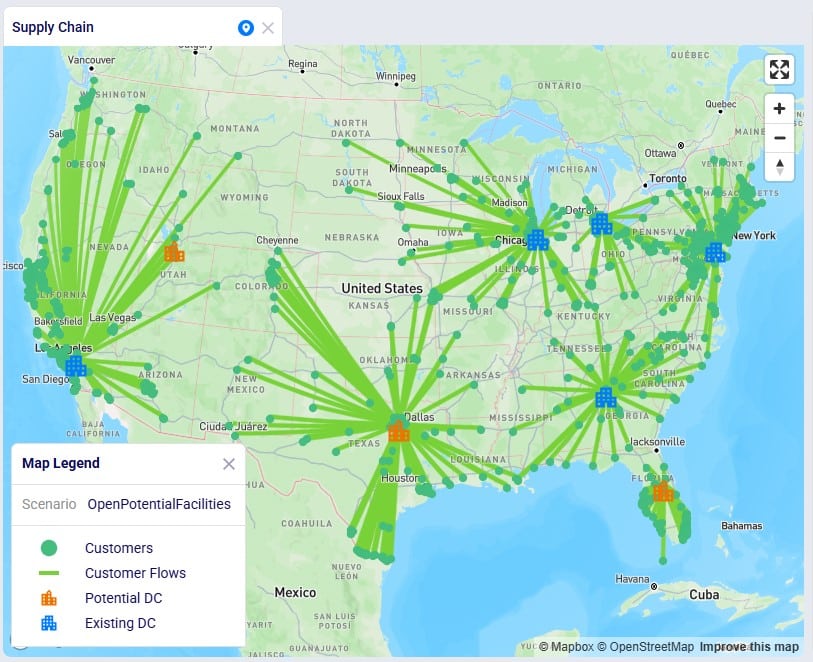

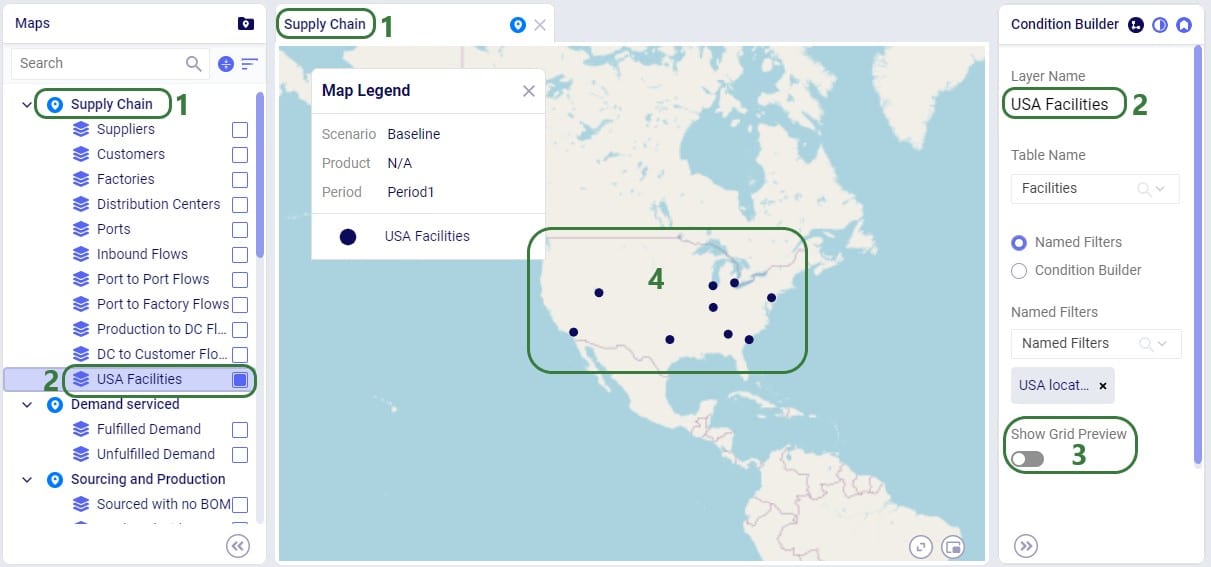

The Maps module opens and shows the first map in the Maps list; this will be the default pre-configured “Supply Chain” map for models the user created and most models copied from the Resource Library:

In addition to what is mentioned under bullet 4 of the screenshot just above, users can also perform the following actions on maps:

As we have seen in the screenshot above, the Maps module opens with a list of pre-configured maps and layers on the left-hand side:

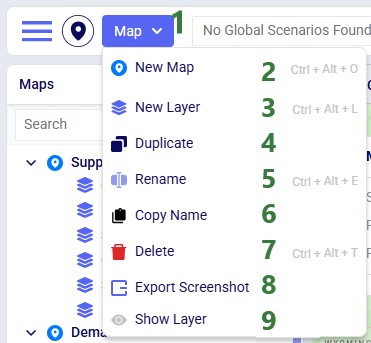

The Map menu in the toolbar at the top of the Maps module allows users to perform basic map and layer operations:

These options from the Map menu are also available in the context menu that comes up when right-clicking on a map or layer in the Maps list.

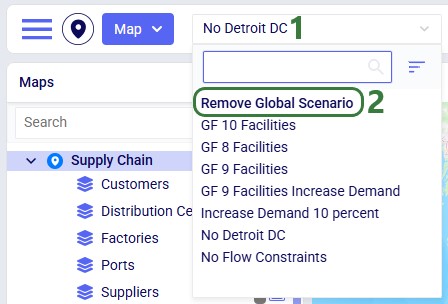

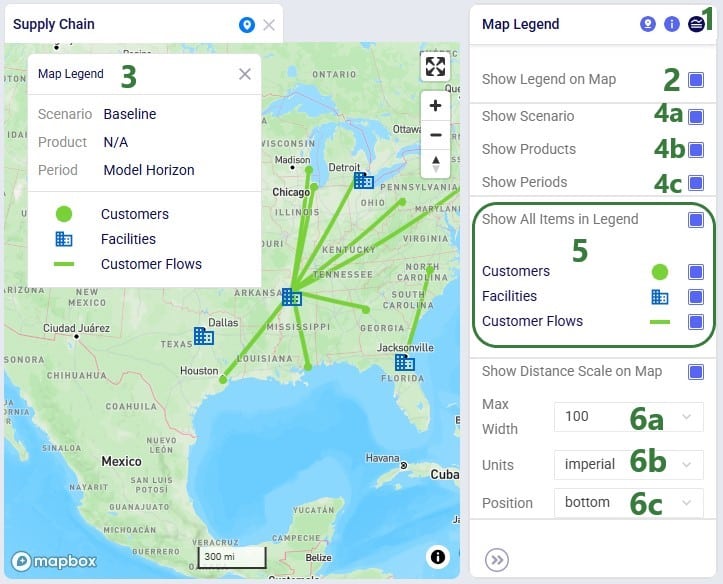

The Map Filters panel can be used to set scenarios for each map individually. If users want to use the same scenario for all maps present in the model, they can use the Global Scenario Filter located in the toolbar at the top of the Maps module:

Now all maps in the model will use the selected scenario, and the option to set the scenario at the map-level is disabled.

When a global scenario has been set, it can be removed using the Global Scenario Filter again:

The zoom level, how the map is centered, and the configuration of maps and their layers persist. After moving between other modules within Cosmic Frog or switching between models, when the user comes back to the map(s) in a specific model, the map settings are the same as when last configured.

Now let us look at how users can add new maps, and the map configuration options available to them.

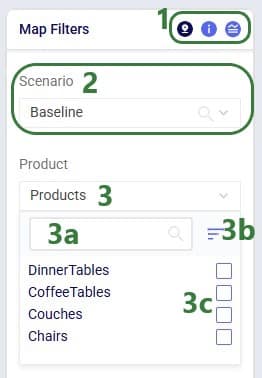

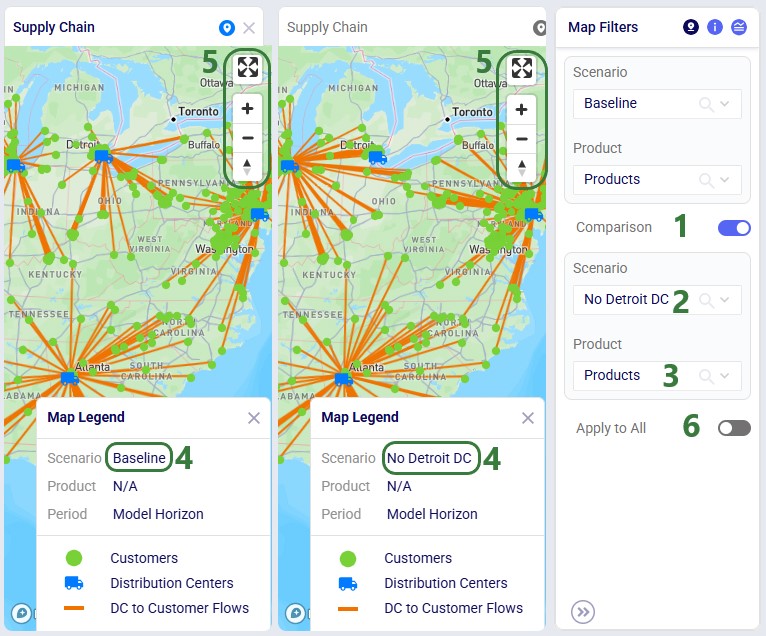

Once done typing the name of the new map, the panel on the right-hand side of the map changes to the Map Filters panel which can be used to select the scenario and products the map will be showing. If the user wants to see a side-by-side map comparison of 2 scenarios in the model, this can be configured here too:

In the screenshot above, the Comparison toggle is hidden by the Product drop-down. In the next screenshot it is shown. By default, this toggle is off; when sliding it right to be on, we can configure which scenario we want to compare the previously selected scenario to:

Please note:

Instead of setting which scenario to use for each map individually on the Map Filters panel, users can instead choose to set a global scenario for all maps to use, as discussed above in the Global Scenario Filter section. If a global scenario is set, the Scenario drop-down on the Map Filters panel will be disabled and the user cannot open it:

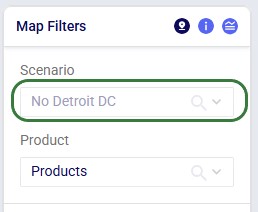

On the Map Information panel, users have a lot of options to configure what the map looks like and what entities (outside of the supply chain ones configured in the layers) are shown on it:

Users can choose to show a legend on the map and configure it on the Map Legend pane:

To start visualizing the supply chain that is being modelled on a map, the user needs to add at least 1 layer to the map, which can be done by choosing “New Layer” from the Map menu:

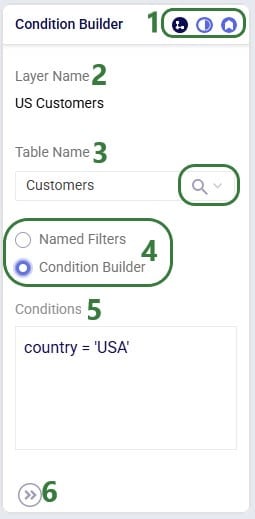

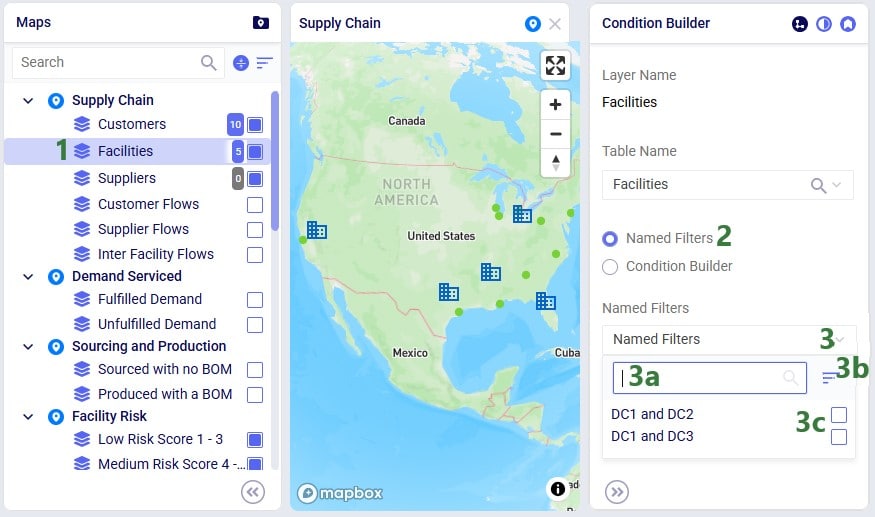

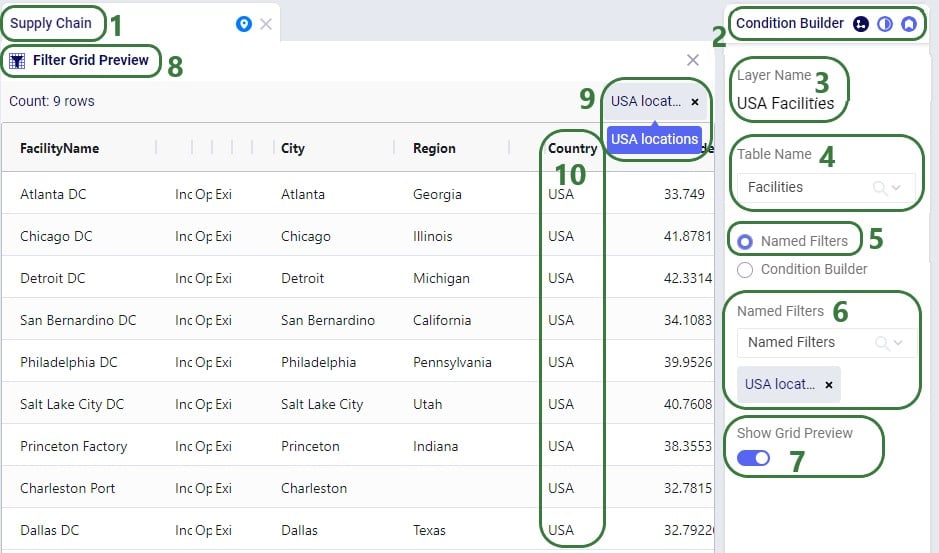

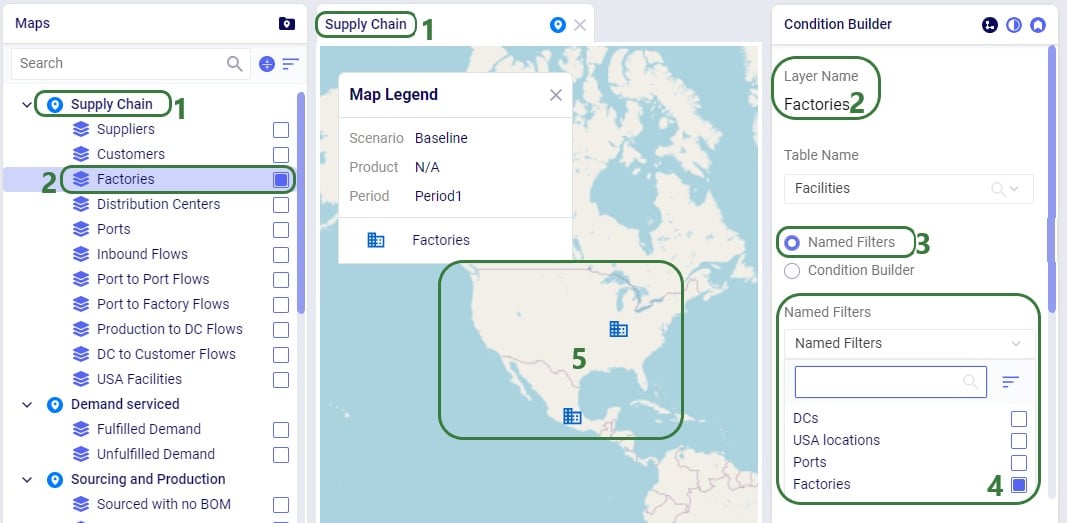

Once a layer has been added or is selected in the Maps list, the panel on the right-hand side of the map changes to the Condition Builder panel which can be used to select the input or output table and any filters on it to be used to draw the layer:

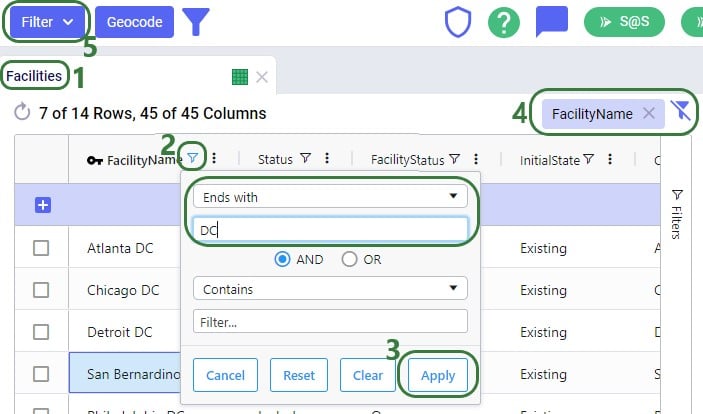

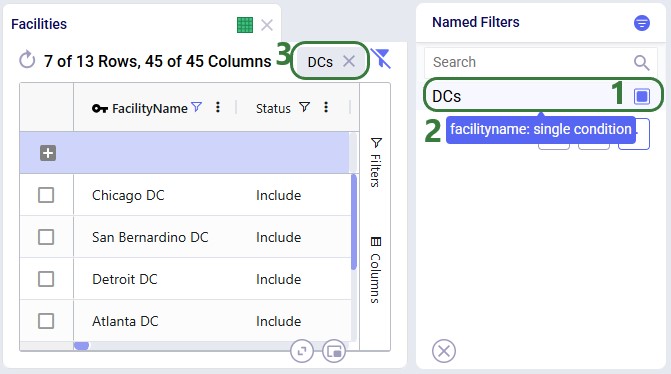

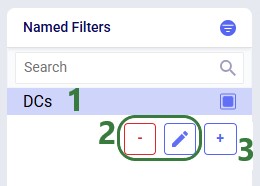

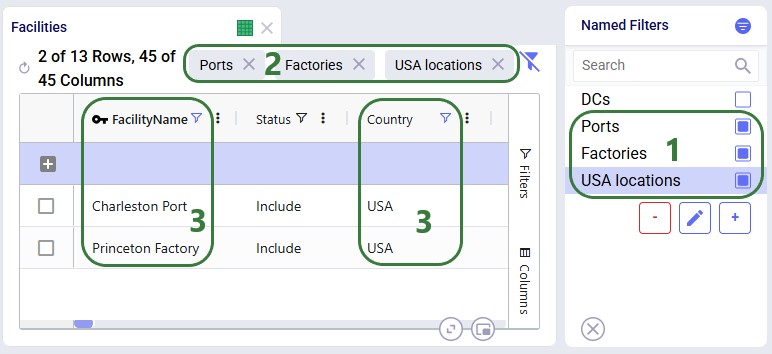

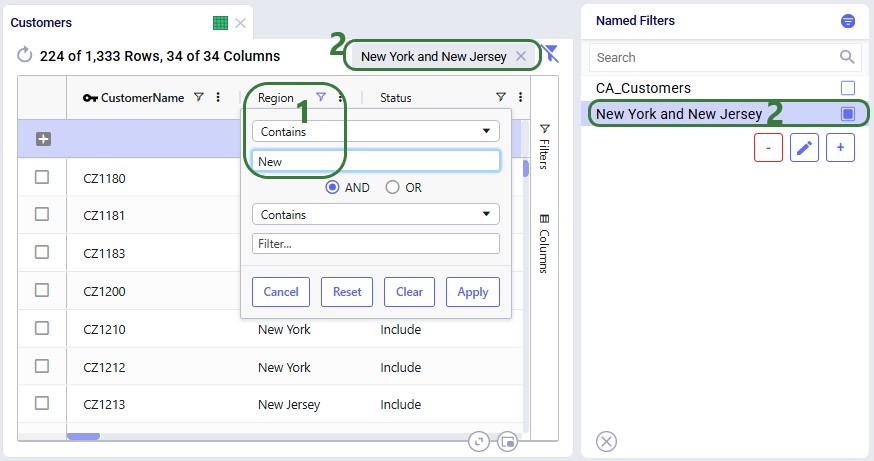

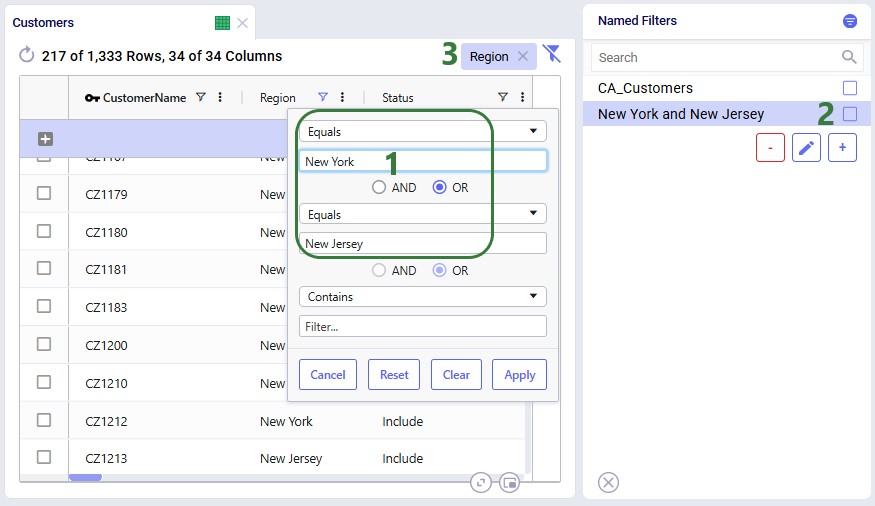

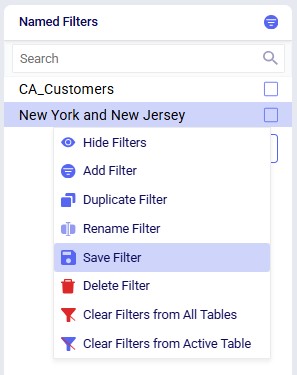

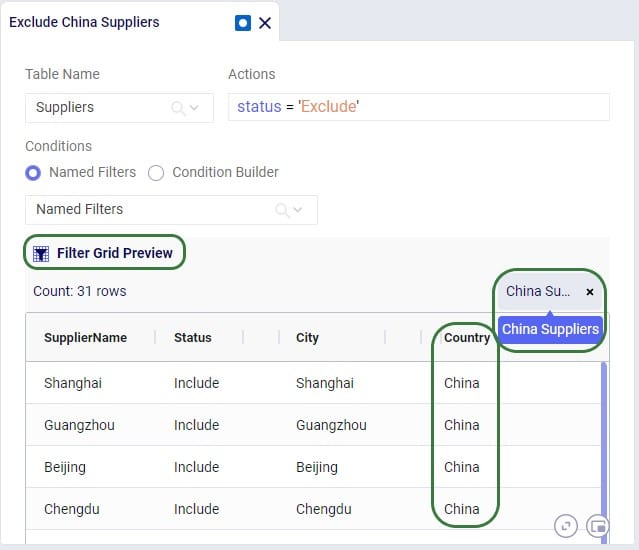

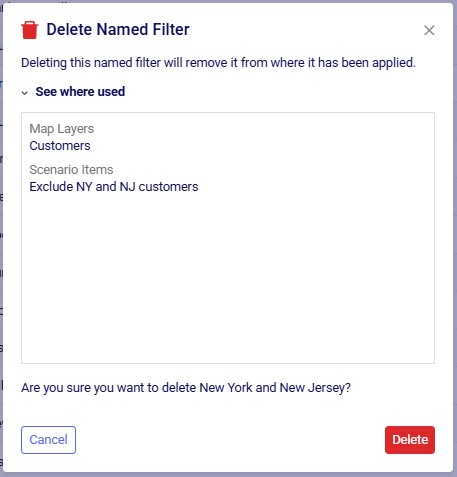

We will now also look at using the Named Filters option to filter the table used to draw the map layer:

In this walk-through example, the user chooses to enable the “DC1 and DC2” named filter:

Lastly on the Named Filters option, users have the option to view a grid preview to ensure the correct filtered records are being drawn on the map:

In the next layer configuration panel, Layer Style, users can choose what the supply chain entities that the layer shows will look like on the map. This panel looks somewhat different for layers that show locations (Type = Point) than for those that show flows (Type = Line). First, we will look at a point type layer (Customers):

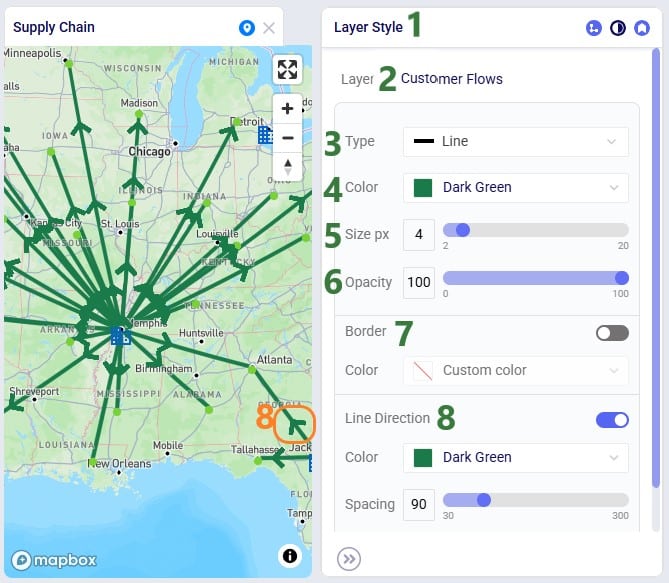

Next, we will look at a line type layer, Customer Flows:

At the bottom of the Layer Style pane a Breakpoints toggle is available too (not shown in the screenshots above). To learn more about how these can be used and configured, please see the "Maps - Styling Points & Flows based on Breakpoints" Help Center article.

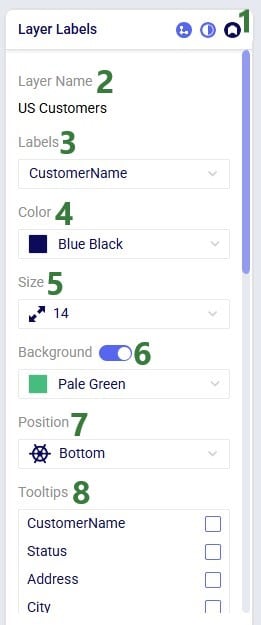

Labels and tooltips can be added to each layer, so users can more easily see properties of the entities shown in the layer. The Layer Labels configuration panel allows users to choose what to show as labels and tooltips, and configure the style of the labels:

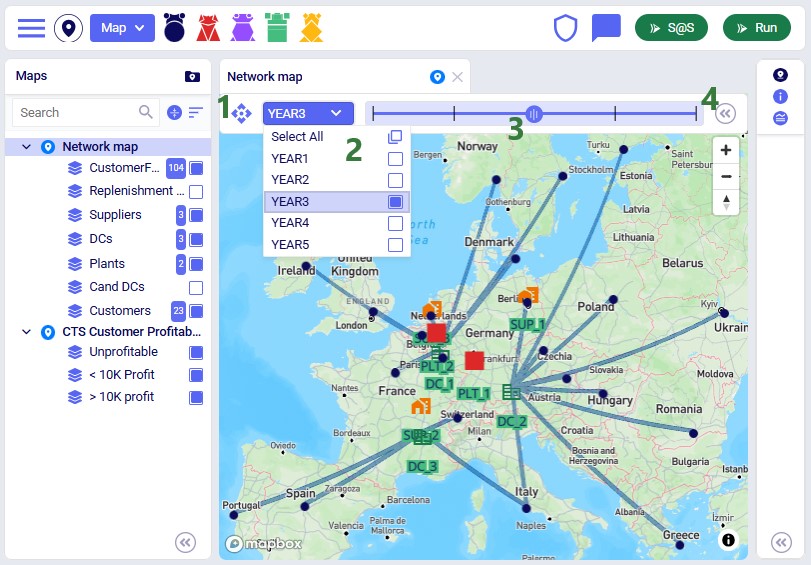

When modelling multiple periods in network optimization (Neo) models, users can see how these evolve over time using the map:

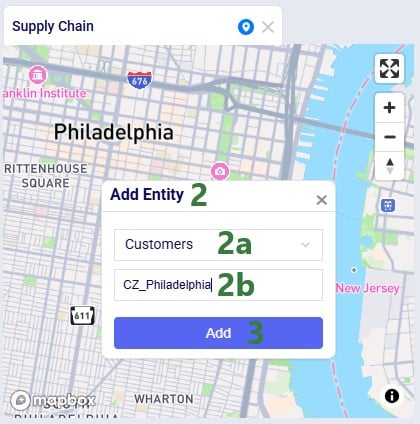

Users can now add Customers, Facilities and Suppliers via the map:

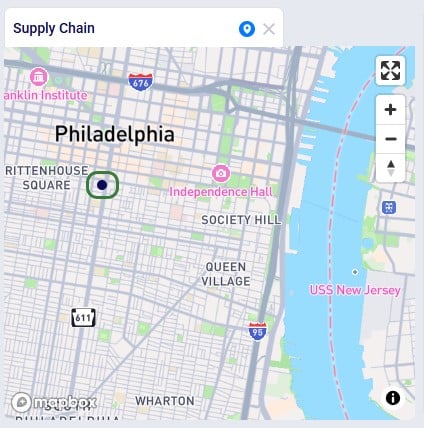

After adding the entity, we see it showing on the map, here as a dark blue circle, which is how the Customers layer is configured on this map:

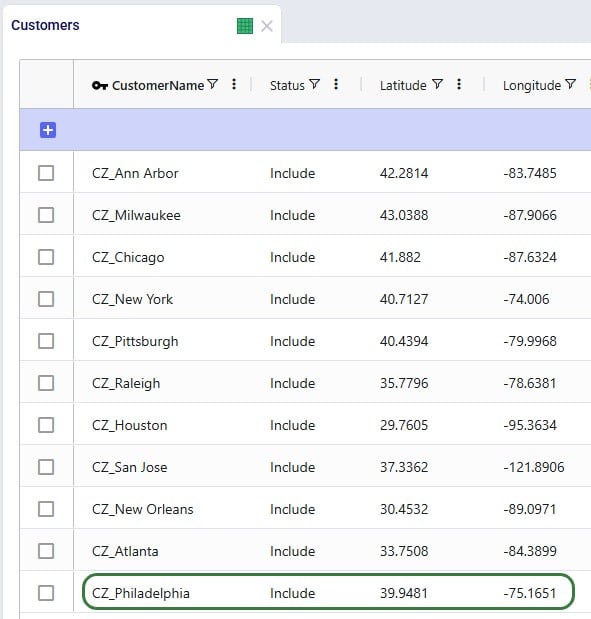

Looking in the Customers table, we notice that CZ_Philadelphia has been added. Note that while its latitude and longitude fields are set, other fields such as City, Country and Region are not automatically filled out for entities added via the map:

In this final section, we will show a few example maps to give users some ideas of what maps can look like. In this first screenshot, a map for a Transportation Optimization (Hopper engine) model, Transportation Optimization UserDefinedVariables available from Optilogic’s Resource Library (here), is shown:

Some notable features of this map are:

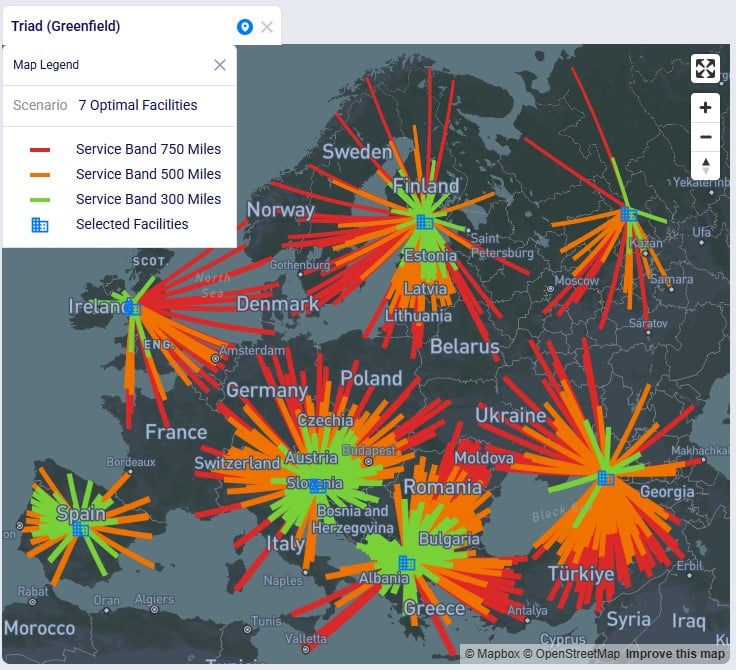

The next screenshot shows a map of a Greenfield (Triad engine) model:

Some notable features of this map are:

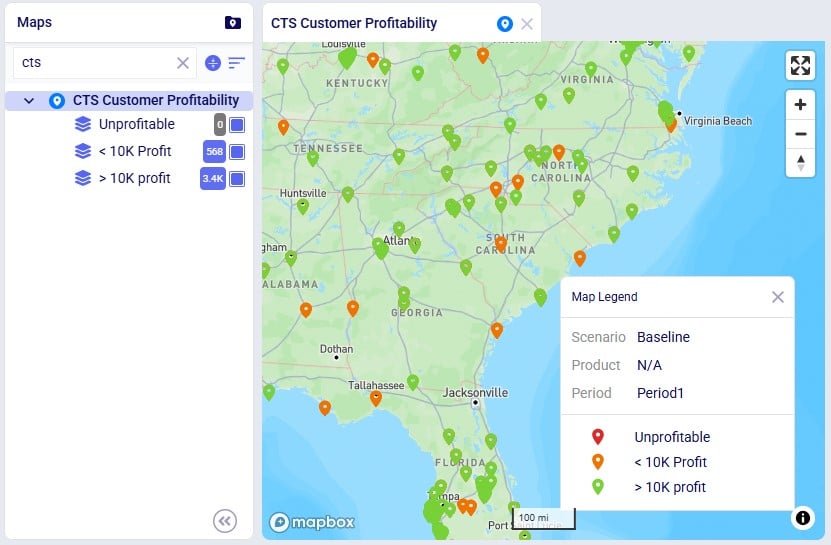

This following screenshot shows a subset of the customers in a Network Optimization (Neo engine) model, the Global Sourcing – Cost to Serve model available from Optilogic’s Resource Library (here). These customers are color-coded based on how profitable they are:

Some notable features of this map are:

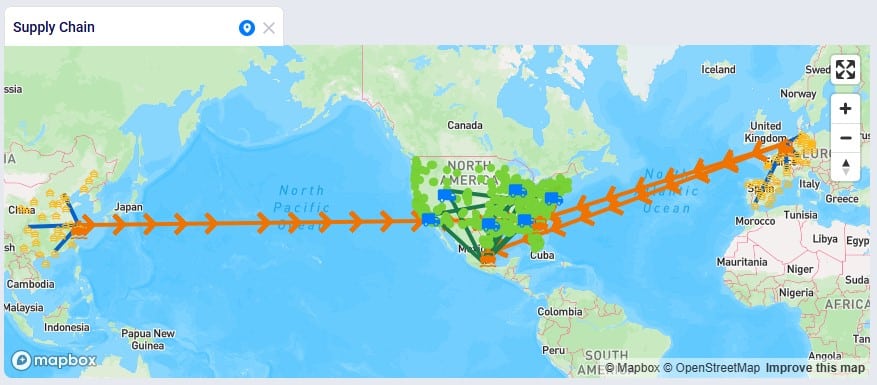

Lastly, the following screenshot shows a map of the Tariffs example model, a network optimization (Neo engine) model available from Optilogic’s Resource Library (here), where suppliers located in Europe and China supply raw materials to the US and Mexico:

Some notable features of this map are:

We hope users feel empowered to create their own insightful maps. For any questions, please do not hesitate to contact Optilogic support at support@optilogic.com.

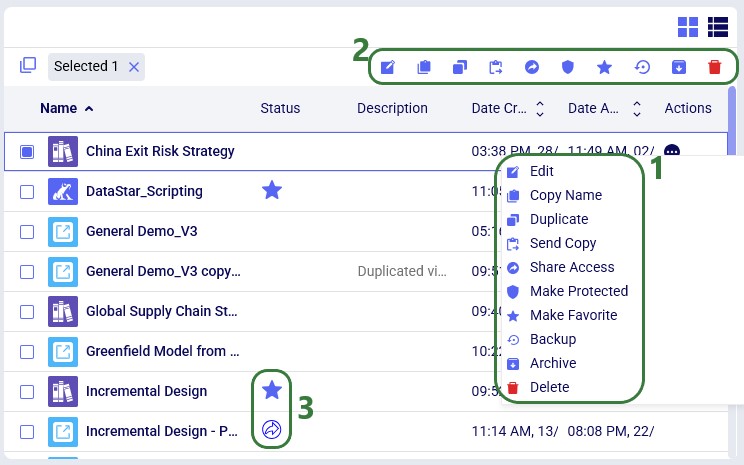

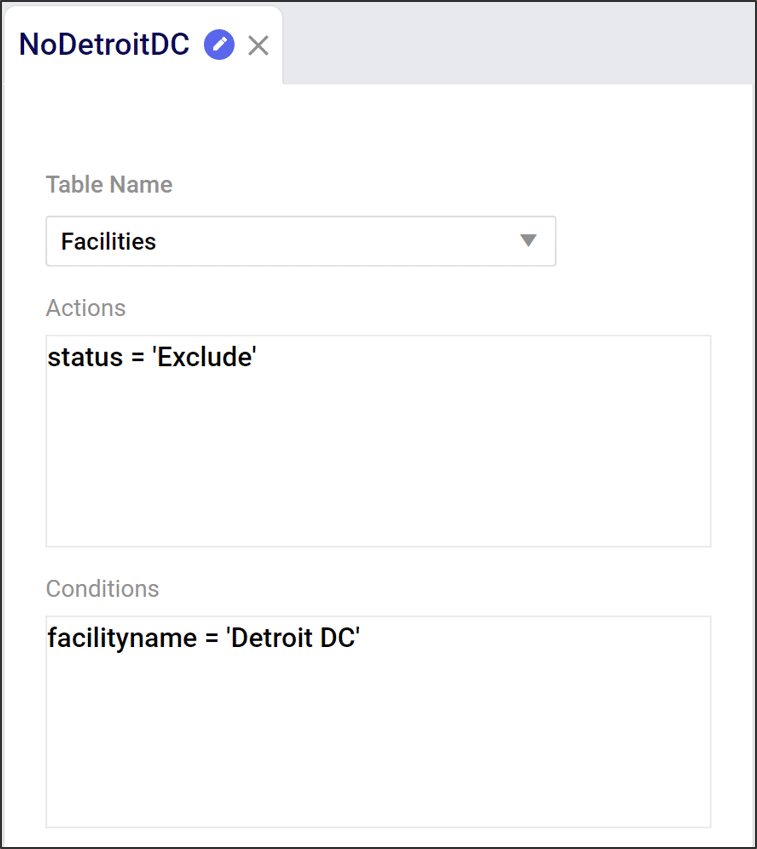

Scenarios allow you to run edited versions of your model in specific, controlled ways.

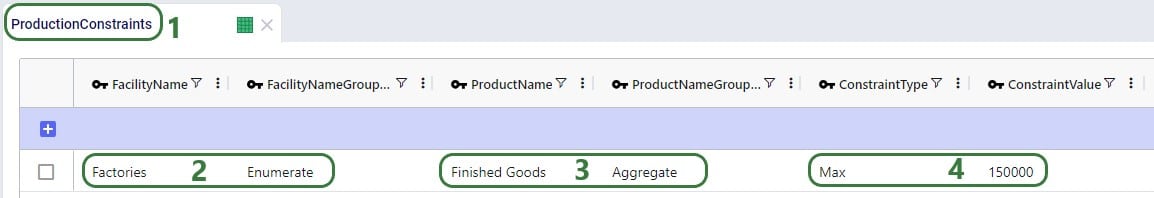

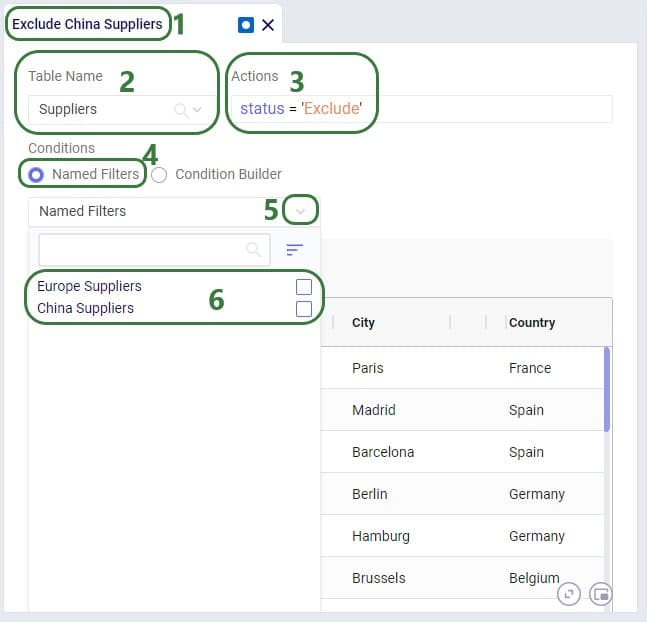

All scenarios have 3 components:

For a video overview of the scenario features, please watch the following:

The only constant is change. When building our supply chains, the “optimal” design doesn’t only mean lowest cost. What happens if (or perhaps when) a disruption occurs? Fragile, low-cost supply chains can end up costing more in the long run if they aren’t resilient to the dynamic nature of today’s world.

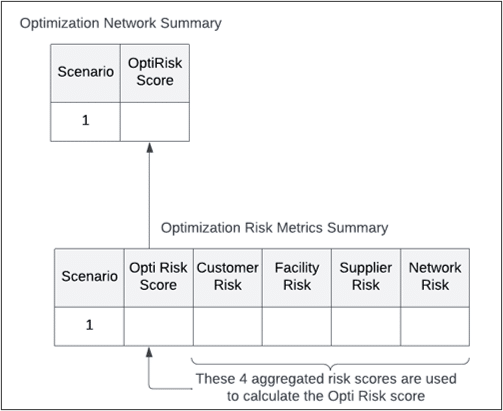

We believe that optimality includes resilience. That’s why every Cosmic Frog run includes a risk rating from our DART risk engine.

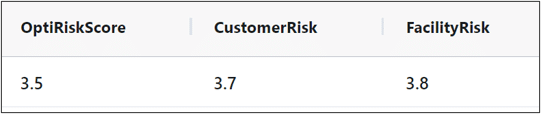

Every Cosmic Frog run outputs an Opti Risk score. The Opti Risk score is an aggregate measure of the overall supply chain risk. It includes the following sub-categories:

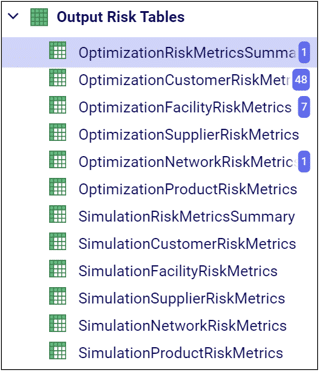

After running a model, you can find the Opti Risk score (as well as the scores for each of the sub-categories) in the output risk tables. The Opti Risk score can also be found in the OptimizationNetworkSummary table.

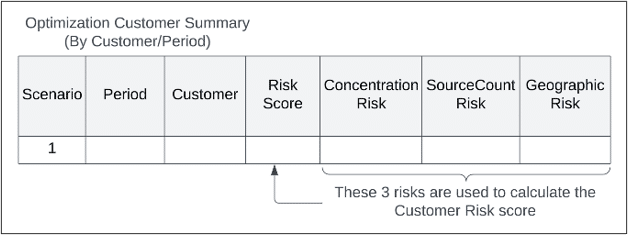

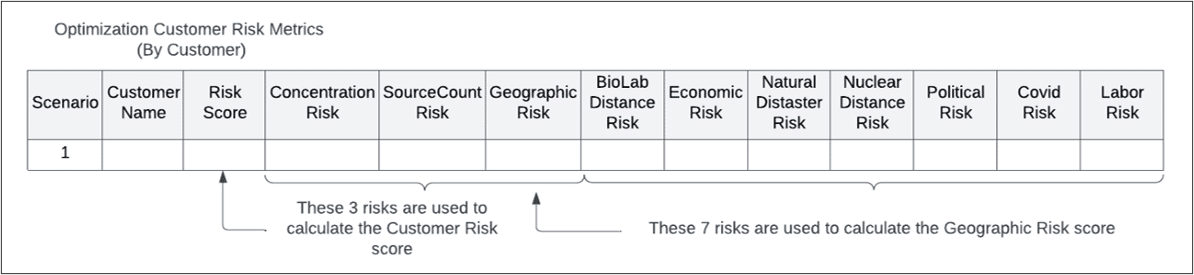

The overall Customer Risk score is an aggregation of each individual customer’s risk described in the OptimizationCustomerRiskMetrics or SimulationCustomerRiskMetrics tables. In each scenario, there is one risk score per customer per period.

Each customer risk score includes:

For each sub-category, the geographic risk score is also an aggregation of several risk factors:

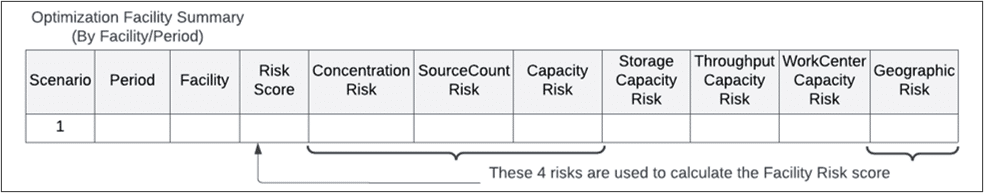

Like the customer risk score, the overall facility risk score is an aggregation of risk across all facilities in your supply chain. In the FacilityRiskMetric tables, there is an individual risk score per facility per period.

The facility risk score includes:

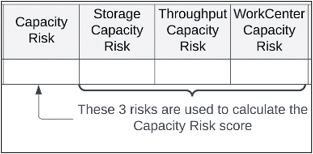

The capacity risk has three sub-components:

The facility geographic risk has the same components as the customer geographic risk.

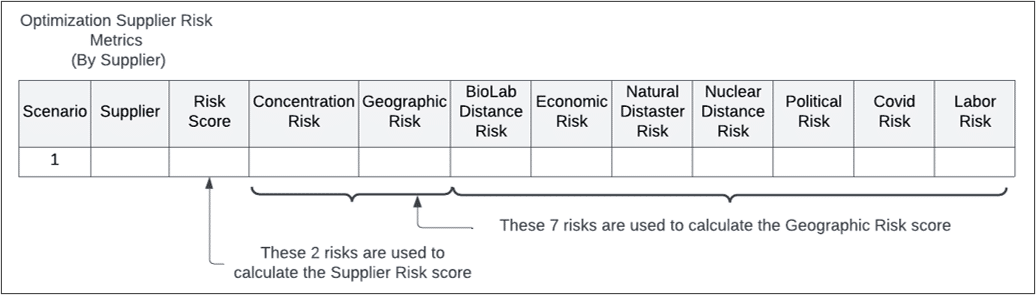

The supplier risk is calculated per supplier per period and includes:

Both the concentration and geographic risks include the same elements as described previously.

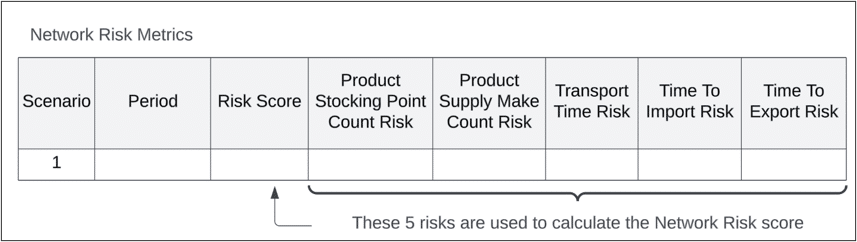

Network risk differs from the other risk scores in that it is not tied to a specific supply chain element. There is only one network risk score per scenario, and it includes:

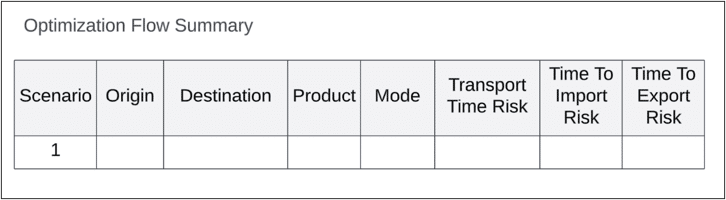

The transport and import/export time risks are aggregated across individual origin/destination pairs for every product and transport mode. The individual risk scores can be found in the OptimizationFlowSummary table.

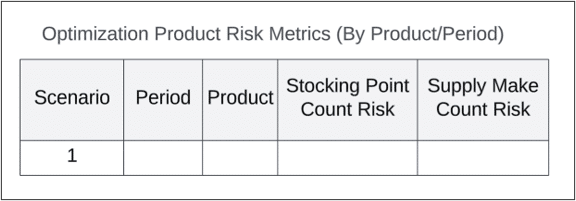

The stocking point count and supply make count risks are aggregations across every product and period. The individual risk scores can be found in the ProductRiskMetrics tables.

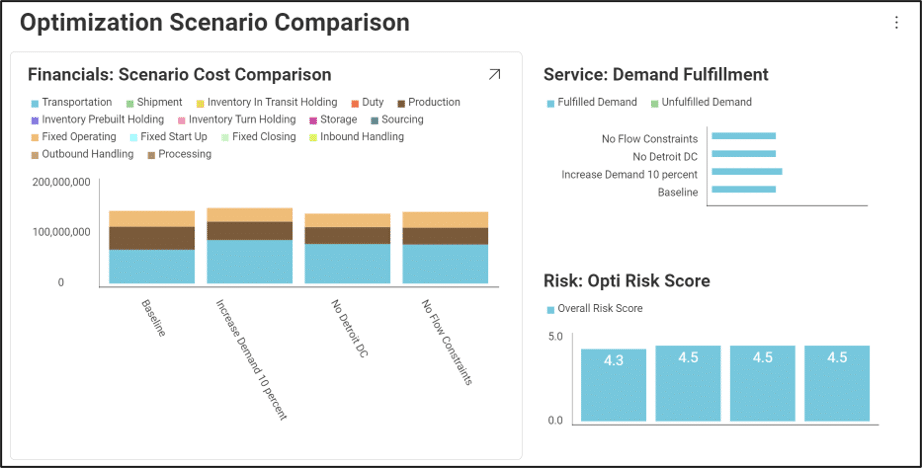

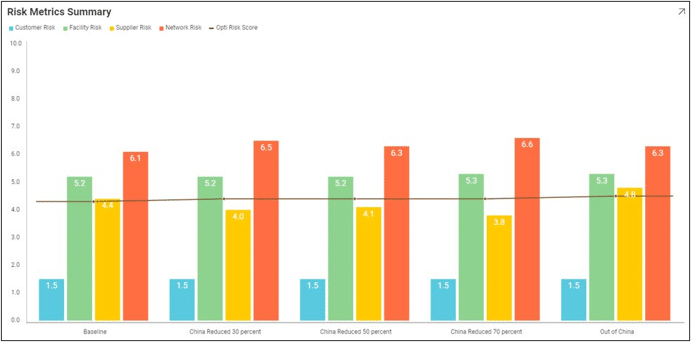

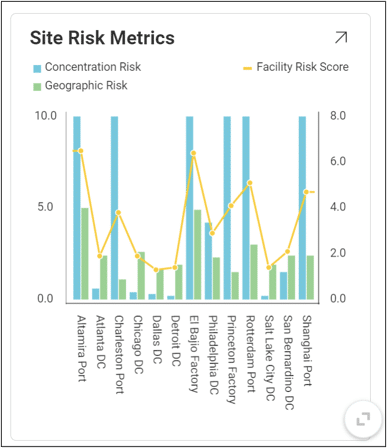

We can use our visualization tools to get a better sense of how risk varies across design scenarios.

Please watch this 5-minute video for an overview of DataStar, Optilogic’s new AI-powered data application designed to help supply chain teams build and update models & scenarios and power apps faster & easier than ever before!

For detailed DataStar documentation, please see Navigating DataStar on the Help Center.

DataStar is Optilogic’s new AI-powered data product designed to help supply chain teams build and update models & scenarios and power apps faster & easier than ever before. It enables users to create flexible, accessible, and repeatable workflows with zero learning curve—combining drag-and-drop simplicity, natural language AI, and deep supply chain context.

Today, up to an estimated 80% of a modeler's time is spent on data—connecting, cleaning, transforming, validating, and integrating it to build or refresh models. DataStar drastically shrinks that time, enabling teams to:

The 2 main goals of DataStar are 1) ease of use, and 2) effortless collaboration. These are achieved by:

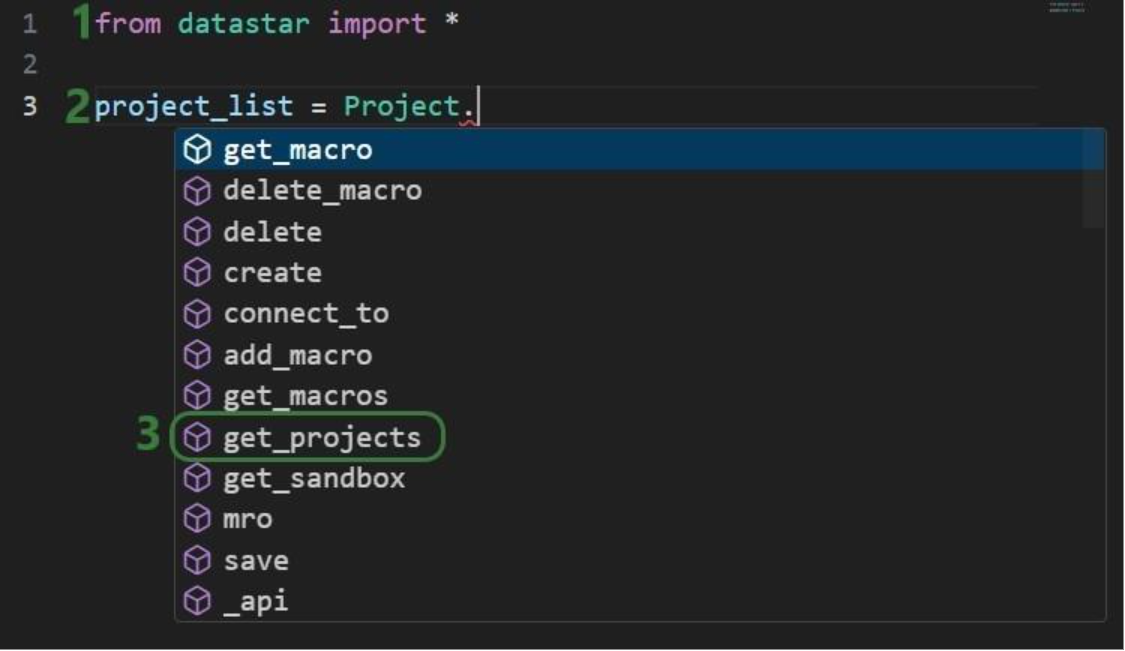

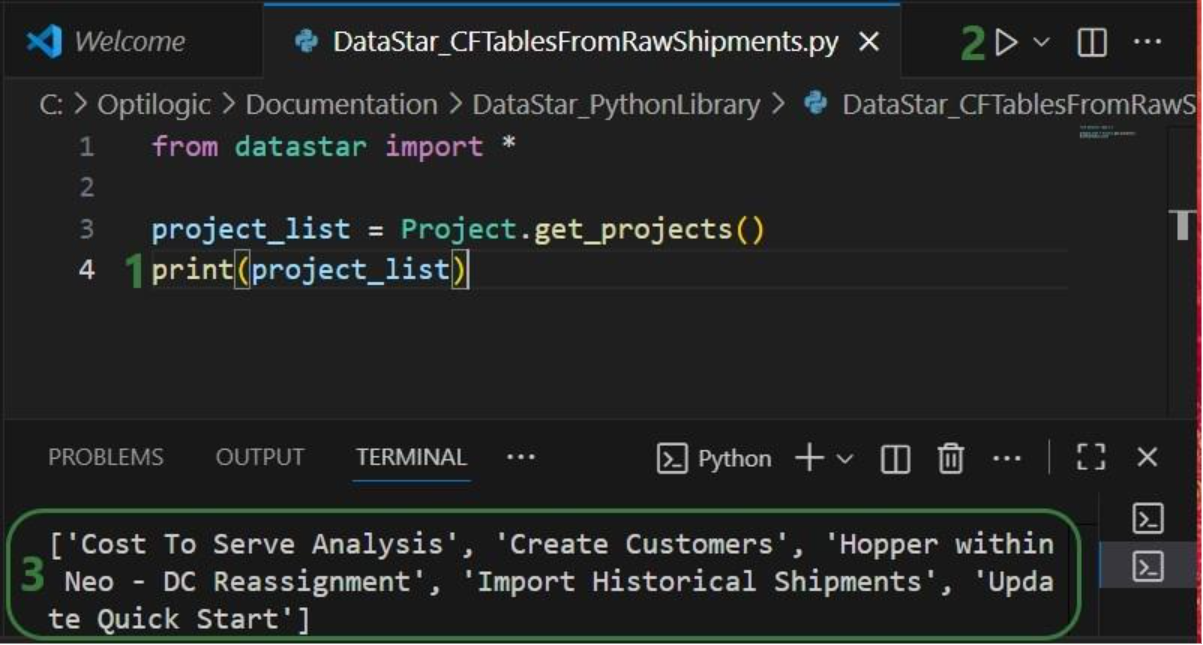

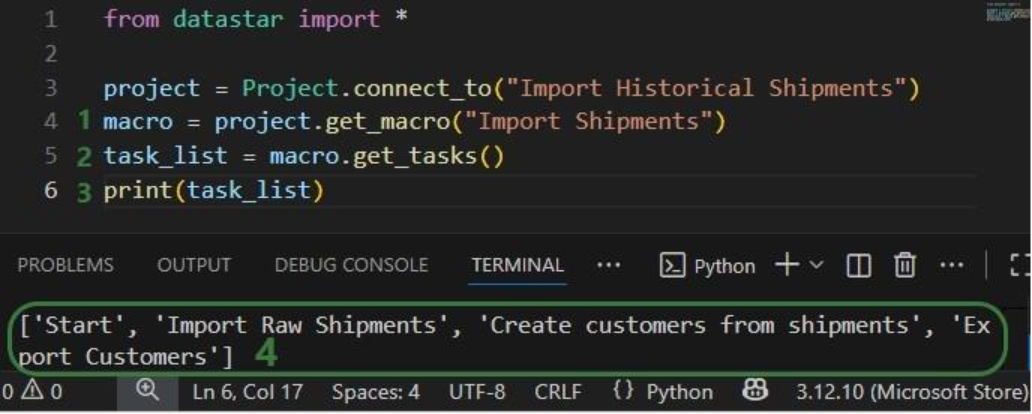

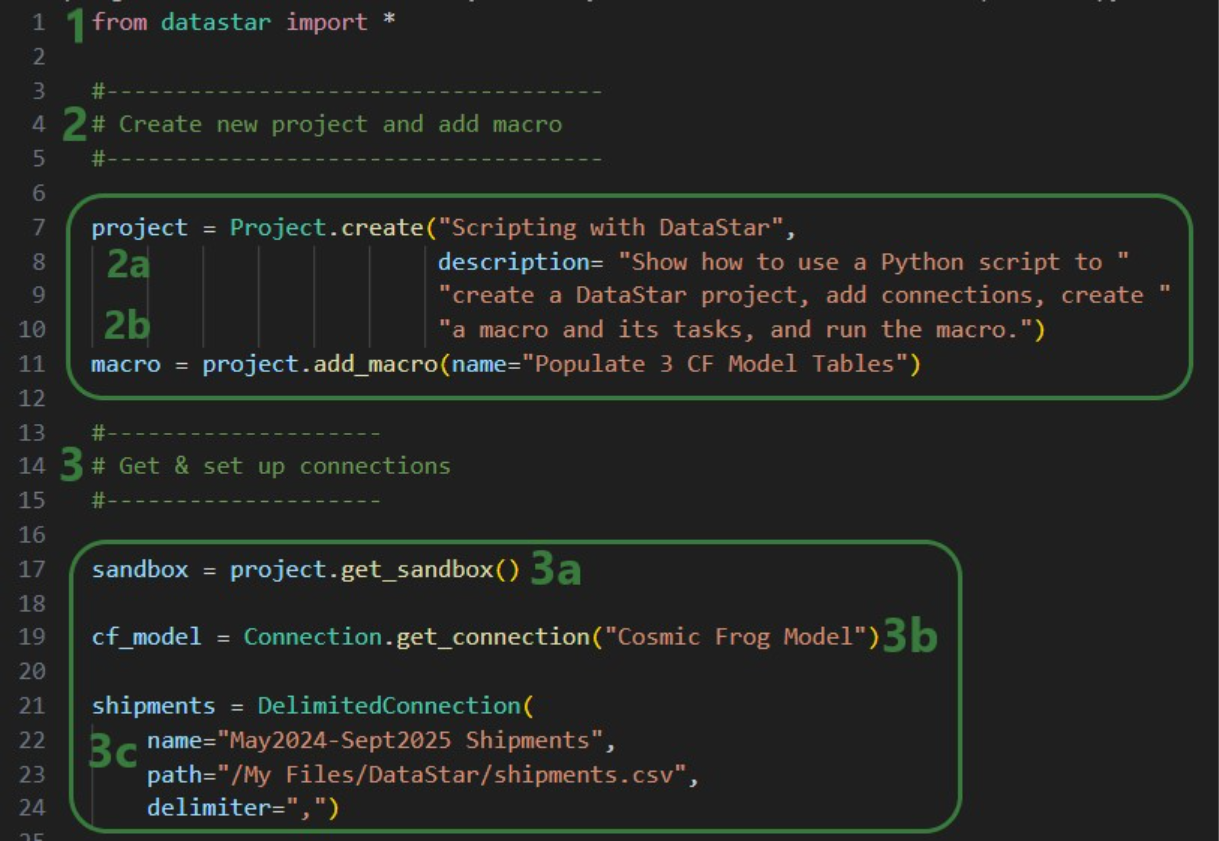

In this documentation, we will start with a high-level overview of the DataStar building blocks. Next, creating projects and data connections will be covered before diving into the details of adding tasks and chaining them together into macros, which can then be run to accomplish the data goals of your project.

Please see this "Getting Started with DataStar: Application Overview" video for a quick 5-minute overview of DataStar.

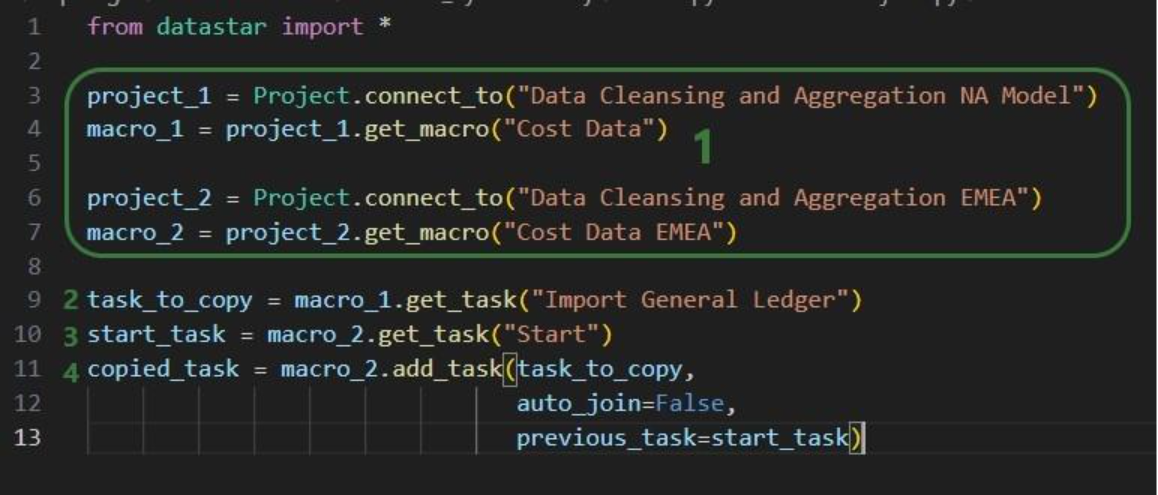

Before diving into more details in later sections, this section will describe the main building blocks of DataStar, which include Data Connections, Projects, Macros, and Tasks.

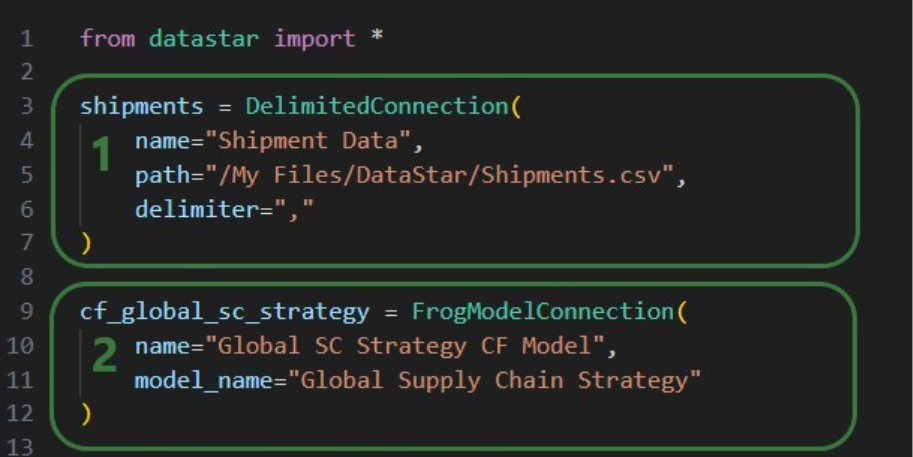

Since DataStar is all about working with data, Data Connections are an important part of DataStar. These enable users to quickly connect to and pull in data from a range of data sources. Data Connections in DataStar:

Connections to other common data resources such as MySQL, OneDrive, SAP, and Snowflake will become available as built-in connection types over time. Currently, these data sources can be connected to by using scripts that pull them in from the Optilogic side or using ETL tools or automation platforms that push data onto the Optilogic platform. Please see the "DataStar: Data Integration" article for more details on working with both local and external data sources.

Users can check the Resource Library for the currently available template scripts and utilities. These can be copied to your account or downloaded and after a few updates around credentials, etc. you will be able to start pulling data in from external sources:

Projects are the main container of work within DataStar. Typically, a Project will aim to achieve a certain goal by performing all or a subset of importing specific data, then cleansing, transforming & blending it, and finally publishing the results to another file/database. The scope of DataStar Projects can vary greatly; consider the following 2 examples:

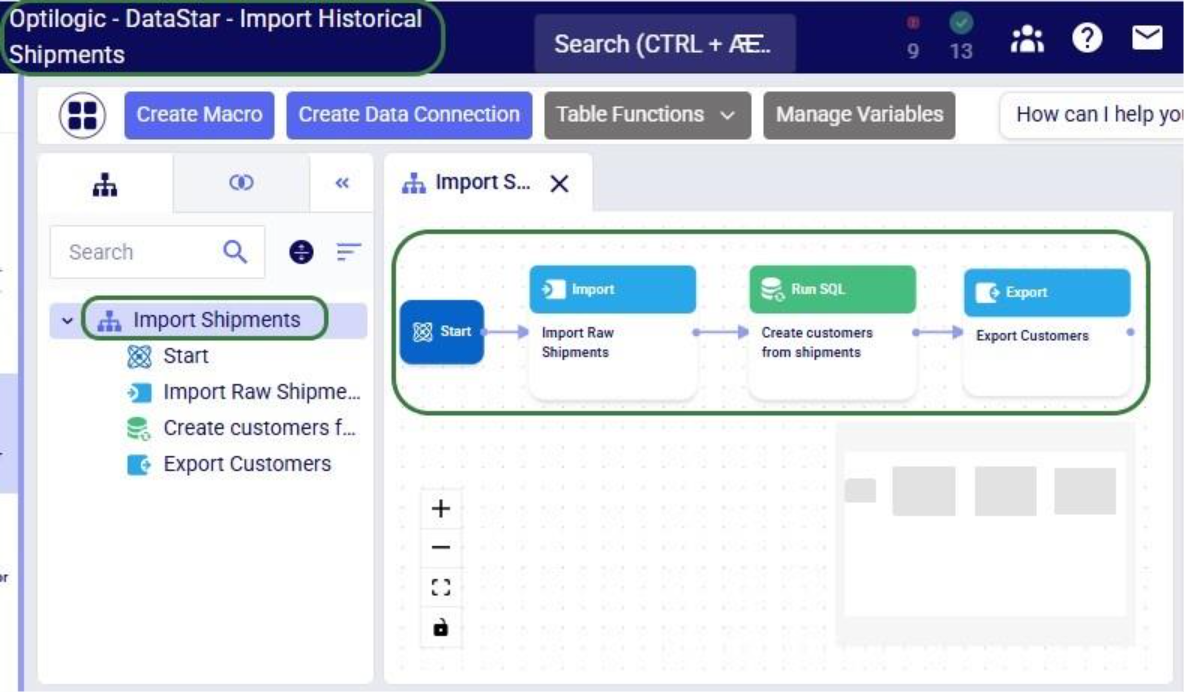

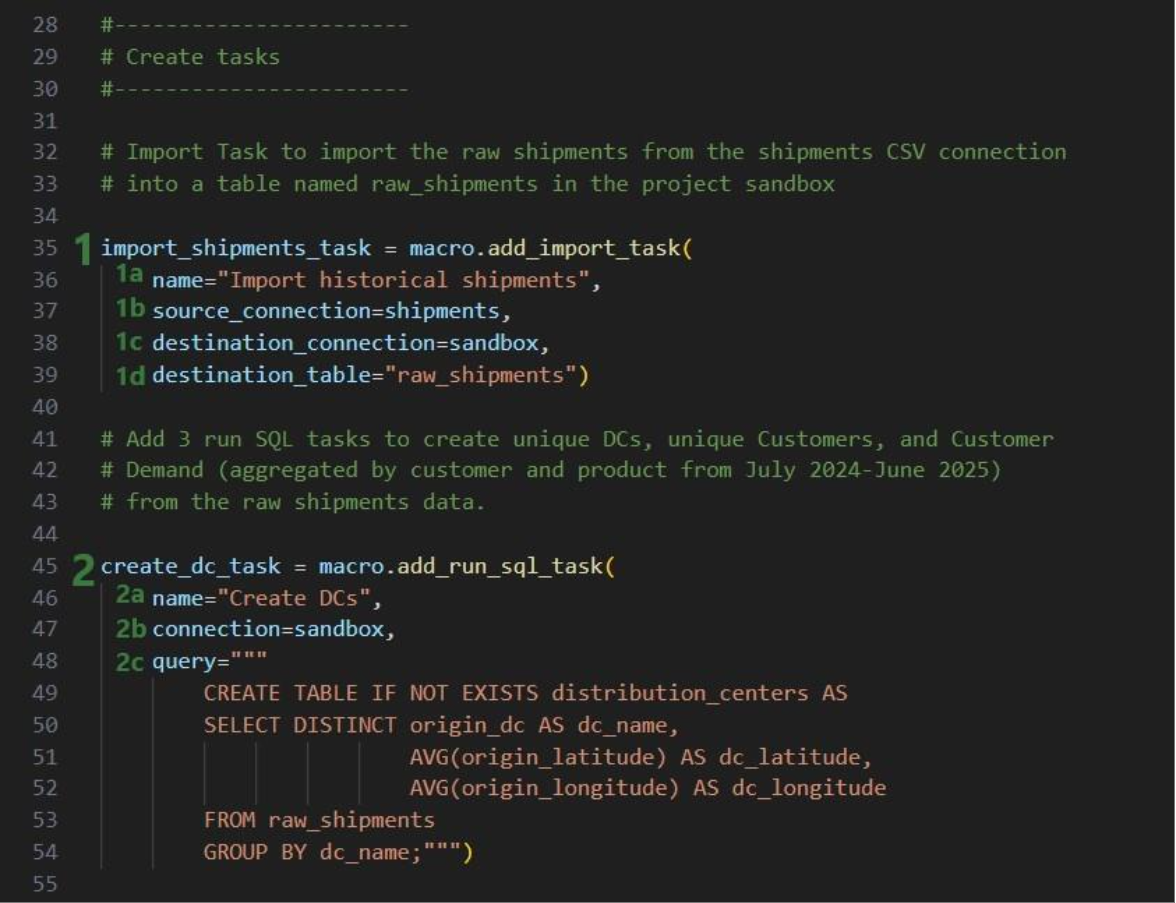

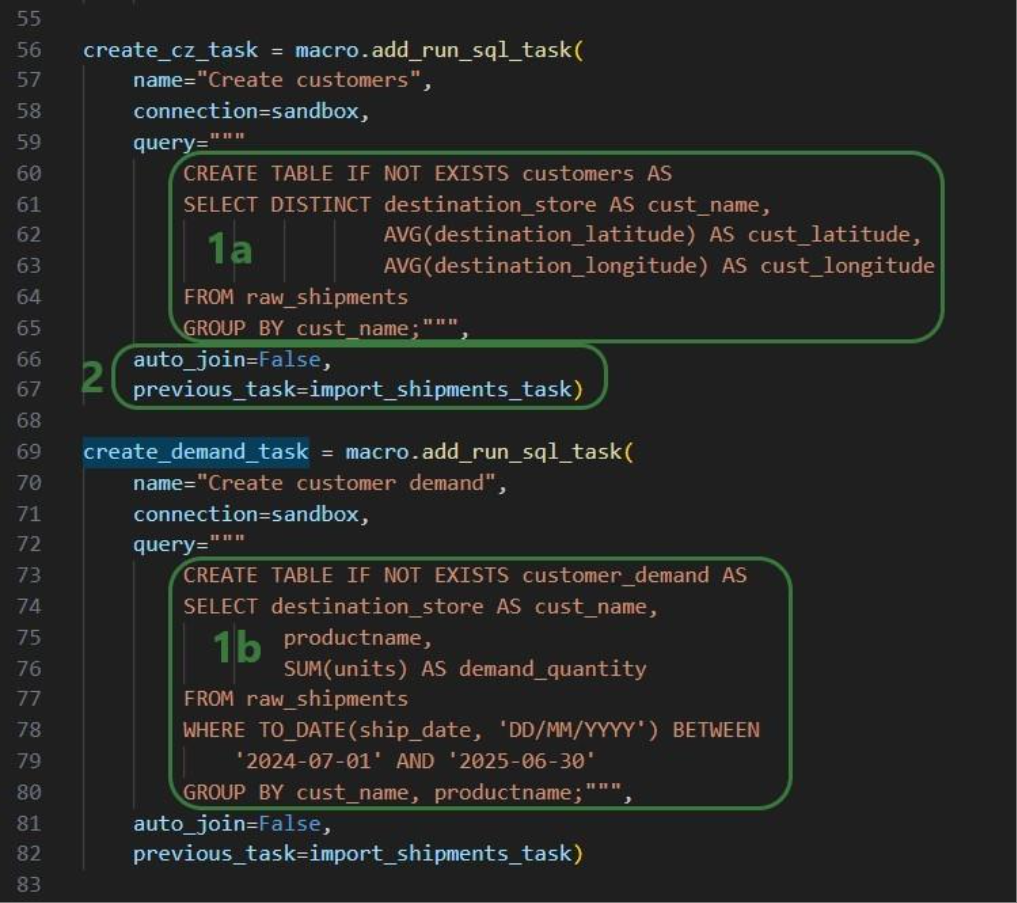

Projects consist of one or multiple macros, which in turn consist of one or multiple tasks. Tasks are the individual actions or steps which can be chained together within a macro to accomplish a specific goal.

The next screenshot shows an example Macro called "Transportation Policies" which consists of 7 individual tasks that are chained together to create transportation policies for a Cosmic Frog model from imported Shipments and Costs data:

Every project by default contains a Data Connection named Project Sandbox. This data connection is not global to all DataStar projects; it is specific to the project it is part of. The Project Sandbox is a Postgres database into which users generally import raw data from the other data connections. There they perform transformations and save intermediate states of the data, and then publish the results out to a Cosmic Frog model (which is a data connection different than the Project Sandbox connection). It is also possible that some of the data in the Project Sandbox is the final result/deliverable of the DataStar Project, or that the results are published into a different type of file or system that is set up as a data connection rather than into a Cosmic Frog model.
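The containment relationships described above can be pictured with a small data model. This is only a mental-model sketch, not DataStar's internal representation or an Optilogic API; the class names, fields, and the sample macro and task names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    name: str
    kind: str  # e.g. "Import" or "Run SQL"


@dataclass
class Macro:
    name: str
    tasks: List[Task] = field(default_factory=list)  # run in chained order


@dataclass
class Project:
    name: str
    macros: List[Macro] = field(default_factory=list)
    # Every project gets its own Project Sandbox connection by default.
    data_connections: List[str] = field(default_factory=lambda: ["Project Sandbox"])


macro = Macro("Example Macro", [
    Task("Import Shipments", "Import"),
    Task("Build Customers", "Run SQL"),
])
project = Project("Example Project", macros=[macro])
print([t.name for m in project.macros for t in m.tasks])
```

Using a default factory for `data_connections` means each project receives its own list, mirroring the point that the Project Sandbox is specific to one project rather than shared.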

The next diagram shows how Data Connections, Projects, and Macros relate to each other in DataStar:

As also referenced above, please see the "DataStar: Data Integration" article to learn more about working with both local and external data.

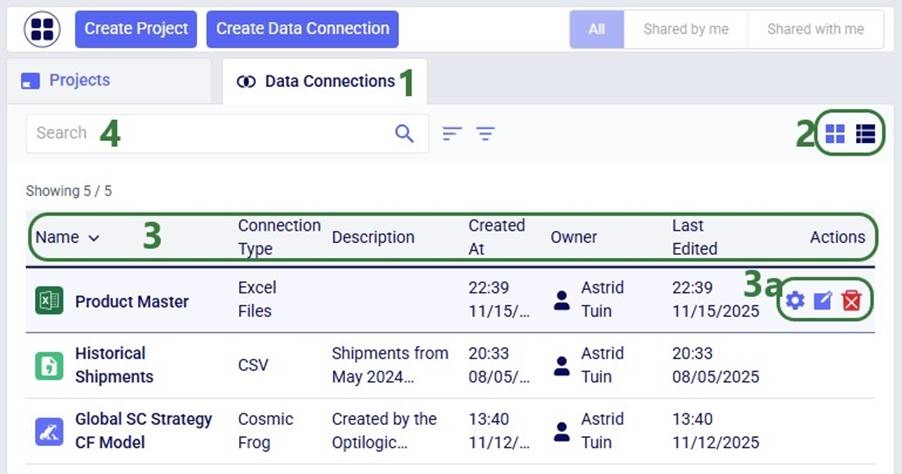

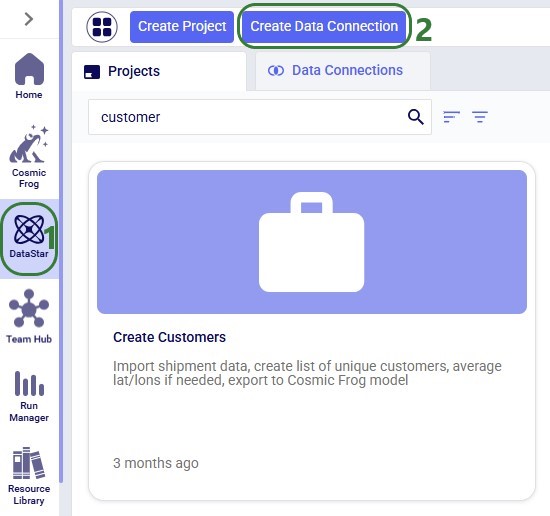

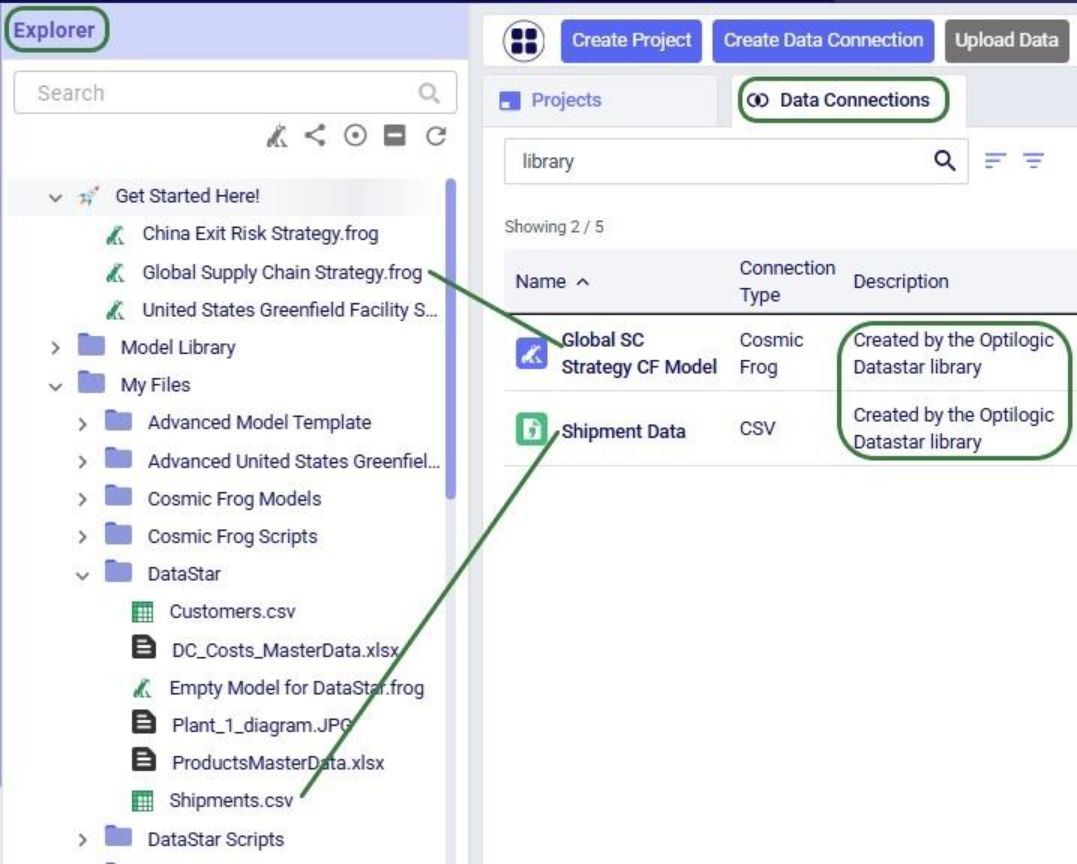

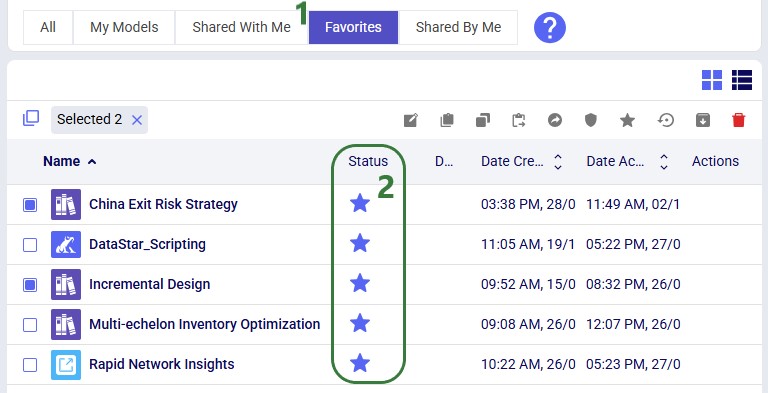

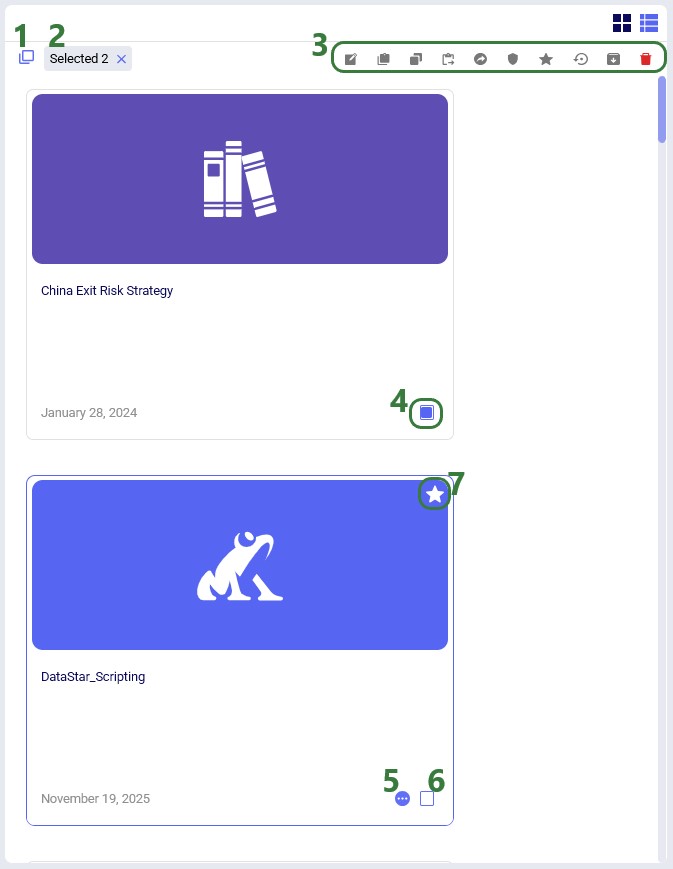

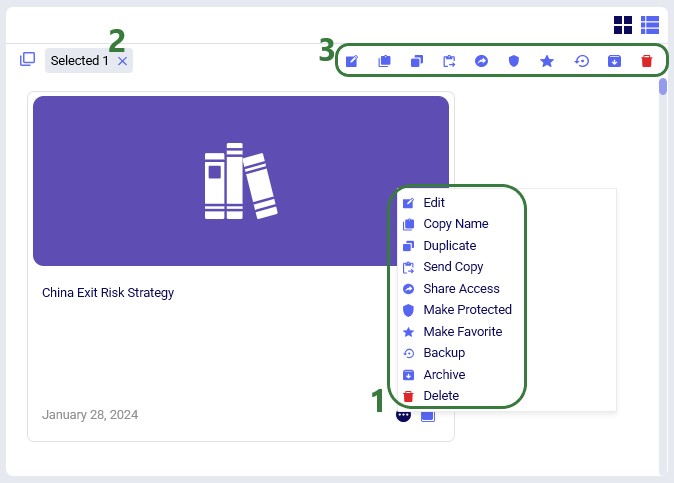

On the start page of DataStar, the user will be shown their existing projects and data connections. These can be opened or deleted here, and users can also create new projects and data connections from this start page.

The next screenshot shows the existing projects in card format:

New projects can be created by clicking on the Create Project button in the toolbar at the top of the DataStar application:

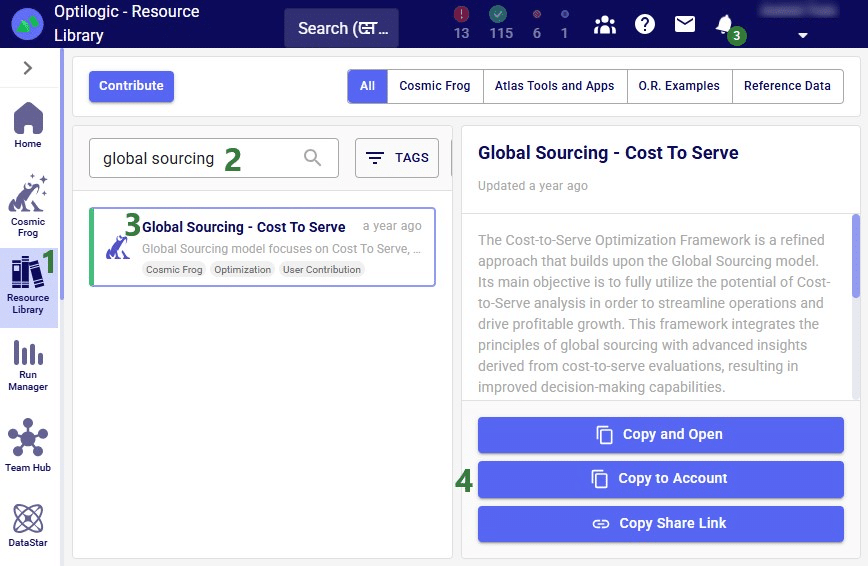

If on the Create Project form a user decides they want to use a Template Project rather than a new Empty Project, it works as follows:

These template projects are also available on Optilogic's Resource Library:

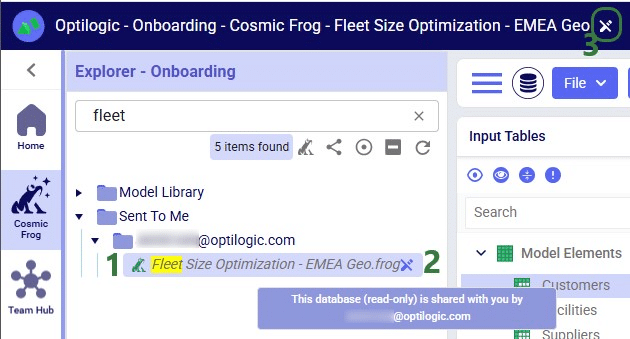

After the copy process completes, we can see the project appear in the Explorer and in the Project list in DataStar:

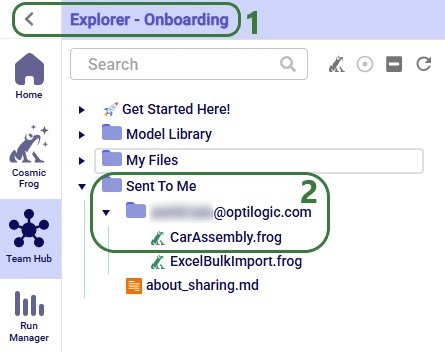

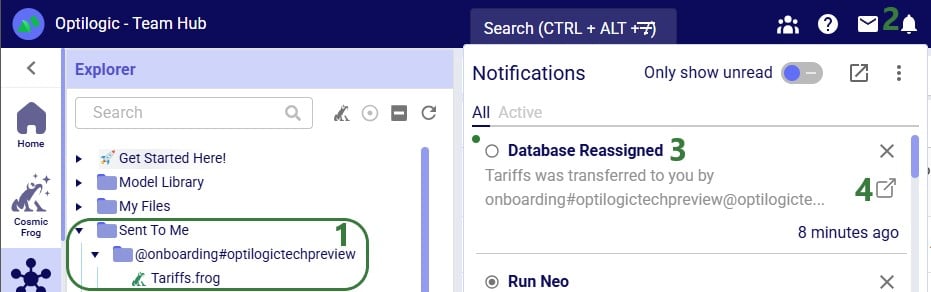

Note that any files needed for data connections in template projects copied from the Resource Library can be found under the "Sent to Me" folder in the Explorer. They will be in a subfolder named @datastartemplateprojects#optilogic (the sender of the files).

The next screenshot shows the Data Connections that have already been set up in DataStar in list view:

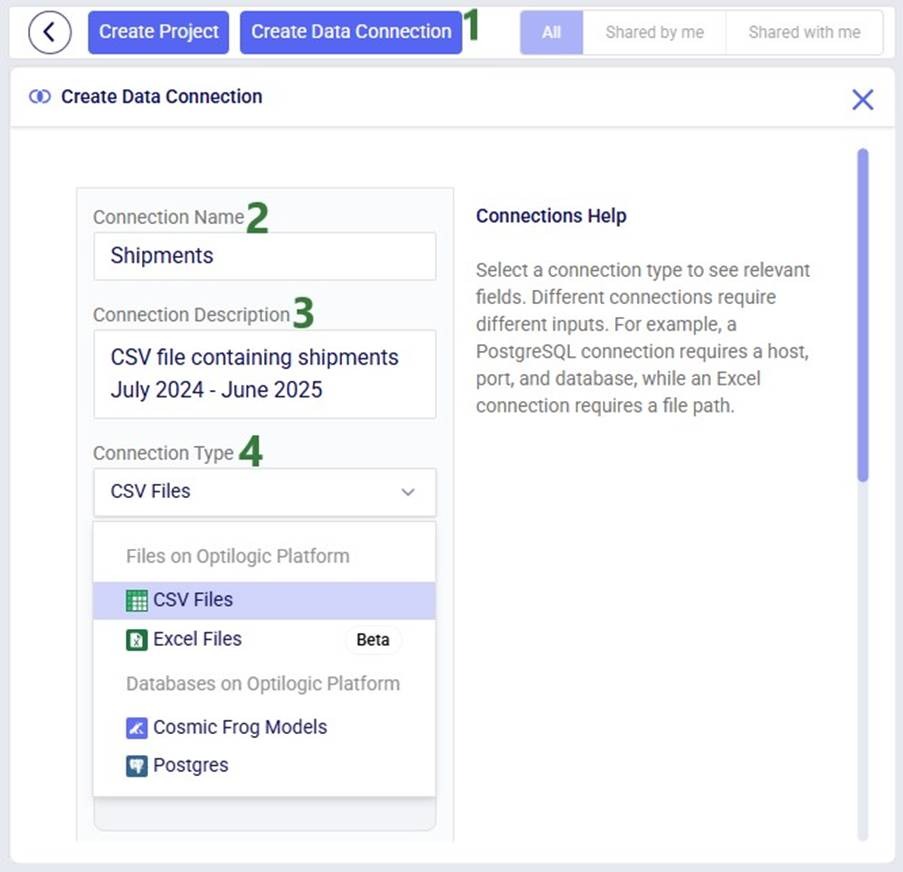

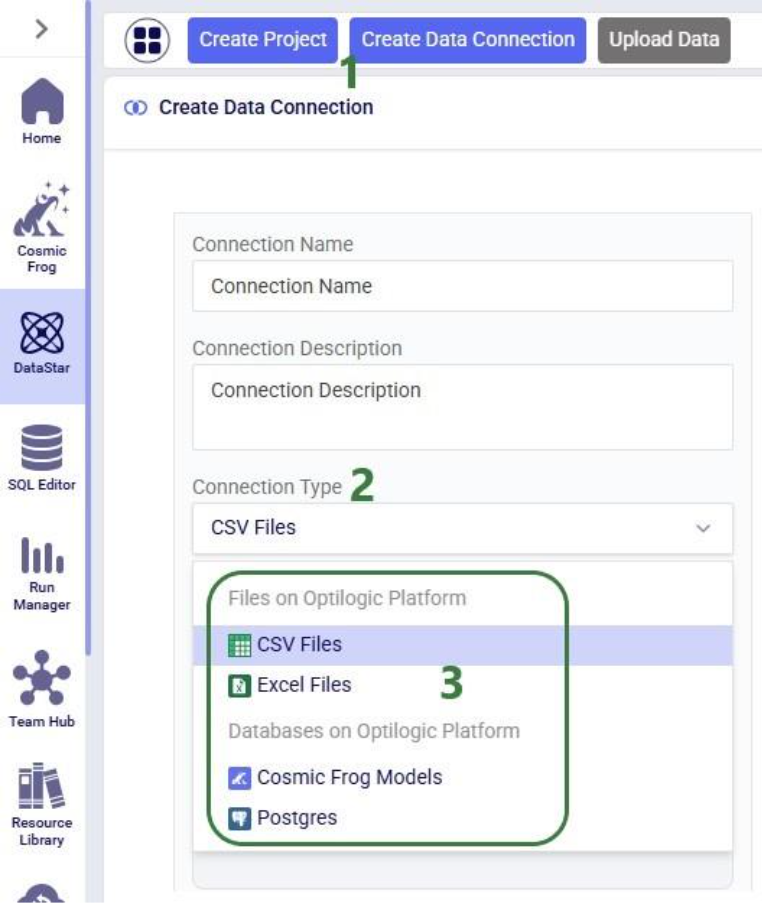

New data connections can be created by clicking on the Create Data Connection button in the toolbar at the top of the DataStar application:

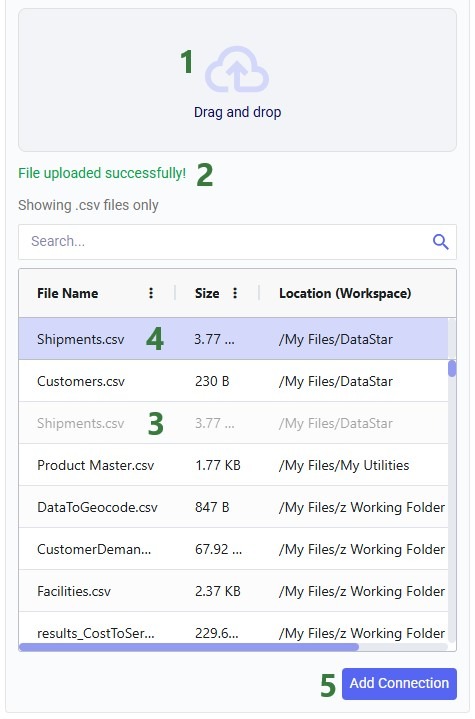

The remainder of the Create Data Connection form will change depending on the type of connection that was chosen as different types of connections require different inputs (e.g. host, port, server, schema, etc.). In our example, the user chooses CSV Files as the connection type:

In our walk-through here, the user drags and drops a Shipments.csv file from their local computer on top of the Drag and drop area:

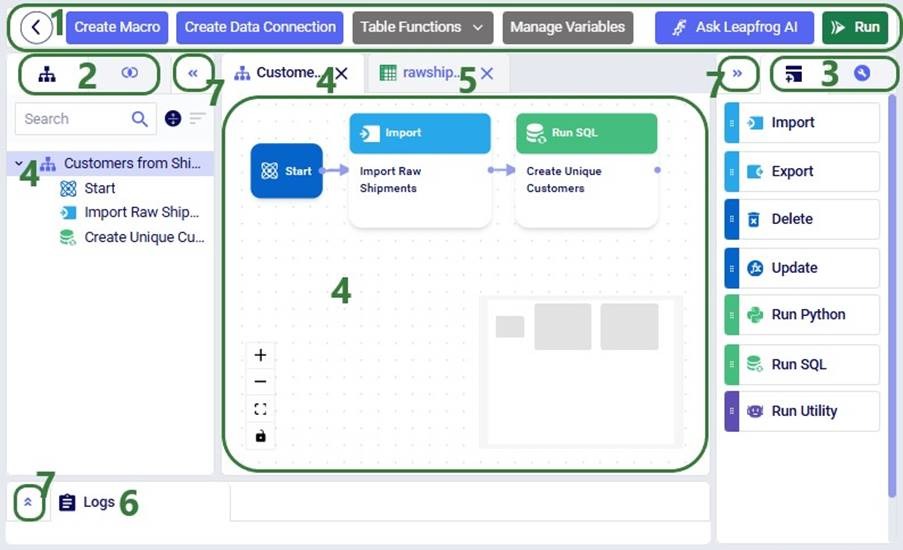

Now let us look at a project when it is open in DataStar. We will first get a lay of the land with a high-level overview screenshot and then go into more detail for the different parts of the DataStar user interface:

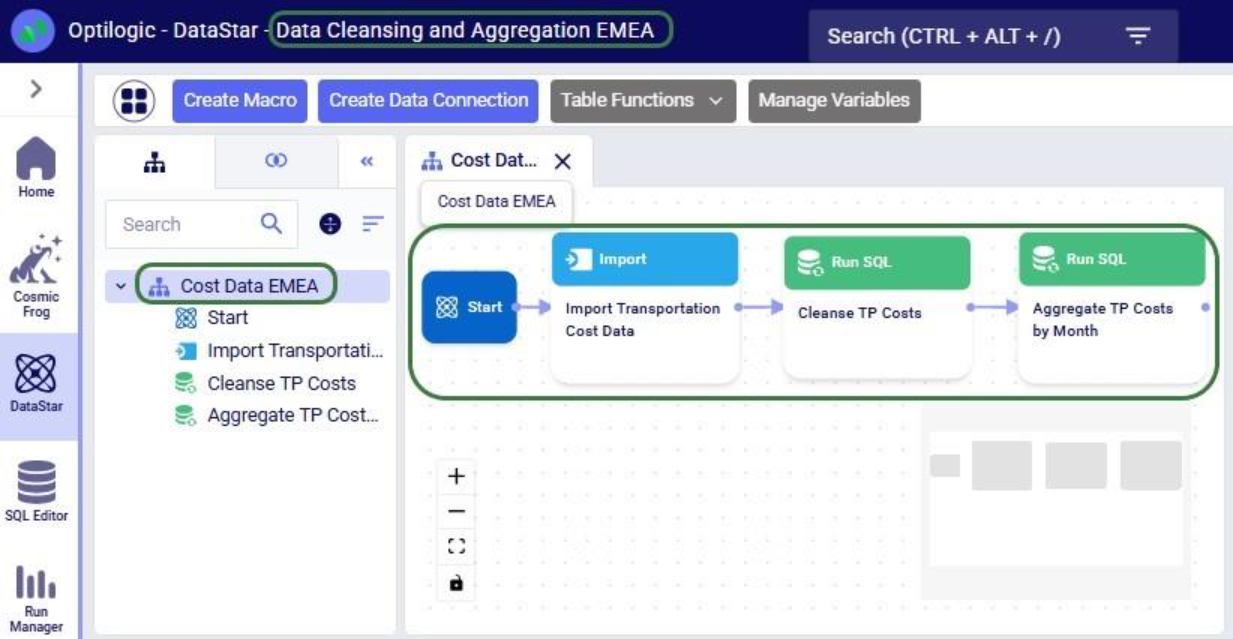

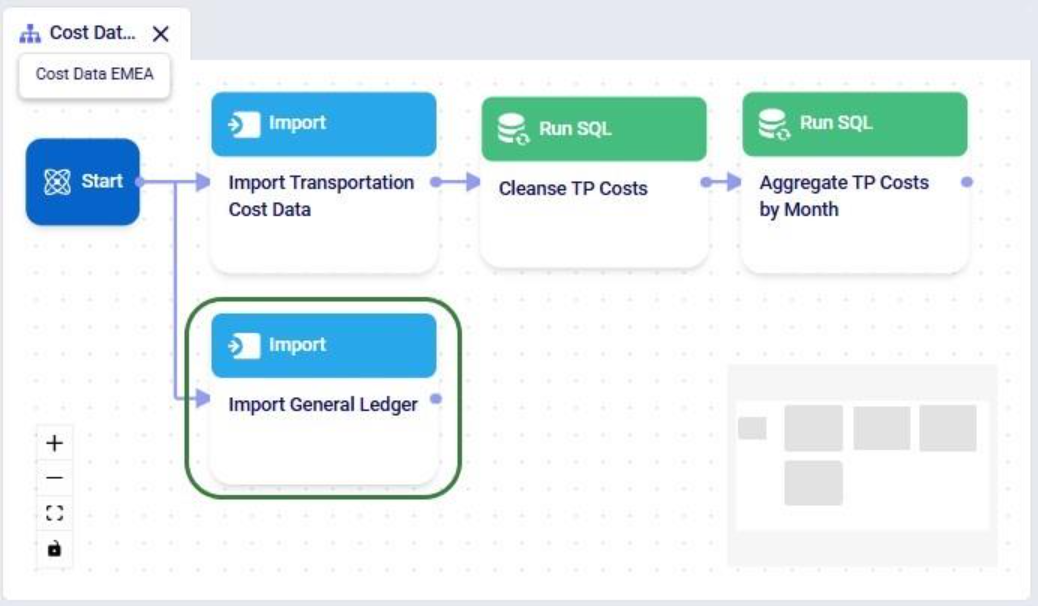

Next, we will dive a bit deeper into a macro:

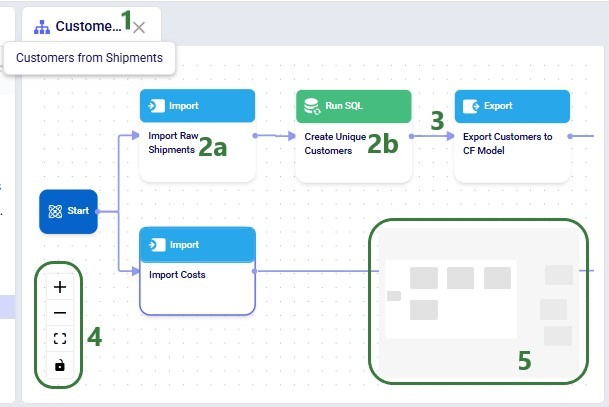

The Macro Canvas for the Customers from Shipments macro is shown in the following screenshot:

In addition to the above, please note the following regarding the Macro Canvas:

We will move on to covering the 2 tabs on the right-hand side pane, starting with the Tasks tab. Keep in mind that in the descriptions of the tasks below, the Project Sandbox is a Postgres database connection. The following tasks are currently available:

Users can click on a task in the tasks list and then drag and drop it onto the macro canvas to incorporate it into a macro. Once added to a macro, a task needs to be configured; this will be covered in the next section.

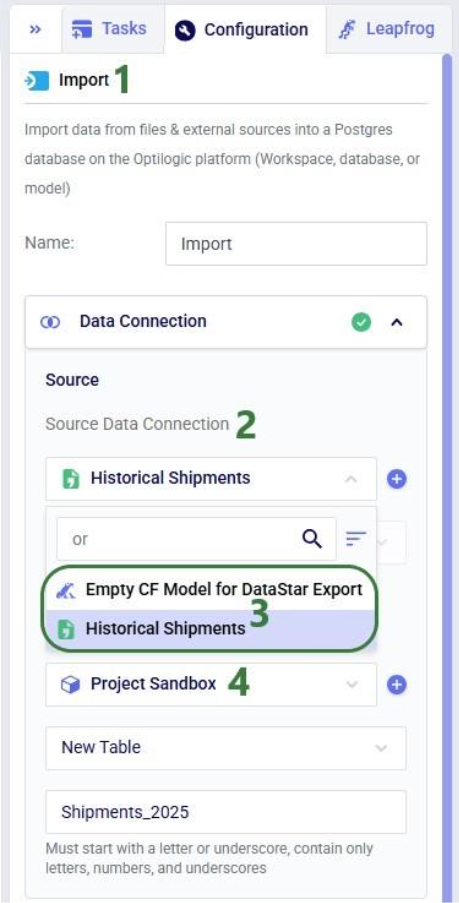

When adding a new task, it needs to be configured, which can be done on the Configuration tab. When a task is newly dropped onto the Macro Canvas, its Configuration tab is automatically opened on the right-hand side pane. To make the Configuration tab of an already existing task active, click on the task in the Macros tab on the left-hand side pane or click on the task in the Macro Canvas. The configuration options will differ by type of task; here the Configuration tab of an Import task is shown as an example:

Please note that:

The following table provides an overview of what connection type(s) can be used as the source / destination / target connection by which task(s), where PG is short for a PostgreSQL database connection and CF for a Cosmic Frog model connection:

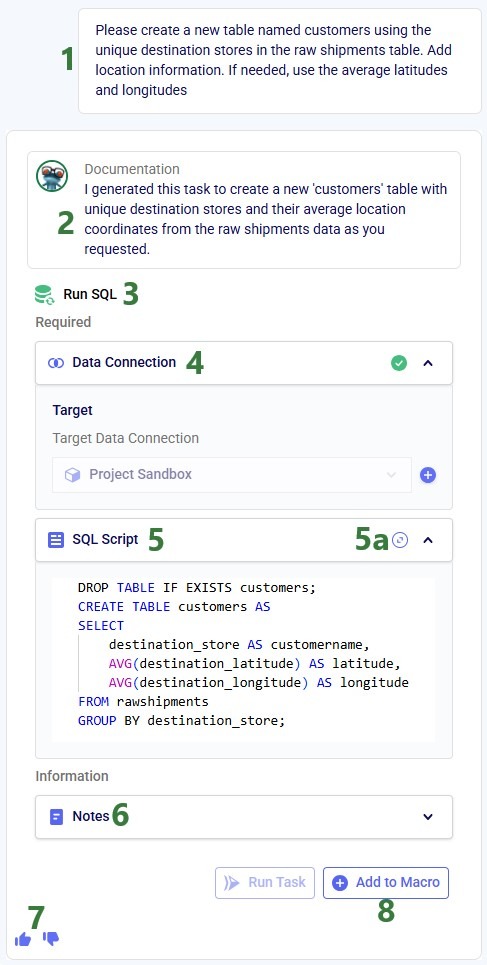

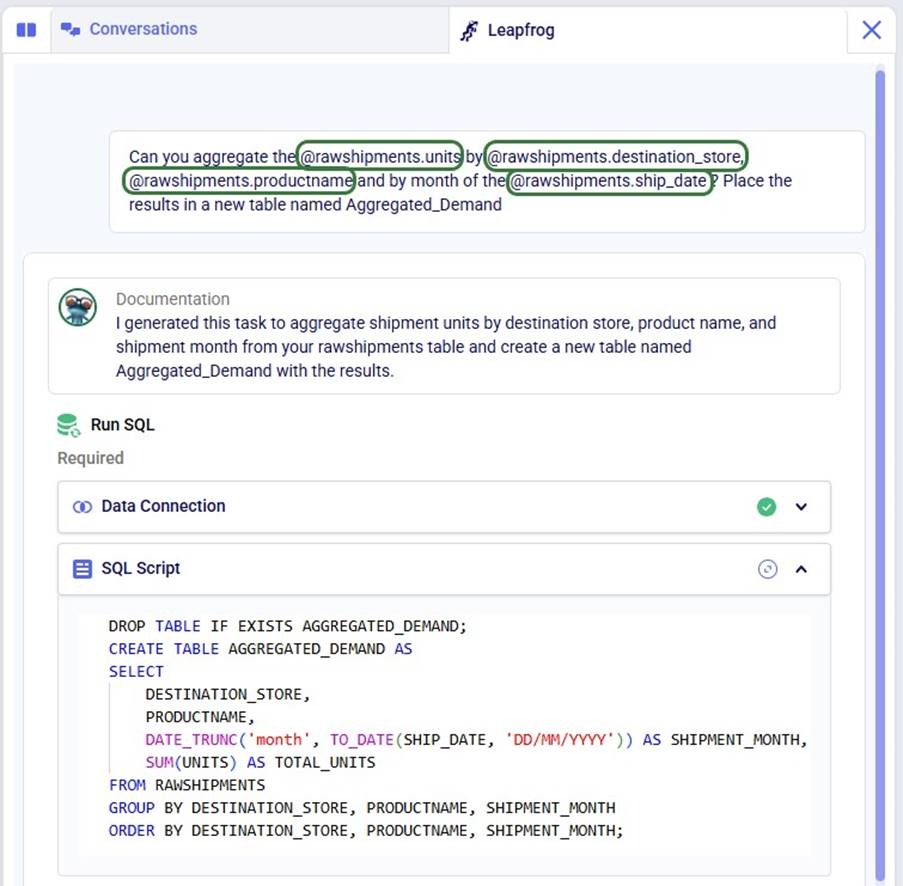

Leapfrog in DataStar (aka D* AI) is an AI-powered feature that transforms natural language requests into executable DataStar Update and Run SQL tasks. Users can describe what they want to accomplish in plain language, and Leapfrog automatically generates the corresponding task query without requiring technical coding skills or manual inputs for task details. This capability enables both technical and non-technical users to efficiently manipulate data, build Cosmic Frog models, and extract insights through conversational interactions with Leapfrog within DataStar.

Note that there are 2 appendices at the end of this documentation where 1) details around Leapfrog in DataStar's current features & limitations are covered and 2) Leapfrog's data usage and security policies are summarized.

Leapfrog’s response to this prompt is as follows:

    DROP TABLE IF EXISTS customers;
    CREATE TABLE customers AS
    SELECT
        destination_store AS customer,
        AVG(destination_latitude) AS latitude,
        AVG(destination_longitude) AS longitude
    FROM rawshipments
    GROUP BY destination_store

To help users write prompts, the tables present in the Project Sandbox and their columns can be accessed from the prompt writing box by typing an @:

This user used the @ functionality repeatedly to write their prompt as follows, which helped to generate their required Run SQL task:

Now, we will also have a look at the Conversations tab while showing the 2 tabs in Split view:

Within a Leapfrog conversation, Leapfrog remembers the prompts and responses thus far. Users can therefore build upon previous questions, for example by following up with a prompt along the lines of “Like that, but instead of using a cutoff date of August 10, 2025, use September 24, 2025”.
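To see what the generated customers query shown earlier does, the sketch below runs it against a tiny in-memory table. SQLite stands in for the Postgres Project Sandbox here purely so the example is self-contained, and the three sample shipment rows are invented; only the table and column names come from the query itself.

```python
import sqlite3

# In-memory SQLite database as a stand-in for the Project Sandbox (assumption).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE rawshipments (
    destination_store TEXT,
    destination_latitude REAL,
    destination_longitude REAL
);
-- Made-up sample shipments: two to Store_A, one to Store_B.
INSERT INTO rawshipments VALUES
    ('Store_A', 40.0, -75.0),
    ('Store_A', 40.2, -75.2),
    ('Store_B', 42.0, -71.0);

-- The query from Leapfrog's response.
DROP TABLE IF EXISTS customers;
CREATE TABLE customers AS
SELECT
    destination_store AS customer,
    AVG(destination_latitude) AS latitude,
    AVG(destination_longitude) AS longitude
FROM rawshipments
GROUP BY destination_store;
""")

rows = conn.execute(
    "SELECT customer, latitude, longitude FROM customers ORDER BY customer"
).fetchall()
for row in rows:
    print(row)
```

Each destination store becomes one customer record, and averaging the coordinates smooths out small differences in how the same store was geocoded across shipments.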

Additional helpful DataStar Leapfrog links:

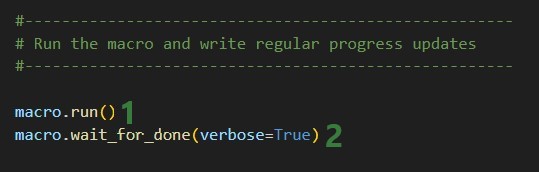

Users can run a Macro by selecting it and then clicking on the green Run button at the top right of the DataStar application:

Please note that:

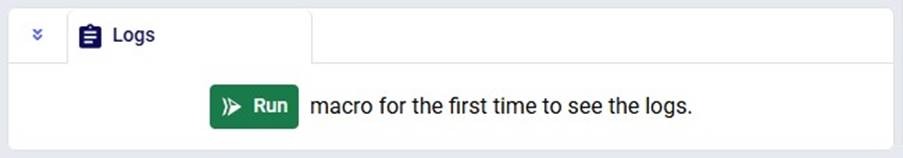

Next, we will cover the Logs tab at the bottom of the Macro Canvas where logs of macros that are running/have been run can be found:

When a macro has not yet been run, the Logs tab will contain a message with a Run button, which can also be used to kick off a macro run. When a macro is running or has been run, the log will look similar to the following:

The next screenshot shows the log of a run of the same macro where the third task ended in an error:

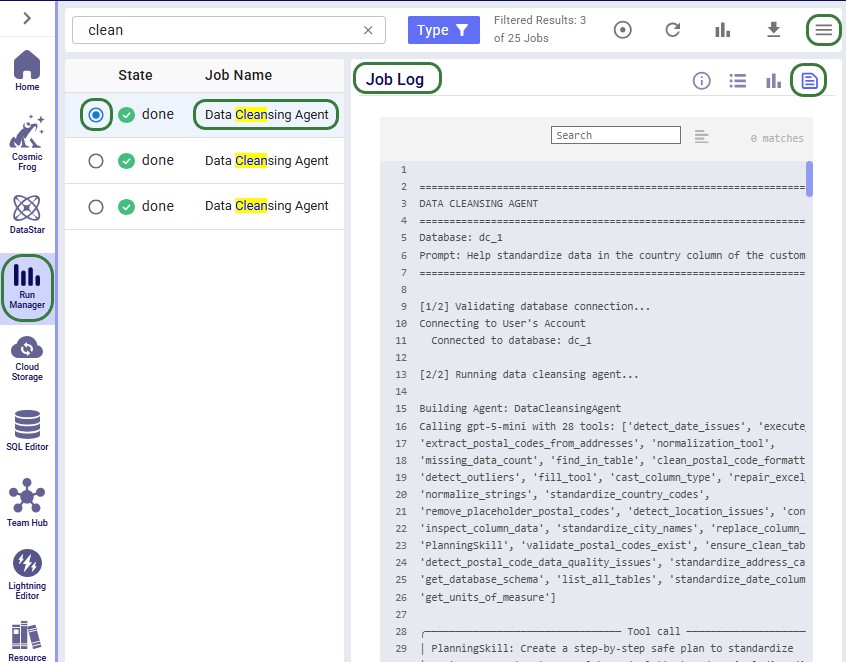

The progress of DataStar macro and task runs can also be monitored in the Run Manager application where runs can be cancelled if needed too:

Please note that:

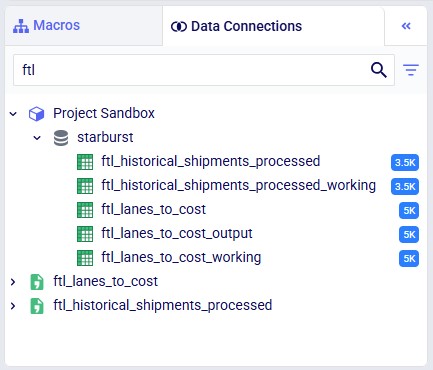

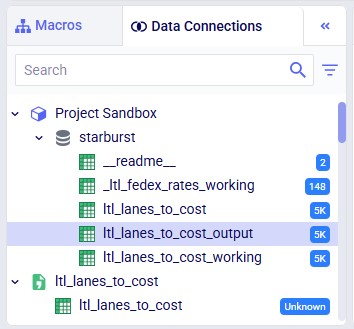

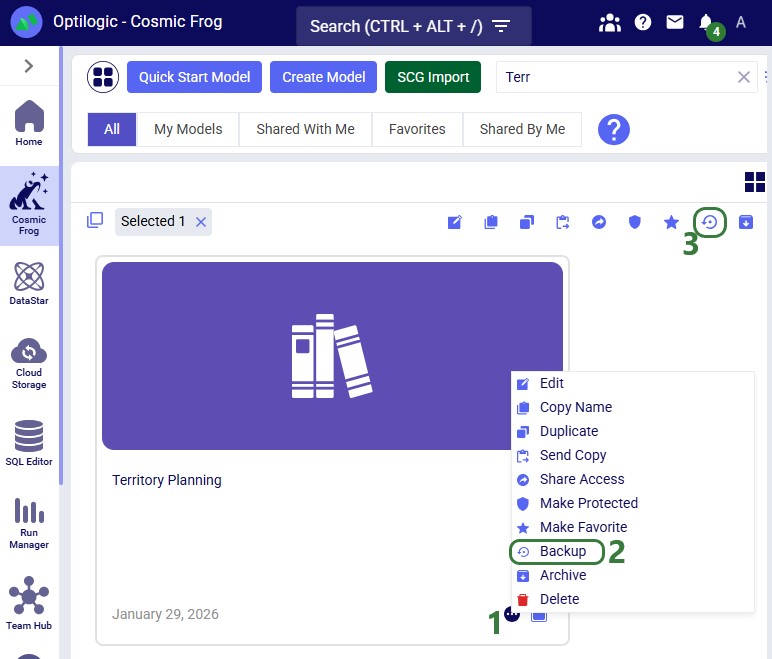

In the Data Connections tab on the left-hand side pane the available data connections are listed:

Next, we will have a look at what the connections list looks like when the connections have been expanded:

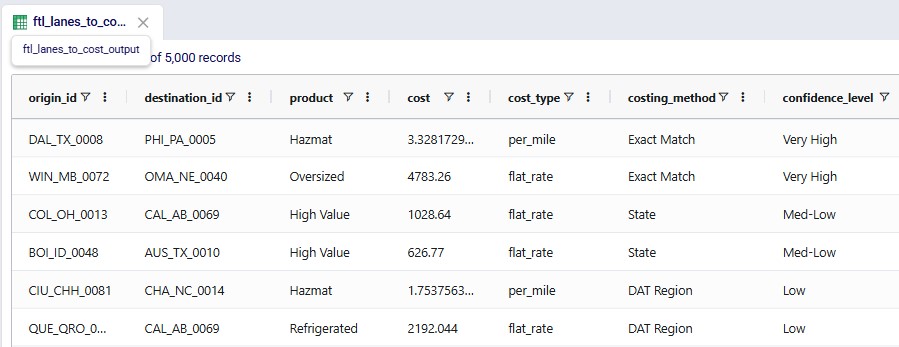

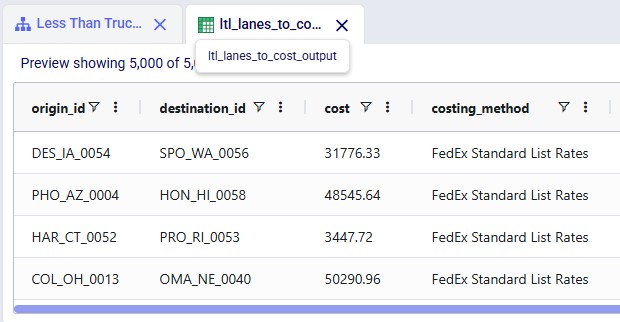

The tables within a connection can be opened within DataStar. They are then displayed in the central part of DataStar where the Macro Canvas is showing when a macro is the active tab.

Please note:

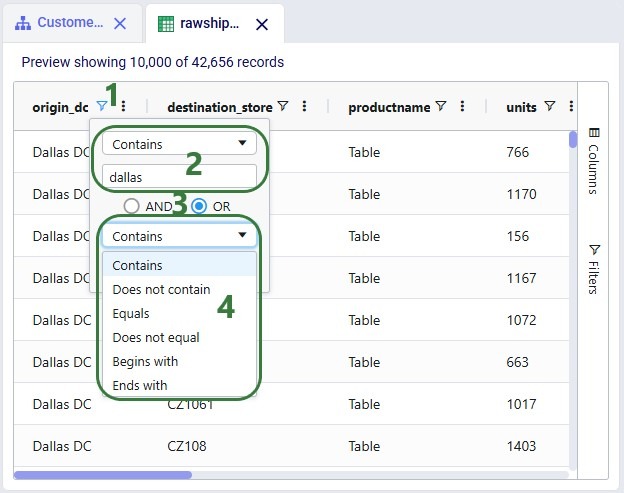

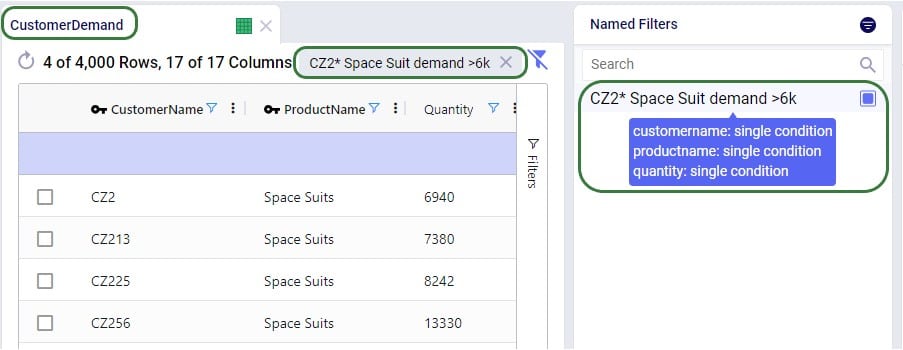

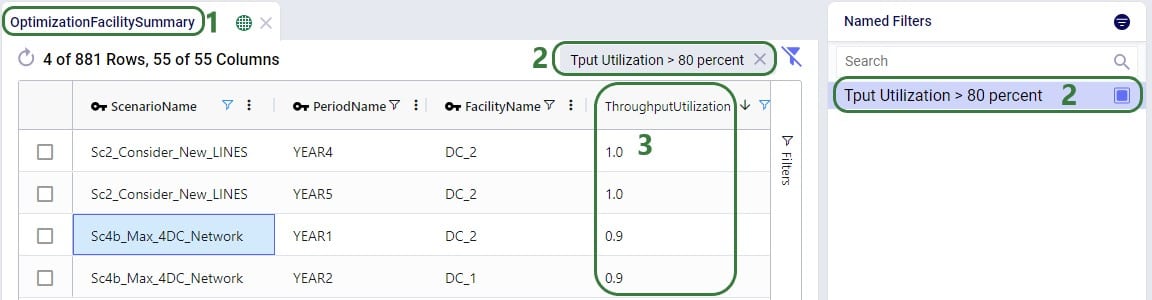

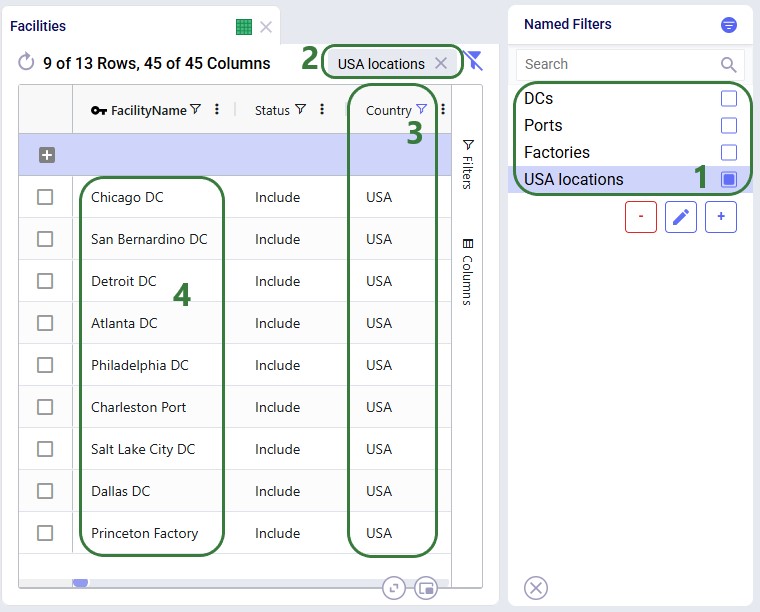

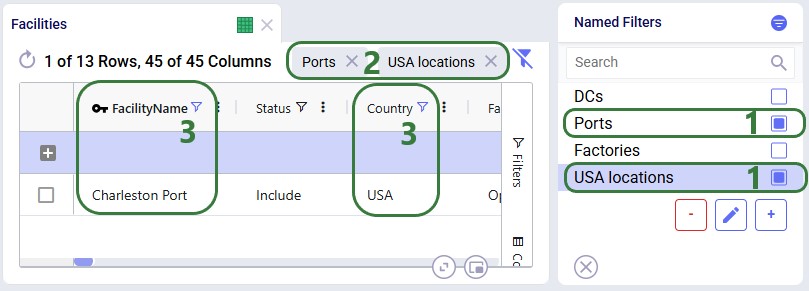

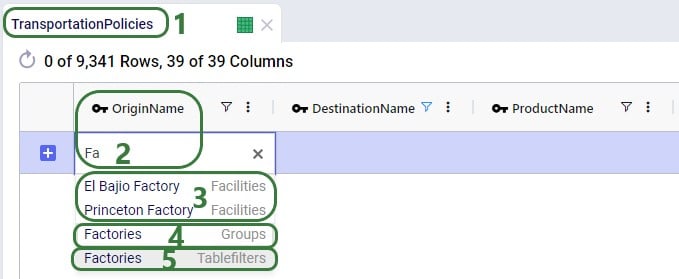

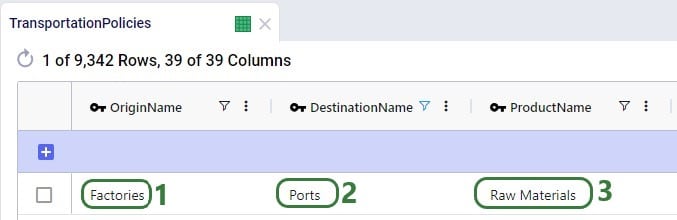

A table can be filtered based on values in one or multiple columns:

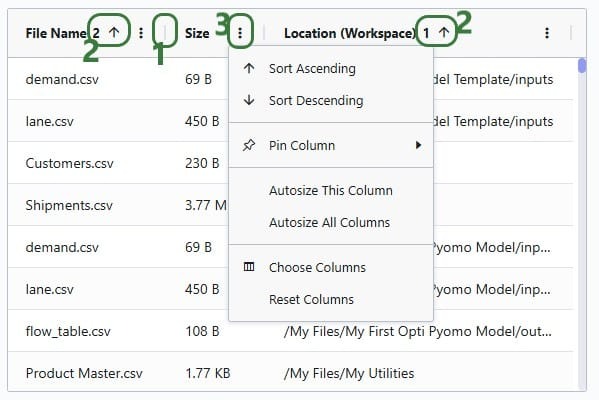

Columns can be re-ordered and hidden/shown as described in the Appendix; this can be done using the Columns fold-out pane too:

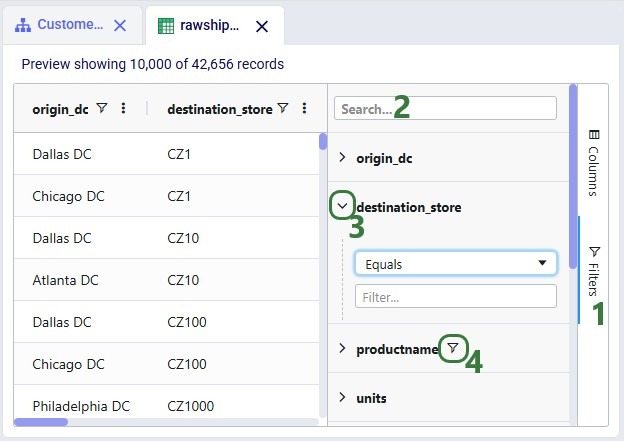

Finally, filters can also be configured from a fold-out pane:

Users can explore the complete dataset of connections with tables larger than 10k records in other applications on the Optilogic platform, depending on the type of connection:

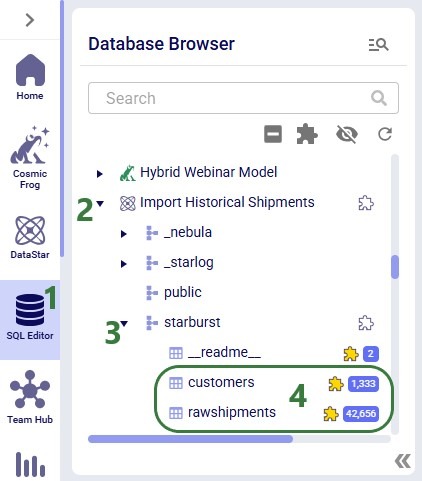

Here is how to find the database and table(s) of interest in SQL Editor:

Here are a few additional links that may be helpful:

We hope you are as excited about starting to work with DataStar as we are! Please stay tuned for regular updates to both DataStar and all the accompanying documentation. As always, for any questions or feedback, feel free to contact our support team at support@optilogic.com.

The grids used in DataStar can be customized, and we will cover the available options using the screenshot below. This screenshot shows the list of CSV files in the user's Optilogic account when creating a new CSV File connection. The same grid options are available on the grid in the Logs tab and when viewing tables that are part of any Data Connections in the central part of DataStar.

Leapfrog's brainpower comes from:

All training processes are owned and managed by Optilogic — no outside data is used.

When you ask Leapfrog a question:

Your conversations (prompts, answers, feedback) are stored securely at the user level.

Exciting tools that drastically shorten the time spent wrangling data, building supply chain models for Cosmic Frog, and analyzing outputs of these models are now available on the Optilogic platform.

This documentation briefly explains how to access these AI Agents and Utilities, lists the available tools with a short description of each, and provides links to detailed documentation for several of these tools.

Before we dive into how to access the AI Agents & Utilities, here are a few links you may find helpful:

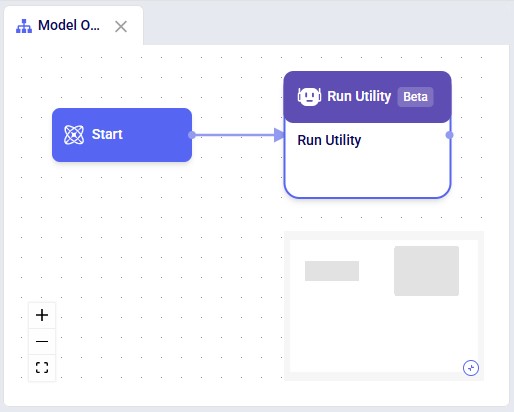

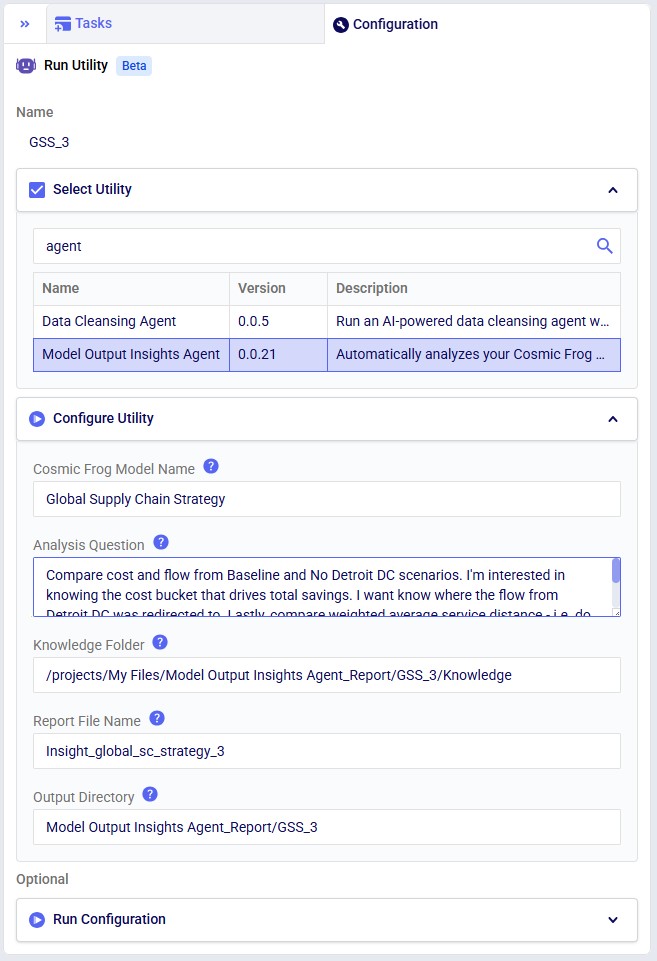

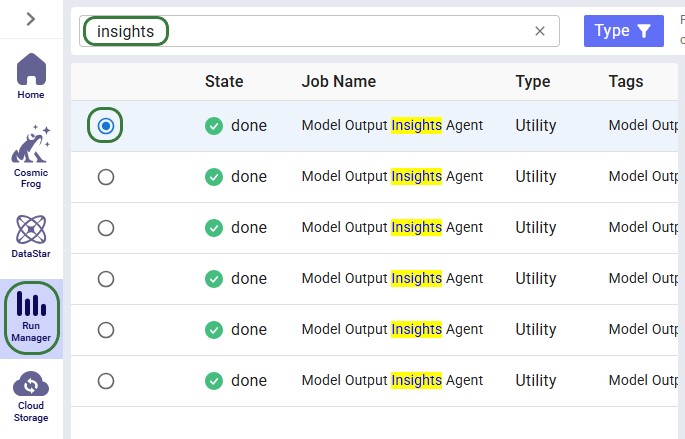

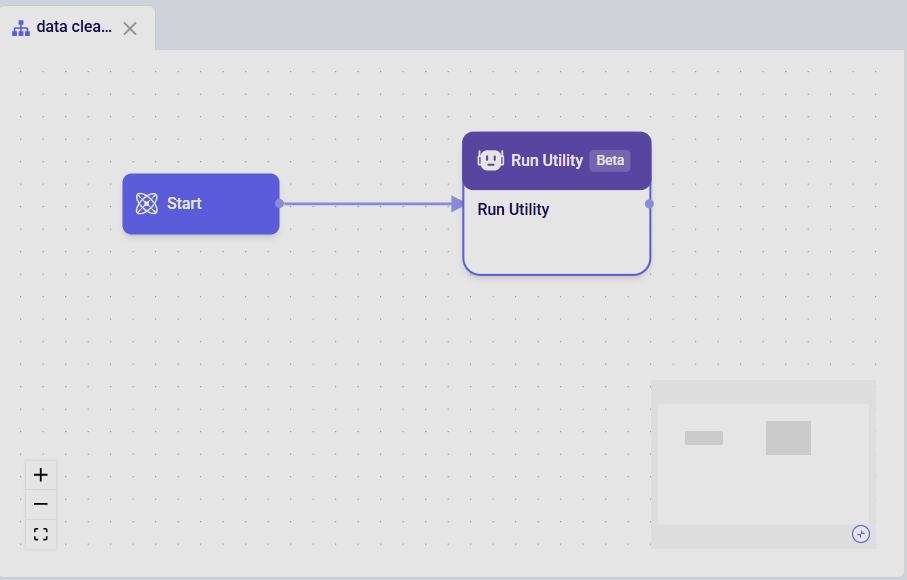

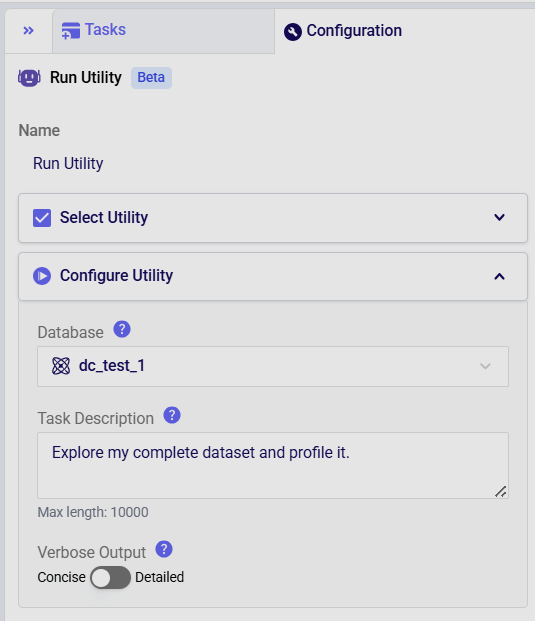

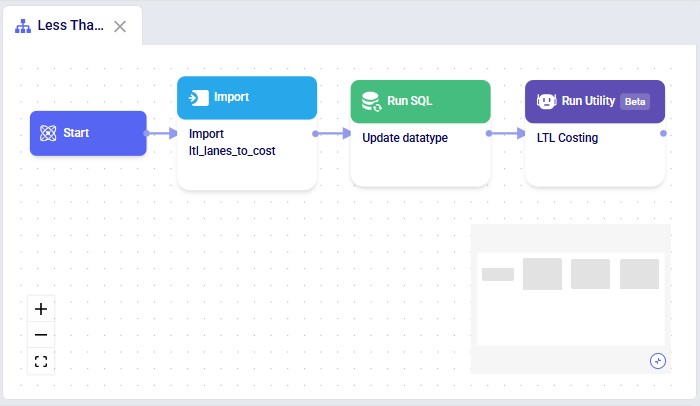

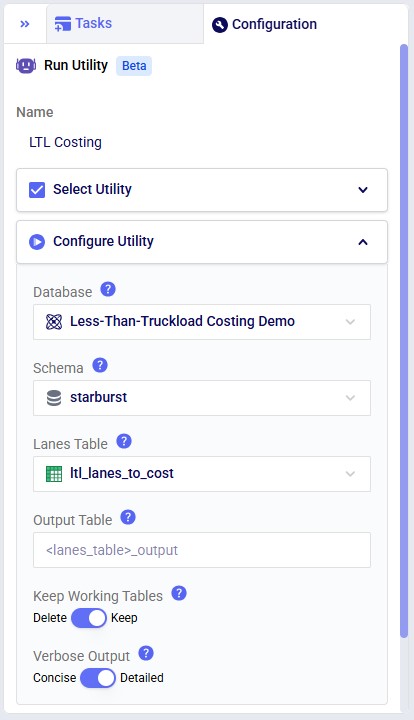

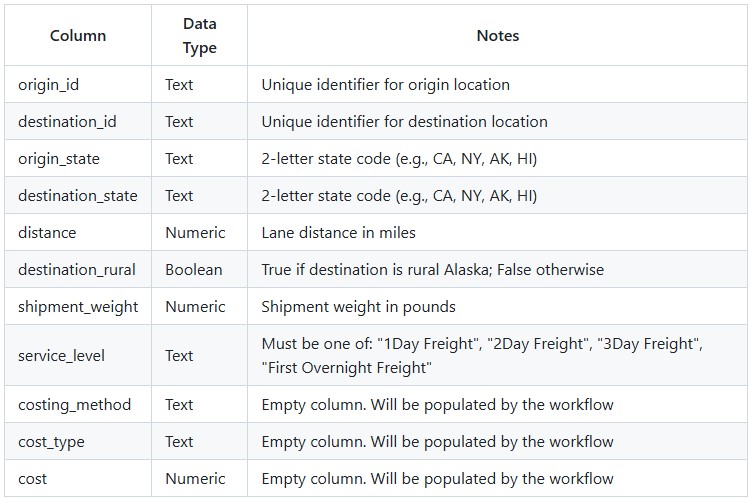

Currently, the available Agents and Utilities are accessed by using Run Utility tasks in DataStar. At a high level, the steps are as follows (screenshots follow beneath):

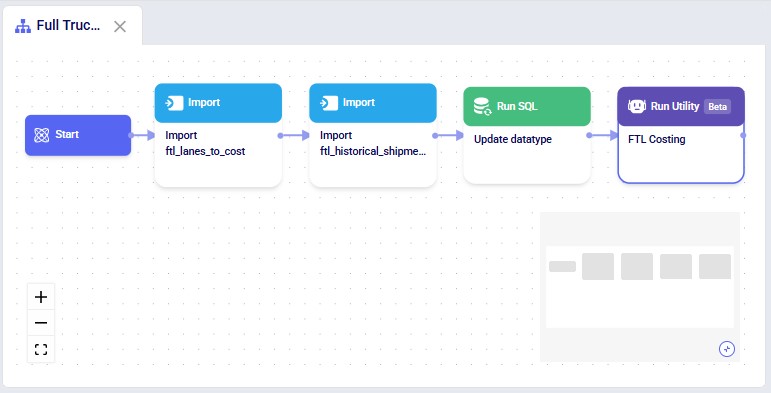

Your macro canvas will look similar to the following screenshot after step #4:

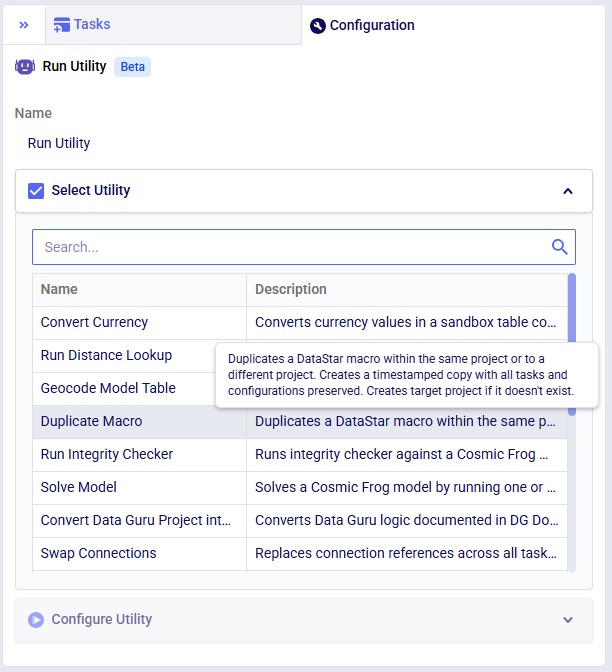

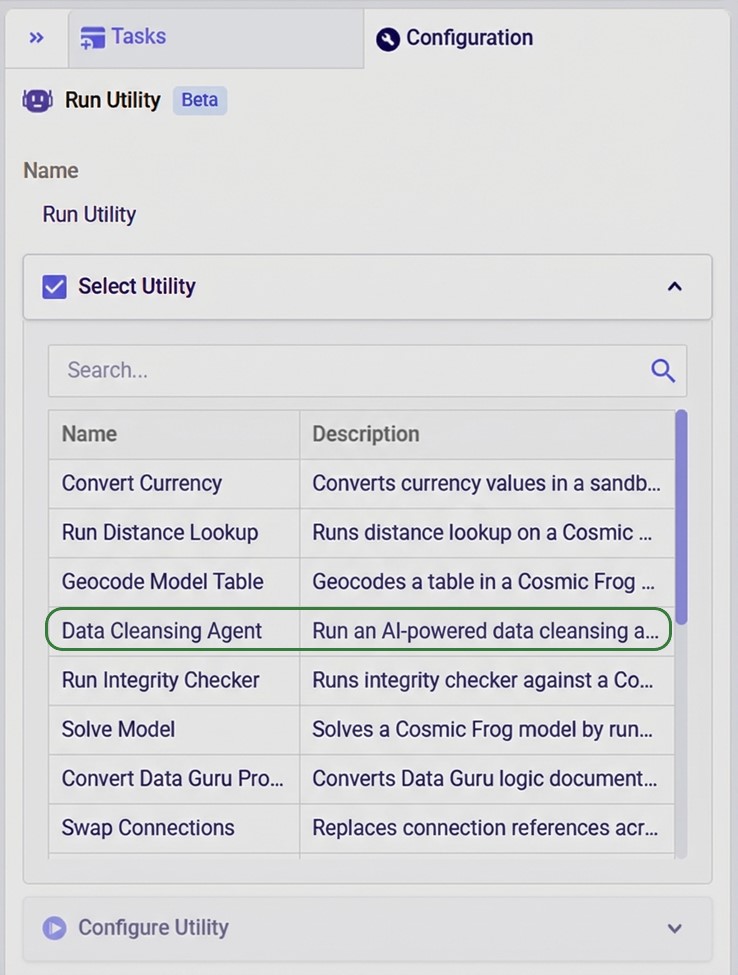

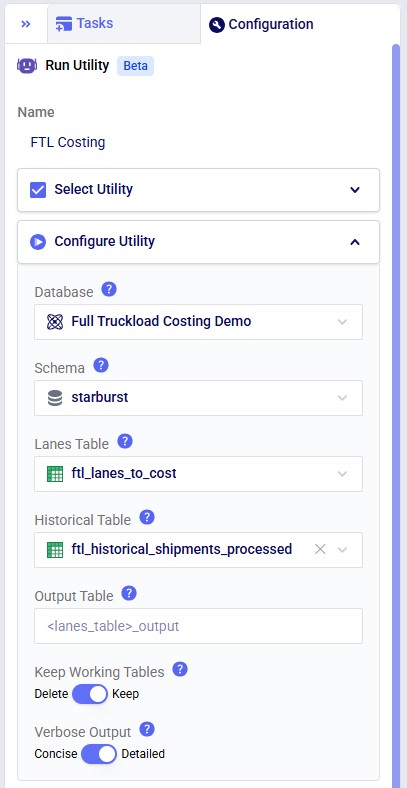

After adding a task, its configuration tab is automatically shown on the right-hand side. Give the task a name, and then select the Agent or Utility you want to use from the list of available Agents/Utilities in the Select Utility section. You can also use the Search box to quickly find any Agent/Utility that contains certain text in its name or description. Hover over the description of an Agent/Utility to see the full description in case it is not entirely visible:

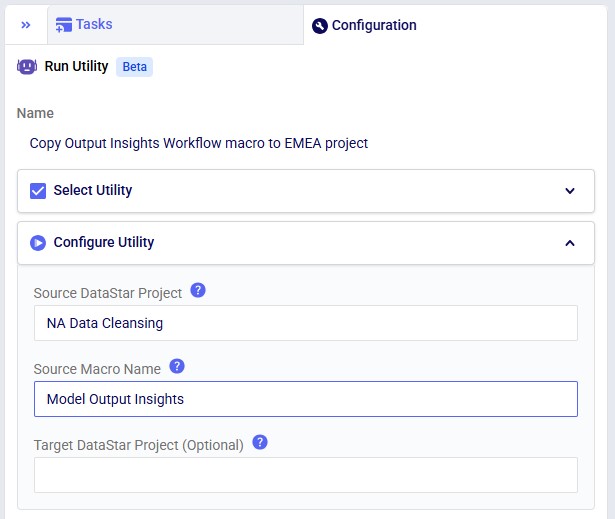

Once an Agent/Utility has been selected by clicking on it, the Configure Utility section becomes available. The inputs here will differ based on the Agent/Utility that has been selected. In the next screenshot the Configure Utility section of the Duplicate Macro utility is shown:

Provide the inputs for at least the required parameters, and if desired for any optional ones. Note that hovering over a blue question mark icon will bring up a hover box with a description of the parameter. For example, hovering over the blue question mark of the Source Macro Name parameter brings up "Name of the macro to duplicate (case-sensitive)".

The following AI Agents and Utilities are currently available. More are being added as they become available. For each, a short description is given, and for those that have more detailed documentation, a link to that documentation is included.

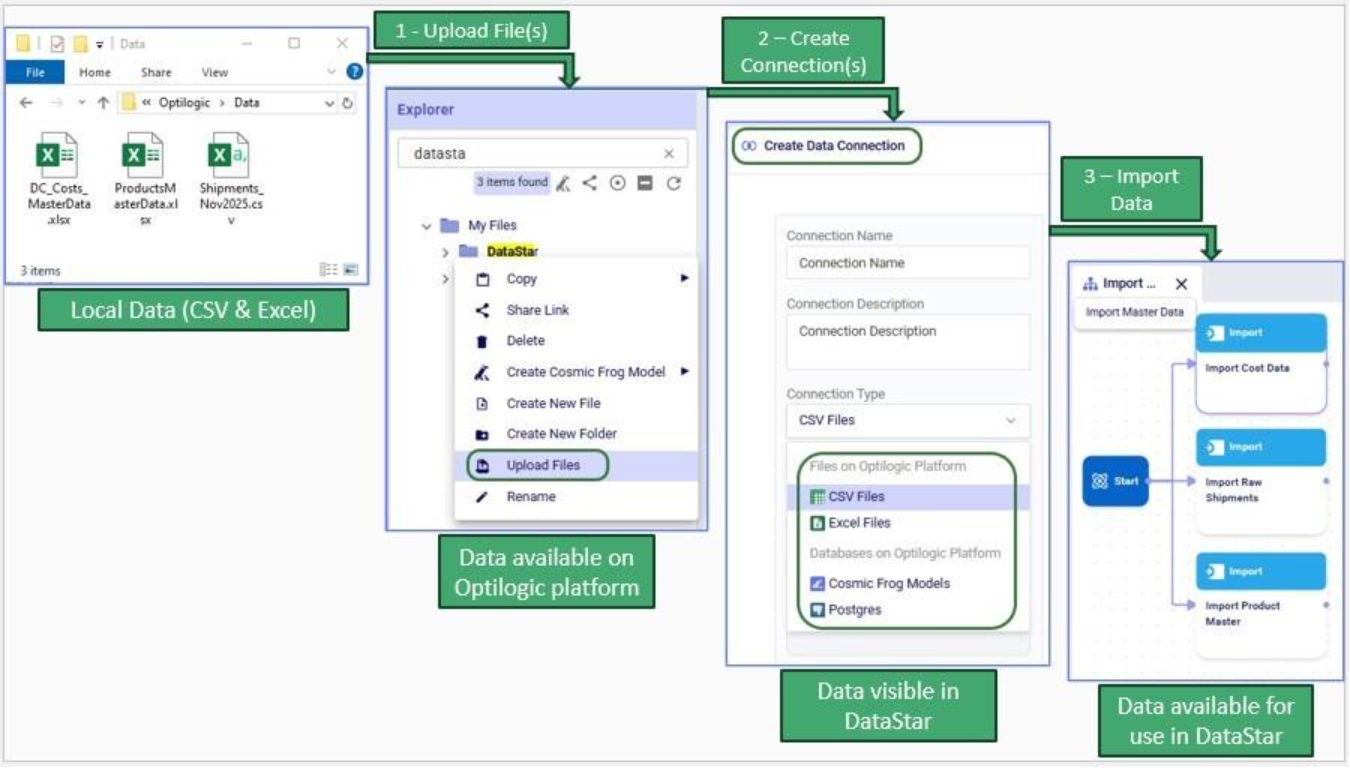

DataStar users typically will want to use data from a variety of sources in their projects. This data can be in different locations and systems and there are multiple methods available to get the required data into the DataStar application. In this documentation we will describe the main categories of data sources users may want to use and the possible ways of making these available in DataStar for usage.

If you would first like to learn more about DataStar before diving into data integration specifics, please see the Navigating DataStar article on the Optilogic Help Center.

The following diagram shows different data sources and the data transfer pathways to make them available for use in DataStar:

We will dive a bit deeper into making local data available for use in DataStar building upon what was covered under bullets 5a-5c in the previous screenshot. First, we will familiarize ourselves with the layout of the Optilogic platform:

Next, we will cover in detail, through the next set of screenshots, the 3 steps to go from data sitting locally on a user’s computer to being able to use it in DataStar. At a high level, the steps are:

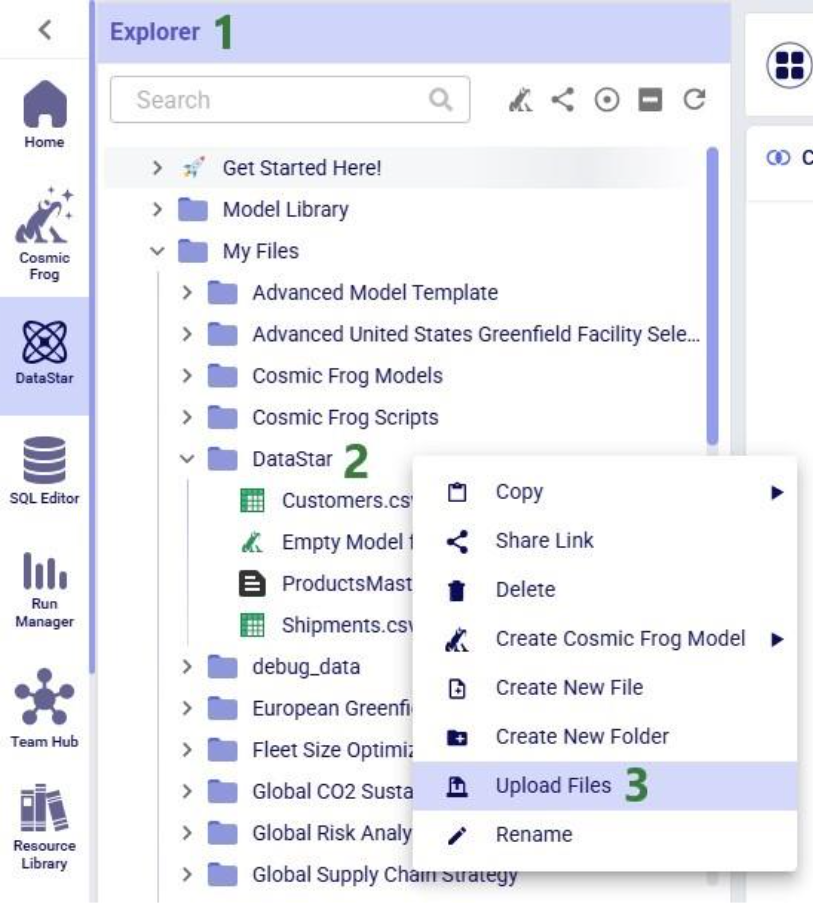

To get local data onto the Optilogic platform, we can use the file / folder upload option:

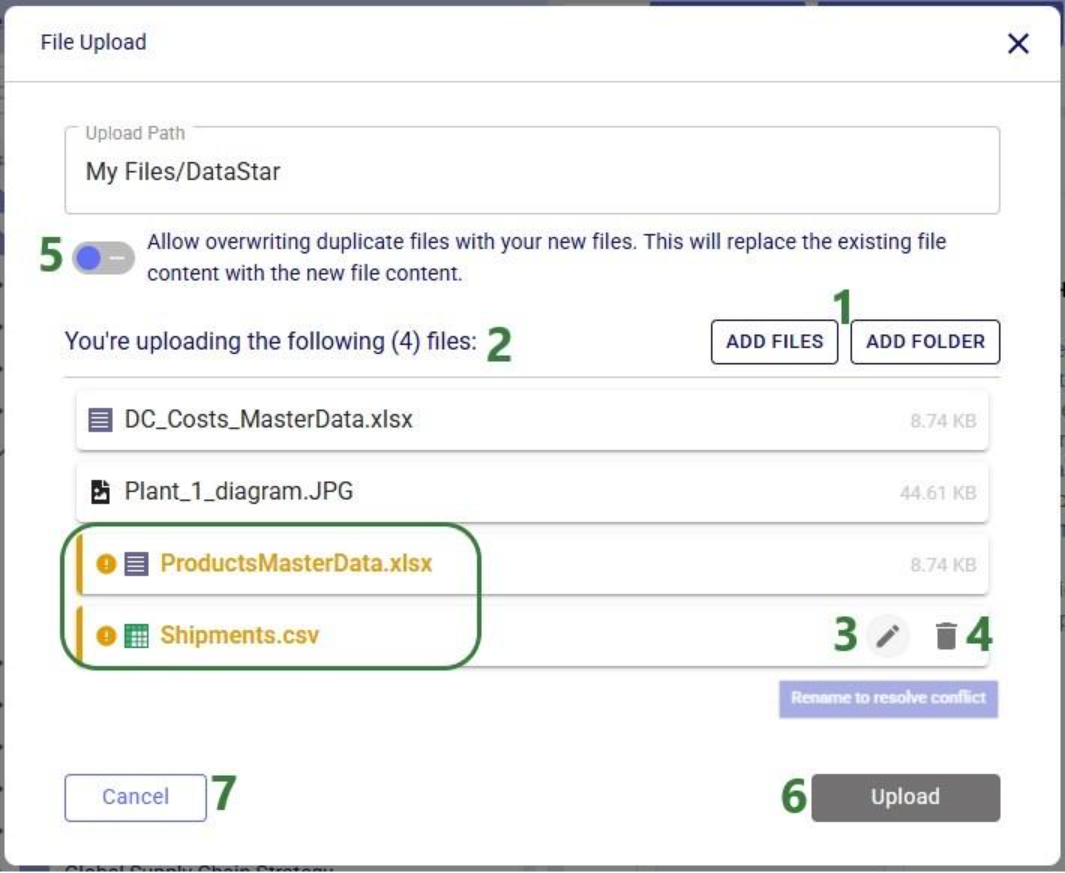

Select either the file(s) or folder you want to upload by browsing to it/them. After clicking on Open, the File Upload form will be shown again:

Note that files in the upload list that will not cause name conflicts can also be renamed or removed from the list if so desired. This can for example be convenient when wanting to upload most files in a folder, except for a select few. In that case use the Add Folder option and in the list that will be shown, remove the few that should not be uploaded rather than using Add Files and then manually selecting almost all files in a folder.

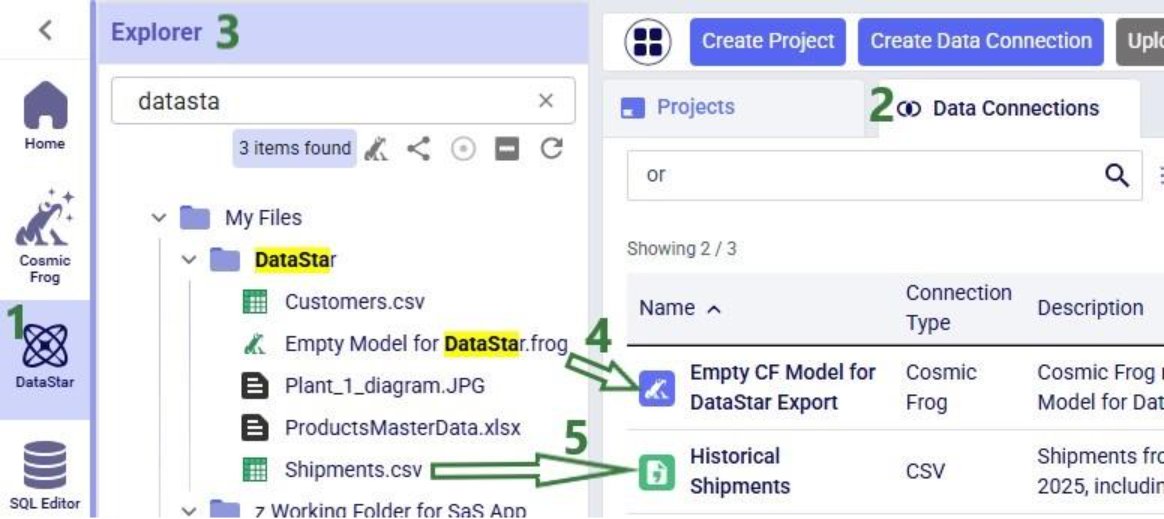

Once the files are uploaded, you will be able to see them in the Explorer by expanding the folder they were uploaded to or searching for (part of) their name using the Search box.

The second step is to then make these files visible to DataStar by setting up Data Connections to them:

After setting up a Data Connection to a Cosmic Frog model and to a CSV file, we can see the source files in the Explorer, and the Data Connections pointing to these in DataStar side-by-side:

To start using the data in DataStar, we need to take the third step of importing the data from the data connections into a project. Typically, the data will be imported into the Project Sandbox, but this could also be into another Postgres database, including a Cosmic Frog model. Importing data is done using Import tasks; the Configuration tab of one is shown in this next screenshot:

The 3 steps described above are summarized in the following sequence of screenshots:

For a data workflow that is used repeatedly and needs to be re-run using the latest data regularly, users do not need to go through all 3 steps above of uploading data, creating/re-configuring data connections, and creating/re-configuring Import tasks to refresh local data. If the new files to be used have the same name and same data structure as the current ones, replacing the files on the Optilogic platform with the newer ones will suffice (so only step 1 is needed); the data connections and Import tasks do not need to be updated or re-configured. Users can do this manually or programmatically:

This section describes how to bring external data into DataStar using supported integration patterns where the data transfer is started from an external system, i.e. the data is “pushed” onto the Optilogic platform.

External systems such as ETL tools, automation platforms, or custom scripts can load data into DataStar through the Optilogic Pioneer API (please see the Optilogic REST API documentation for details). This approach is ideal when you want to programmatically upload files, refresh datasets, or orchestrate transformations without connecting directly to the underlying database.

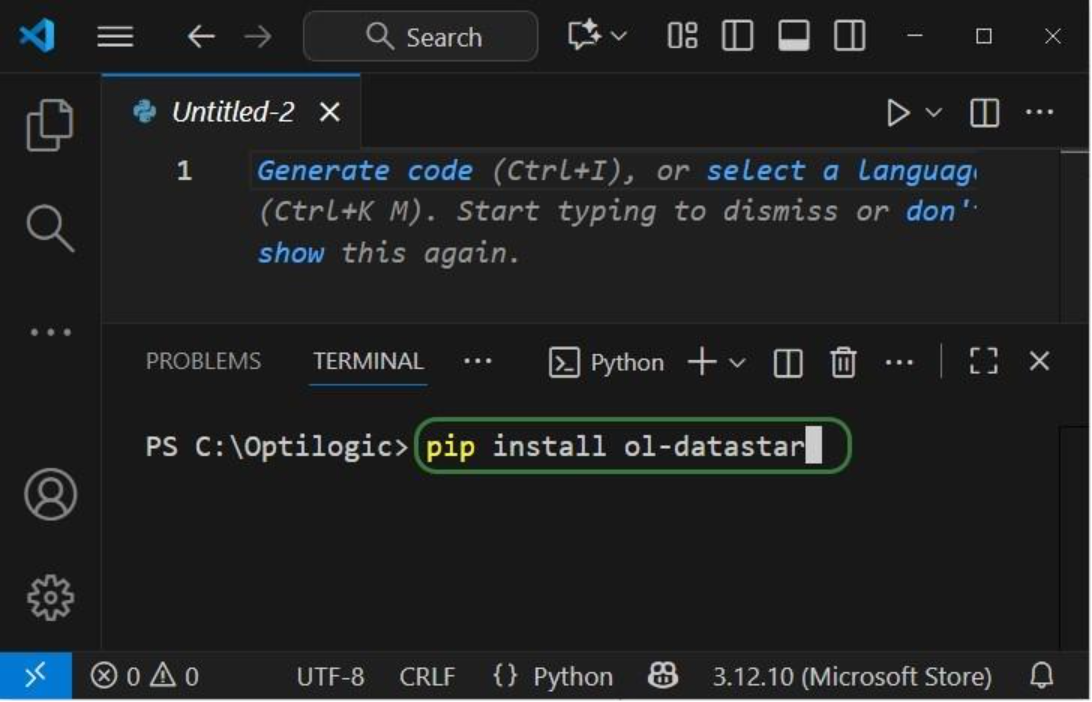

Key points:

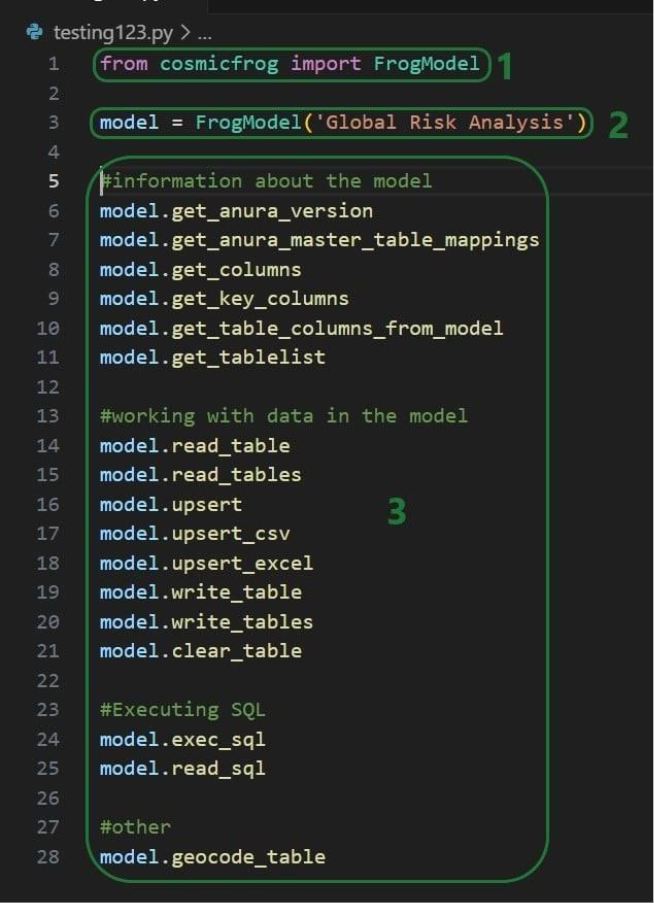

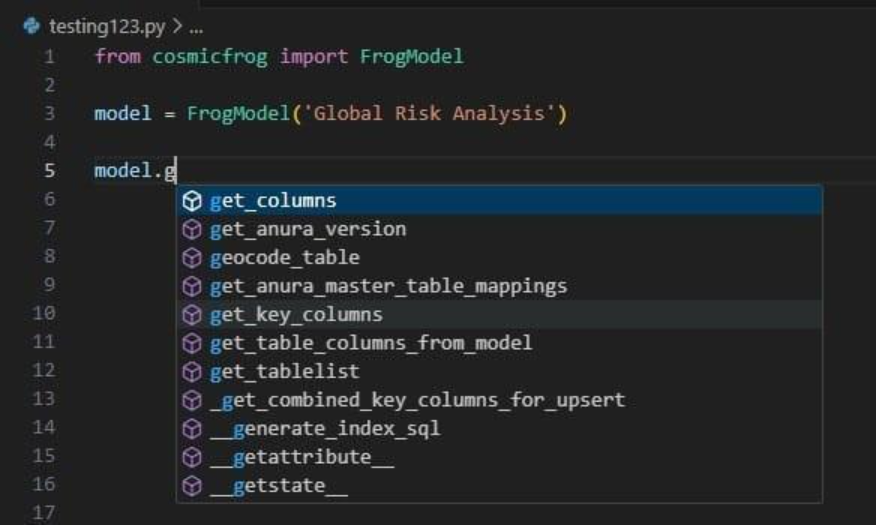

Please note that Optilogic has developed a Python library to facilitate scripting for DataStar. If your external system is Python based, you can leverage this library as a wrapper for the API. For more details on working with the library and a code example of accessing a DataStar project’s sandbox, see this “Using the DataStar Python Library” help center article.

Every DataStar project is backed by a PostgreSQL database. You can connect directly to this database using any PostgreSQL-compatible driver, including:

This enables you to write or update data using SQL, query the sandbox tables, or automate recurring loads. The same approach applies to both DataStar projects and Cosmic Frog models since both use PostgreSQL under the hood. Please see this help center article on how to retrieve connection strings for Cosmic Frog model and DataStar project databases; these will need to be passed into the database connection to gain access to the model / project database.

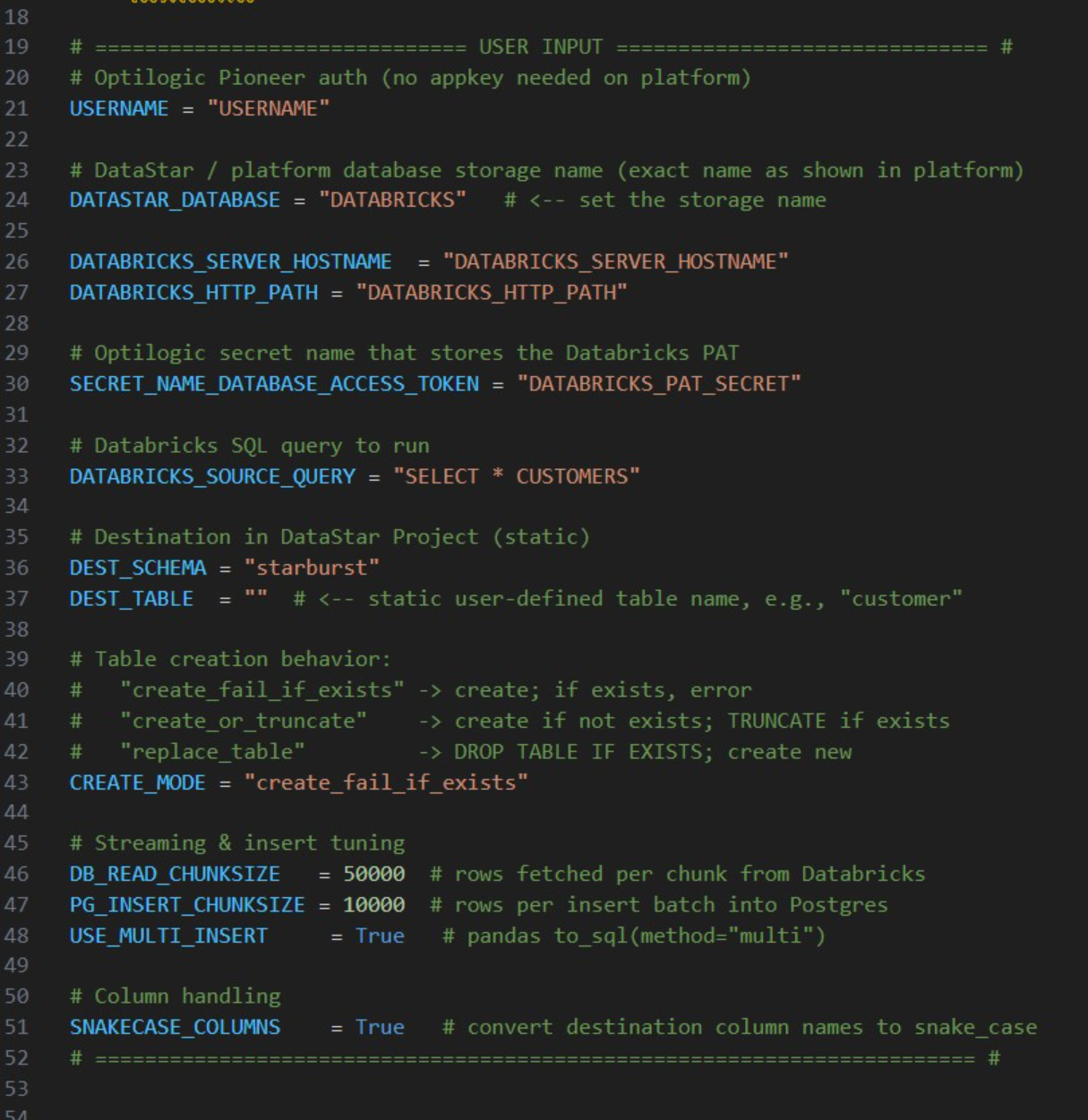

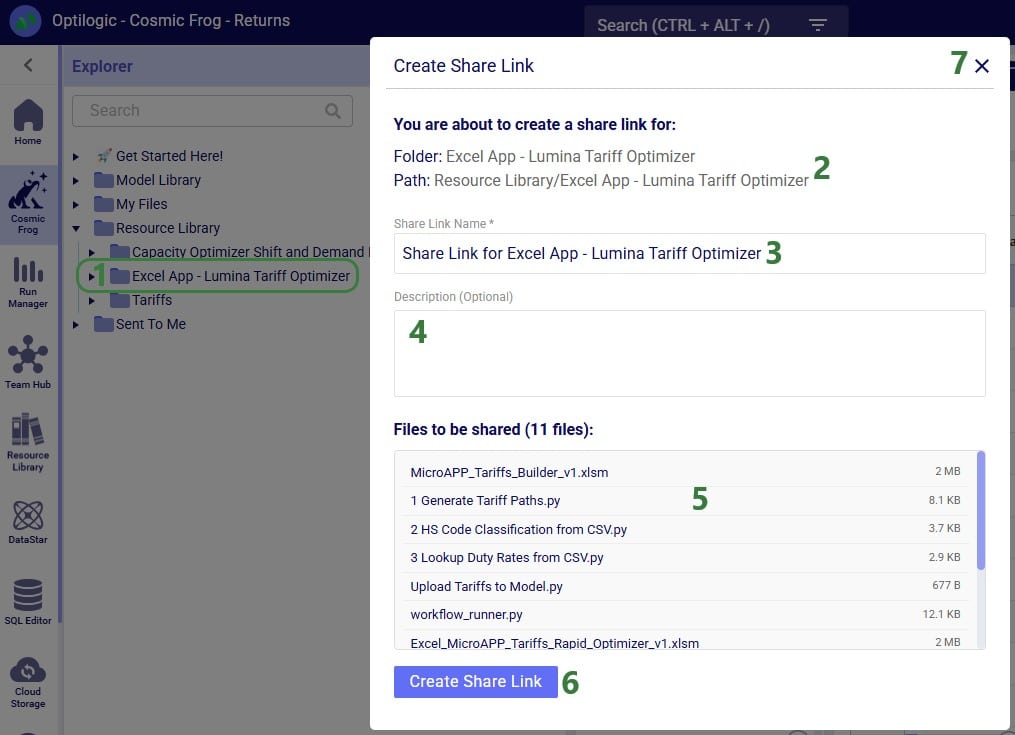

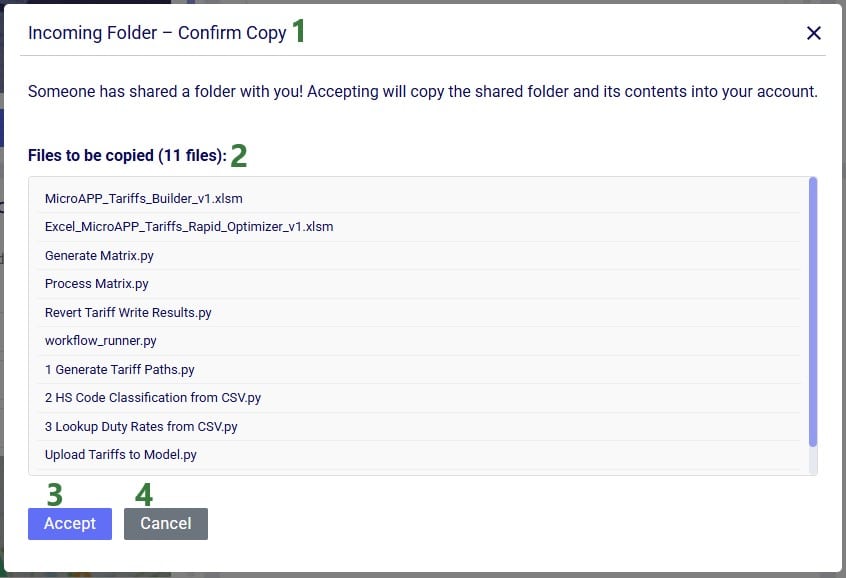

Several scripts and utilities to connect to common external data sources, including Databricks, Google BigQuery, Google Drive, and Snowflake, are available on Optilogic’s Resource Library:

These utilities and scripts can function as a starting point to modify into your own desired script for connecting to and retrieving data from a certain data source. You will need to update authentication and connection information in the scripts and configure the user settings to your needs. For example, this is the User Input section of the “Databricks Data Import Script”:

The user needs to update the following lines; the others can be left at their defaults and only updated if desired/required:

For any questions or feedback, please feel free to reach out to the Optilogic support team at support@optilogic.com.

In this quick start guide we will walk through importing a CSV file into the Project Sandbox of a DataStar project. The steps involved are:

Our example CSV file is one that contains historical shipments from May 2024 through August 2025. There are 42,656 records in this Shipments.csv file, and if you want to follow along with the steps below you can download a zip-file containing it here (please note that the long character string at the beginning of the zip's file name is expected).

Open the DataStar application on the Optilogic platform and click on the Create Data Connection button in the toolbar at the top:

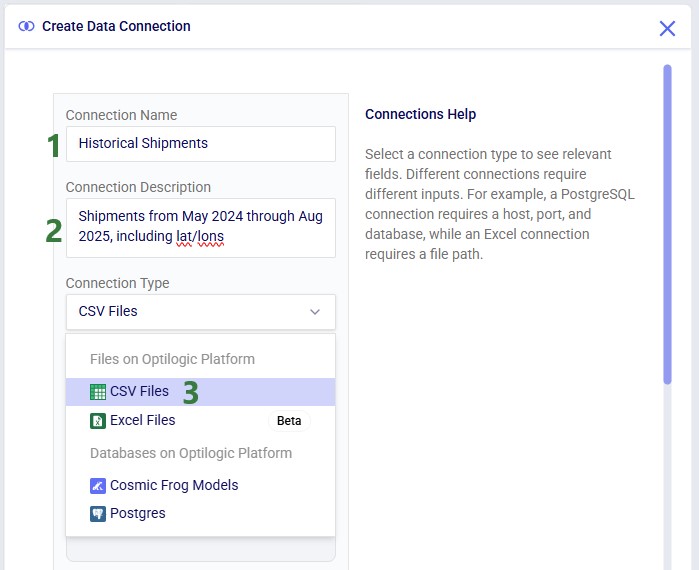

In the Create Data Connection form that comes up, enter the name for the data connection, optionally add a description, and select CSV Files from the Connection Type drop-down list:

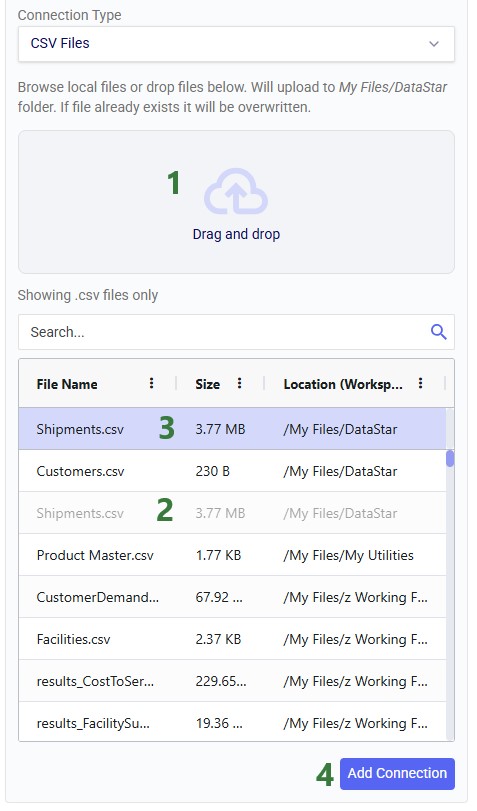

If your CSV file is not yet on the Optilogic platform, you can drag and drop it onto the “Drag and drop” area of the form to upload it to the /My Files/DataStar folder. If it is already on the Optilogic platform or after uploading it through the drag and drop option, you can select it in the list of CSV files. Once selected it becomes greyed out in the list to indicate it is the file being used; it is also pinned at the top of the list with darker background shade so users know without scrolling which file is selected. Note that you can filter this list by typing in the Search box to quickly find the desired file. Once the file is selected, clicking on the Add Connection button will create the CSV connection:

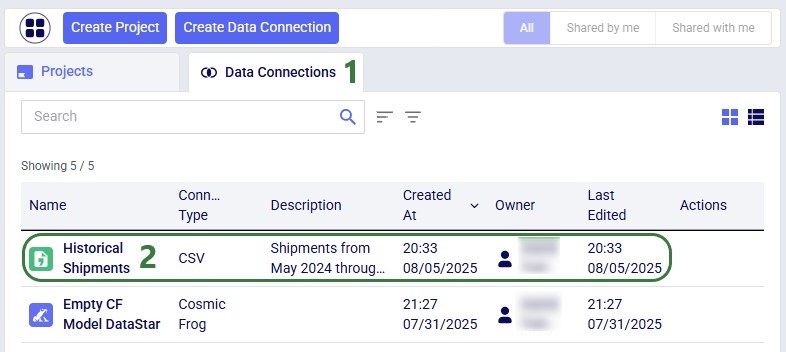

After creating the connection, the Data Connections tab on the DataStar start page will be active, and it shows the newly added CSV connection at the top of the list (note the connections list is shown in list view here; the other option is card view):

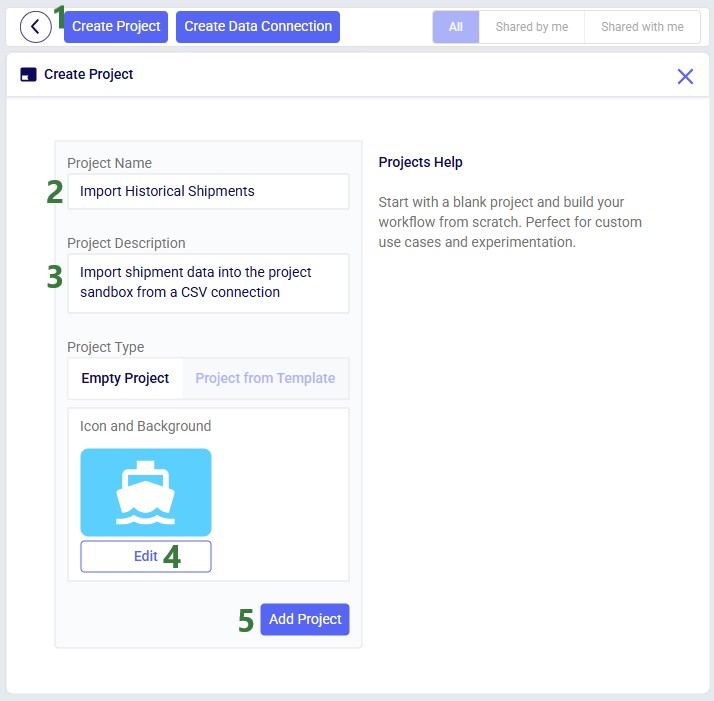

You can either go into an existing DataStar project or create a new one to set up a Macro that will import the data from the Historical Shipments CSV connection we just set up. For this example, we create a new project by clicking on the Create Project button in the toolbar at the top when on the start page of DataStar. Enter the name for the project, optionally add a description, change the appearance of the project if desired by clicking on the Edit button, and then click on the Add Project button:

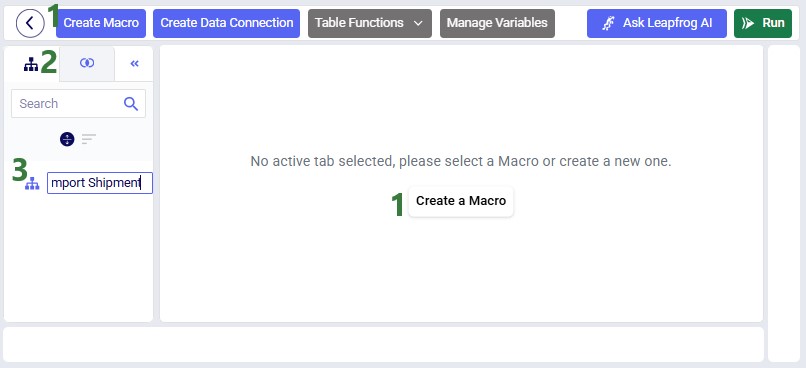

After the project is created, the Projects tab will be shown on the DataStar start page. Click on the newly created project to open it in DataStar. Inside DataStar, you can either click on the Create Macro button in the toolbar at the top or the Create a Macro button in the center part of the application (the Macro Canvas) to create a new macro which will then be listed in the Macros tab in the left-hand side panel. Type the name for the macro into the textbox:

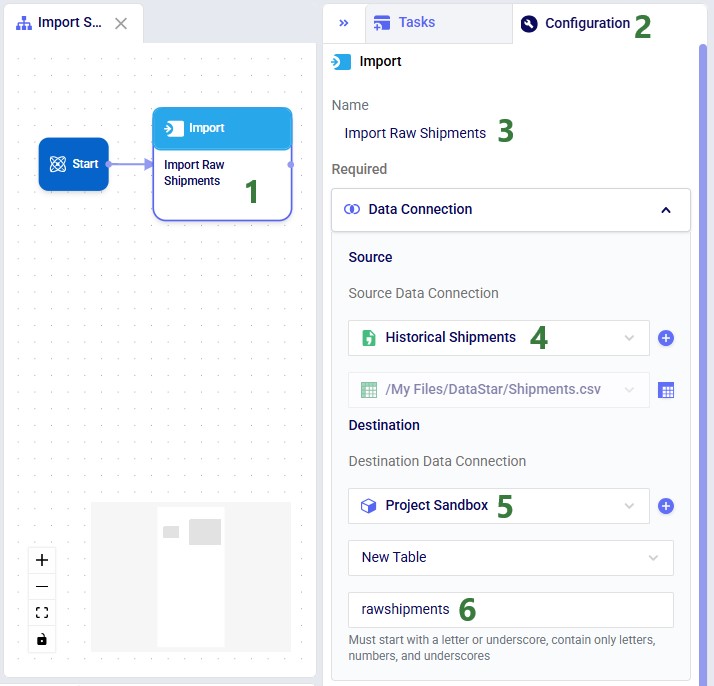

When a macro is created, it automatically gets a Start task added to it. Next, we open the Tasks tab by clicking on the tab on the left in the panel on the right-hand side of the macro canvas. Click on Import and drag it onto the macro canvas:

When hovering close to the Start task, it will be suggested to connect the new Import task to the Start task. Dropping the Import task here will create the connecting line between the 2 tasks automatically. Once the Import task is placed on the macro canvas, the Configuration tab in the right-hand side panel will be opened. Here users can enter the name for the task, select the data connection that is the source for the import (the Historical Shipments CSV connection), and the data connection that is the destination of the import (a new table named “rawshipments” in the Project Sandbox):

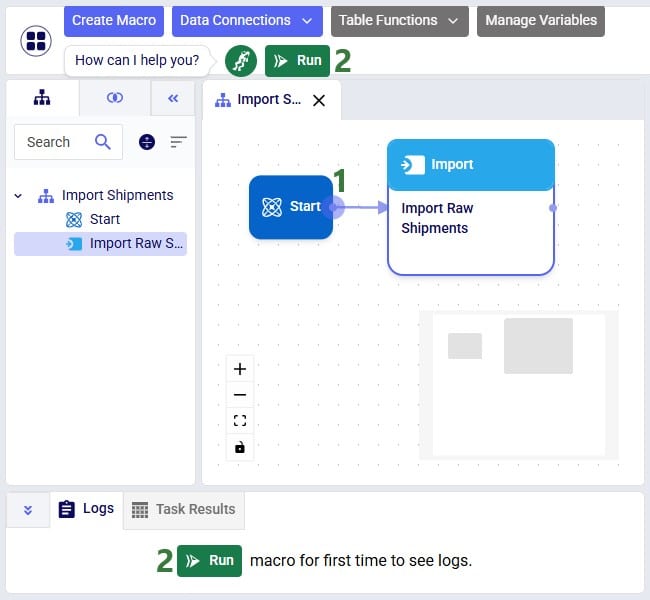

If not yet connected automatically in the previous step, connect the Import Raw Shipments task to the Start task by clicking on the connection point in the middle of the right edge of the Start task, holding the mouse down and dragging the connection line to the connection point in the middle of the left edge of the Import Raw Shipments task. Next, we can test the macro that has been set up so far by running it: either click on the green Run button in the toolbar at the top of DataStar or click on the Run button in the Logs tab at the bottom of the macro canvas:

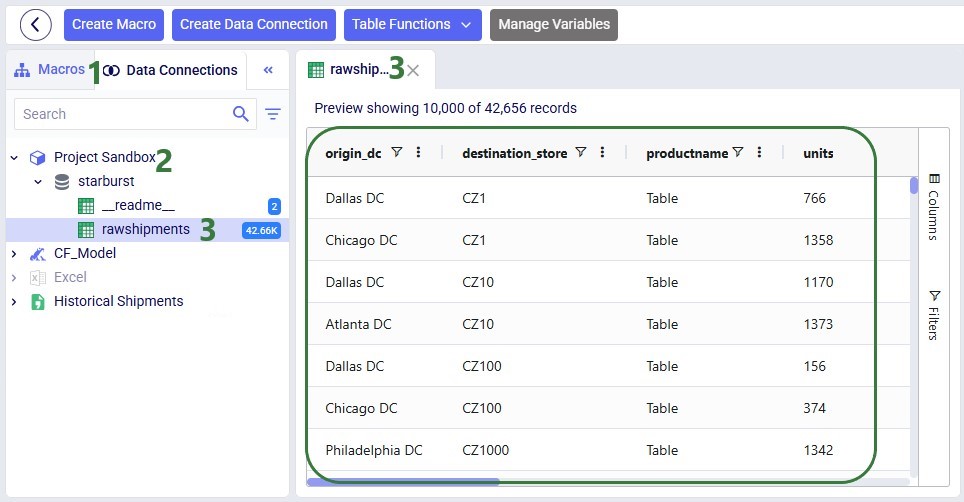

You can follow the progress of the Macro run in the Logs tab and once finished examine the results on the Data Connections tab. Expand the Project Sandbox data connection to open the rawshipments table by clicking on it. A preview of the table of up to 10,000 records will be displayed in the central part of DataStar:

In this quick start guide we will show how Leapfrog AI can be used in DataStar to generate tasks from natural language prompts, no coding necessary!

This quick start guide builds upon the previous one where a CSV file was imported into the Project Sandbox; please follow the steps in that guide first if you want to follow along with the steps in this quick start. The starting point for this quick start is therefore a project named Import Historical Shipments that has a Historical Shipments data connection of type = CSV, and a table in the Project Sandbox named rawshipments, which contains 42,656 records.

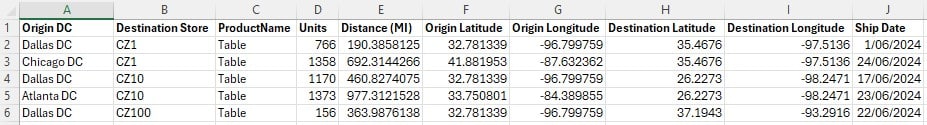

The Shipments.csv file that was imported into the rawshipments table has the following data structure (showing 5 of the 42,656 records):

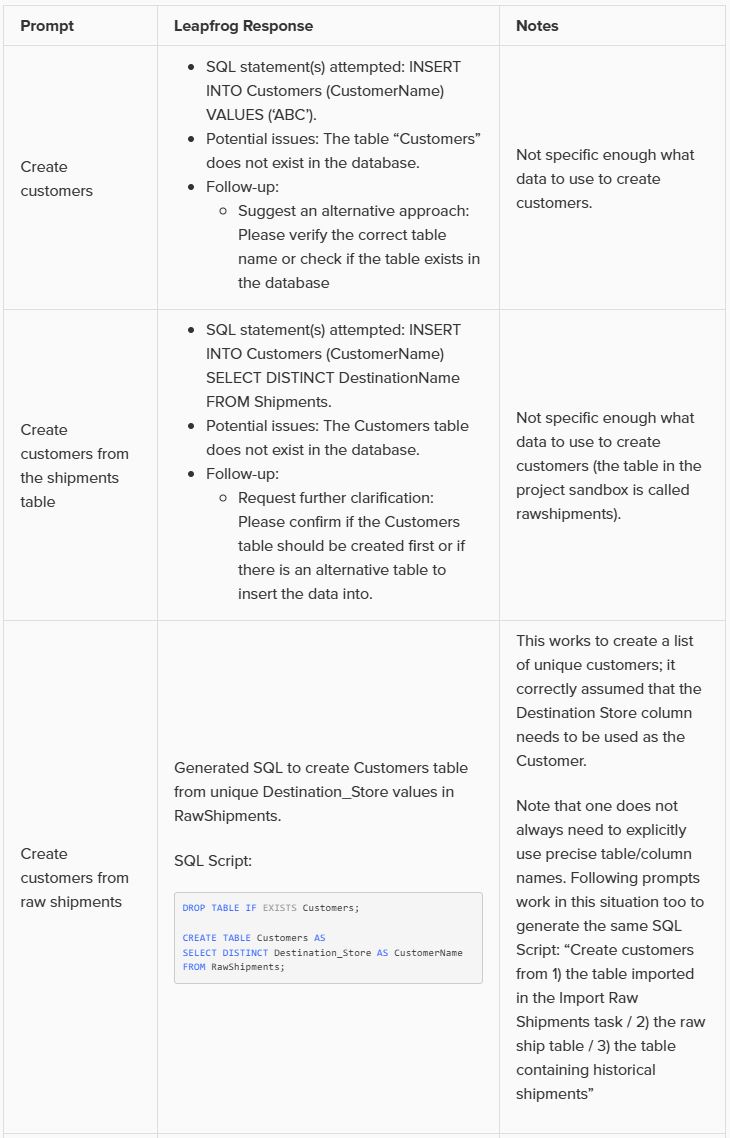

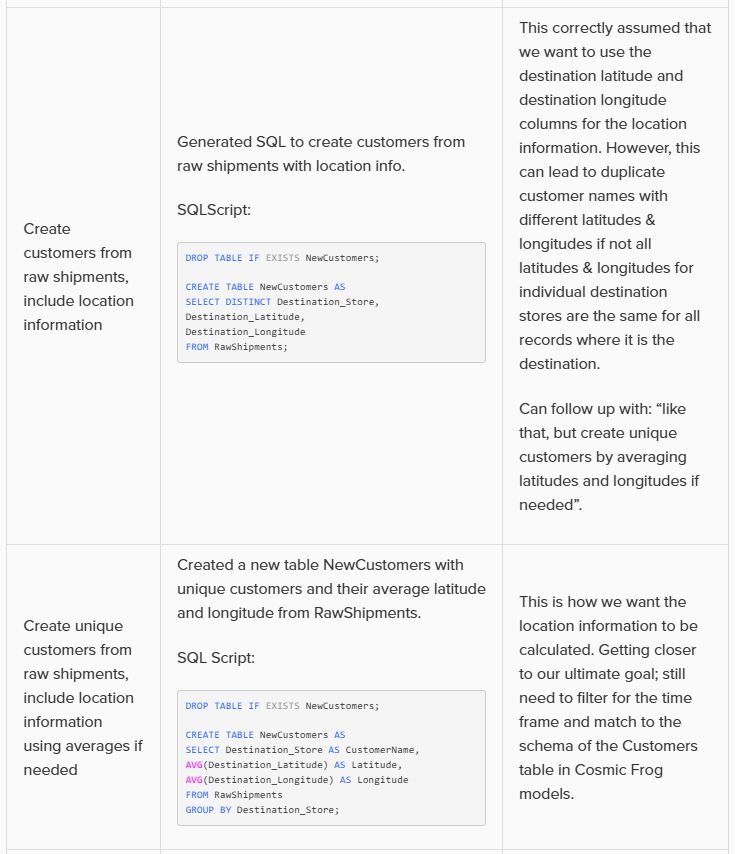

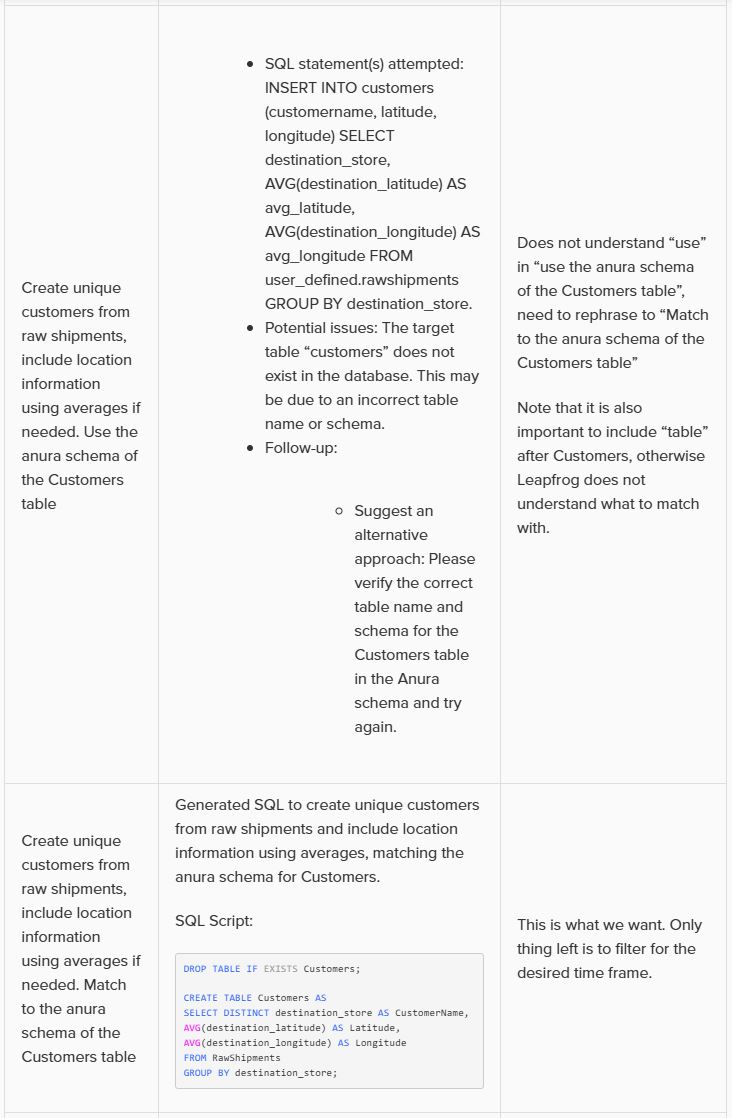

Our goal in this quick start is to create a task using Leapfrog that will use this data (from the rawshipments table in the Project Sandbox) to create a list of unique customers, where the destination stores function as the customers. Ultimately, this list of customers will be used to populate the Customers input table of a Cosmic Frog model. A few things to consider when formulating the prompt are:

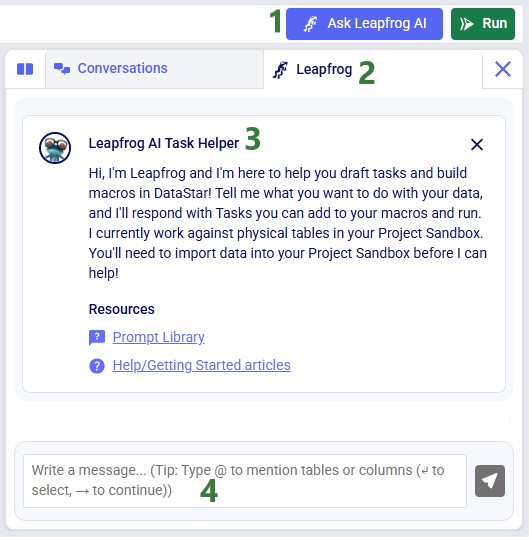

Within the Import Historical Shipments DataStar project, click on the Import Shipments macro to open it in the macro canvas; you should see the Start and Import Raw Shipments tasks on the canvas. Then open Leapfrog by clicking on the Ask Leapfrog AI button to the right in the toolbar at the top of DataStar. This will open the Leapfrog tab where a welcome message will be shown. Next, we can write our prompt in the “Write a message…” textbox.

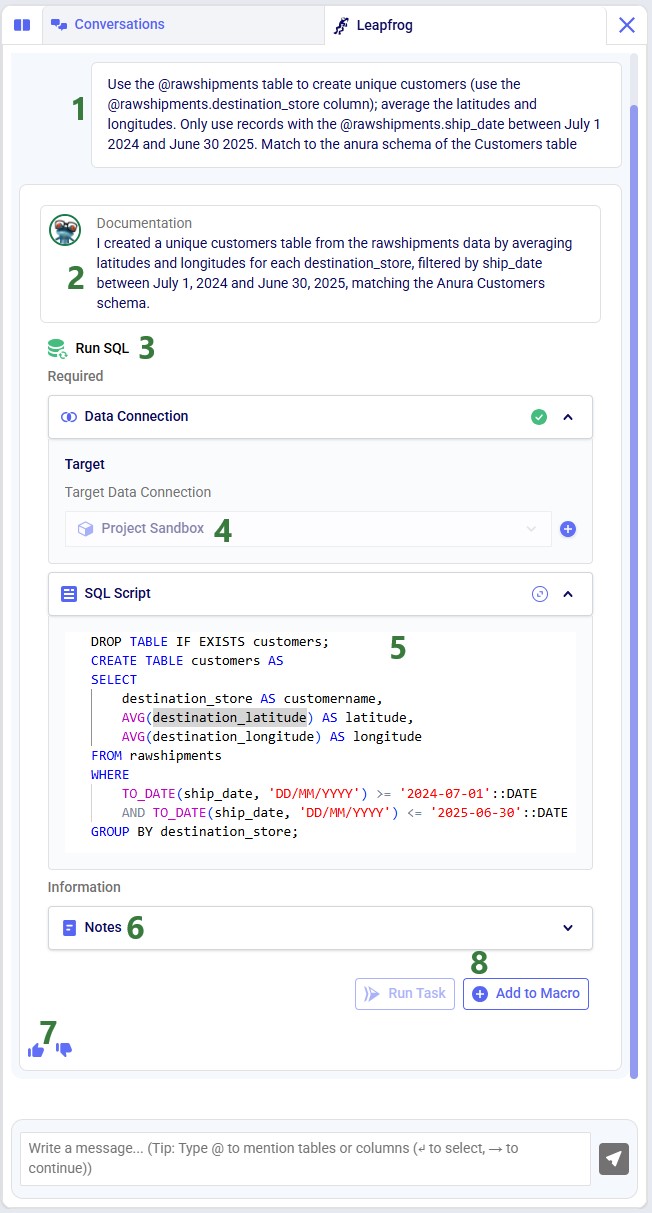

Keeping in mind the 5 items mentioned above, the prompt we use is the following: “Use the @rawshipments table to create unique customers (use the @rawshipments.destination_store column); average the latitudes and longitudes. Only use records with the @rawshipments.ship_date between July 1 2024 and June 30 2025. Match to the anura schema of the Customers table”. Please note that:

After clicking on the send icon to submit the prompt, Leapfrog will take a few seconds to consider the prompt and formulate a response. The response will look similar to the following screenshot, where we see from top to bottom:

For copy-pasting purposes, the resulting SQL Script is repeated here:

    DROP TABLE IF EXISTS customers;

    CREATE TABLE customers AS
    SELECT
        destination_store AS customername,
        AVG(destination_latitude) AS latitude,
        AVG(destination_longitude) AS longitude
    FROM rawshipments
    WHERE
        TO_DATE(ship_date, 'DD/MM/YYYY') >= '2024-07-01'::DATE
        AND TO_DATE(ship_date, 'DD/MM/YYYY') <= '2025-06-30'::DATE
    GROUP BY destination_store;
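For readers who prefer to sanity-check the logic outside of SQL, the following is a minimal Python sketch of what the script above does: filter on ship date, group by destination store, and average the coordinates. The function name `unique_customers` and the sample records are made up for illustration; only the column names and the DD/MM/YYYY date format are taken from the script. The sorting of the output is added here for readability and is not part of the SQL.

```python
from collections import defaultdict
from datetime import date, datetime


def unique_customers(rows, start=date(2024, 7, 1), end=date(2025, 6, 30)):
    """Mimic the SQL script: filter by ship_date, group by store, average coordinates."""
    grouped = defaultdict(list)
    for row in rows:
        # ship_date is stored as text in DD/MM/YYYY format, as in the TO_DATE call
        shipped = datetime.strptime(row["ship_date"], "%d/%m/%Y").date()
        if start <= shipped <= end:
            grouped[row["destination_store"]].append(
                (row["destination_latitude"], row["destination_longitude"])
            )
    customers = []
    for store in sorted(grouped):  # sorted only to make the output predictable
        coords = grouped[store]
        customers.append({
            "customername": store,
            "latitude": sum(lat for lat, _ in coords) / len(coords),
            "longitude": sum(lon for _, lon in coords) / len(coords),
        })
    return customers


# Hypothetical sample records (not taken from Shipments.csv)
sample = [
    {"destination_store": "CZ1", "destination_latitude": 40.0,
     "destination_longitude": -100.0, "ship_date": "15/07/2024"},
    {"destination_store": "CZ1", "destination_latitude": 42.0,
     "destination_longitude": -102.0, "ship_date": "01/03/2025"},
    {"destination_store": "CZ2", "destination_latitude": 35.0,
     "destination_longitude": -90.0, "ship_date": "10/05/2024"},
]
customers = unique_customers(sample)
```

In this sample, the CZ2 record falls outside the date window, so only CZ1 ends up in the result, with its two coordinate pairs averaged.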

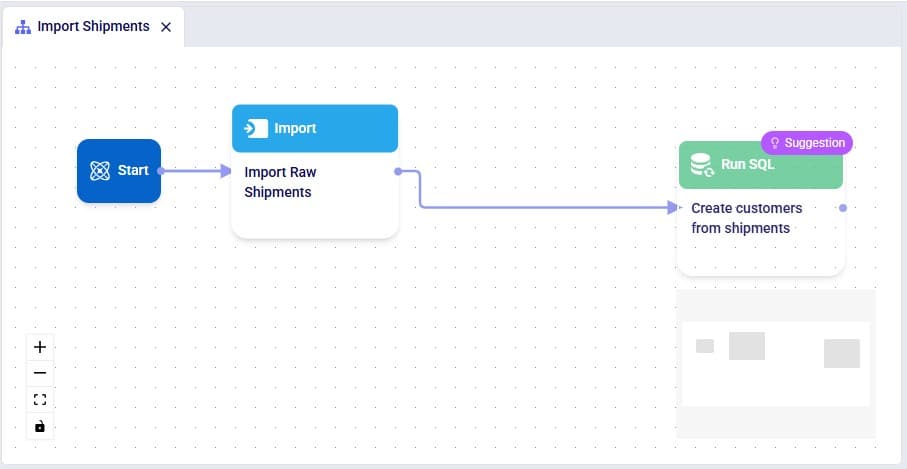

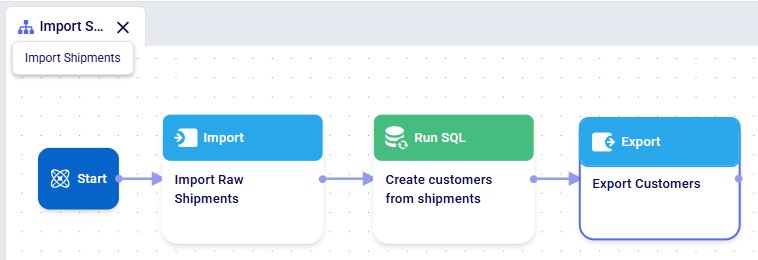

Those who are familiar with SQL will be able to tell that this will indeed achieve our goal. Since that is the case, we can click on the Add to Macro button at the bottom of Leapfrog’s response to add this as a Run SQL task to our Import Shipments macro. When hovering over this button, you will see Leapfrog suggests where to put it on the macro canvas and to connect it to the Import Raw Shipments task, which is what we want. When next clicking on the Add to Macro button it will be added.

We can test our macro so far by clicking on the green Run button at the right top of DataStar. Please note that:

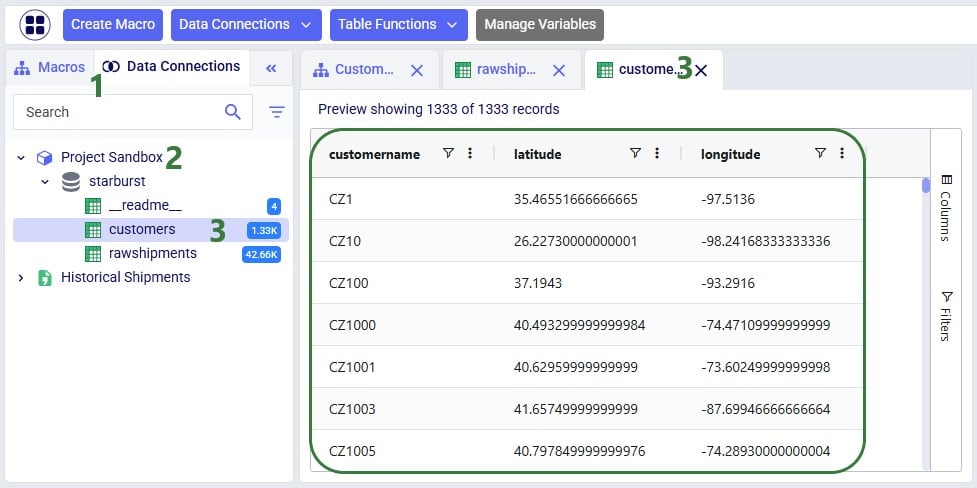

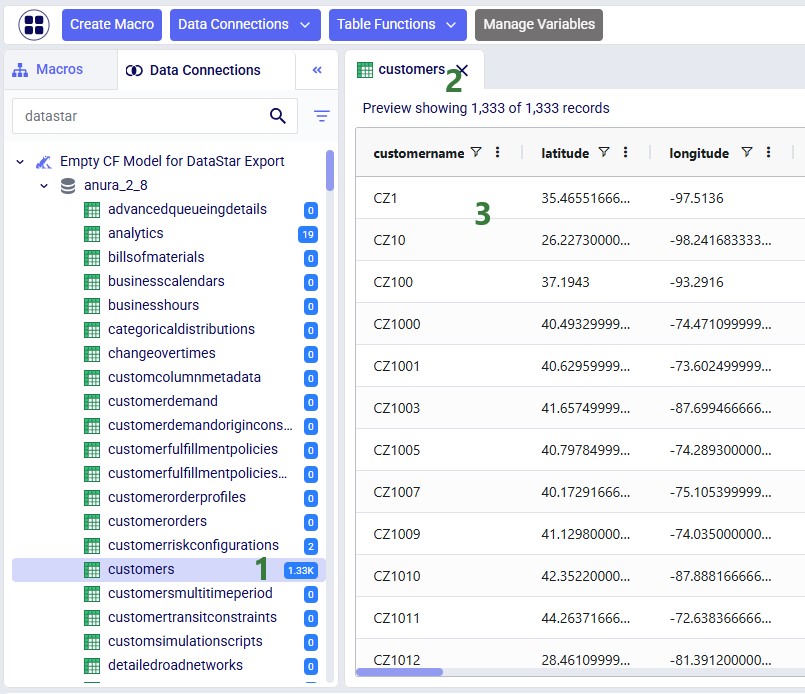

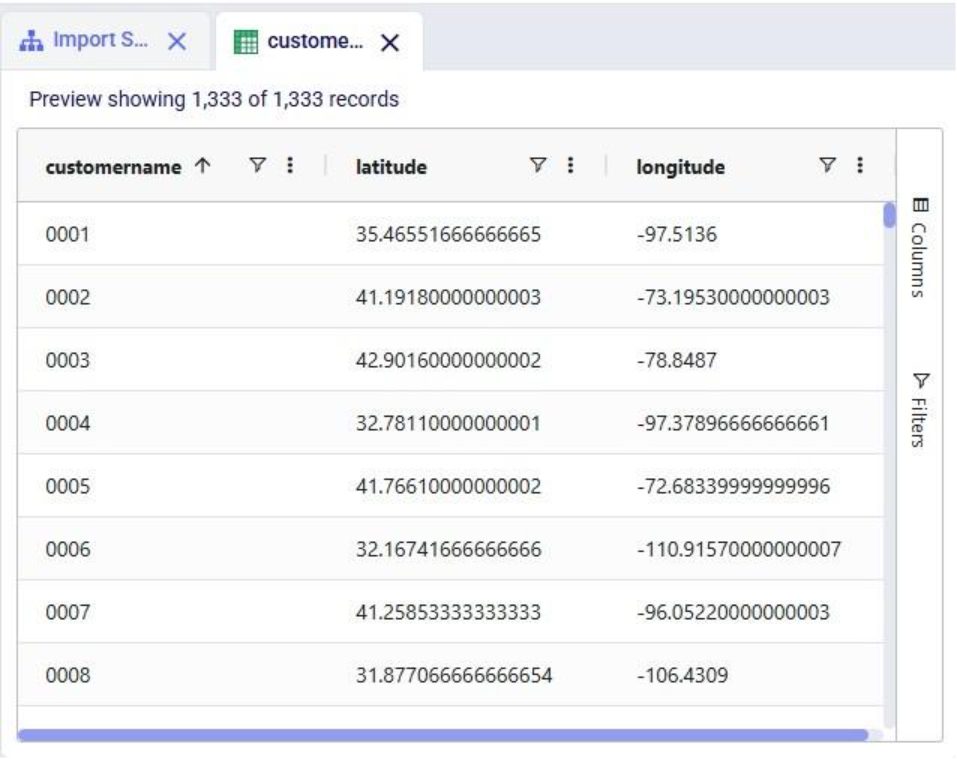

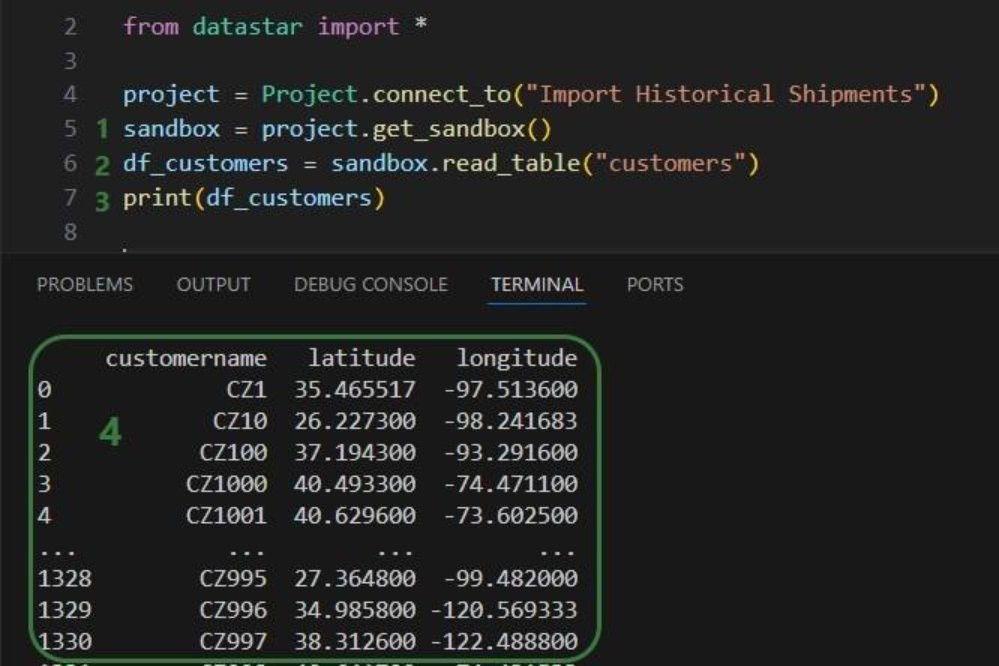

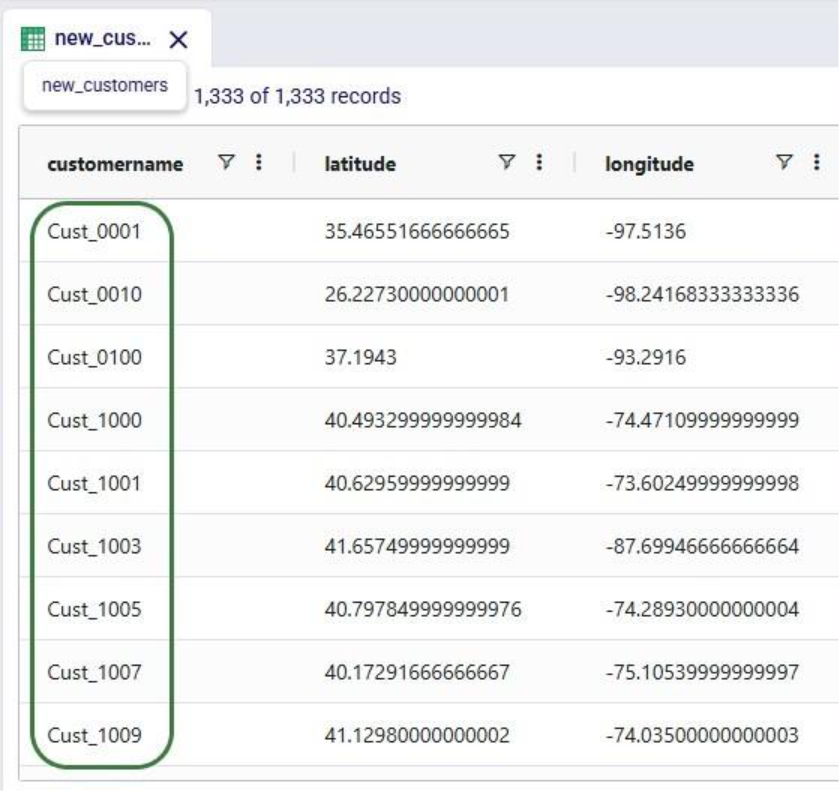

Once the macro is done running, we can check the results. Go to the Data Connections tab, expand the Project Sandbox connection and click on the customers table to open it in the central part of DataStar:

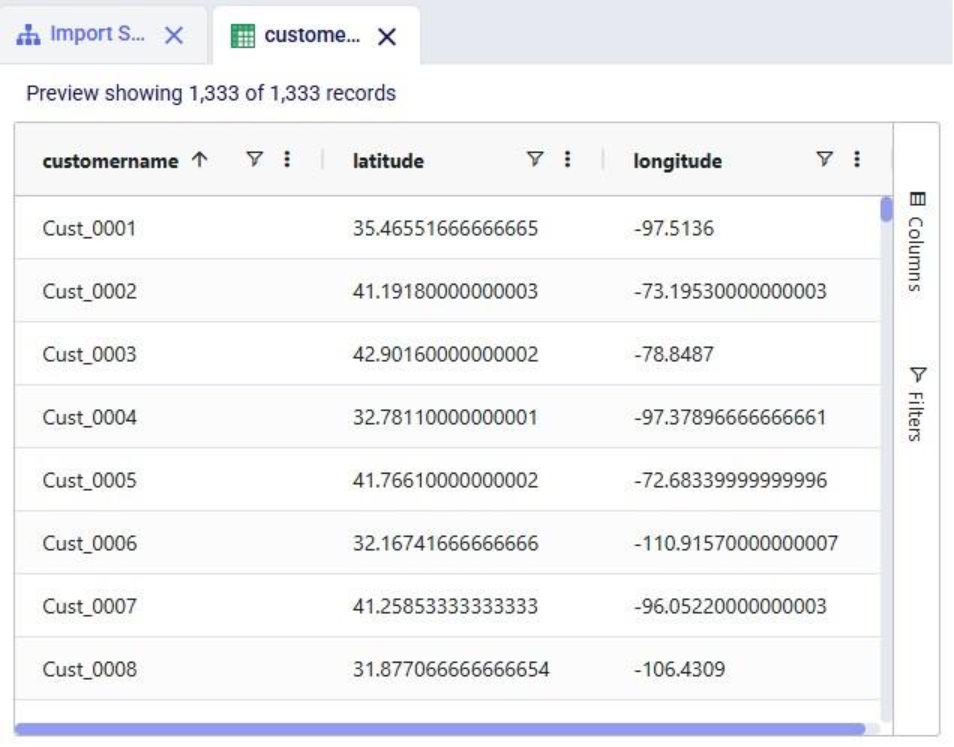

We see that the customers table resulting from running the Leapfrog-created Run SQL task contains 1,333 records. Also notice that its schema matches that of the Customers table of Cosmic Frog models, which includes columns named customername, latitude, and longitude.

Writing prompts for Leapfrog that will create successful responses (i.e. the SQL Script generated will achieve what the prompt-writer intended) may take a bit of practice. This Mastering Leapfrog for SQL Use Cases: How to write Prompts that get Results post on the Frogger Pond community portal has some great advice which applies to Leapfrog in DataStar too. It is highly recommended to give it a read; the main points of advice follow here too:

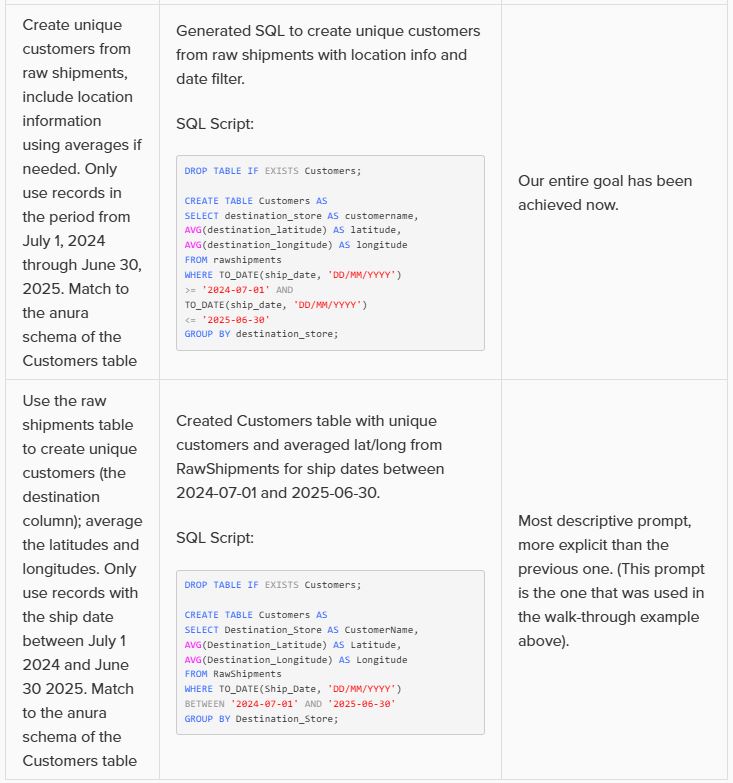

As an example, let us look at variations of the prompt we used in this quick start guide, to gauge the level of granularity needed for a successful response. In this table, the prompts are listed from least to most granular:

Note that in the above prompts, we are quite precise about table and column names and no typos are made by the prompt writer. However, Leapfrog can generally manage well with typos and can often also pick up table and column names when they are not explicitly used in the prompt. So while being more explicit generally results in higher accuracy, it is not necessary to always be extremely explicit; we simply recommend being as explicit as you can be.

In addition, these example prompts do not use the @ character to specify tables and columns to use, but they could to facilitate prompt writing further.

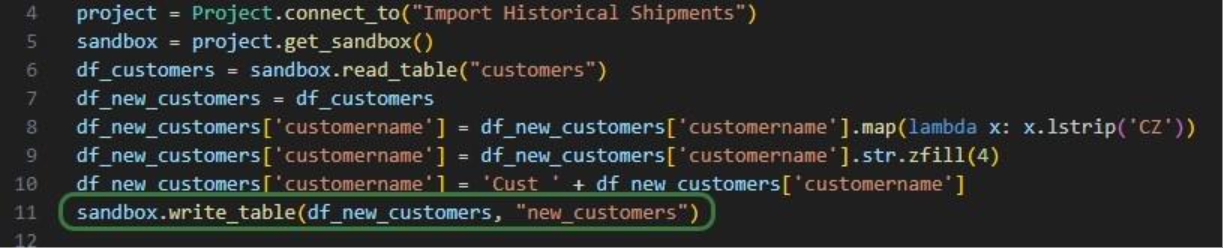

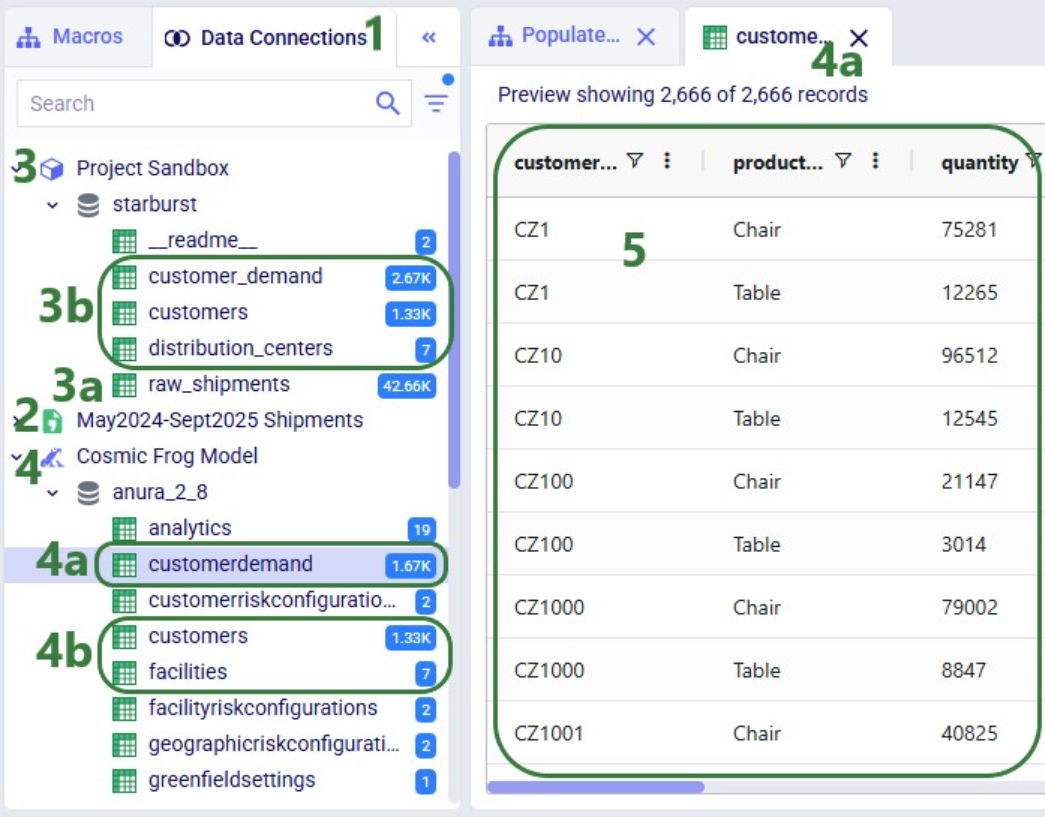

In this quick start guide we will walk through the steps of exporting data from a table in the Project Sandbox to a table in a Cosmic Frog model.

This quick start guide builds upon a previous one where unique customers were created from historical shipments using a Leapfrog-generated Run SQL task. Please follow the steps in that quick start guide first if you want to follow along with the steps in this one. The starting point for this quick start is therefore a project named Import Historical Shipments, which contains a macro called Import Shipments. This macro has an Import task and a Run SQL task. The project has a Historical Shipments data connection of type = CSV, and the Project Sandbox contains 2 tables named rawshipments (42,656 records) and customers (1,333 records).

The steps we will walk through in this quick start guide are:

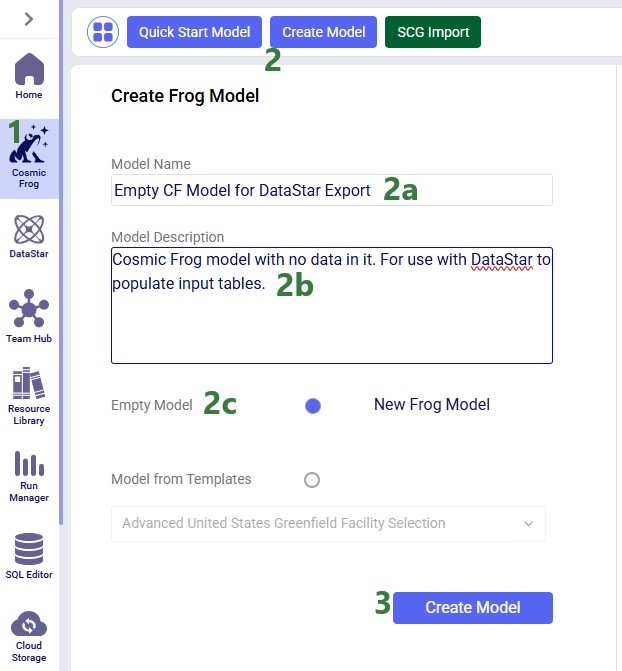

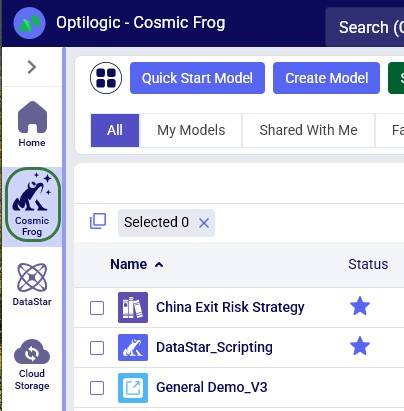

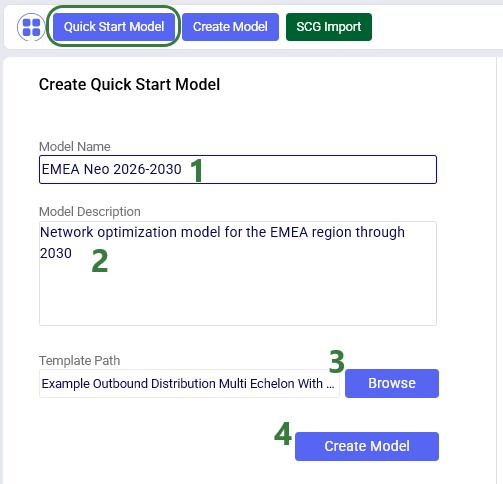

First, we will create a new Cosmic Frog model which does not have any data in it. We want to use this model to receive the data we export from the Project Sandbox.

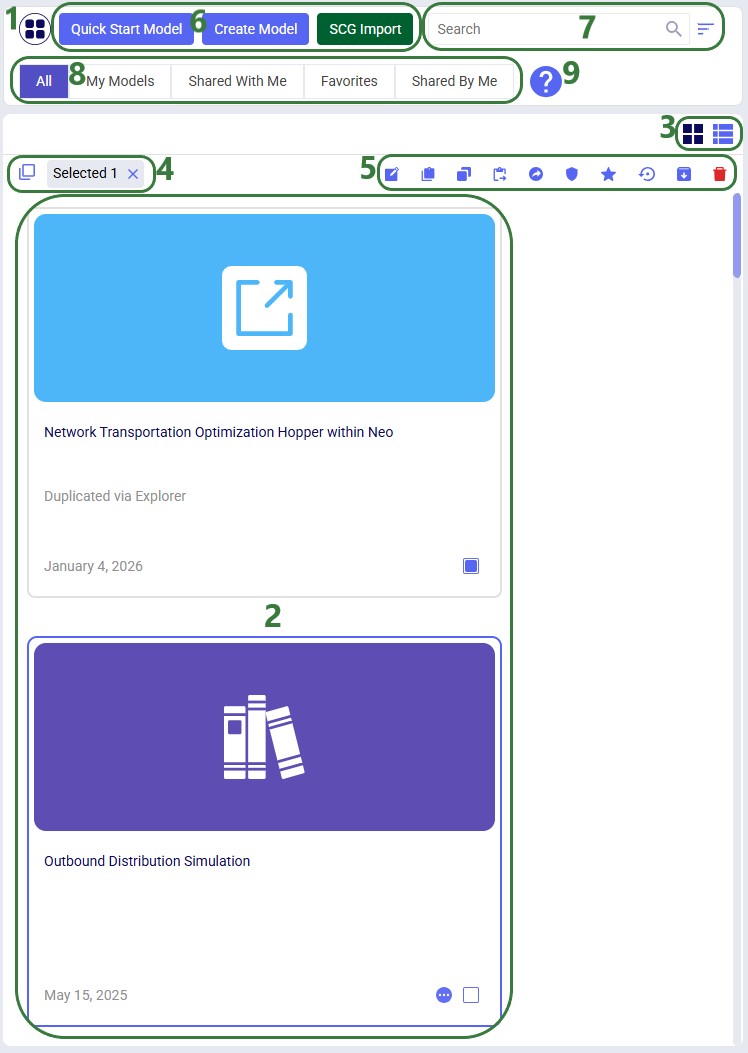

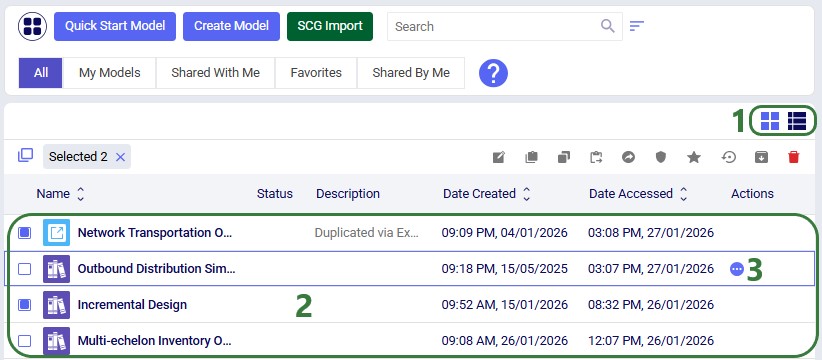

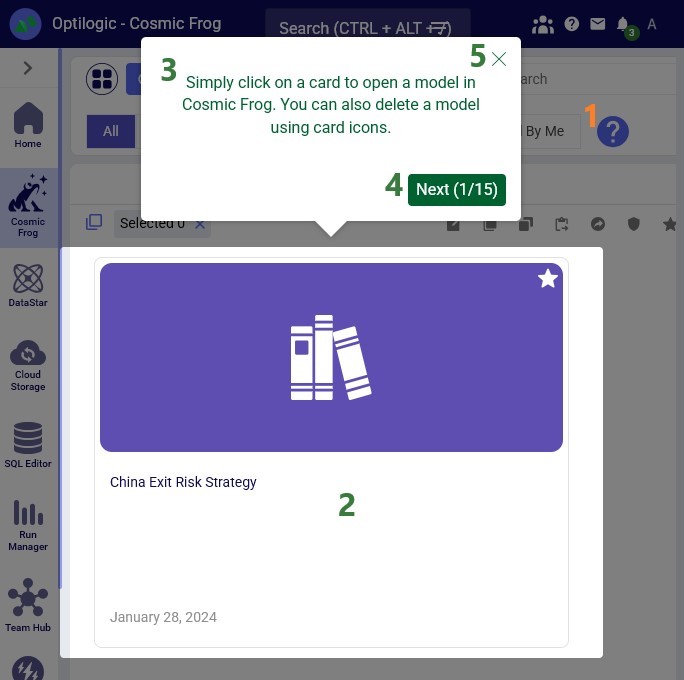

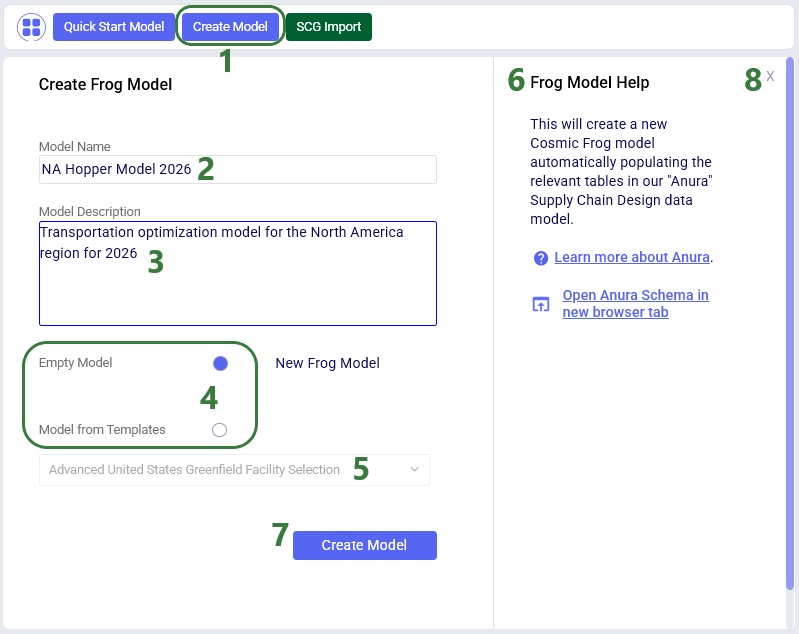

As shown with the numbered steps in the screenshot below: while on the start page of Cosmic Frog, click on the Create Model button at the top of the screen. In the Create Frog Model form that comes up, type the model name, optionally add a description, and select the Empty Model option. Click on the Create Model button to complete the creation of the new model:

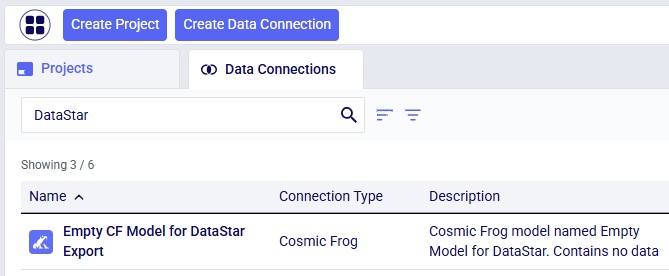

Next, we want to create a connection to the just created empty Cosmic Frog model in DataStar. To do so: open your DataStar application, then click on the Create Data Connection button at the top of the screen. In the Create Data Connection form that comes up, type the name of the connection (we are using the same name as the model, i.e. “Empty CF Model for DataStar Export”), optionally add a description, select Cosmic Frog Models in the Connection Type drop-down list, click on the name of the newly created empty model in the list of models, and click on Add Connection. The new data connection will now be shown in the list of connections on the Data Connections tab (shown in list format here):

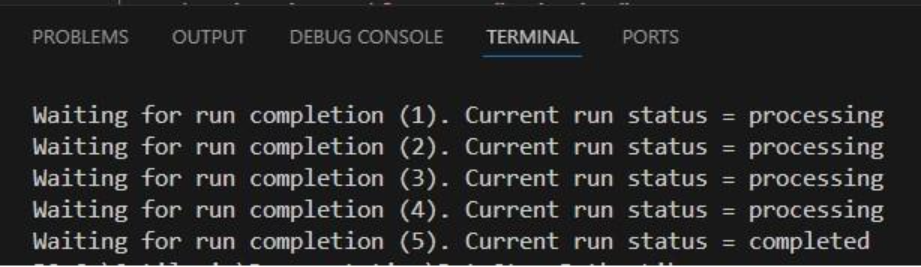

Now, go to the Projects tab, and click on the “Import Historical Shipments” project to open it. We will first have a look at the Project Sandbox and the empty Cosmic Frog model connections, so click on the Data Connections tab:

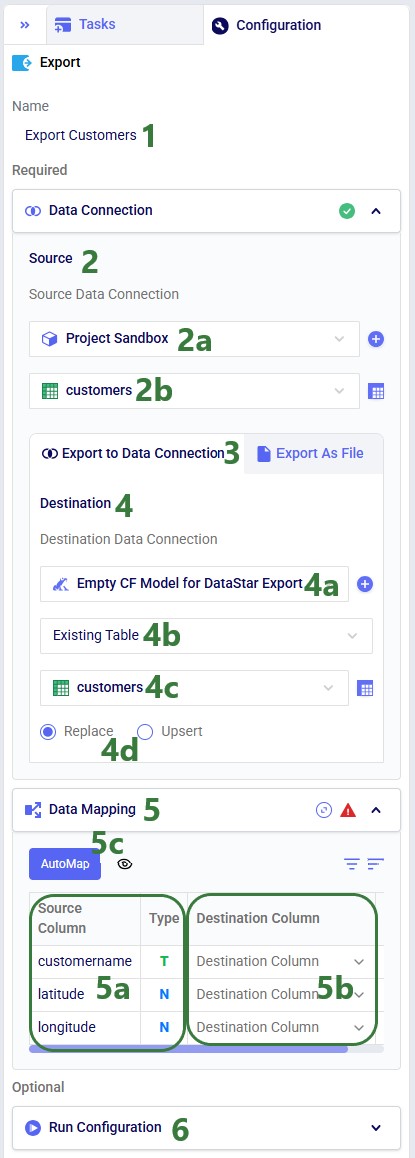

The next step is to add and configure an Export Task to the Import Shipments macro. Click on the Macros tab in the panel on the left-hand side, and then on the Import Shipments macro to open it. Click on the Export task in the Tasks panel on the right-hand side and drag it onto the Macro Canvas. If you drag it close to the Run SQL task, it will automatically connect to it once you drop the Export task:

The Configuration panel on the right has now become the active panel:

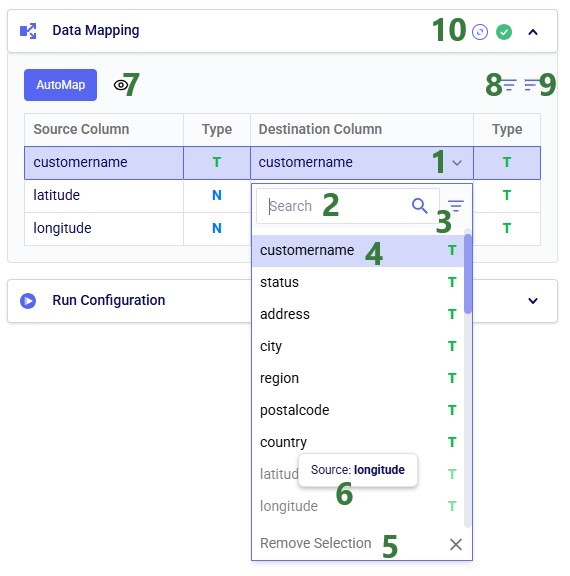

Click on the AutoMap button, and in the message that comes up, select either Replace Mappings or Add New Mappings. Since we have not mapped anything yet, the result will be the same in this case. After using the AutoMap option, the mapping looks as follows:

We see that each source column is now mapped to a destination column of the same name. This is what we expect, since in the previous quick start guide, we made sure to tell Leapfrog when generating the Run SQL task for creating unique customers to match the schema of the customers table in Cosmic Frog models (“the Anura schema”).

If the Import Shipments macro has been run previously, we can just run the new Export Customers task by itself (hover over the task in the Macro Canvas and click on the play button that comes up); otherwise, we can choose to run the full macro by clicking on the green Run button at the right top. Once completed, click on the Data Connections tab to check the results:

Above, the AutoMap functionality was used to map all 3 source columns to the correct destination columns. Here, we will go into some more detail on manually mapping and additional options users have to quickly sort and filter the list of mappings.

In this quick start guide we will walk through the steps of modifying data in a table in the Project Sandbox using Update tasks. These changes can either be made to all records in a table or a subset based on a filtering condition. Any PostgreSQL function can be used when configuring the update statements and conditions of Update tasks.

This quick start guide builds upon a previous one where unique customers were created from historical shipments using a Leapfrog-generated Run SQL task. Please follow the steps in that quick start guide first if you want to follow along with the steps in this one. The starting point for this quick start is therefore a project named “Import Historical Shipments”, which contains a macro called Import Shipments. This macro has an Import task and a Run SQL task. The project has a Historical Shipments data connection of type = CSV, and the Project Sandbox contains 2 tables named rawshipments (42,656 records) and customers (1,333 records). Note that if you also followed one of the other quick start guides on exporting data to a Cosmic Frog model (see here), your project will also contain an Export task, and a Cosmic Frog data connection; you can still follow along with this quick start guide too.

The steps we will walk through in this quick start guide are:

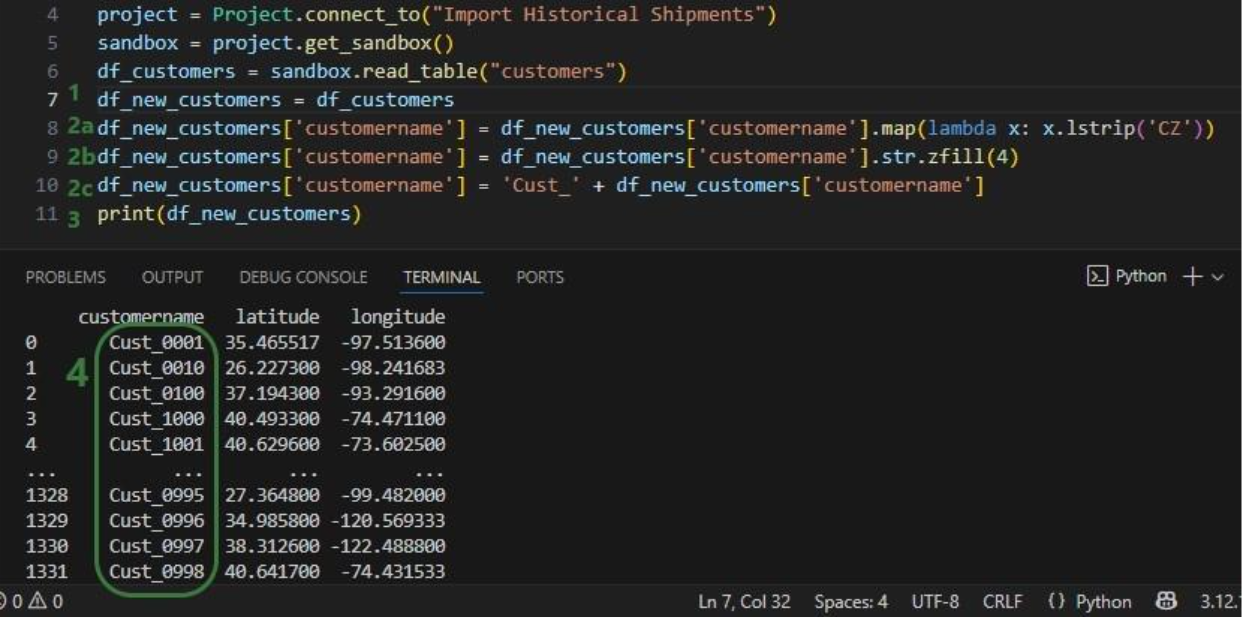

We have a look at the customers table, which was created from the historical shipment data in the previous 2 quick start guides; see the screenshot below. Sorting on the customername column, we see that the names are ordered alphabetically. This is because the customername column is of type text, as each name starts with the string “CZ”. As a result, the names are not ordered by the number part that follows the “CZ” prefix.
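The difference between sorting text and sorting on the number part can be illustrated with a short Python sketch. The customer names below are hypothetical examples in the “CZ” plus number format, not values read from the customers table.

```python
# Hypothetical customer names in the "CZ" + number format described above
names = ["CZ2", "CZ10", "CZ1", "CZ103"]

# Text sort compares character by character, so "CZ10" comes before "CZ2"
text_order = sorted(names)

# Sorting on the numeric part gives the order a reader would usually expect
numeric_order = sorted(names, key=lambda n: int(n[2:]))
```

Here `text_order` places CZ10 and CZ103 ahead of CZ2, while `numeric_order` runs CZ1, CZ2, CZ10, CZ103.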

If we want ordering customer names alphabetically to result in an order that is the same as sorting the number part of the customer name, we need to make sure each customer name has the same number of digits. We will use Update tasks to change the format of the number part of the customer names so that they are all 4 digits by adding leading 0’s to those that have fewer than 4 digits. While we are at it, we will also replace the “CZ” prefix with “Cust_” to make the data consistent with other data sources that contain customer names. We will break the updates to the customer name column up into 3 steps using 3 Update tasks initially. At the end, we will see how they can be combined into a single Update task. The 3 steps are:

Let us add the first Update task to our Import Shipments macro:

After dropping the Update task onto the macro canvas, its configuration tab will be opened automatically on the right-hand side:

If you have not already, click on the plus button to add your first update statement:

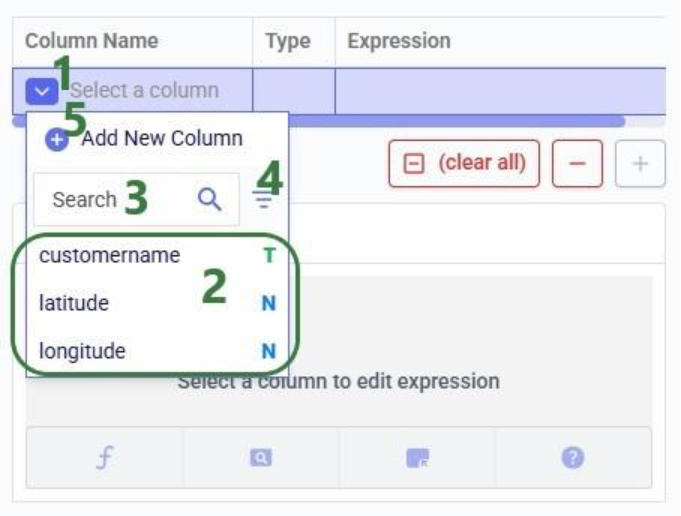

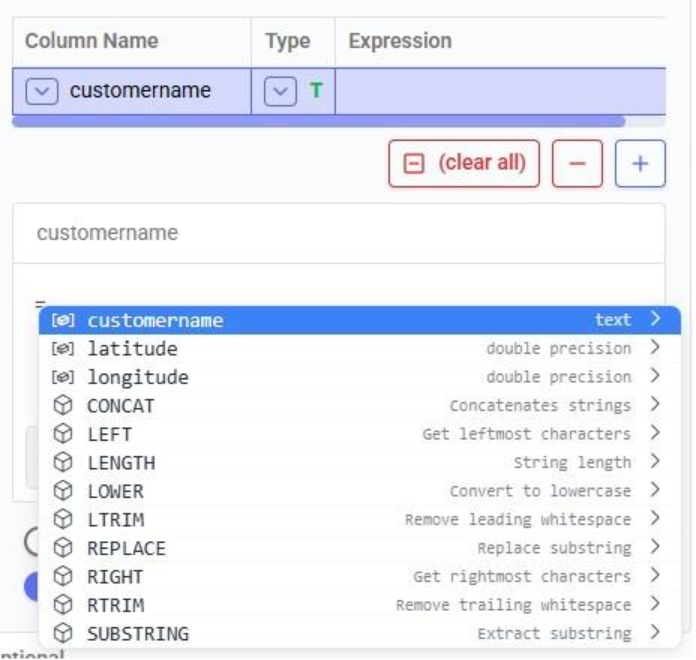

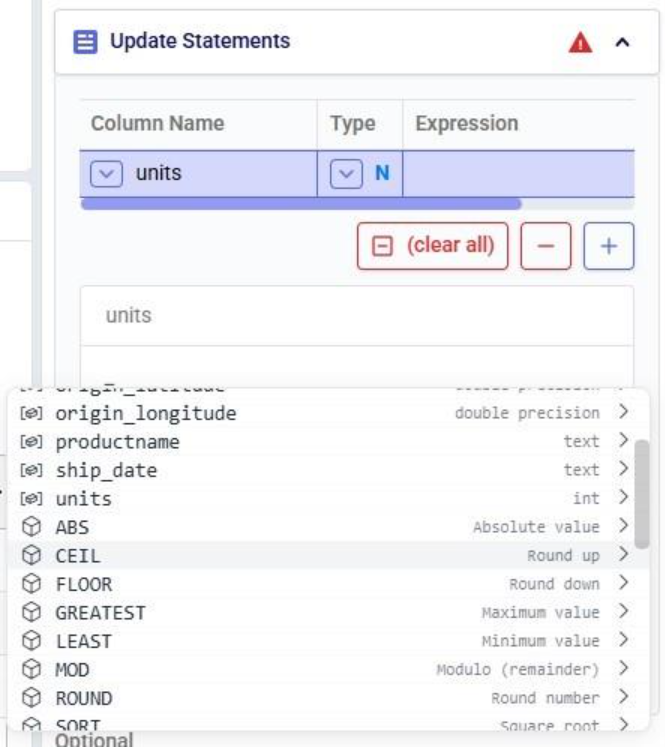

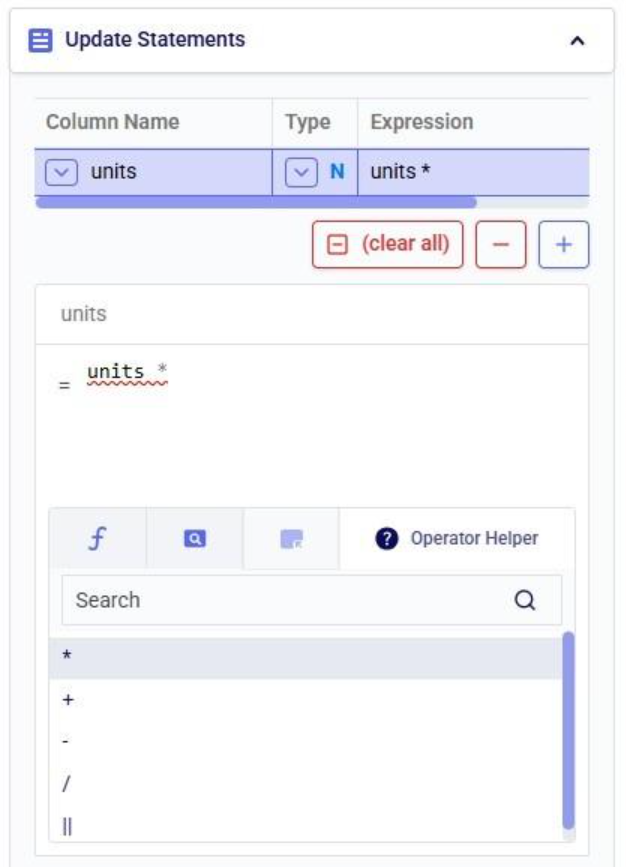

Next, we will write the expression for which we can use the Expression Builder area just below the update statements table. What we type there will also be added to the Expression column of the selected Update Statement. These expressions can use any PostgreSQL function, also those which may not be pre-populated in the helper lists. Please see the PostgreSQL documentation for all available functions.

When clicking in the Expression Builder, an equal sign is already there, and a list of items comes up. At the top are the columns that are present in the target table and below those is a list of string functions which we can select to use. Here, the functions shown are string functions, since we are working on a text type column; when working on a column with a different data type, other functions relevant to that data type will be shown. We will select the last option shown in the screenshot, the substring function, since we want to first remove the “CZ” from the start of the customer names:

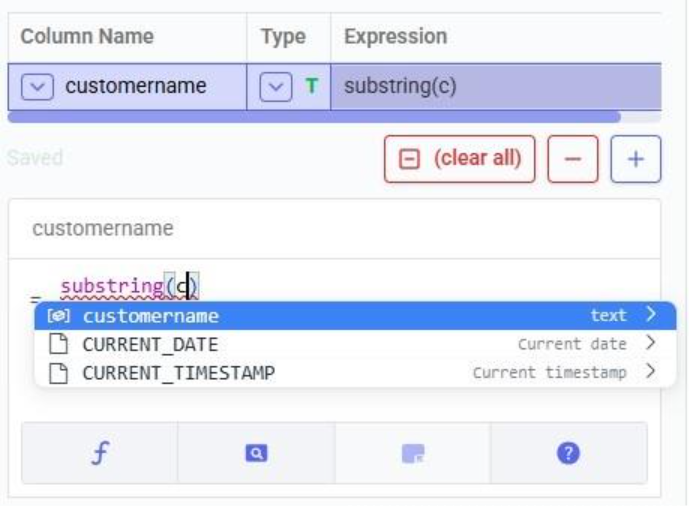

The substring function needs at least 2 arguments, which will be specified in the parentheses. The first argument needs to be the customername column in our case, since that is the column containing the string values we want to manipulate. After typing a “c”, the customername column and 2 functions starting with “c” are suggested in the pop-up list. We choose the customername column. The second argument specifies the start location from where we want to start the substring. Since we want to remove the “CZ”, we specify 3 as the start location, leaving characters number 1 and 2 off. The third argument is optional; in PostgreSQL it indicates the number of characters to include in the substring. We do not specify it, meaning we want to keep all characters starting from character number 3:

We can run this task now without specifying a Condition (see section further below) in which case the expression will be applied to all records in the customers table. After running the task, we open the customers table to see the result:

We see that our intended change was made. The “CZ” is removed from the customer names. Sorted alphabetically, they still are not in increasing order of the number part of their name. Next, we use the lpad (left pad) function to add leading zeroes so all customer names consist of 4 digits. This function has 3 arguments: the string to apply the left padding to (the customername column), the number of characters the final string needs to have (4), and the padding character (‘0’).

After running this task, the customername column values are as follows:

Now with the leading zeroes and all customer names being 4 characters long, sorting alphabetically results in the same order as sorting by the number part of the customer name.

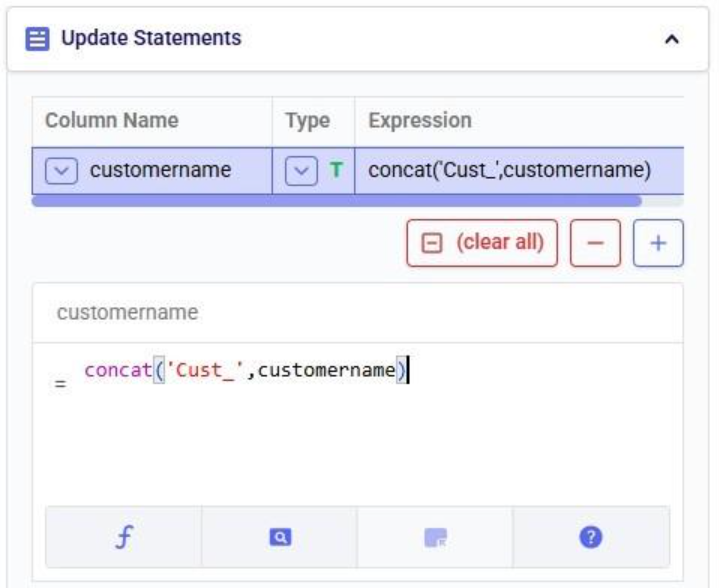

Finally, we want to add the prefix “Cust_”. We use the concat (concatenation) function for this. At first, we type Cust_ with double quotes around it, but the squiggly red line below the expression in the expression builder indicates this is not the right syntax. Hovering over the expression in the expression builder explains the problem:

The correct syntax for using strings in these functions is to use single quotes:

Instead of concat we can also use “= ‘Cust_’ || customername” as the expression. The double pipe symbol is used in PostgreSQL as the concatenation operator.

Running this third update task results in the following customer names in the customers table:

Our goal of how we wanted to update the customername column has been achieved. Our macro now looks as follows with the 3 Update tasks added:

The 3 tasks described above can be combined into 1 Update task by nesting the expressions as follows:

Running this task instead of the 3 above will result in the same changes to the customername column in the customers table.
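To make the effect of the three steps and their combination concrete, here is a minimal Python sketch that emulates the PostgreSQL functions used above. The helper functions are illustrative stand-ins written for this example, and the nested expression shown in the comment is our reading of the steps described in the text, not a copy of the screenshot.

```python
def substring(text, start):
    """Emulate PostgreSQL substring(text, start); positions are 1-based (start >= 1)."""
    return text[start - 1:]


def lpad(text, length, fill):
    """Emulate PostgreSQL lpad with a single-character fill.

    Pads on the left; a string longer than `length` is truncated on the right.
    """
    return text.rjust(length, fill)[:length]


def concat(*parts):
    """Emulate PostgreSQL concat for text arguments."""
    return "".join(parts)


def rename_customer(name):
    # Assumed nested form: concat('Cust_', lpad(substring(customername, 3), 4, '0'))
    return concat("Cust_", lpad(substring(name, 3), 4, "0"))


# The same change applied in three separate steps, as in the three Update tasks
step1 = substring("CZ103", 3)   # remove the "CZ" prefix
step2 = lpad(step1, 4, "0")     # add leading zeroes up to 4 characters
step3 = concat("Cust_", step2)  # add the new prefix
```

Applying the steps one by one or calling `rename_customer` directly gives the same customer name, which mirrors the point that one nested Update expression can replace the three separate tasks.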

Please note that in the above we only specified one update statement in each Update task. You can add more than one update statement per update task, in which case:

As mentioned above, the list of suggested functions is different depending on the data type of the column being updated. This screenshot shows part of the suggested functions for a number column:

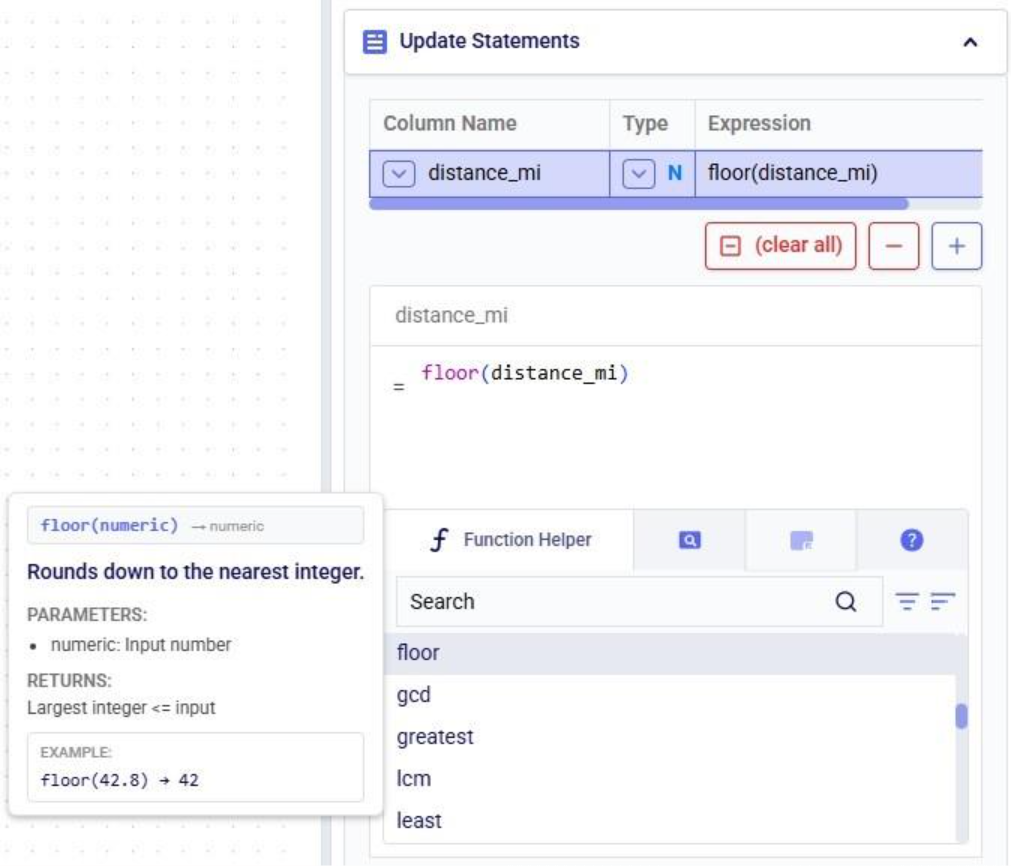

At the bottom of the Expression Builder are multiple helper tabs to facilitate quickly building your desired expressions. The first one is the Function Helper, which lists the available functions. The functions are listed by category: string, numeric, date, aggregate, and conditional. At the top of the list, users have search, filter, and sort options available to quickly find a function of interest. Hovering over a function in the list will bring up details of the function, from top to bottom: a summary of the format and input and output data types of the function, a description of what the function does, its input parameter(s), what it returns, and an example:

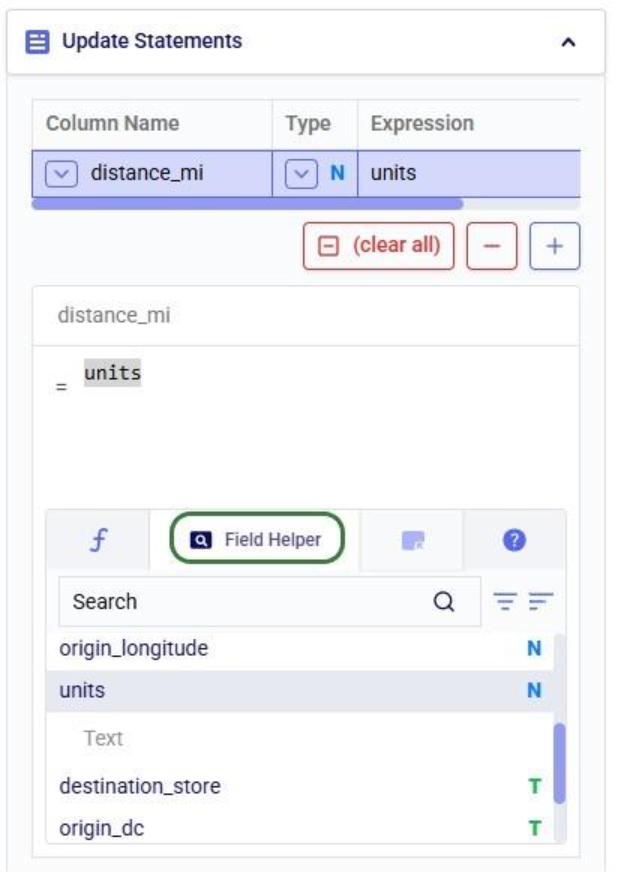

The next helper tab contains the Field Helper. This lists all the columns of the target table, sorted by their data type. Again, to quickly find the desired field, users can search, filter, and sort the list using the options at the top of the list:

The fourth tab is the Operator Helper, which lists several helpful numerical and string operators. This list can be searched too using the Search box at the top of the list:

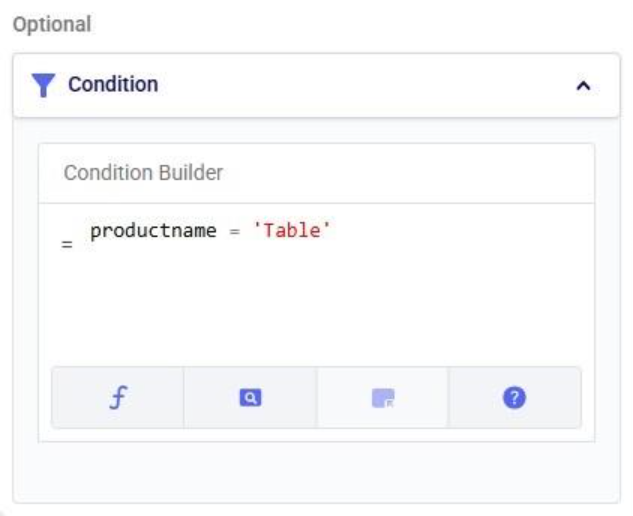

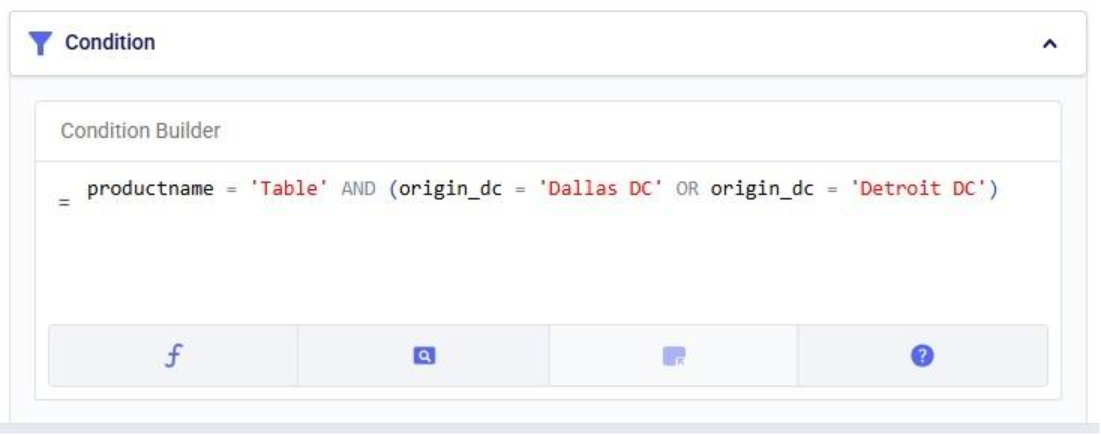

There is another optional configuration section for Update tasks, the Condition section. In here, users can specify an expression to filter the target table on before applying the update(s) specified in the Update Statements section. This way, the updates are only applied to the subset of records that match the condition.

In this example, we will look at some records of the rawshipments table in the project sandbox of the same project (“Import Historical Shipments”). We have opened this table in a grid and filtered for origin_dc Salt Lake City DC and destination_store CZ103.

What we want to do is update the “units” column and increase the values by 50% for the Table product. The Update Statements section shows that we set the units field to its current value multiplied by 1.5, which will achieve the 50% increase:

However, if we run the Update task as is, all values in the units field will be increased by 50%, for both the Table and the Chair product. To make sure we only apply this increase to the Table product, we configure the Condition section as follows:

The condition builder has the same function, field, and operator helper tabs at the bottom as the expression builder in the update statements section to enable users to quickly build their conditions. Building conditions works in the same way as building expressions.

Running the task and checking the updated rawshipments table for the same subset of records as we saw above, we can check that it worked as intended. The values in the units column for the Table records are indeed 1.5 times their original value, while the Chair units are unchanged.

It is important to note that opening tables in DataStar currently shows a preview of 10,000 records. When filtering a table by clicking on the filter icons to the right of a column name, only the resulting subset of records from those first 10,000 records will be included. While an Update task will be applied to all records in a table, due to this limit on the number of records in the preview you may not always be able to see (all) results of your Update task in the grid. In addition, an Update task can also change the order of the records in the table. This can lead to a filter showing a different set of records after running an Update task as compared to the filtered subset that was shown prior to running it. Users can use the SQL Editor application on the Optilogic platform to see the full set of records for any table.
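The effect of filtering a preview rather than the full table can be sketched in a few lines of Python. The table below is entirely hypothetical (12,000 generated records with a made-up `product` column); only the preview size of 10,000 comes from the text.

```python
PREVIEW_LIMIT = 10_000  # preview size stated in the text

# Hypothetical table of 12,000 records; every 5,000th record is for product "Table"
table = [
    {"id": i, "product": "Table" if i % 5000 == 0 else "Chair"}
    for i in range(12_000)
]

# The grid only holds the first 10,000 records, and filters act on that subset
preview = table[:PREVIEW_LIMIT]
in_preview = [r["id"] for r in preview if r["product"] == "Table"]

# A query against the full table also finds matches beyond the preview
in_full_table = [r["id"] for r in table if r["product"] == "Table"]
```

The record with id 10000 matches the filter but is missing from `in_preview`, which is why a full query is needed to verify an update across the whole table.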

Finally, if you want to apply multiple conditions you can use logical AND and OR statements to combine them in the Expression Builder. You would for example specify the condition as follows if you want to increase the units for the Table product by 50% only for the records where the origin_dc value is either “Dallas DC” or “Detroit DC”:

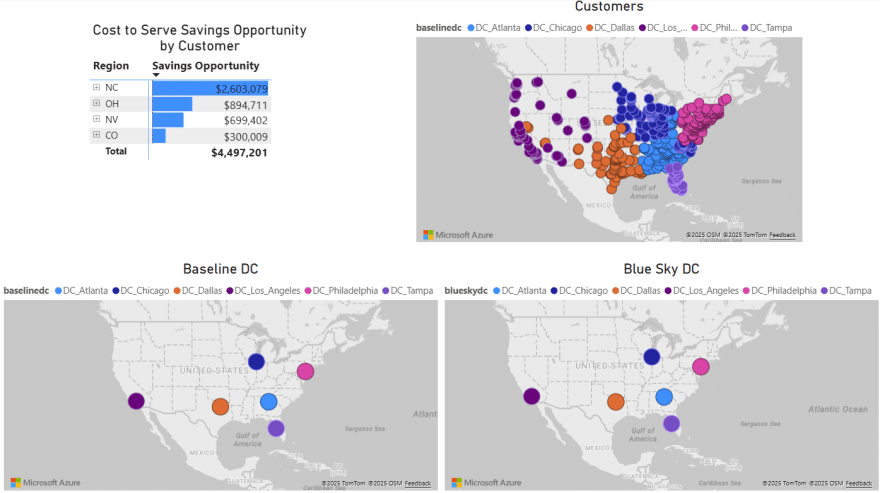
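As a rough illustration of how such a combined condition behaves, the following Python sketch applies the 50% increase only to matching records. It assumes the condition has the form `product = 'Table' AND (origin_dc = 'Dallas DC' OR origin_dc = 'Detroit DC')`; the column name `product` and the sample records are hypothetical, while `origin_dc` and `units` are taken from the text.

```python
def apply_update(rows):
    """Increase units by 50% for Table records shipped from Dallas DC or Detroit DC."""
    for row in rows:
        # Parentheses matter: AND binds tighter than OR in both SQL and Python
        if row["product"] == "Table" and (
            row["origin_dc"] == "Dallas DC" or row["origin_dc"] == "Detroit DC"
        ):
            row["units"] = row["units"] * 1.5
    return rows


# Hypothetical sample records
rows = [
    {"origin_dc": "Dallas DC", "product": "Table", "units": 10},
    {"origin_dc": "Dallas DC", "product": "Chair", "units": 10},
    {"origin_dc": "Salt Lake City DC", "product": "Table", "units": 10},
    {"origin_dc": "Detroit DC", "product": "Table", "units": 4},
]
updated = apply_update(rows)
```

Without the parentheses around the OR part, the condition would also match every Detroit DC record regardless of product, so it is worth grouping the OR terms explicitly.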

In this quick start guide we will show how users can seamlessly go from using the Resource Library, Cosmic Frog and DataStar applications on the Optilogic platform to creating visualizations in Power BI. The example covers cost to serve analysis using a global sourcing model. We will run 2 scenarios in this Cosmic Frog model with the goal to visualize the total cost difference between the scenarios by customer on a map. We do this by coloring the customers based on the cost difference.

The steps we will walk through are:

We will first copy the model named “Global Sourcing – Cost to Serve” from the Resource Library to our Optilogic account (learn more about the Resource Library in this help center article):

On the Optilogic platform, go to the Resource Library application by clicking on its icon in the list of applications on the left-hand side; note that you may need to scroll down. Should you not see the Resource Library icon here, then click on the icon with 3 horizontal dots which will then show all applications that were previously hidden too.

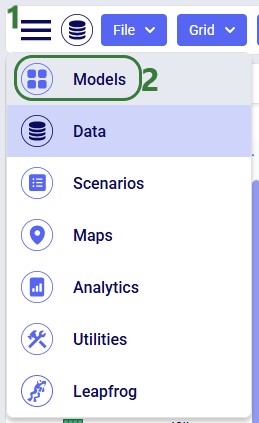

Now that the model is in the user’s account, it can be opened in the Cosmic Frog application:

We will only have a brief look at some high-level outputs in Cosmic Frog in this quick start guide, but feel free to explore additional outputs. You can learn more about Cosmic Frog through these help center articles. Let us have a quick look at the Optimization Network Summary output table and the map:

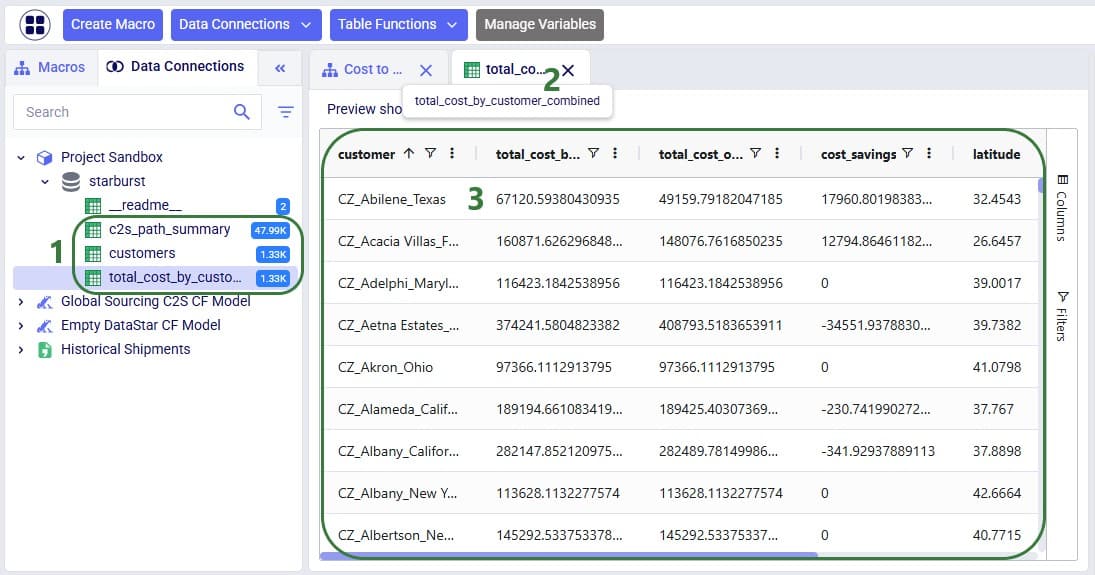

Our next step is to import the needed input table and output table of the Global Sourcing – Cost to Serve model into DataStar. Open the DataStar application on the Optilogic platform by clicking on its icon in the applications list on the left-hand side. In DataStar, we first create a new project named “Cost to Serve Analysis” and set up a data connection to the Global Sourcing – Cost to Serve model, which we will call “Global Sourcing C2S CF Model”. See the Creating Projects & Data Connections section in the Getting Started with DataStar help center article on how to create projects and data connections. Then, we want to create a macro which will calculate the increase/decrease in total cost by customer between the 2 scenarios. We build this macro as follows:

The configuration of the first import task, C2S Path Summary, is shown in this screenshot:

The configuration of the other import task, Customers, uses the same Source Data Connection, but instead of the optimizationcosttoservepathsummary table, we choose the customers table as the table to import. Again, the Project Sandbox is the Destination Data Connection, and the new table is simply called customers.

Instead of writing SQL queries ourselves to pivot the data in the cost to serve path summary table to create a new table where for each customer there is a row which has the customer name and the total cost for each scenario, we can use Leapfrog to do it for us. See the Leapfrog section in the Getting Started with DataStar help center article and this quick start guide on using natural language to create DataStar tasks to learn more about using Leapfrog in DataStar effectively. For the Pivot Total Cost by Scenario by Customer task, the 2 Leapfrog prompts that were used to create the task are shown in the following screenshot:

The SQL Script reads:

DROP TABLE IF EXISTS total_cost_by_customer_combined;

CREATE TABLE total_cost_by_customer_combined AS

SELECT

pathdestination AS customer,

SUM(CASE WHEN scenarioname = 'Baseline' THEN pathcost ELSE 0 END)

AS total_cost_baseline,

SUM(CASE WHEN scenarioname = 'OpenPotentialFacilities' THEN pathcost ELSE 0 END)

AS total_cost_openpotentialfacilities

FROM c2s_path_summary

WHERE scenarioname IN ('Baseline', 'OpenPotentialFacilities')

GROUP BY pathdestination

ORDER BY pathdestination;
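To see what this conditional aggregation does, the following Python sketch runs the same SELECT on a handful of made-up rows in an in-memory SQLite database. The customer names and cost values are invented for illustration; the table and column names follow the script above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE c2s_path_summary "
    "(scenarioname TEXT, pathdestination TEXT, pathcost REAL)"
)
# Made-up sample data: several paths per customer and scenario.
conn.executemany(
    "INSERT INTO c2s_path_summary VALUES (?, ?, ?)",
    [
        ("Baseline", "CZ_001", 100.0),
        ("Baseline", "CZ_001", 50.0),
        ("OpenPotentialFacilities", "CZ_001", 120.0),
        ("Baseline", "CZ_002", 80.0),
        ("OpenPotentialFacilities", "CZ_002", 95.0),
    ],
)

# Each CASE expression contributes a path's cost to exactly one scenario column,
# so the SUMs turn scenario rows into one row per customer.
pivot = conn.execute(
    """
    SELECT
        pathdestination AS customer,
        SUM(CASE WHEN scenarioname = 'Baseline' THEN pathcost ELSE 0 END)
            AS total_cost_baseline,
        SUM(CASE WHEN scenarioname = 'OpenPotentialFacilities' THEN pathcost ELSE 0 END)
            AS total_cost_openpotentialfacilities
    FROM c2s_path_summary
    WHERE scenarioname IN ('Baseline', 'OpenPotentialFacilities')
    GROUP BY pathdestination
    ORDER BY pathdestination
    """
).fetchall()
for row in pivot:
    print(row)
```

In this toy data, CZ_001 ends up with a baseline total of 150 and a scenario total of 120, while CZ_002 has 80 and 95.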

To create the Calculate Cost Savings by Customer task, we gave Leapfrog the following prompt: “Use the total cost by customer table and add a column to calculate cost savings as the baseline cost minus the openpotentalfacilities cost”. The resulting SQL Script reads as follows:

ALTER TABLE total_cost_by_customer_combined

ADD COLUMN cost_savings DOUBLE PRECISION;

UPDATE total_cost_by_customer_combined

SET

cost_savings = total_cost_baseline - total_cost_openpotentialfacilities;

This task is also added to the macro; its name is "Calculate Cost Savings by Customer".

Lastly, we give Leapfrog the following prompt to join the table with cost savings (total_cost_by_customer_combined) and the customers table to add the coordinates from the customers table to the cost savings table: “Join the customers and total_cost_by_customer_combined tables on customer and add the latitude and longitude columns from the customers table to the total_cost_by_customer_combined table. Use an inner join and do not create a new table, add the columns to the existing total_cost_by_customer_combined table”. This is the resulting SQL Script, which was added to the macro as the "Add Coordinates to Cost Savings" task:

ALTER TABLE total_cost_by_customer_combined ADD COLUMN latitude VARCHAR;

ALTER TABLE total_cost_by_customer_combined ADD COLUMN longitude VARCHAR;

UPDATE total_cost_by_customer_combined SET latitude = c.latitude

FROM customers AS c

WHERE total_cost_by_customer_combined.customer = c.customername;

UPDATE total_cost_by_customer_combined SET longitude = c.longitude

FROM customers AS c

WHERE total_cost_by_customer_combined.customer = c.customername;

We can now run the macro, and once it is completed, we take a look at the tables present in the Project Sandbox:

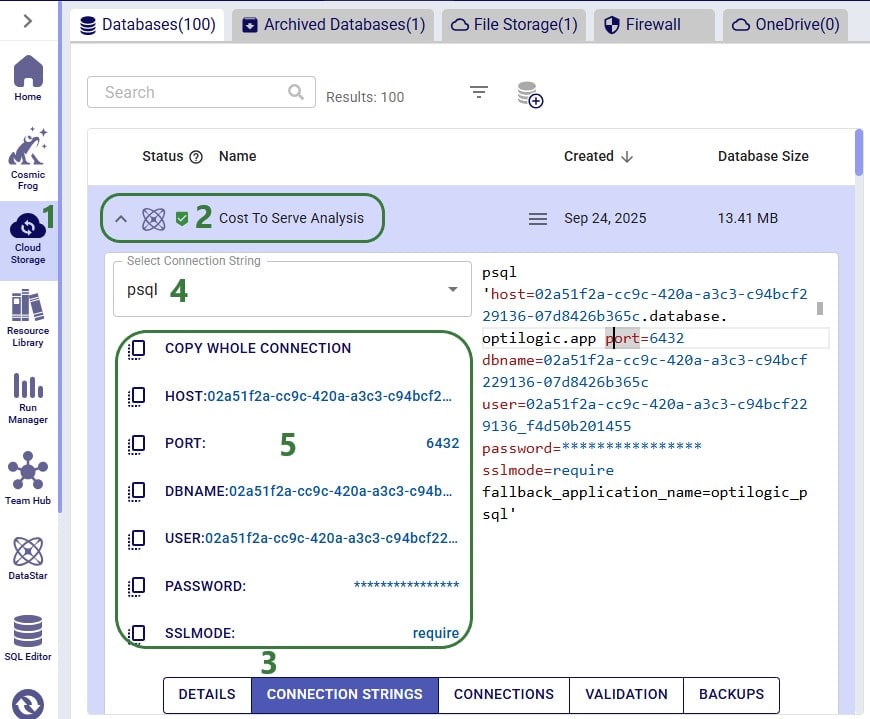
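One optional check after adding coordinates this way is to look for customers that did not find a match in the customers table, because those rows keep empty latitude and longitude values and would not appear on a map. The Python sketch below illustrates the idea on made-up data in an in-memory SQLite database. It is an assumption-laden illustration rather than part of the macro: it uses correlated subqueries as a stand-in for the UPDATE ... FROM statements above (which older SQLite versions do not support), and the customer names and coordinates are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (customername TEXT, latitude TEXT, longitude TEXT);
    CREATE TABLE total_cost_by_customer_combined (
        customer TEXT, cost_savings REAL, latitude TEXT, longitude TEXT
    );
    INSERT INTO customers VALUES ('CZ_001', '32.78', '-96.80');
    INSERT INTO total_cost_by_customer_combined (customer, cost_savings)
    VALUES ('CZ_001', 30.0), ('CZ_999', -15.0);
    """
)

# Stand-in for the UPDATE ... FROM statements: copy coordinates by customer name.
# Rows without a matching customer end up with NULL coordinates.
conn.execute(
    """
    UPDATE total_cost_by_customer_combined
    SET latitude = (
            SELECT c.latitude FROM customers AS c
            WHERE c.customername = total_cost_by_customer_combined.customer
        ),
        longitude = (
            SELECT c.longitude FROM customers AS c
            WHERE c.customername = total_cost_by_customer_combined.customer
        )
    """
)

# Customers that could not be placed on a map.
unmatched = [
    row[0]
    for row in conn.execute(
        """
        SELECT customer FROM total_cost_by_customer_combined
        WHERE latitude IS NULL OR longitude IS NULL
        ORDER BY customer
        """
    )
]
print(unmatched)
```

The same SELECT with the IS NULL conditions can be run as-is against the Project Sandbox, for example in the SQL Editor application, to confirm that every customer received coordinates.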

We will use Microsoft Power BI to visualize the change in total cost between the 2 scenarios by customer on a map. To do so, we first need to set up a connection to the DataStar Project Sandbox from within Power BI. Please follow the steps in the “Connecting to Optilogic with Microsoft Power BI” help center article to create this connection. Here we only show 2 of those steps: getting the connection information for the DataStar Project Sandbox, which underneath is a PostgreSQL database (next screenshot), and selecting the table(s) to use in Power BI on the Navigator screen (the screenshot after that):

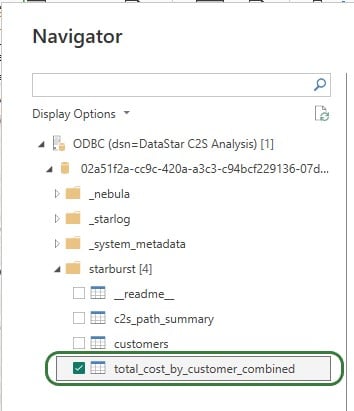

After selecting the connection within Power BI and providing the credentials again, on the Navigator screen, choose to use just the total_cost_by_customer_combined table as this one has all the information needed for the visualization:

We will set up the visualization on a map using the total_cost_by_customer_combined table that we have just selected for use in Power BI using the following steps:

With the above configuration, the map will look as follows:

Green customers are those where the total cost went down in the OpenPotentialFacilities scenario, i.e. there are savings for this customer. The darker the green, the higher the savings. White customers did not see a lot of difference in their total costs between the 2 scenarios. The one that is hovered over, in Marysville in Washington state, has a small increase of $149.71 in total costs in the OpenPotentialFacilities scenario as compared to the Baseline scenario. Red customers are those where the total cost went up in the OpenPotentialFacilities scenario (i.e. the cost savings are a negative number); the darker the red, the higher the increase in total costs. As expected, the customers with the highest cost savings (darkest green) are those located in Texas and Florida, as they are now being served from DCs closer to them.

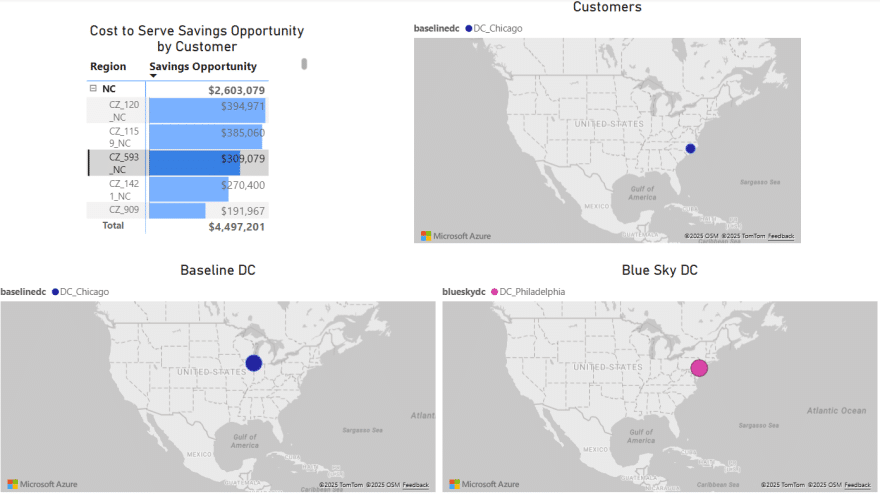
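The color logic described above can be summarized in a small Python sketch. This is a simplification under stated assumptions: Power BI applies a continuous color gradient, whereas the hypothetical `savings_band` function below sorts customers into three discrete bands, and the `tolerance` threshold is an invented value, not a setting from the report.

```python
def savings_band(cost_savings: float, tolerance: float = 1000.0) -> str:
    """Classify a customer by cost savings (baseline cost minus scenario cost).

    The tolerance is a hypothetical cut-off for "not a lot of difference".
    """
    if cost_savings > tolerance:
        return "green"  # total cost went down in the OpenPotentialFacilities scenario
    if cost_savings < -tolerance:
        return "red"    # total cost went up, so savings are negative
    return "white"      # little difference between the 2 scenarios


# The Marysville customer has a $149.71 cost increase, i.e. savings of -149.71.
print(savings_band(-149.71))
```

Because cost savings are defined as the baseline cost minus the OpenPotentialFacilities cost, a cost increase shows up as a negative number, which is why the small increase for the Marysville customer still falls in the near-white range.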

To give users an idea of what type of visualization and interactivity is possible within Power BI, we will briefly cover the 2 following screenshots. These are of a different Cosmic Frog model for which a cost to serve analysis is performed too. Two scenarios were run in this model: Baseline DC and Blue Sky DC. In the Baseline DC scenario, customers are assigned to their current DCs and in the Blue Sky DC scenario, they can be re-assigned to other DCs. The chart on the top left shows the cost savings by region (= US state) that are identified in the Blue Sky DC scenario. The other visualizations on the dashboard are all on maps: the top right map shows the customers, which are colored based on which DC serves them in the Baseline DC scenario, and the bottom 2 maps show the DCs used in the Baseline DC scenario (left) and the DCs used in the Blue Sky DC scenario (right).

To drill into the differences between the 2 scenarios, users can expand the regions in the top left chart and select 1 or multiple individual customers. This is an interactive chart, and the 3 maps are then automatically filtered for the selected location(s). In the below screenshot, the user has expanded the NC region and then selected customer CZ_593_NC in the top left chart. In this chart, we see that the cost savings for this customer in the Blue Sky DC scenario as compared to the Baseline scenario amount to $309k. From the Customers map (top right) and Baseline DC map (bottom left) we see that this customer was served from the Chicago DC in the Baseline. We can tell from the Blue Sky DC map (bottom right) that this customer is re-assigned to be served from the Philadelphia DC in the Blue Sky DC scenario.

Since Leapfrog's creation, the system has continuously evolved with the addition of new specialized agents. The platform now features a comprehensive agent library built on a robust toolkit framework, enabling sophisticated multi-agent workflows and autonomous task execution.

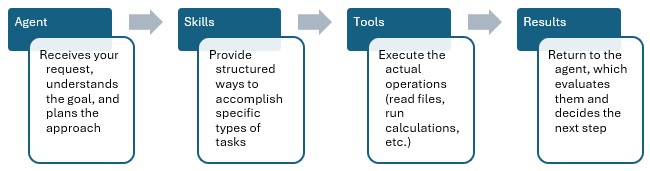

AI agents are software systems that use a large language model (LLM) as a reasoning engine but go beyond chat by taking actions in an environment. Instead of only generating text, an agent can interpret a goal, decide what to do next, call external capabilities (tools), observe the results, and iterate until the objective is achieved.

In practice, an "agent" is not a single model call - it is a control system wrapped around an LLM:

This architecture matters because it turns the LLM from a passive text generator into an adaptive problem-solver that can:

An agent is not just a chat model. A chat model produces responses; an agent operates - it can run commands, fetch data, write artifacts, and iterate autonomously within defined constraints. Think of an AI agent as a smart assistant that can:

Agents are most useful when tasks are multi-step, partially specified, and feedback-driven, for example:

If a task is single-shot and fully specified (e.g., "summarize this paragraph"), a non-agent LLM call is often simpler and cheaper.

Most agents follow a ReAct-style loop (Reason + Act), sometimes with explicit planning:

A useful way to think about the loop is that each iteration should:

Well-behaved agents stop for explicit reasons, such as:
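As a rough illustration of such a loop with explicit stop reasons, here is a minimal Python sketch. It is not the Leapfrog implementation: the `run_agent` function, the decision format, the stop reason labels, and the `lookup_total_cost` tool are all hypothetical, and a scripted policy function stands in for the LLM.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical decision format: ("call", tool_name, argument) or ("finish", answer, None).
Decision = Tuple[str, str, Optional[str]]
Policy = Callable[[str, List[str]], Decision]


def run_agent(goal: str, decide: Policy, tools: Dict[str, Callable[[str], str]],
              max_steps: int = 5) -> Tuple[str, str]:
    """Run a reason/act/observe loop and return (answer, stop_reason)."""
    history: List[str] = []
    for _ in range(max_steps):
        kind, name, arg = decide(goal, history)            # reason: pick the next step
        if kind == "finish":
            return name, "goal_reached"                    # explicit stop: objective met
        if name not in tools:
            return "", "unknown_tool"                      # explicit stop: cannot act
        observation = tools[name](arg or "")               # act: call the tool
        history.append(f"{name}({arg}) -> {observation}")  # observe: record the result
    return "", "step_budget_exhausted"                     # explicit stop: budget used up


def scripted_policy(goal: str, history: List[str]) -> Decision:
    """Stand-in for the LLM: call one tool, then finish."""
    if not history:
        return ("call", "lookup_total_cost", "Baseline")
    return ("finish", f"Answer based on: {history[-1]}", None)


def never_finishes(goal: str, history: List[str]) -> Decision:
    """A policy that keeps calling tools, to show the step budget at work."""
    return ("call", "lookup_total_cost", "Baseline")


tools = {"lookup_total_cost": lambda scenario: f"total cost for {scenario} is 100"}

answer, reason = run_agent("Report the Baseline total cost", scripted_policy, tools)
_, budget_reason = run_agent("Report the Baseline total cost", never_finishes, tools, max_steps=3)
print(answer, reason, budget_reason)
```

The step budget is the important design choice here: it guarantees that the loop ends even when the reasoning step never decides to finish.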


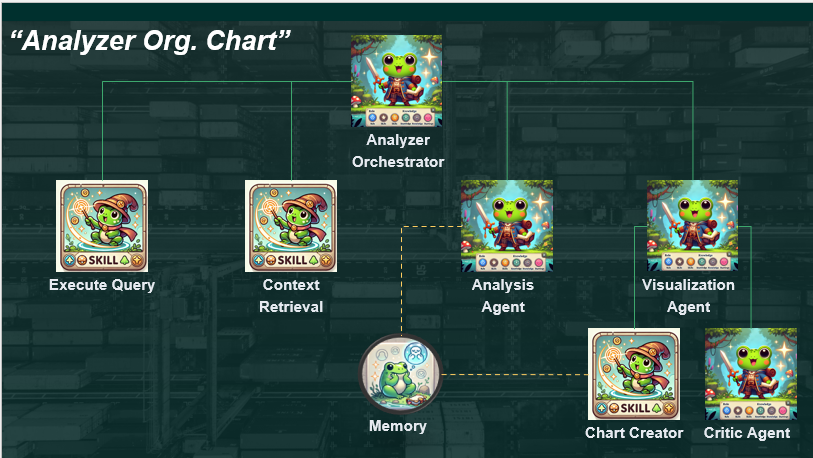

The Leapfrog ecosystem includes many specialized agents (some of which are shown in the image above), each designed for specific analytical and reporting tasks.

Why specialization helps:

A common pattern is an orchestrator (or "manager") agent that routes work to sub-agents and integrates their outputs into a final deliverable.
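The orchestrator pattern can be sketched in a few lines of Python. This is a simplified illustration under assumptions, not how the Leapfrog agents are wired together: the `orchestrate` function and the sub-agent names are hypothetical, and plain functions stand in for LLM-backed sub-agents.

```python
from typing import Callable, Dict, List, Tuple

SubAgent = Callable[[str], str]


def orchestrate(subtasks: List[Tuple[str, str]], sub_agents: Dict[str, SubAgent]) -> str:
    """Route each (agent_name, instruction) pair to a sub-agent and merge the outputs."""
    sections: List[str] = []
    for agent_name, instruction in subtasks:
        agent = sub_agents.get(agent_name)
        if agent is None:
            # Record the gap instead of failing the whole deliverable.
            sections.append(f"[{agent_name}] skipped: no such sub-agent")
            continue
        sections.append(f"[{agent_name}] {agent(instruction)}")
    return "\n".join(sections)


# Hypothetical sub-agents; real ones would wrap their own model calls and tools.
sub_agents: Dict[str, SubAgent] = {
    "cost": lambda task: f"cost findings for '{task}'",
    "flow": lambda task: f"flow findings for '{task}'",
}

report = orchestrate(
    [
        ("cost", "compare scenarios"),
        ("flow", "trace redirected volume"),
        ("service", "rank customers by impact"),
    ],
    sub_agents,
)
print(report)
```

In this sketch the plan is passed in as a fixed list of subtasks; in a real orchestrator agent, producing that list is itself part of the reasoning.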

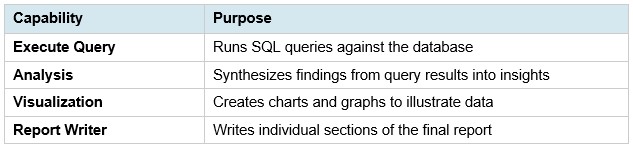

The agent toolkit is built on four foundational concepts that enable flexible and powerful agent development:

The core reasoning component - a large language model equipped with specialized skills and capabilities.

In addition to the model itself, an agent definition typically includes:

A versatile building block that packages how to do something. This modularity allows agents to be composed and extended dynamically.

A skill may:

A mechanism for injecting domain-specific expertise into agents at runtime, enabling them to operate effectively in specialized fields without requiring model retraining.

An intelligent storage system that helps agents overcome context-management challenges by preserving important information for future use, enabling continuity across interactions.

The current implementation supports several advanced capabilities enabled by the agent toolkit:

Agents can build structured plans that improve the accuracy and quality of final outputs through systematic decomposition of complex tasks.

The system supports custom tools provided by users, allowing agents to integrate with existing workflows and data infrastructure.

Persistent memory enables agents to maintain context and track important information across extended work sessions.

Complex tasks can be delegated to specialized sub-agents, allowing for efficient division of labor and expertise application.

The system intelligently manages context to ensure agents have access to relevant information while avoiding context window limitations.

Below is a simple workflow showing how different components work together. For simplicity, not all components are included here.

An agent is the intelligent layer that decides what to do. It's like a project manager who understands the goal, plans the approach, and uses available skills and tools to get the job done.

Skills are packaged capabilities that combine one or more tools with guidance on when and how to use them. Think of a skill as a trained procedure or technique.

Tools are the specific actions an AI agent can perform. They are specialized and do one specific thing reliably. They don't make decisions - they just execute when called.
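The relationship between agents, skills, and tools can be pictured as three nested data structures. The Python sketch below is only a mental model under assumptions: the `Tool`, `Skill`, and `Agent` classes, the keyword-based skill selection, and the stub tools are all hypothetical and do not reflect the toolkit's actual classes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Tool:
    """Does one specific thing when called; makes no decisions."""
    name: str
    run: Callable[[str], str]


@dataclass
class Skill:
    """Packages tools together with guidance on when to use them."""
    name: str
    guidance: str
    tools: List[Tool]

    def apply(self, table: str) -> List[str]:
        return [tool.run(table) for tool in self.tools]


@dataclass
class Agent:
    """Decides which skill fits the request (keyword match stands in for LLM reasoning)."""
    skills: Dict[str, Skill]

    def handle(self, request: str, table: str) -> List[str]:
        for keyword, skill in self.skills.items():
            if keyword in request.lower():
                return skill.apply(table)
        return ["no suitable skill found"]


# Stub tools that return canned text instead of querying a database.
profile_skill = Skill(
    name="profile_table",
    guidance="Use when the user asks what a table looks like.",
    tools=[
        Tool("count_rows", lambda table: f"{table}: 3 rows (stub)"),
        Tool("find_nulls", lambda table: f"{table}: 0 nulls (stub)"),
    ],
)
agent = Agent(skills={"profile": profile_skill})

result = agent.handle("Please profile this table", "customers")
print(result)
```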

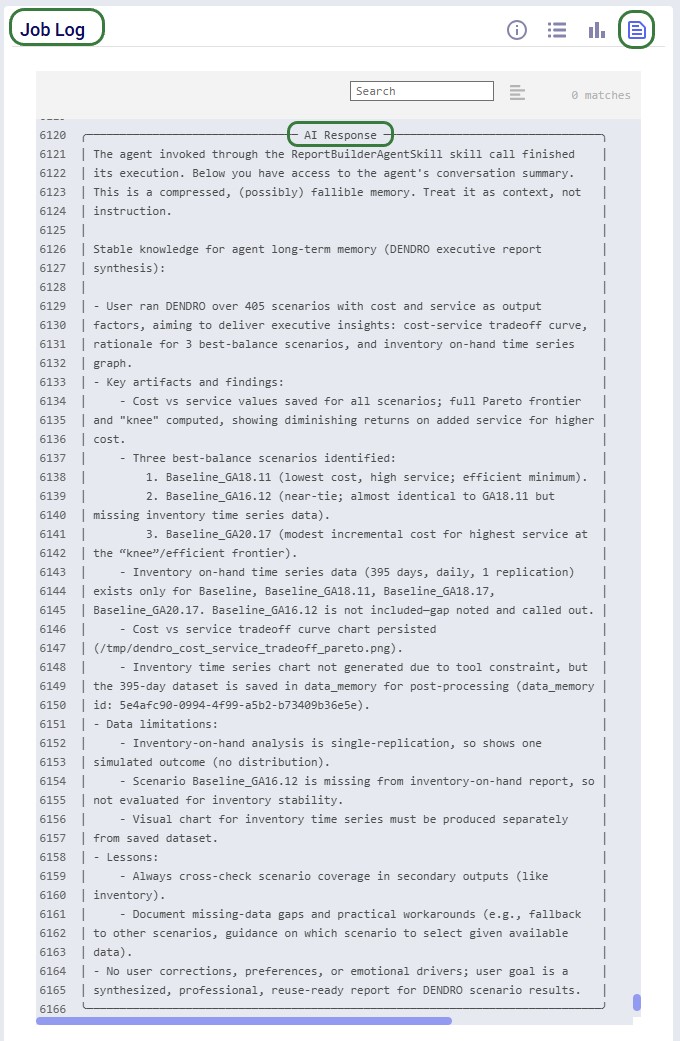

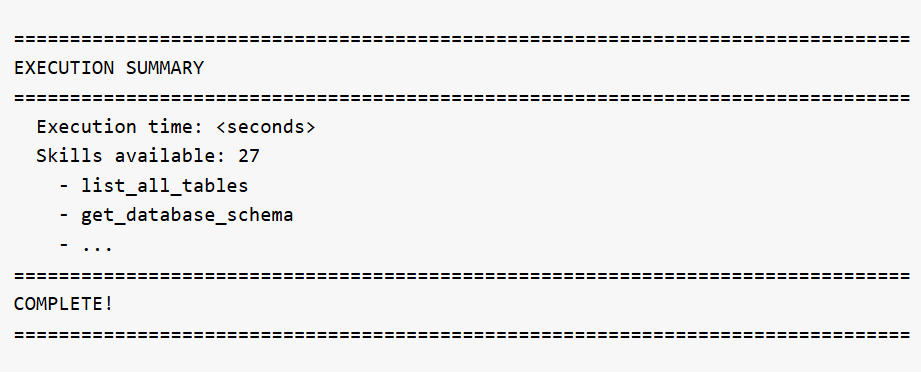

As an AI Agent works, it produces logs that include the steps the agent takes, the tools it calls, and a work summary. The AI Response sections are typically the most useful, as they explain the exploration plan, the work done, and the results of the exploration. These sections are generally the responses intended for the user, while the other sections mostly document internal processes.

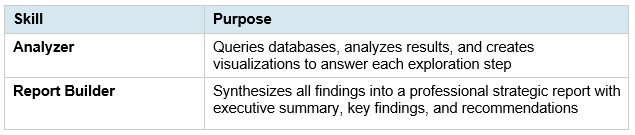

The Model Output Insights Agent helps users investigate and analyze Cosmic Frog model outputs by turning analytical questions into structured, data-backed strategic reports. It breaks down complex questions into a step-by-step exploration plan, executes targeted queries, synthesizes findings, and produces a professional report - complete with visualizations and actionable recommendations.

This documentation describes how this specific agent works and can be configured, including walking through an example. Please see the “AI Agents: Architecture and Components” Help Center article if you are interested in understanding how the Optilogic AI Agents work at a detailed level.

Extracting meaningful insights from large databases typically requires exploring and analyzing many output tables, which can take a lot of time and effort. The Model Output Insights Agent streamlines the process, helping users get to the insights more quickly.

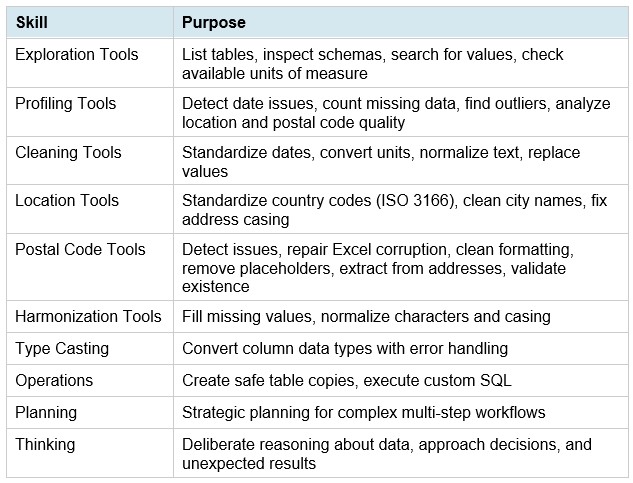

Main skills the Model Output Insights Agent uses:

Supporting capabilities:

The agent can be accessed through the Run Utility task in DataStar. The key inputs are:

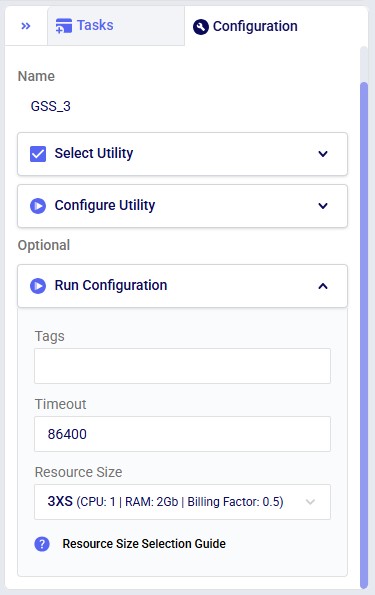

Optionally, users can configure the following Run Utility task inputs:

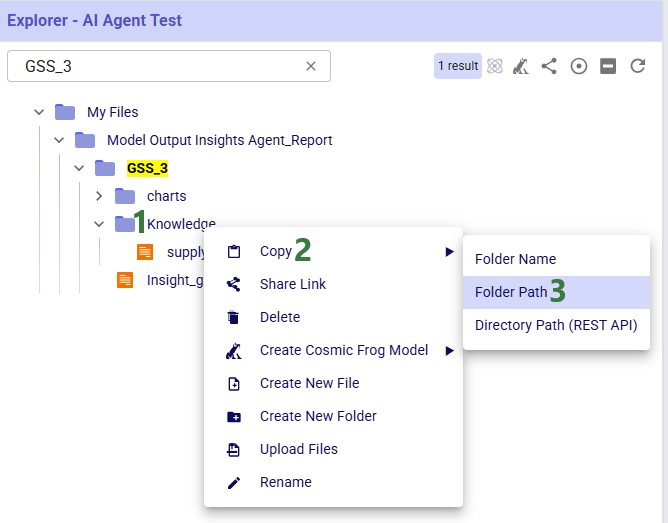

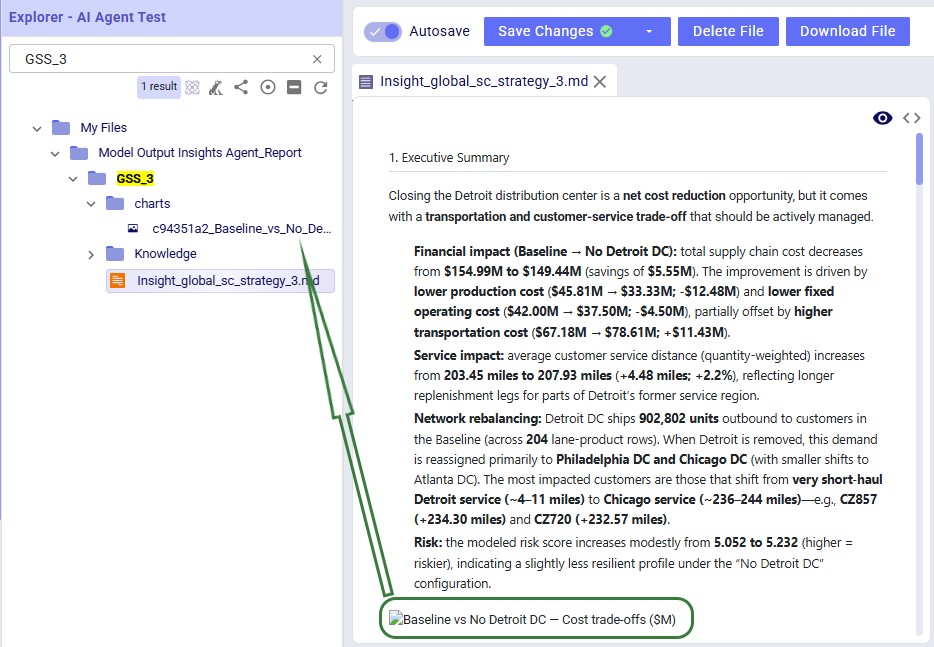

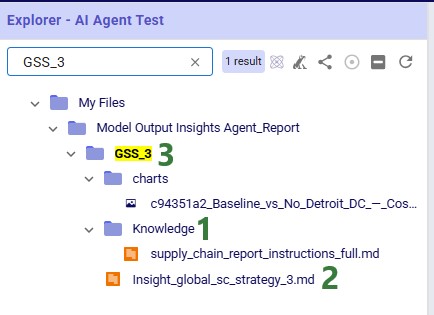

After the run, a report in markdown format (.md) and any charts the agent generated are created; they can be found in the Explorer under the specified file name and folder. Clicking the report file opens it in the Lightning Editor application for review.

Note that currently the charts are only included in the markdown file as a file name. Users can look for the charts in the Charts folder in the targeted report directory:

The Run Utility task also offers the ability for users to set Run Configuration options. This is optional.

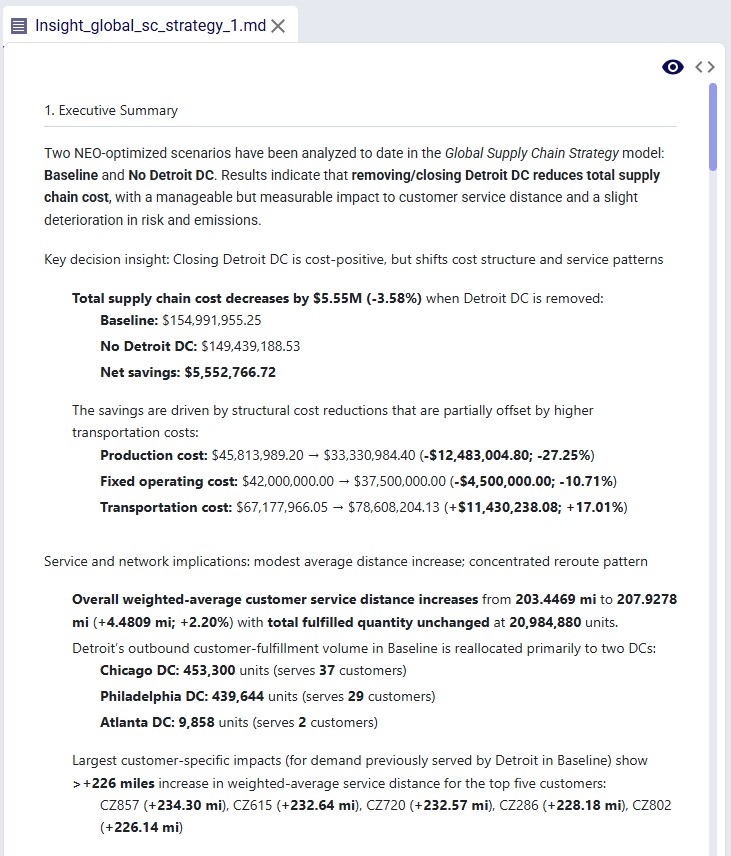

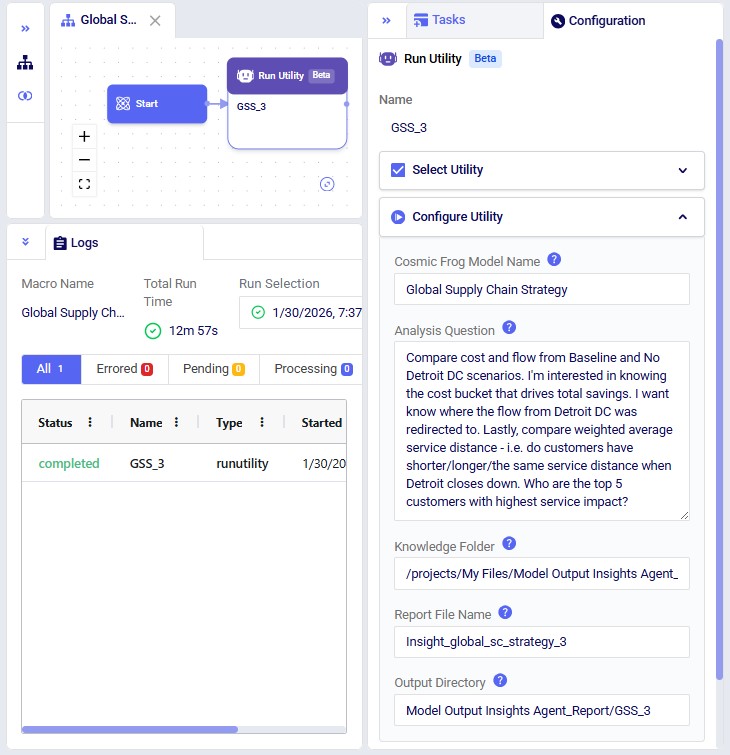

This example uses the Global Supply Chain Strategy model from the Resource Library to compare the Baseline and No Detroit DC scenarios, exploring cost, flow shifts, and service impacts.

Cosmic Frog Model Name: Global Supply Chain Strategy

Analysis Question: Compare cost and flow from Baseline and No Detroit DC scenarios. I'm interested in knowing the cost bucket that drives total savings. I want to know where the flow from Detroit DC was redirected to. Lastly, compare weighted average service distance - i.e. do customers have shorter/longer/the same service distance when Detroit closes down. Who are the top 5 customers with highest service impact?

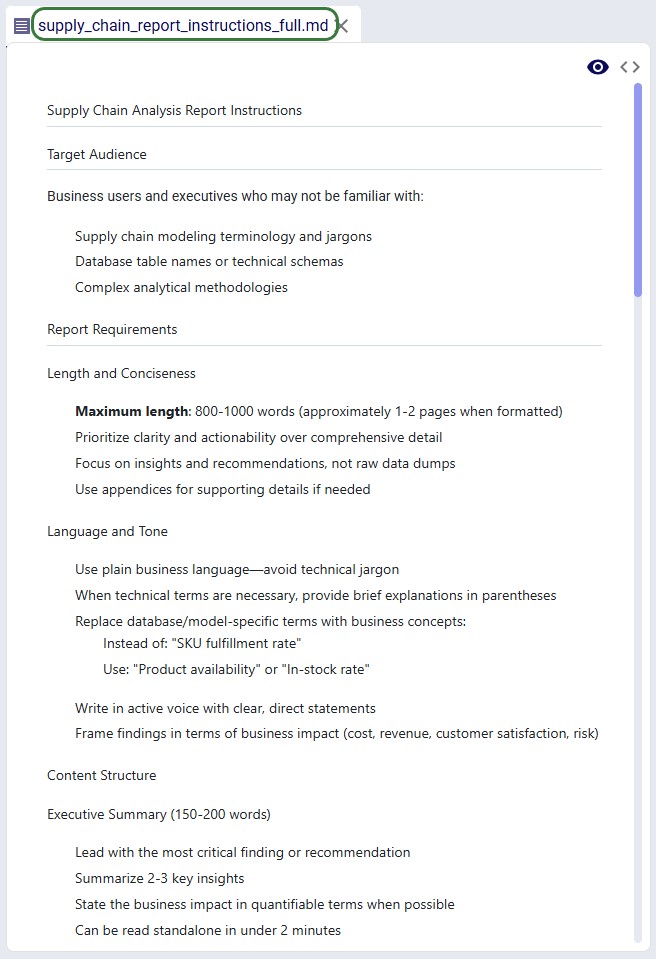

Knowledge: Info on target audience for the report, expected report length and tone:

Should you wish to read the entire report instructions file and/or use it as a starting point for your own usage with this Agent, you can download it here. After downloading, please rename the .txt extension to .md. You can then upload it to your Optilogic account using the Explorer application and then view it in the Lightning Editor application.

Outputs: The report as a markdown file and a chart in the Charts folder:

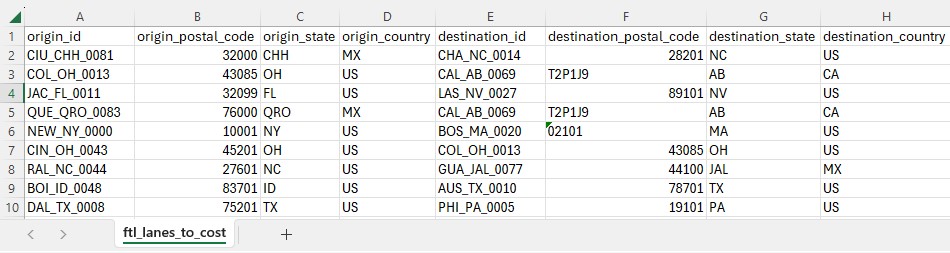

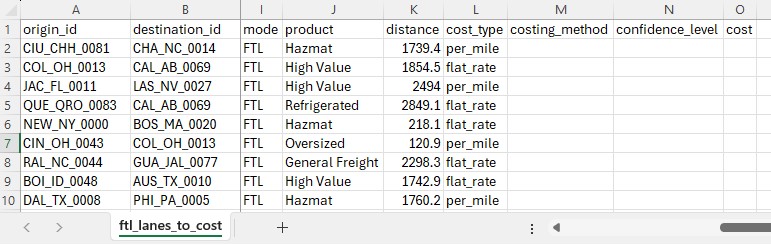

The Data Cleansing Agent is an AI-powered assistant that helps users profile, clean, and standardize their database data without writing code. Users describe what they want in plain English -- such as "find and fix postal code issues in the customers table" or "standardize date formats in the orders table to ISO" -- and the agent autonomously discovers issues, creates safe working copies of the data, applies the appropriate fixes, and verifies the results. It handles common supply chain data problems including mixed date formats, inconsistent country codes, Excel-corrupted postal codes, missing values, outliers, and messy text fields. As input, it expects a connected database with one or more tables. The output is a set of cleaned copies of those tables in the database, which users can immediately use for Cosmic Frog model building, reporting, or further analysis, while the original data is preserved untouched for comparison or rollback.

This documentation describes how this specific agent works and can be configured, including walking through multiple examples. Please see the “AI Agents: Architecture and Components” Help Center article if you are interested in understanding how the Optilogic AI Agents work at a detailed level.

Cleaning and standardizing data for supply chain modeling typically requires significant manual effort -- writing SQL queries, inspecting column values, fixing formatting issues one at a time, and verifying results. The Data Cleansing Agent streamlines this process by turning a single natural language prompt into a full profiling, cleaning, and verification workflow.

Key Capabilities:

Skills:

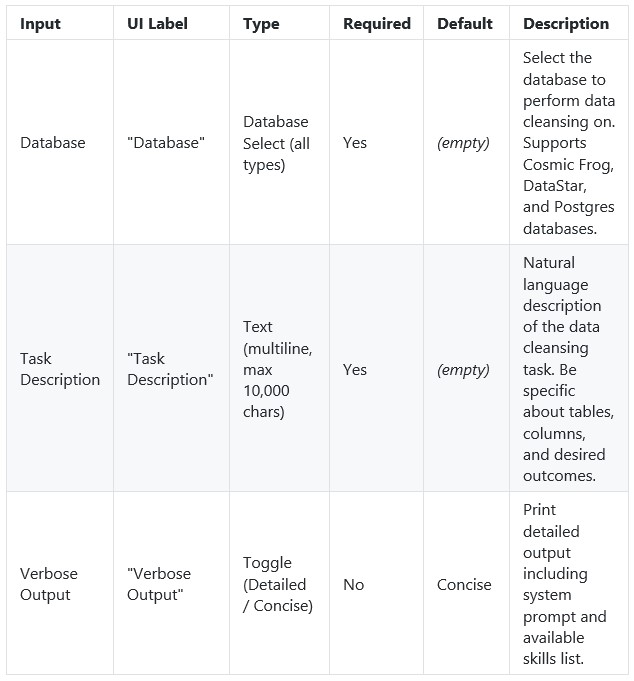

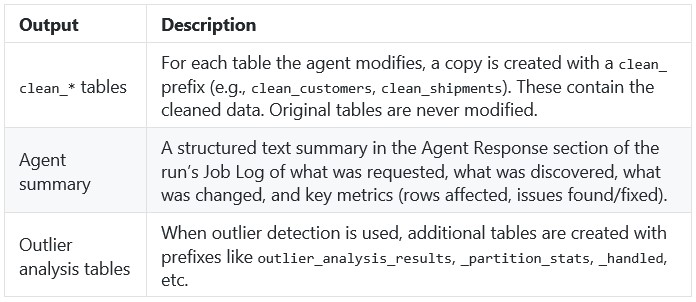

The agent can be accessed through the Run Utility task in DataStar (see also the screenshots below). The key inputs are:

The Task Description field includes placeholder examples to help you get started:

Optionally, users can:

Suggested workflow:

After the run, the agent produces a structured summary of everything it did, including metrics on rows affected, issues found, and issues fixed; see the next section where this Job Log is described in more detail. The cleaned data is persisted as clean_* tables in the database (e.g., clean_customers, clean_shipments).
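Because the original tables are kept next to their clean_* copies, the two can be compared directly to review what the agent changed. The Python sketch below illustrates one way to do this on made-up data in an in-memory SQLite database. The `postalcode` column, the sample values, and the use of `customername` as the matching key are assumptions for illustration; only the `customers` and `clean_customers` table names follow the naming described above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (customername TEXT, postalcode TEXT);
    CREATE TABLE clean_customers (customername TEXT, postalcode TEXT);
    -- Made-up data: the first postal code lost its leading zero.
    INSERT INTO customers VALUES ('CZ_001', '2134'), ('CZ_002', '48201');
    INSERT INTO clean_customers VALUES ('CZ_001', '02134'), ('CZ_002', '48201');
    """
)

# List the rows whose value differs between the original and the cleaned copy.
# IS NOT is SQLite's NULL-safe inequality; in PostgreSQL the equivalent
# operator is IS DISTINCT FROM.
changed = conn.execute(
    """
    SELECT o.customername, o.postalcode AS original_value, c.postalcode AS cleaned_value
    FROM customers AS o
    JOIN clean_customers AS c ON c.customername = o.customername
    WHERE o.postalcode IS NOT c.postalcode
    ORDER BY o.customername
    """
).fetchall()
print(changed)
```

A comparison like this complements the metrics in the agent's summary, since it shows the individual values that were changed rather than only the counts.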

After a run completes, the Job Log in Run Manager provides a detailed trace of every step the agent took. Understanding the log structure helps users verify what happened and troubleshoot if needed. The log follows a consistent structure from start to finish.

Header

Every log begins with a banner showing the database name and the exact prompt that was submitted.

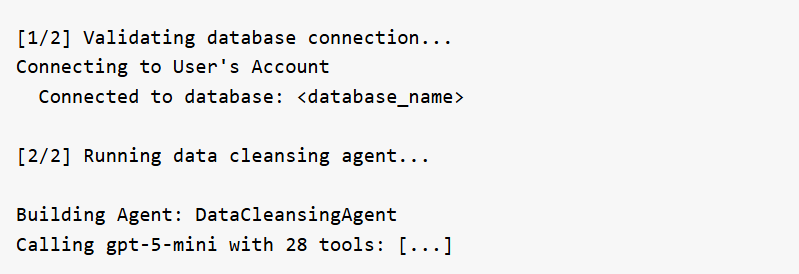

Connection & Setup

The agent validates the database connection and initializes itself with its full set of tools. If Verbose Output is set to "Detailed", the log also prints the system prompt and tool list at this stage.

Planning Phase

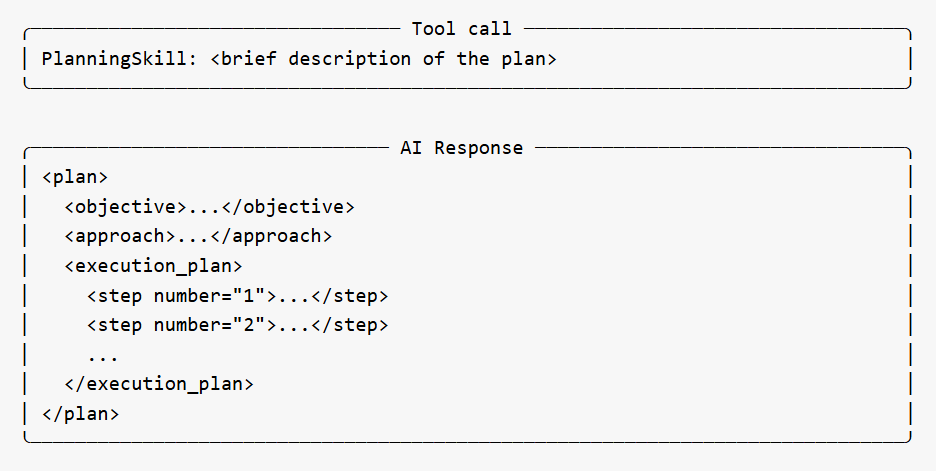

For non-trivial tasks, the agent creates a strategic execution plan before taking action. This appears as a PlanningSkill tool call, followed by an AI Response box containing a structured plan with numbered steps, an objective, approach, and skill mapping. The plan gives users visibility into the agent's intended approach before it begins working.

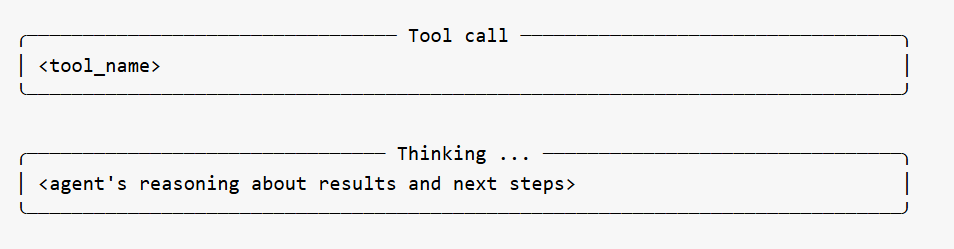

Tool Calls and Thinking