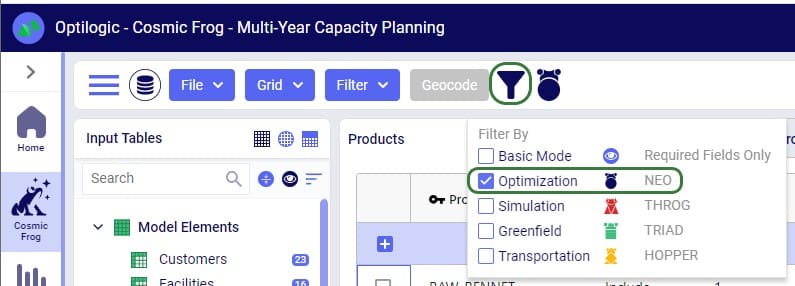

New features have been added to Cosmic Frog that enable users to solve network and transportation optimization in a single solve. Including multi-stop route optimization in Network Optimization streamlines the analysis and makes the Network Optimization results more accurate. The single solve calculates multi-stop route costs by shipment/customer combination and offers these routes as another possible transportation mode alongside OTR, Rail, Air, etc., so that better transportation costs feed into the network decisions.

In addition to this documentation, these 2 videos cover the new Network Transportation Optimization (NTO) features too:

Network Transportation Optimization is particularly useful for two classic network design problems (both will be described in more detail in the next section):

This feature set includes 2 ways of running the Transportation Optimization (Hopper) and Network Optimization (Neo) engines together:

The following will be covered in this documentation for both the Hopper within Neo and Hopper after Neo NTO algorithms: example use cases, model inputs, how to use the new features, the basics of the model used to show the NTO features, and a walkthrough of 2 example models, including their setup (input tables, scenarios) and an analysis of the outputs using tables and maps.

With this feature, users can consider routes as inputs in a Neo model, meaning that the model will optimize product flow sources based on all costs, including the cost of routes. Costs and asset capacities are taken into account for the routes.

Two example use cases which can be addressed using Hopper within Neo are customer consolidation and hub optimization, which will be illustrated with the images that follow.

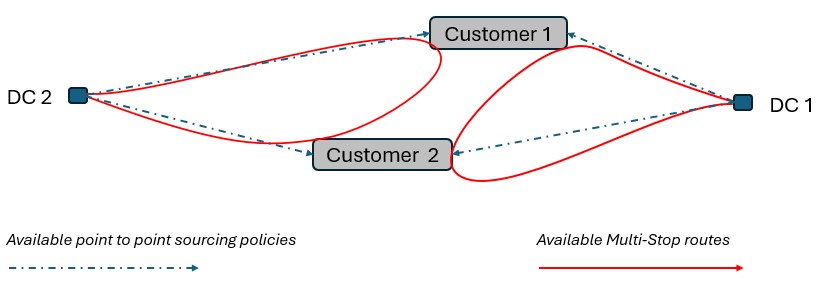

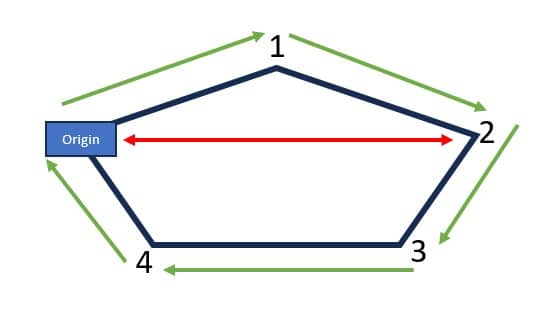

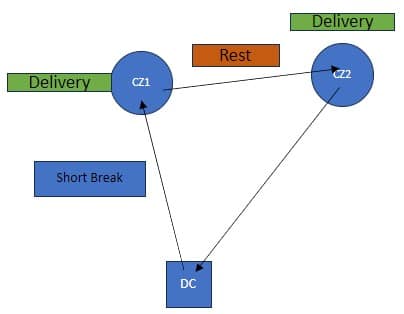

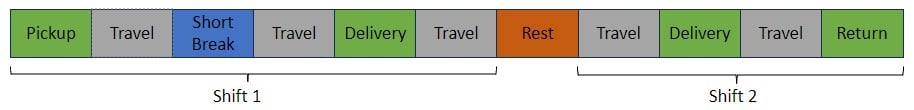

Consider a network where 2 distribution centers (DCs) are available to serve the customers. Two of these customers are in between the DCs and can either be serviced by the DCs directly (the blue dashed point to point lines) or product can be delivered to both of them as part of a multi-stop route from either DC (the red solid lines):

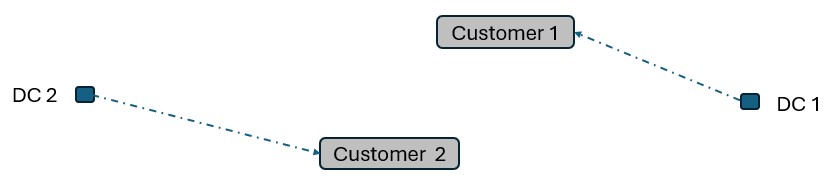

Running Neo without Hopper, not taking any route inputs into account, can lead to a solution where each DC serves 1 customer:

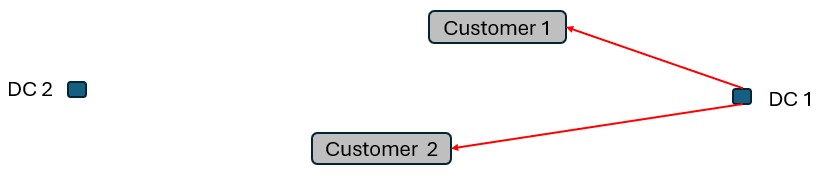

When route costs and capacities are taken into account during a Hopper within Neo solve, however, the overall cost of the solution may turn out to be lower if one of the DCs (DC 1 in this example) serves both customers using a multi-stop route:

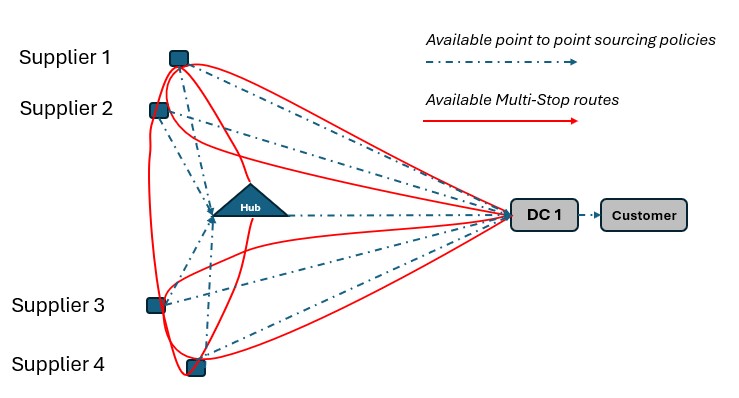
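The trade-off in this example can be sketched in a few lines of Python. All cost figures and location names below are made up for illustration and are not taken from any Cosmic Frog model; the sketch only compares the cheapest direct-only assignment with the cheapest multi-stop route that visits both customers.

```python
# Hypothetical point-to-point costs for the two customers between the DCs.
direct_cost = {
    ("DC1", "CustA"): 500.0,
    ("DC1", "CustB"): 700.0,
    ("DC2", "CustA"): 650.0,
    ("DC2", "CustB"): 520.0,
}
# Hypothetical cost of one multi-stop route from each DC visiting both customers.
route_cost = {"DC1": 900.0, "DC2": 1100.0}


def cheapest_direct(costs):
    """Each customer picks its cheapest DC when only direct lanes exist."""
    customers = sorted({cust for _, cust in costs})
    assignment = {}
    for cust in customers:
        dcs = [dc for dc, c in costs if c == cust]
        assignment[cust] = min(dcs, key=lambda dc: costs[(dc, cust)])
    total = sum(costs[(dc, cust)] for cust, dc in assignment.items())
    return assignment, total


direct_assignment, direct_total = cheapest_direct(direct_cost)
best_route_dc = min(route_cost, key=route_cost.get)

print("Direct only:", direct_assignment, direct_total)
print("Best multi-stop route:", best_route_dc, route_cost[best_route_dc])
print("Multi-stop is cheaper:", route_cost[best_route_dc] < direct_total)
```

With these invented numbers, the direct-only view splits the customers over the two DCs, while the route from DC1 is cheaper overall, which mirrors the situation described above.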

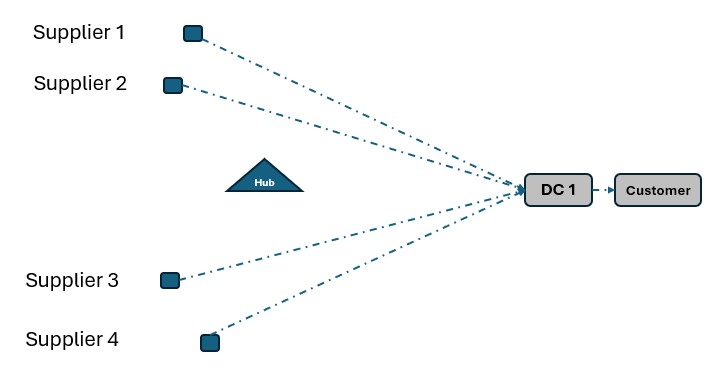

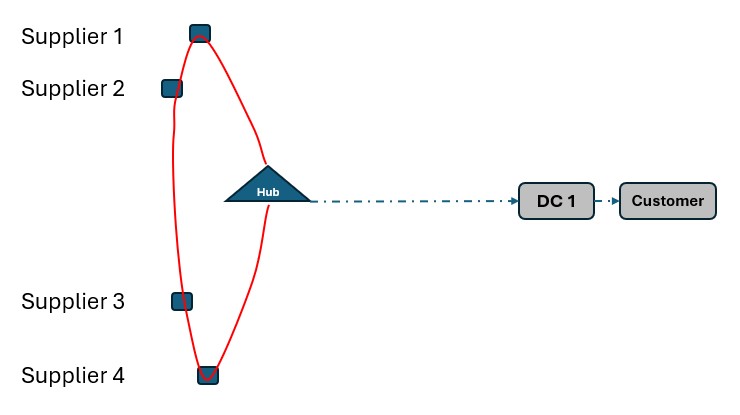

As a second example, consider a network where Suppliers can deliver product either to a Hub or to a Distribution Center, in both cases either directly or on a multi-stop route that picks up from multiple suppliers. Again, blue dashed lines indicate direct point to point deliveries and red lines indicate multi-stop route options:

Not taking route options into account when running Neo without Hopper can lead to a solution where each Supplier delivers to DC 1 directly:

This solution can change to one where the Suppliers deliver to the Hub on a multi-stop route when the route options are taken into account in a Hopper within Neo run, if doing so is beneficial overall (e.g. lower total cost):

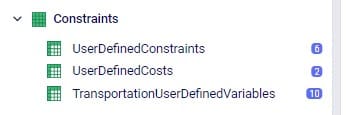

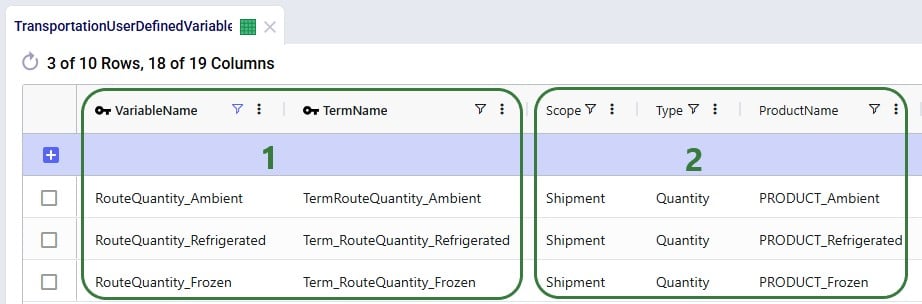

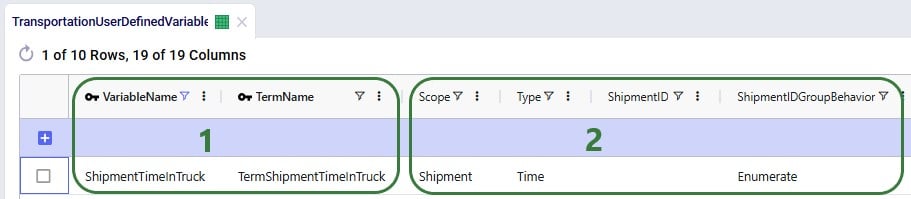

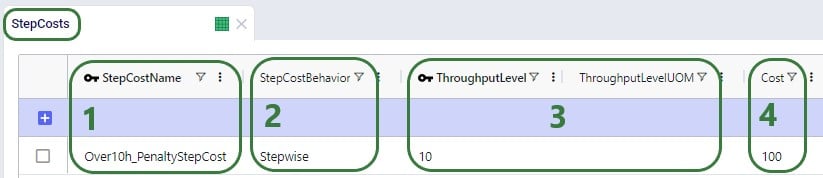

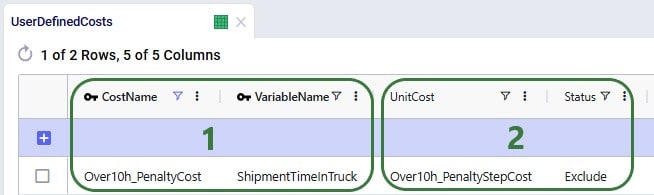

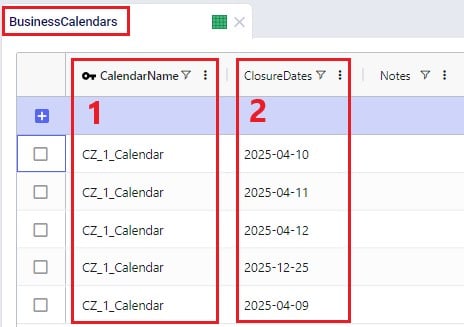

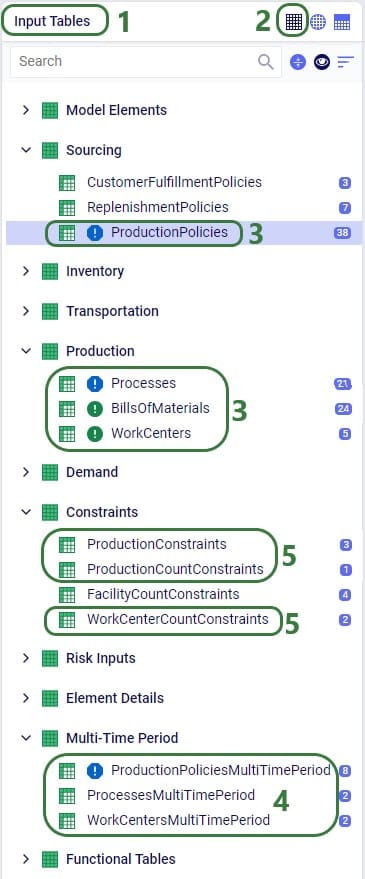

The Hopper within Neo algorithm needs inputs in order to consider routes as part of the Neo network optimization run. These include:

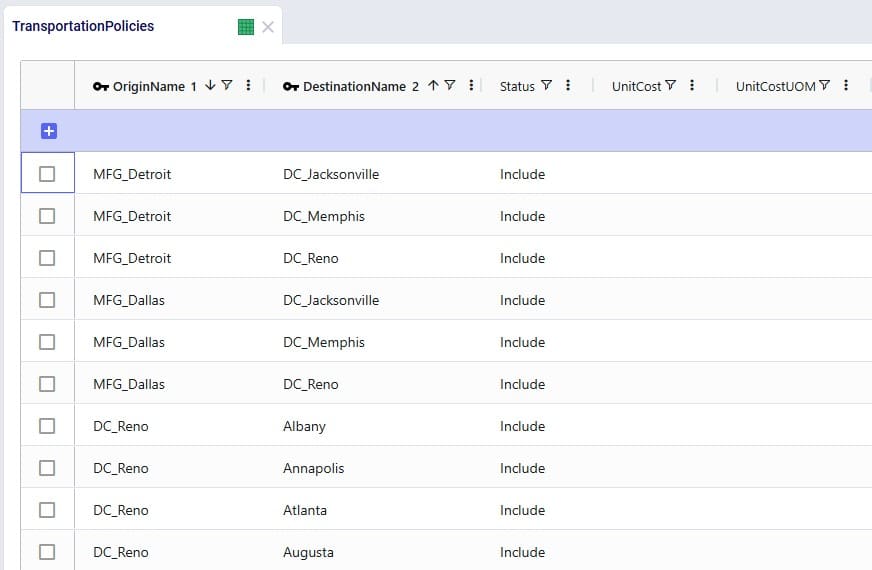

In all cases, additional records need to be added to the Transportation Policies table as well (explained in more detail below), and if the Transit Matrix table is populated, it will also be used for Hopper within Neo runs.

Please note that any other Hopper related tables (e.g. relationship constraints, business hours, transportation stop rates, etc.), whether populated or not, are not used during a Hopper within Neo solve.

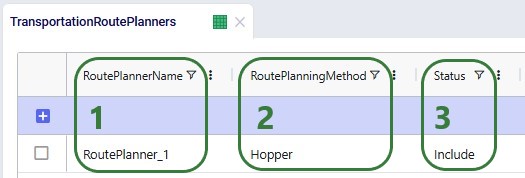

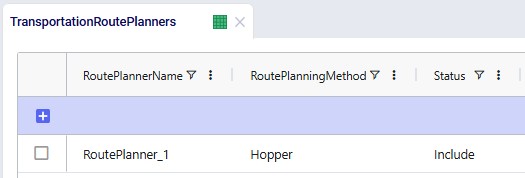

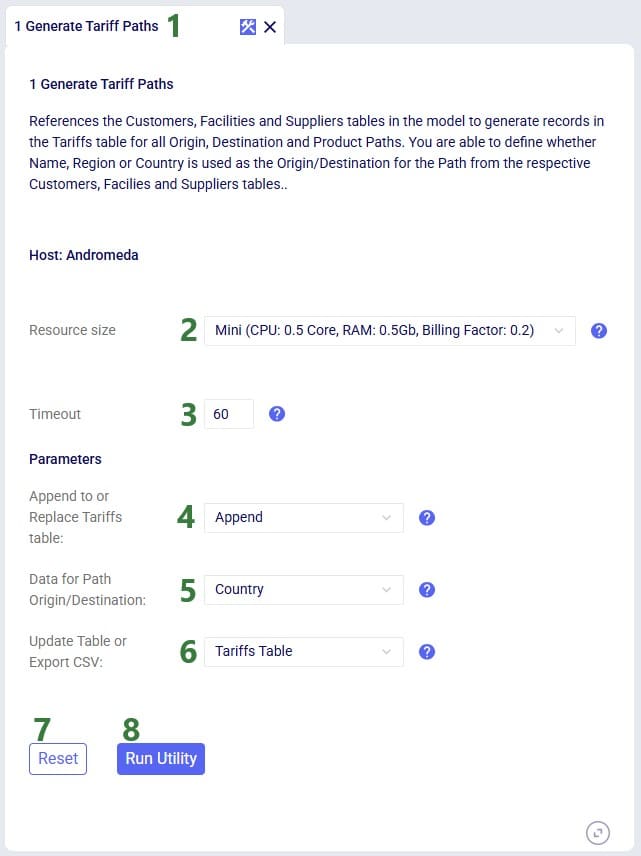

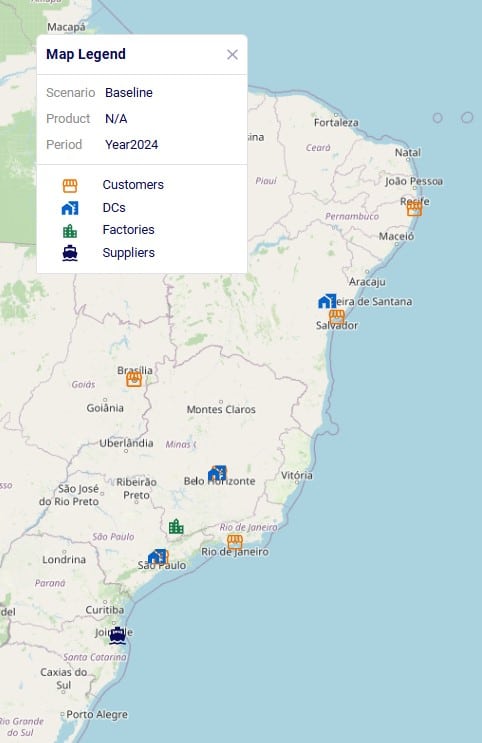

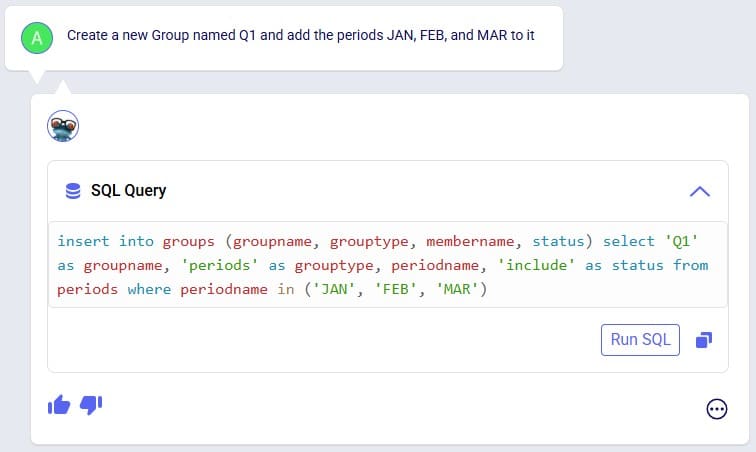

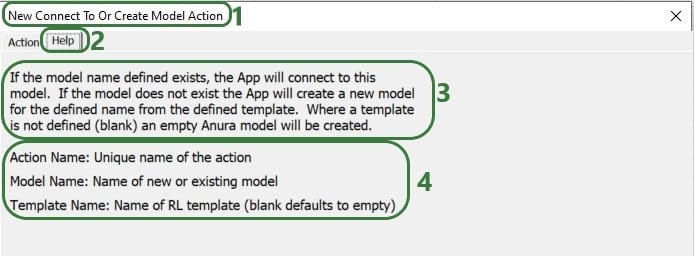

To use the Hopper algorithm for route generation, the Transportation Route Planners and Transportation Policies tables need to be used. Starting with the Transportation Route Planners table:

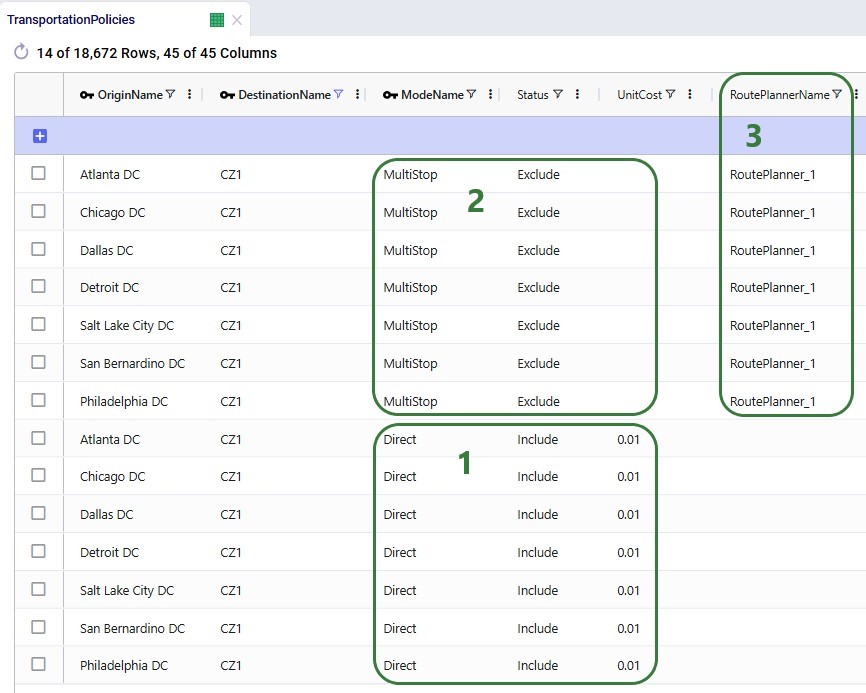

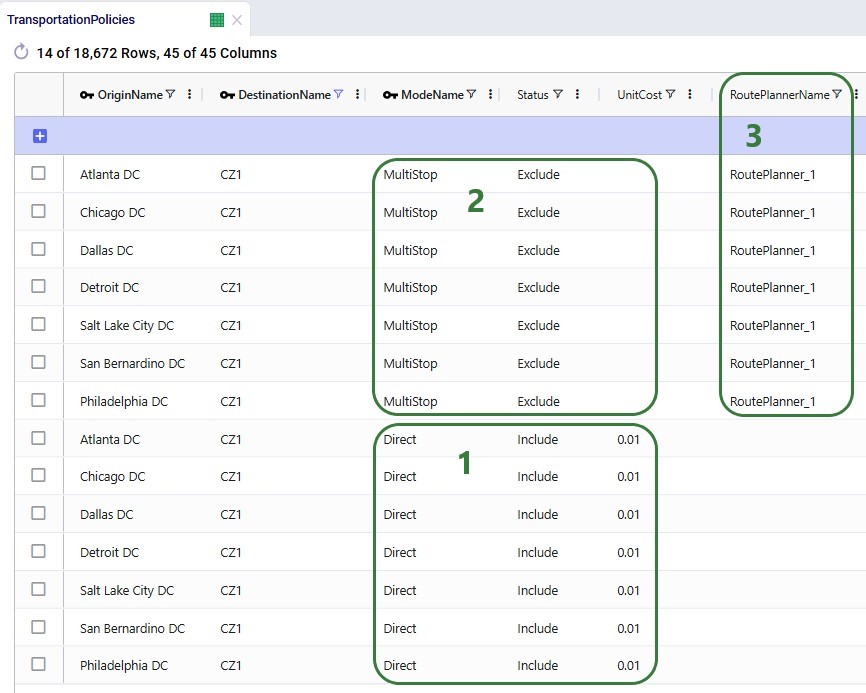

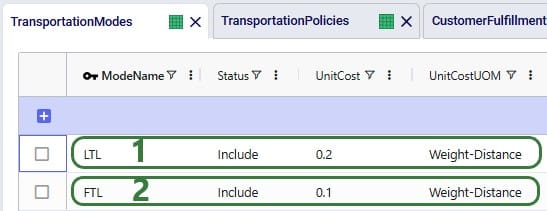

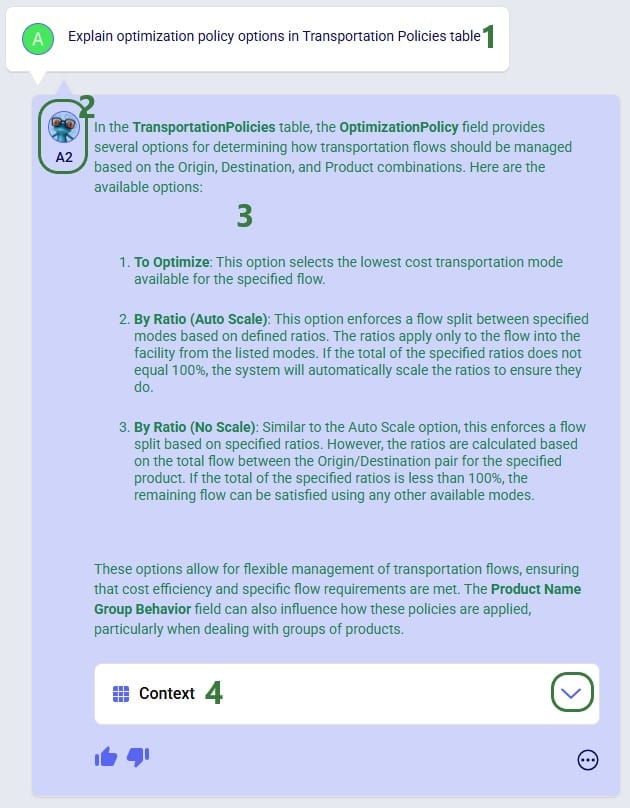

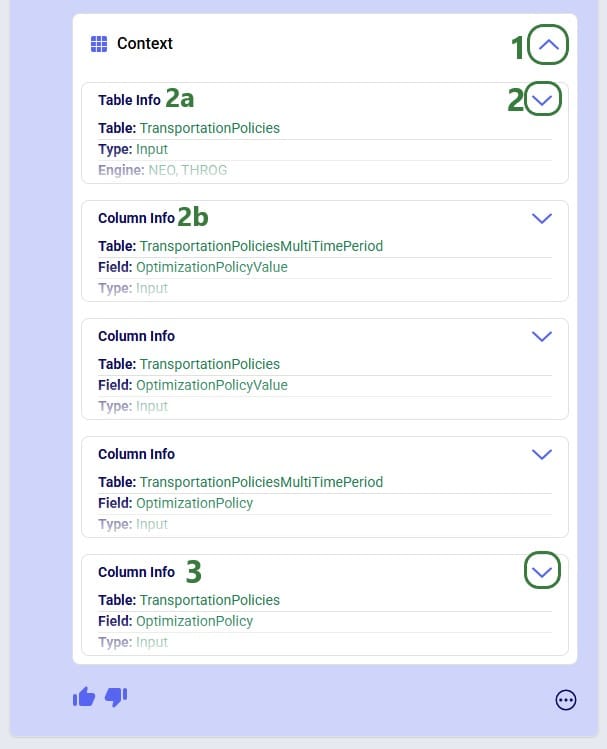

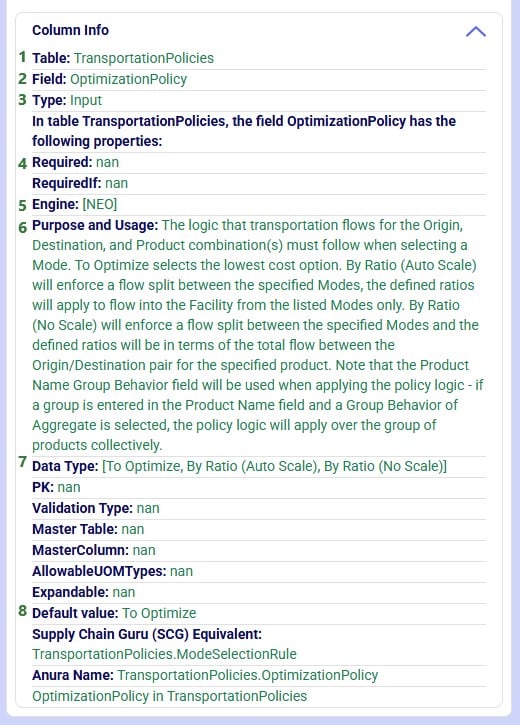

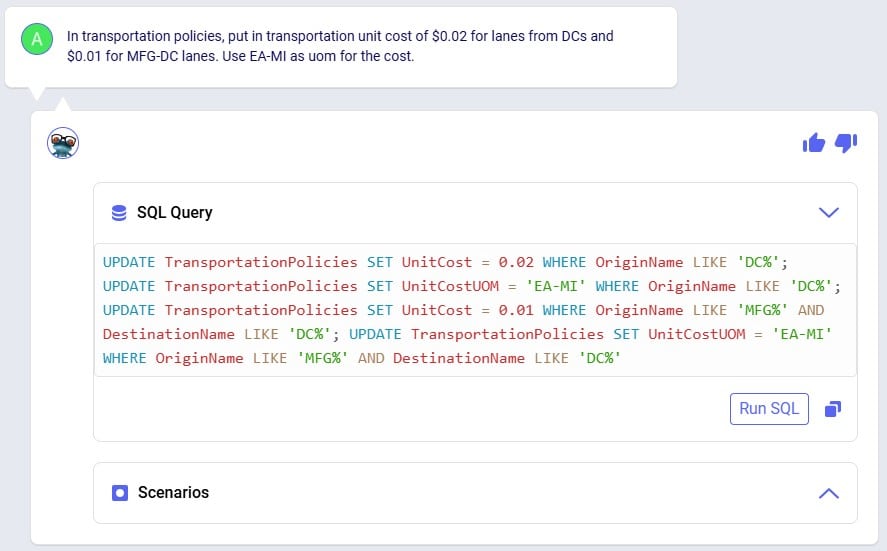

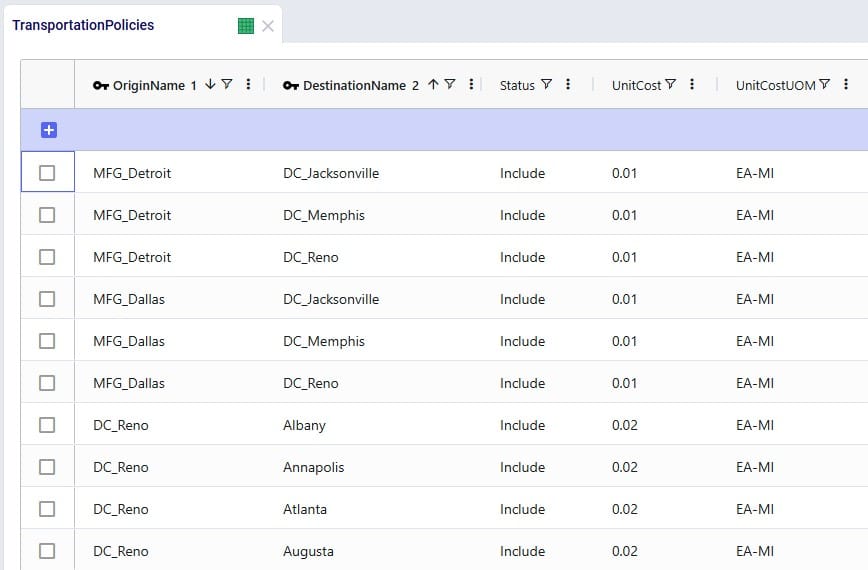

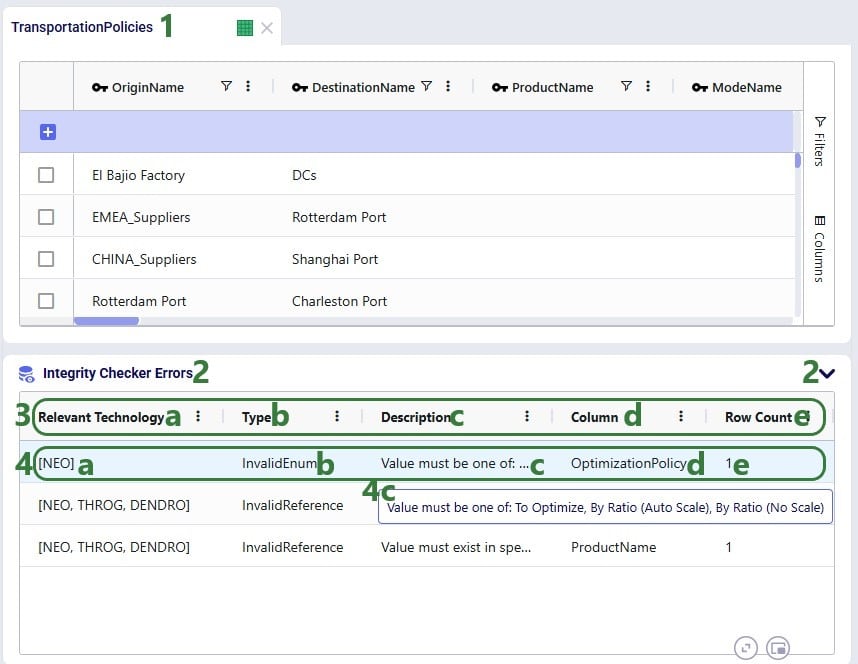

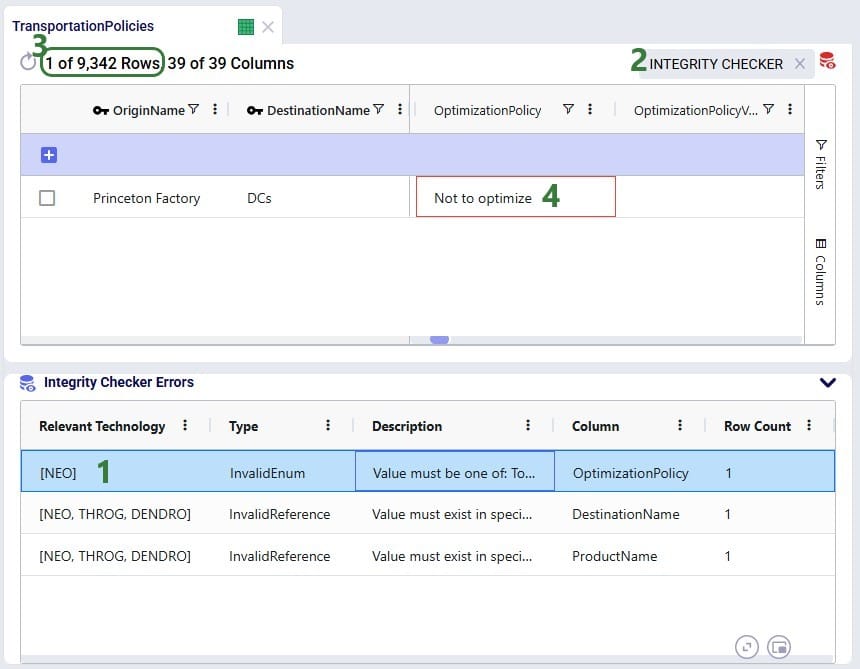

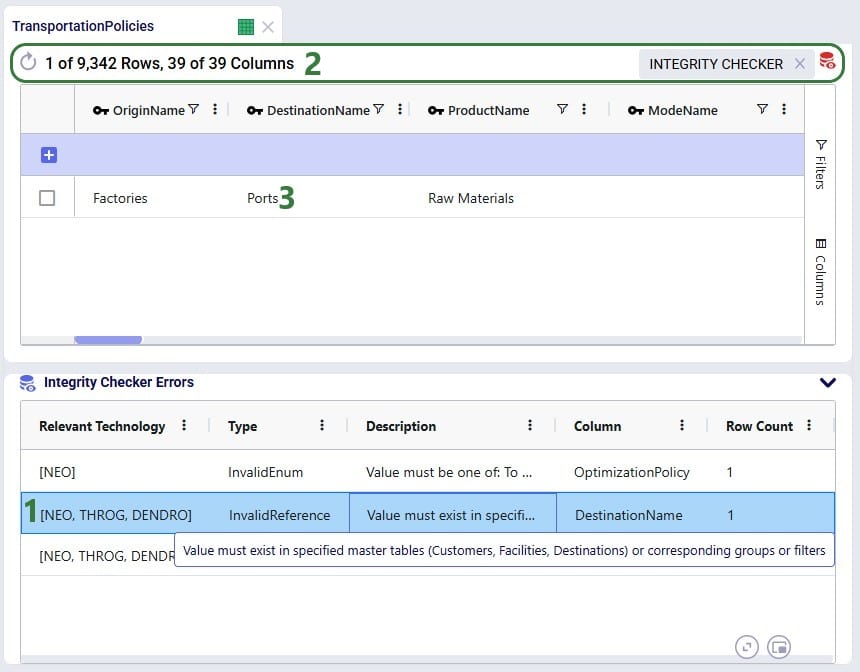

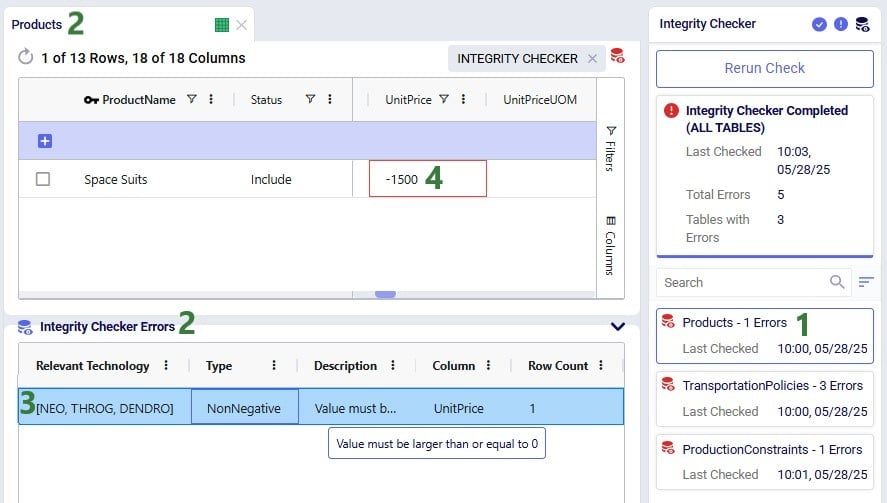

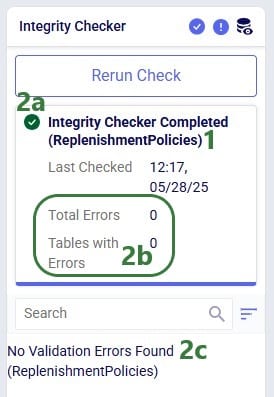

In the Transportation Policies table, records need to be added to indicate which lanes will be considered to be part of a multi-stop route:

Under the hood, potential routes will be calculated based on the inputs provided by the user. For example, for a case where the shipments table is not populated, and the user has specified their own assets:

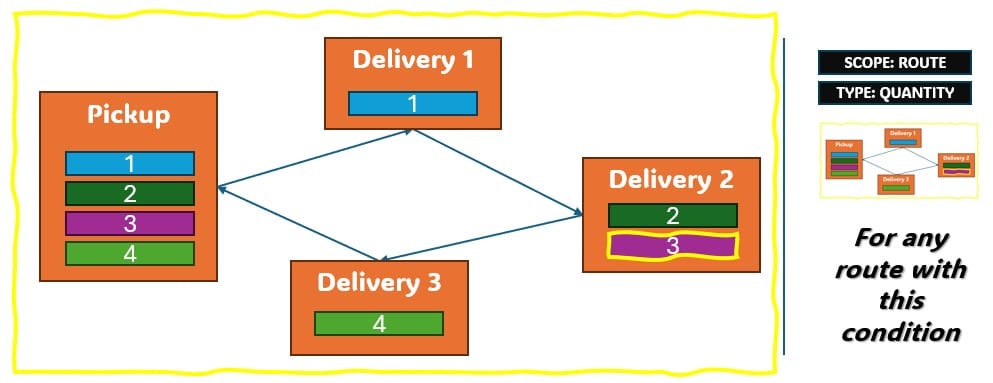

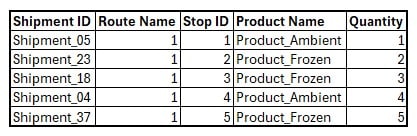

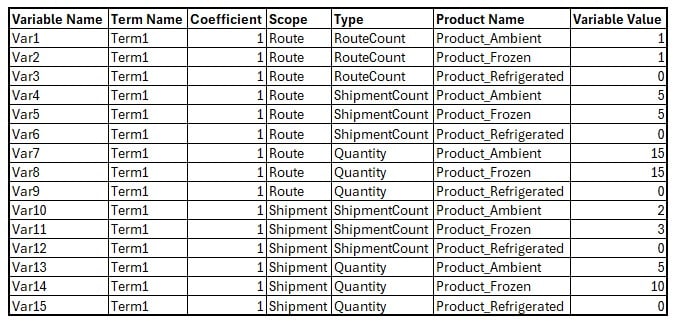

As a numerical example to illustrate the calculation of a candidate route, let us consider the following:

These costs are then used as inputs into the Network Optimization, together with costs for other transportation modes, and all are taken into account when optimizing the model.

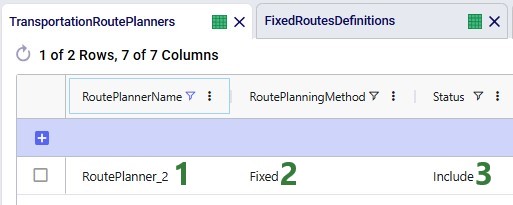

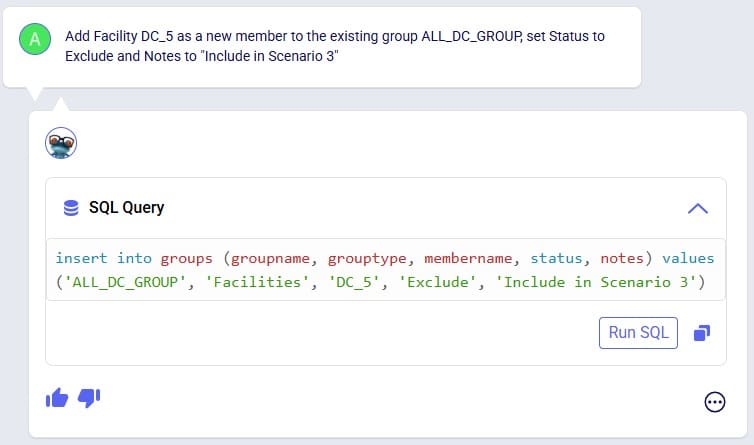

To use routes that are defined by the user in the Neo solve, the user also needs to use the Transportation Route Planners table, in combination with the Fixed Routes, Fixed Routes Definitions, and Transportation Policies tables. Starting with the Transportation Route Planners table:

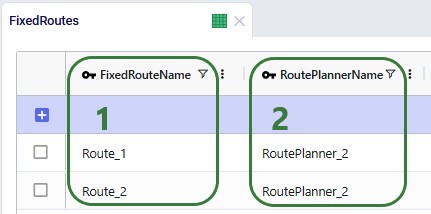

The Fixed Routes table connects the names of the routes the user defines with the route planner name:

There are a few additional columns on the Fixed Routes table which are not shown in the screenshot above. These are:

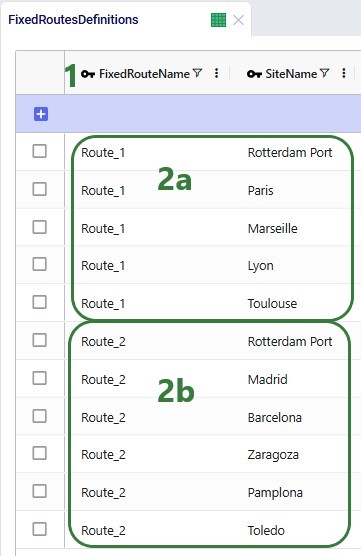

The Fixed Routes Definitions table needs to be used to indicate which stops are on a route together:

Please note that the Stop Number field on this table is currently not used by the Hopper within Neo functionality. The solve will determine the sequence of the stops.

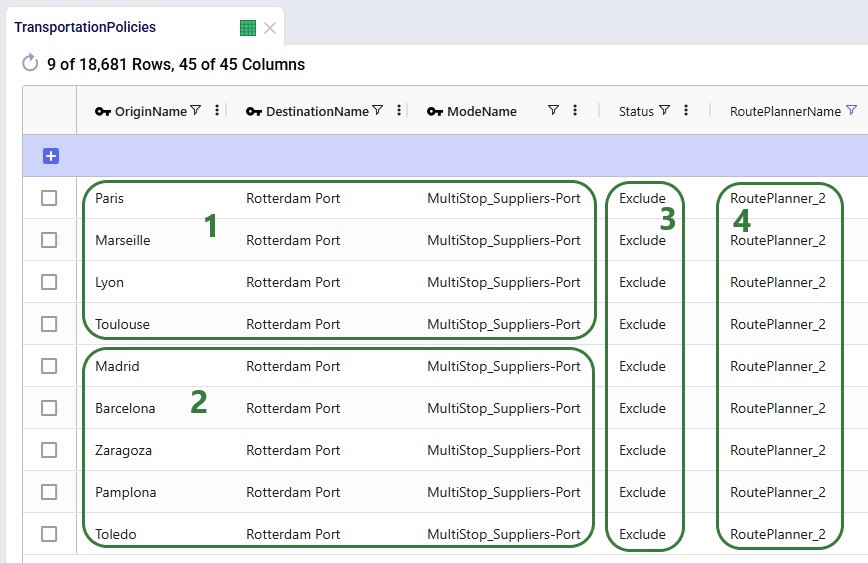

Finally, to understand which locations function as sources (pickups) and which as drop-offs (deliveries) on a route, and to indicate that multi-stop routes are an option for these source-destination combinations, corresponding records need to be added to the Transportation Policies table too:

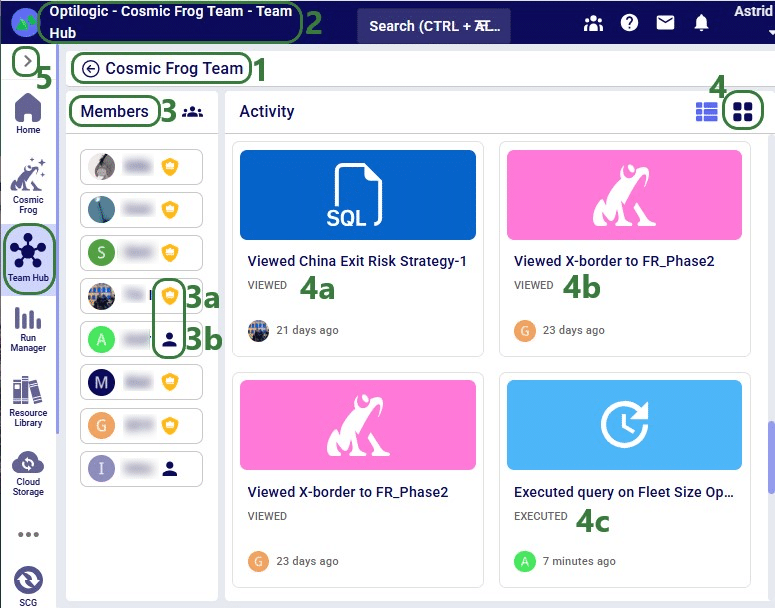

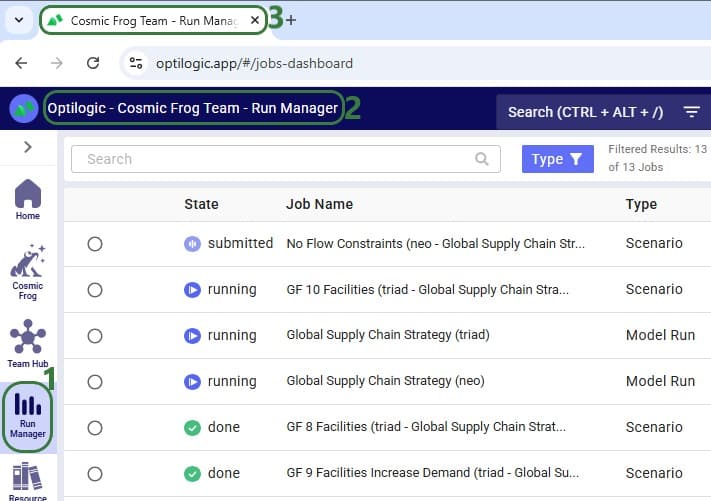

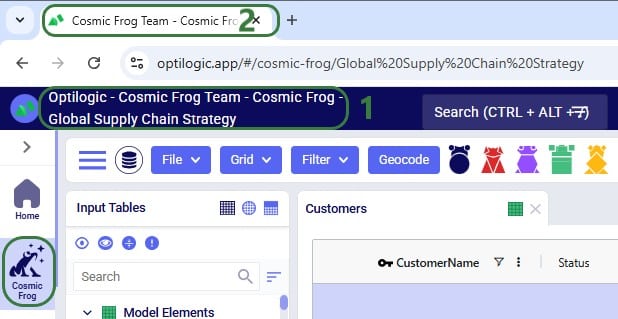

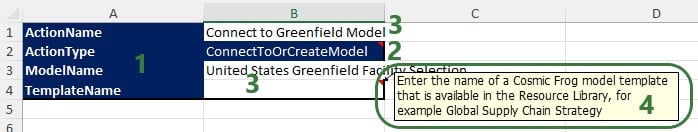

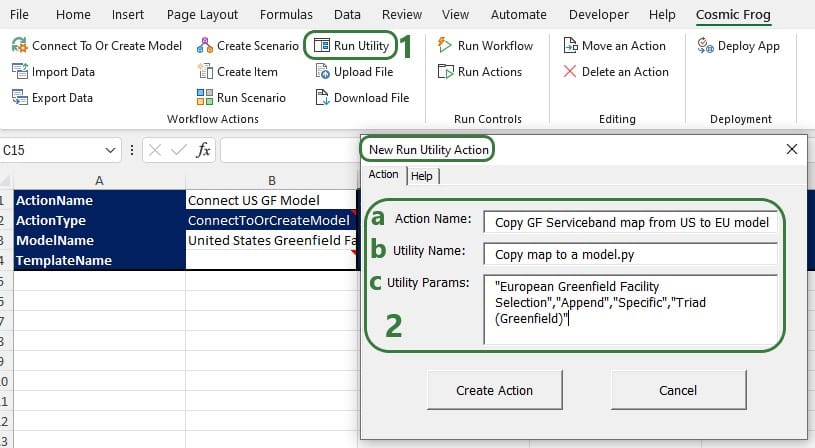

A copy of the Global Supply Chain Strategy model from the Resource Library was used as the starting point for both the Hopper within Neo and Hopper after Neo demo models. The original Global Supply Chain Strategy model can be found here on the Resource Library, and a video describing it can be found in this "Global Supply Chain Strategy Demo Model Review" Help Center article. The modified models showing the Hopper within and after Neo functionality can be found here on the Resource Library:

To learn more about the Resource Library and how to use it, please see the "How to use the Resource Library" Help Center article.

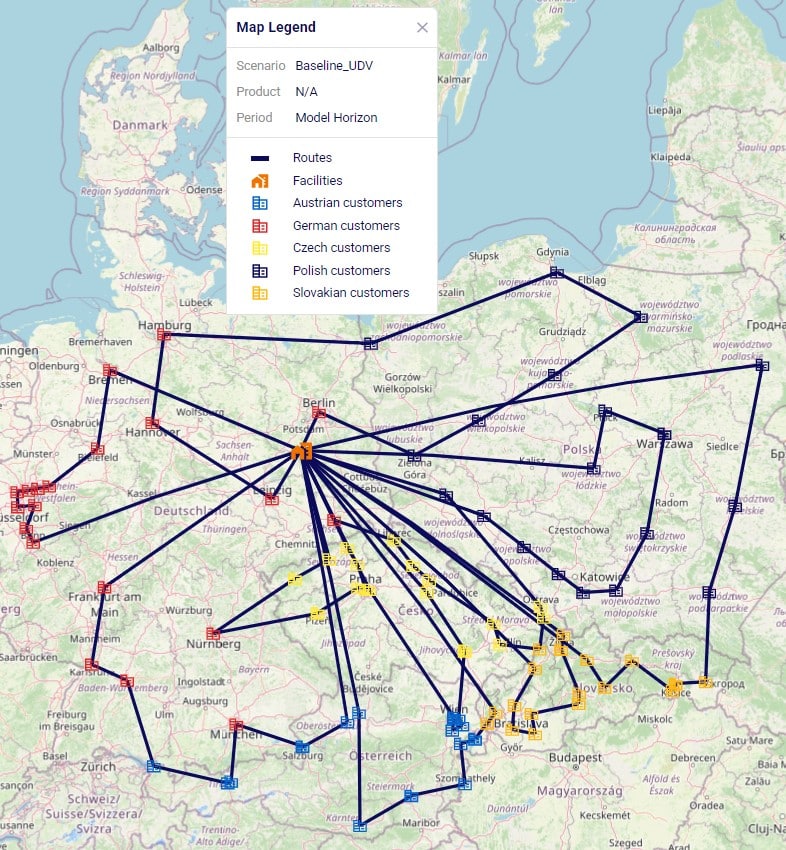

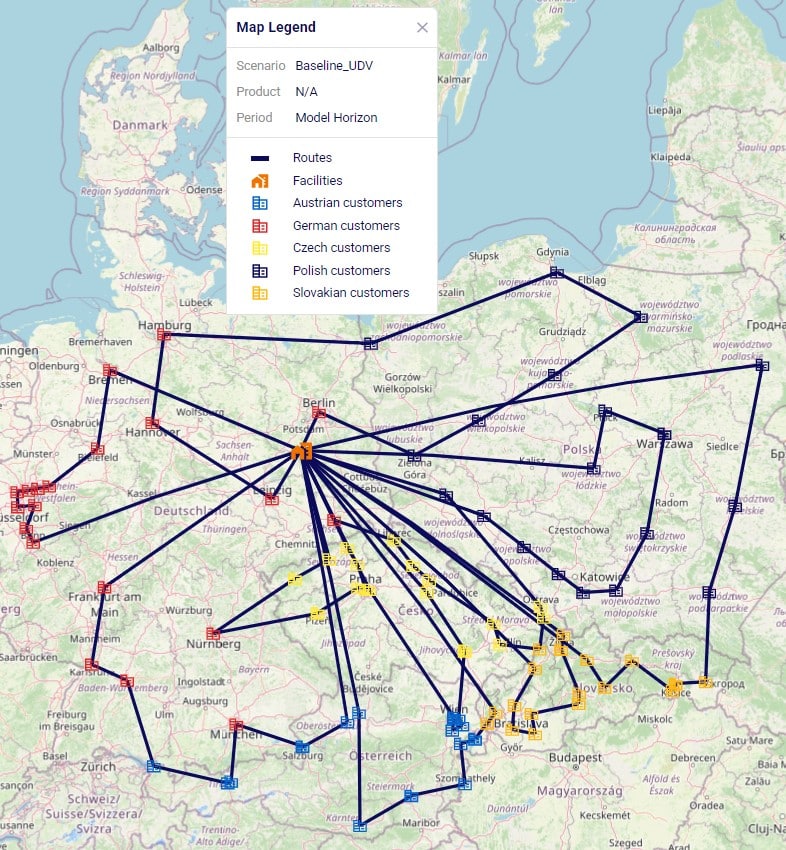

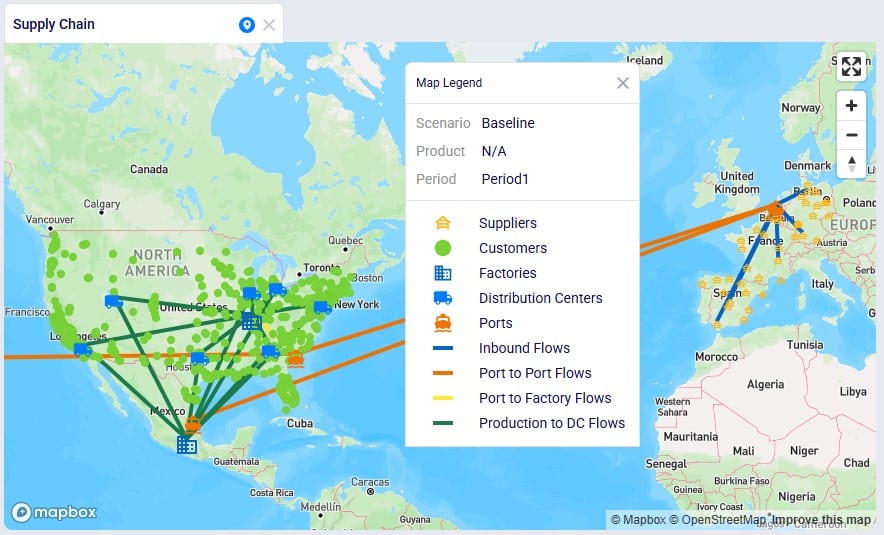

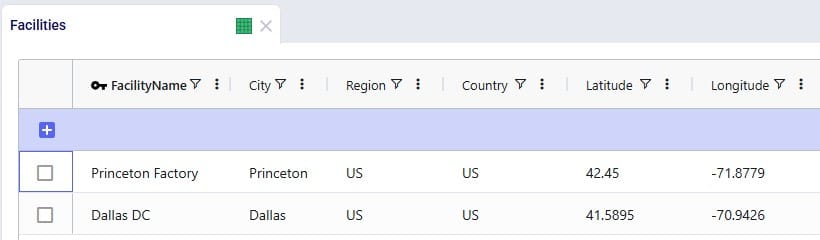

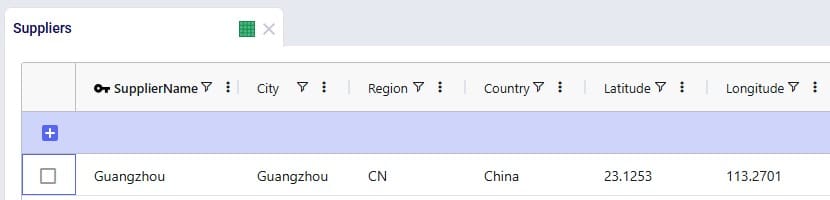

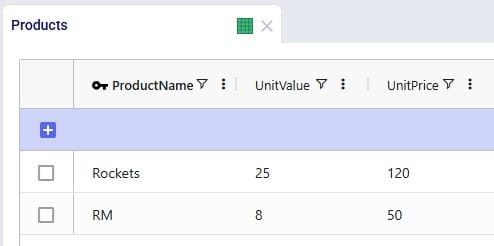

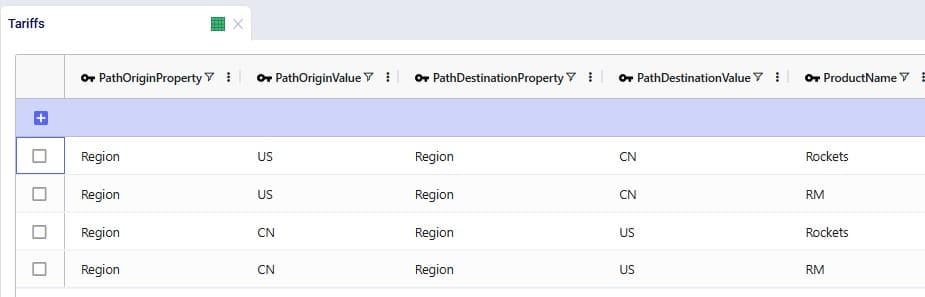

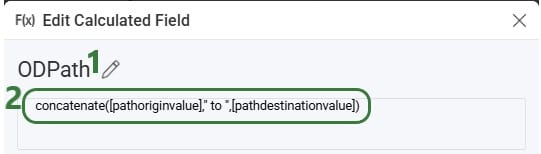

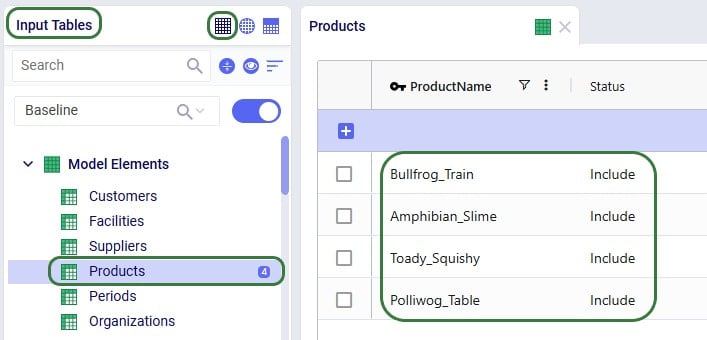

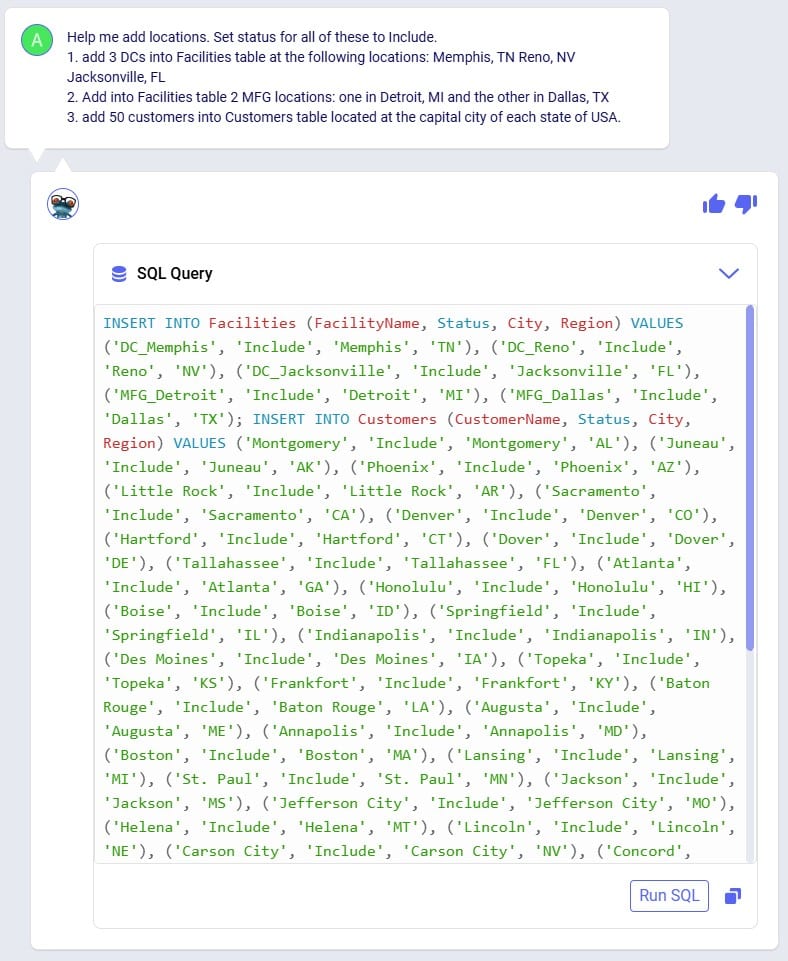

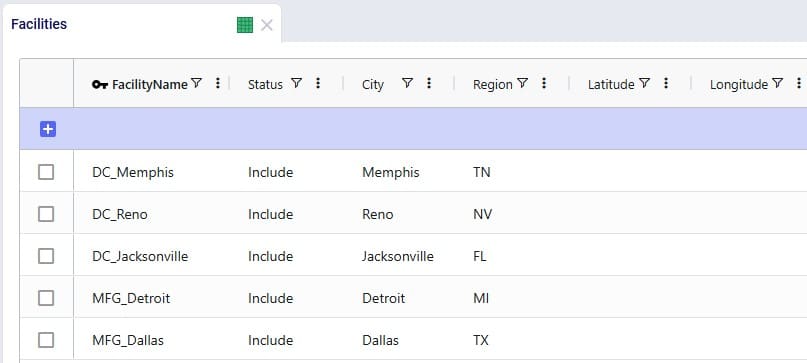

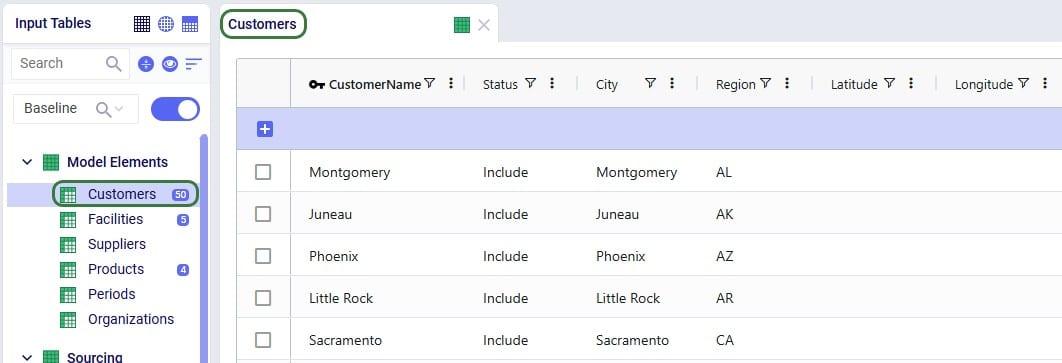

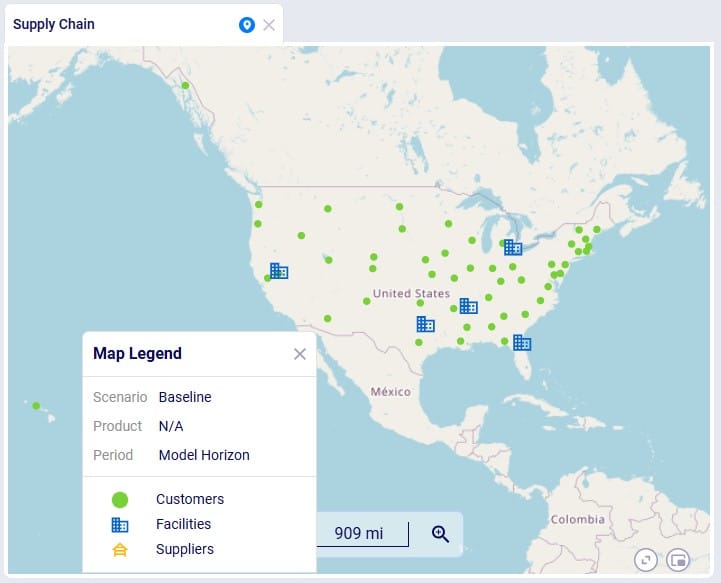

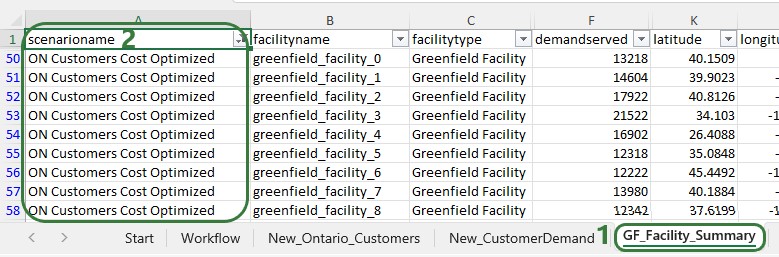

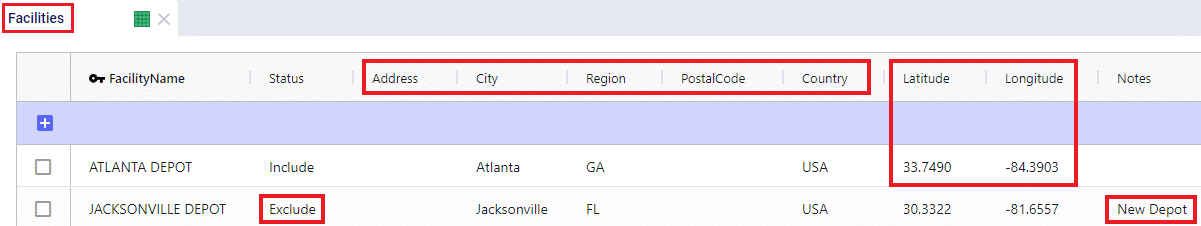

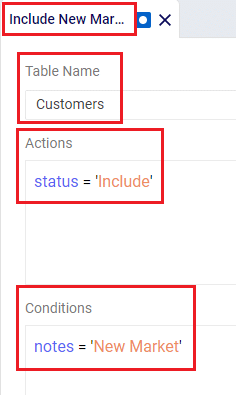

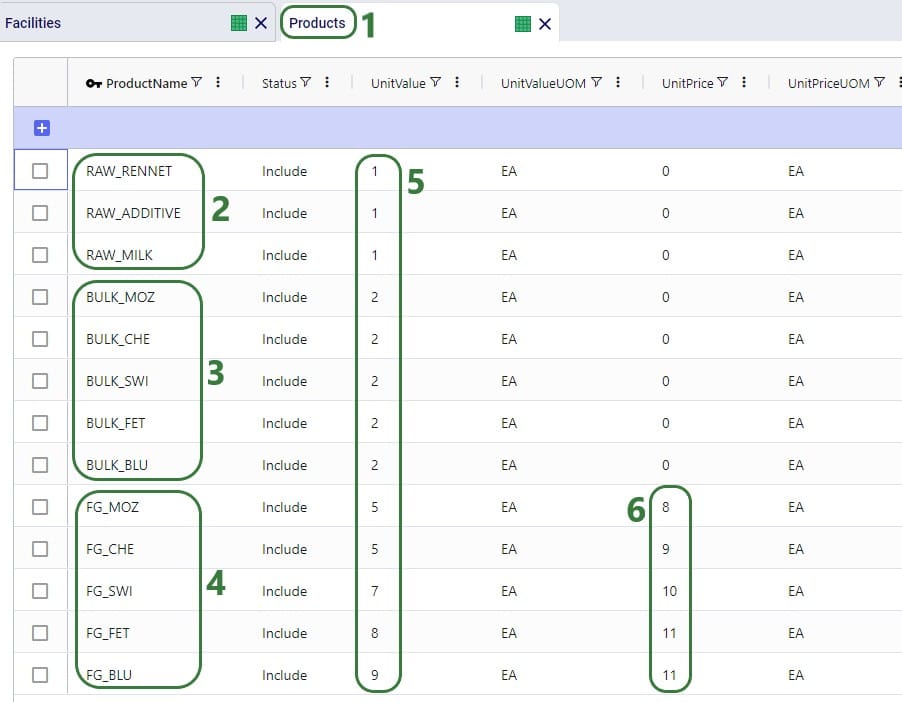

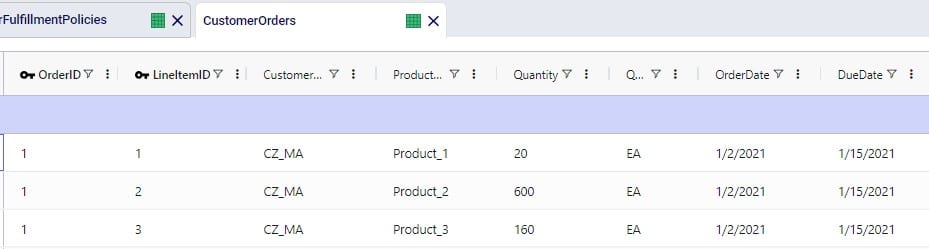

A short description of the main elements in this model is as follows:

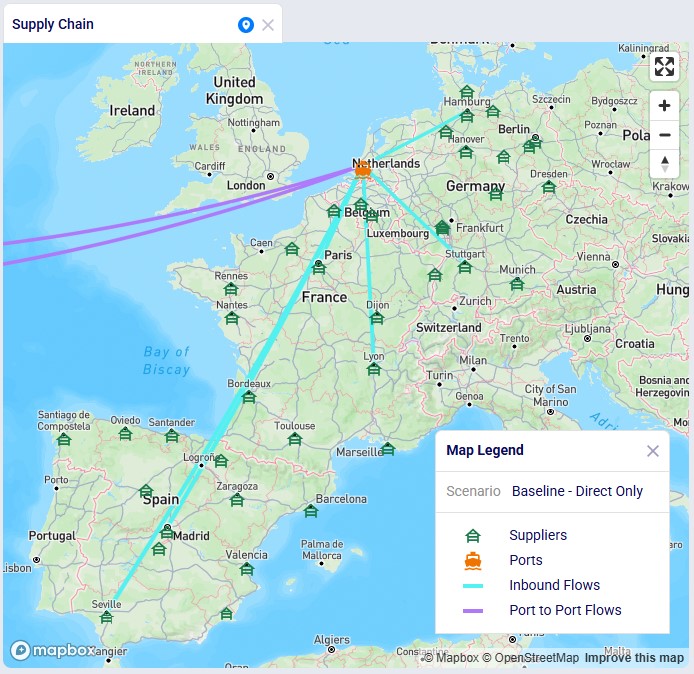

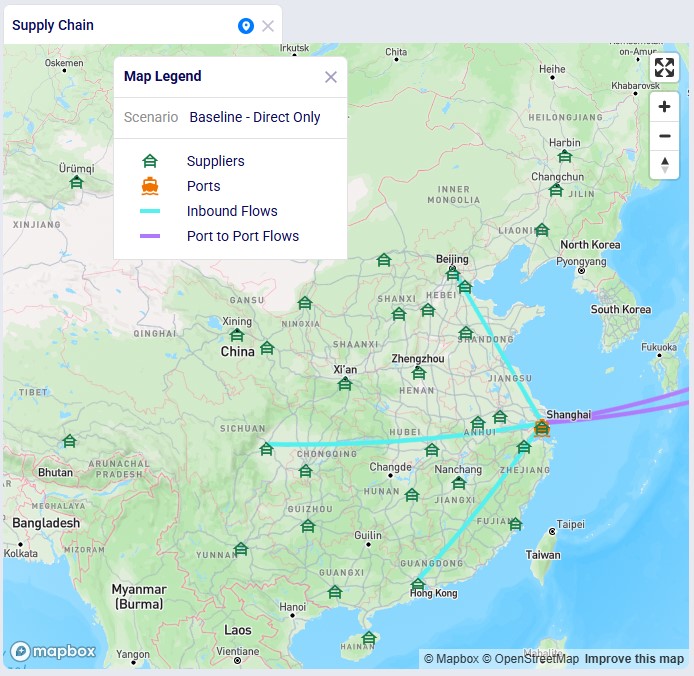

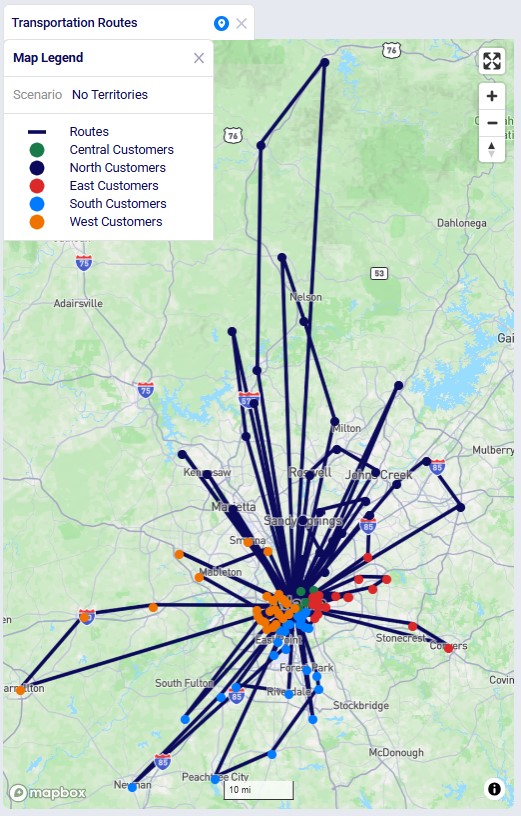

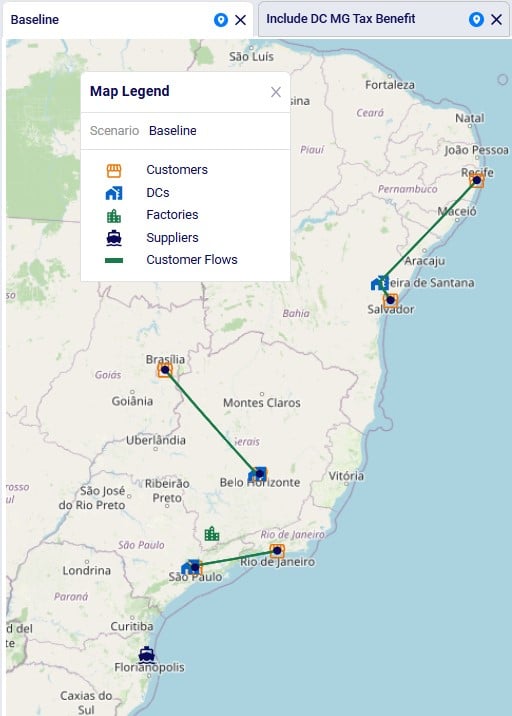

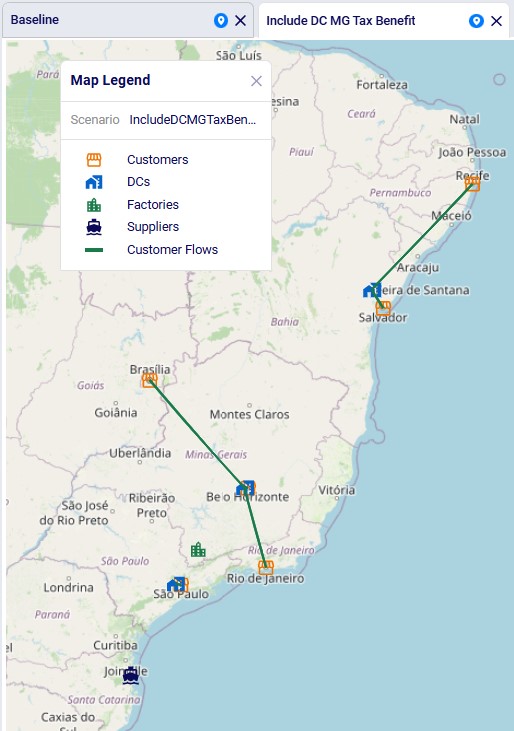

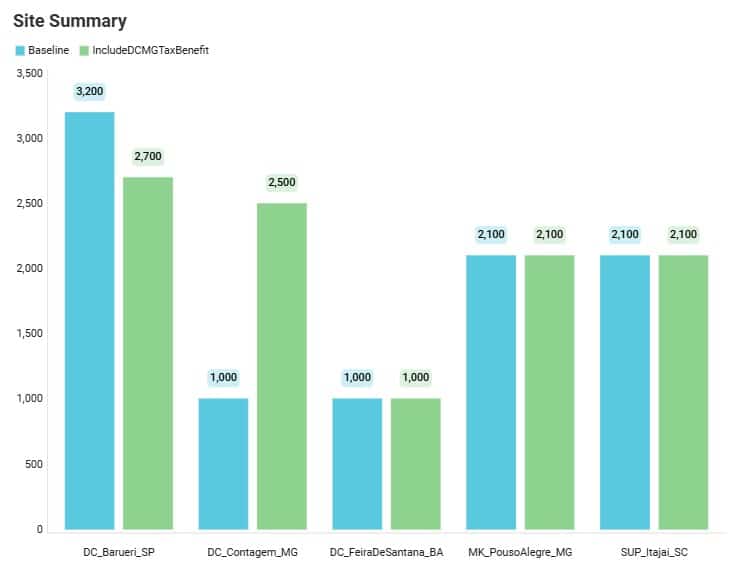

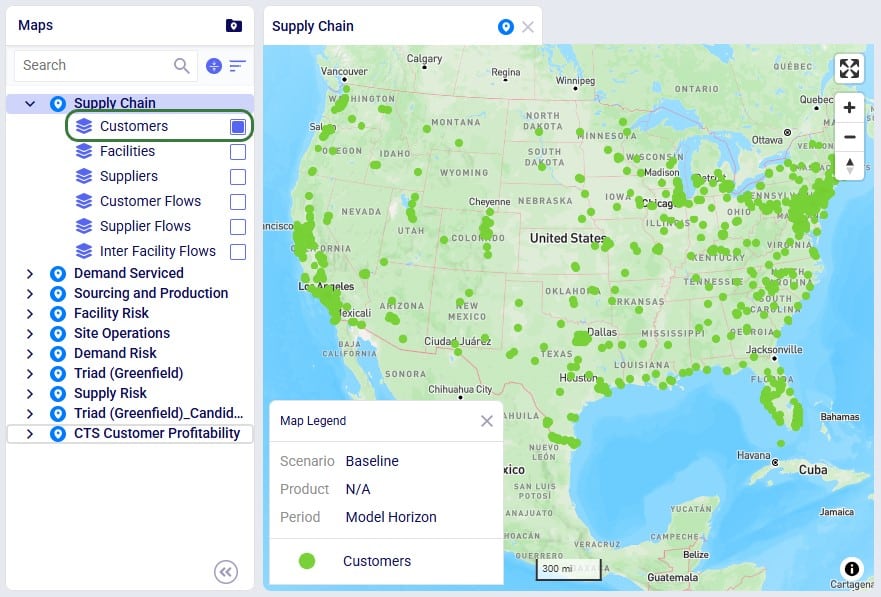

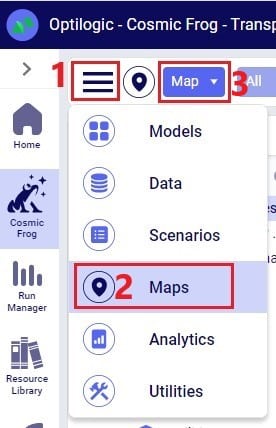

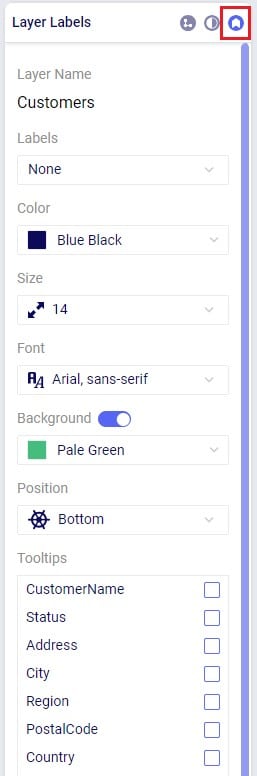

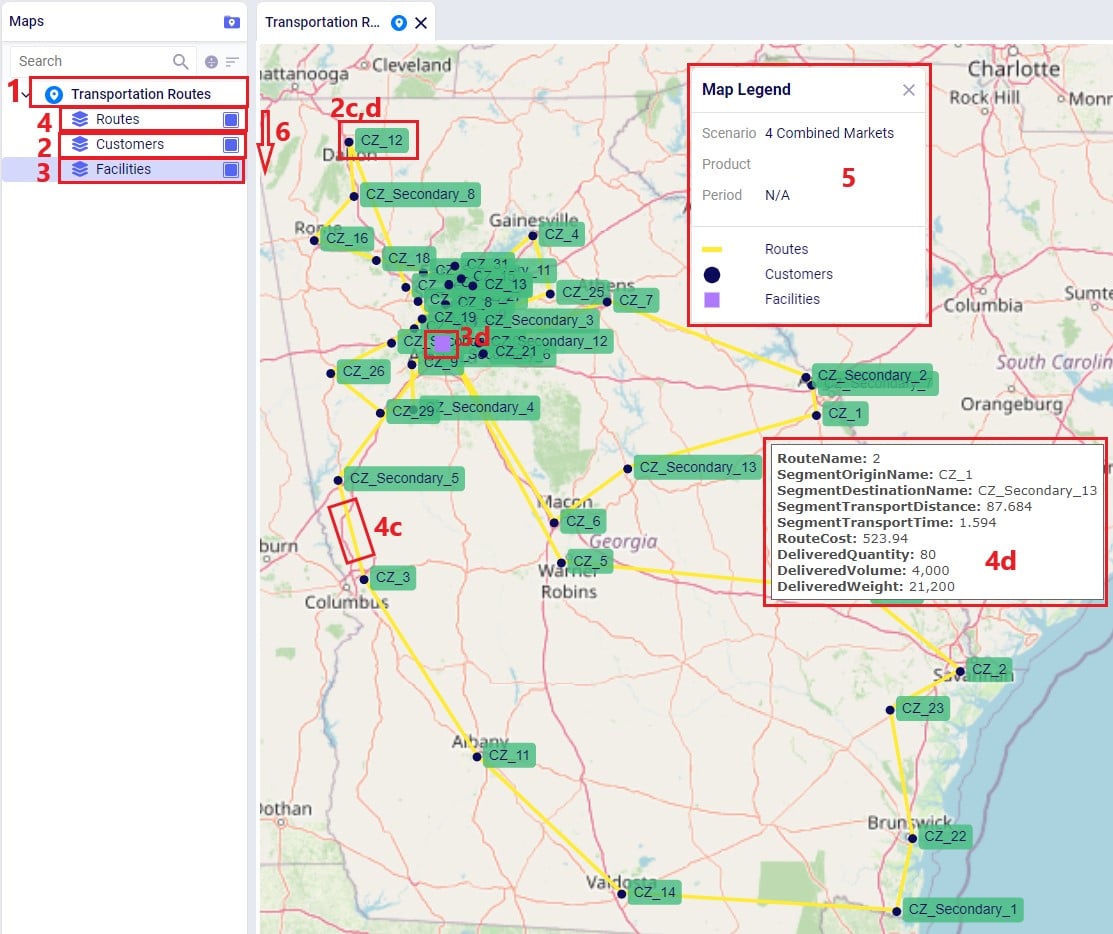

We illustrate the locations and flow of product through the following 3 maps:

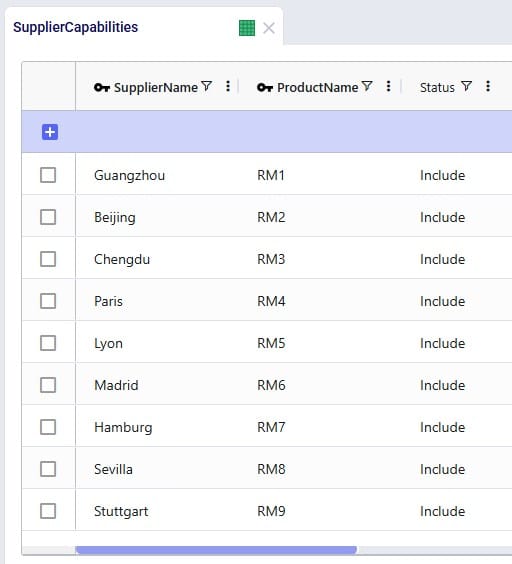

Even though around 35 suppliers are set up in the model in the EMEA region, only 6 of them are used based on the records in the Supplier Capabilities input table. We see the light blue lines from these suppliers delivering raw materials to the port in Rotterdam. From there, the 2 purple lines indicate the transport of the raw materials from Rotterdam to the US and Mexican ports.

Similarly, around 35 suppliers are set up in China in the model, but only 3 of them are used per the set up in the Supplier Capabilities input table. The light blue lines are the transport of raw materials from the suppliers to the Shanghai port and the purple lines from the Shanghai port to the ports in Mexico and the US.

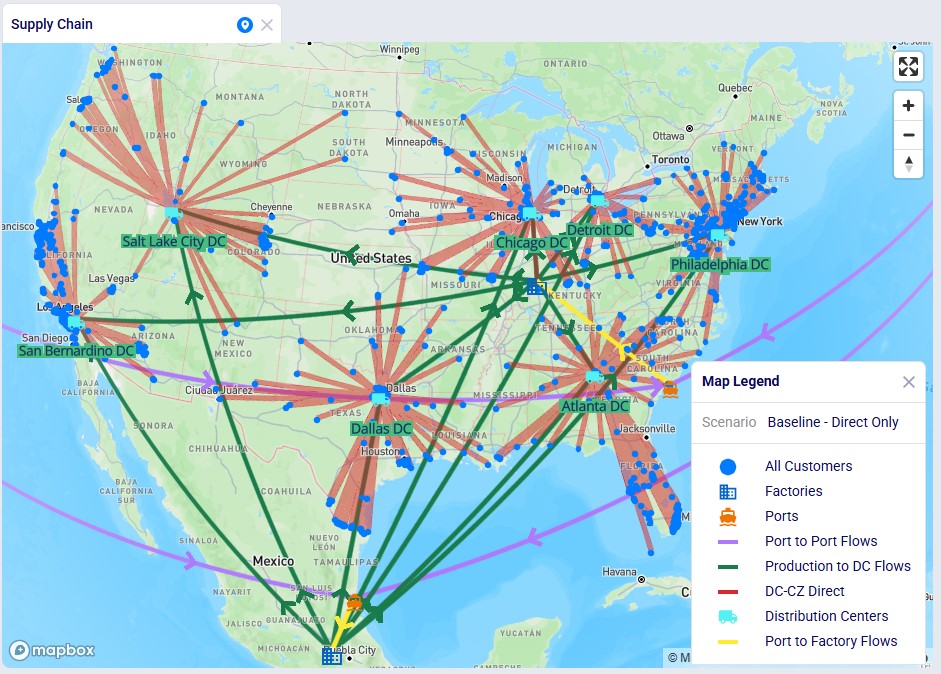

This map shows the flows into and within/between the US and Mexico locations:

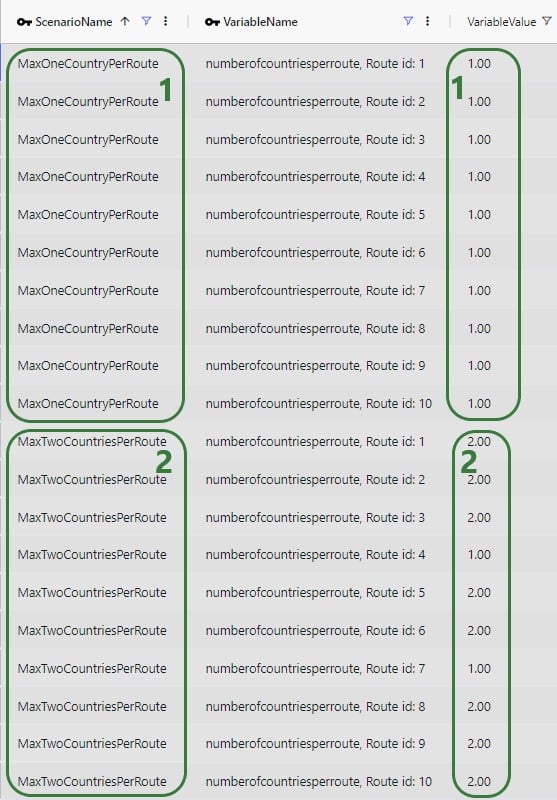

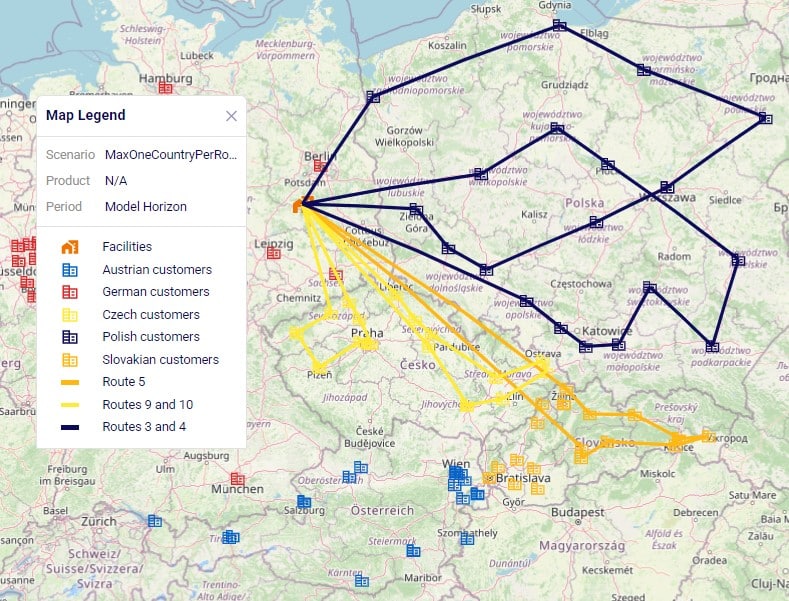

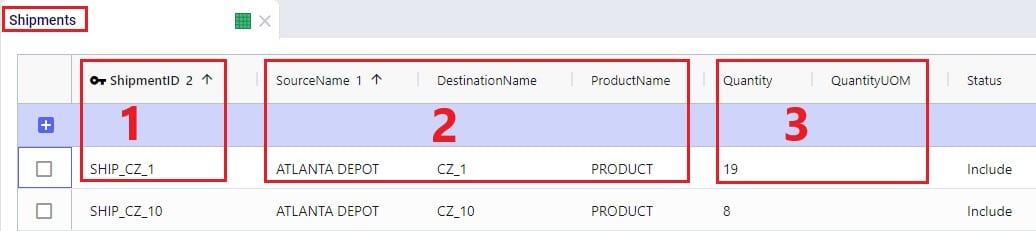

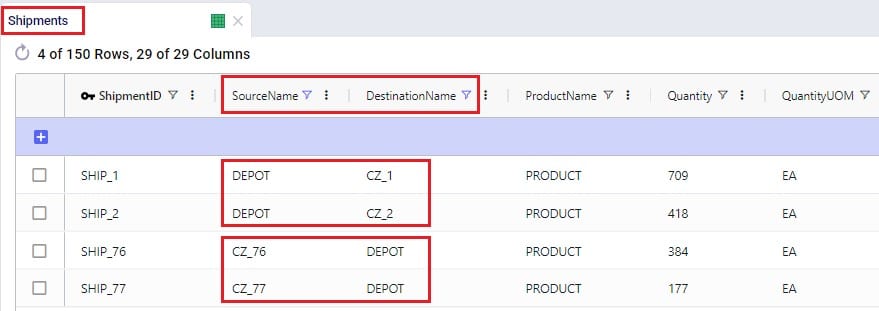

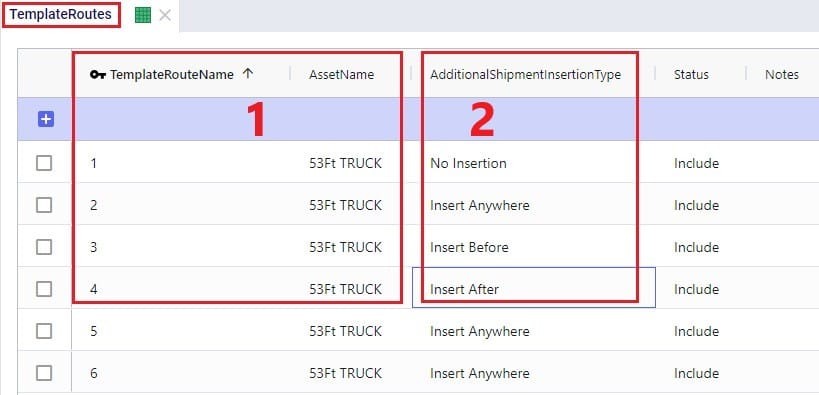

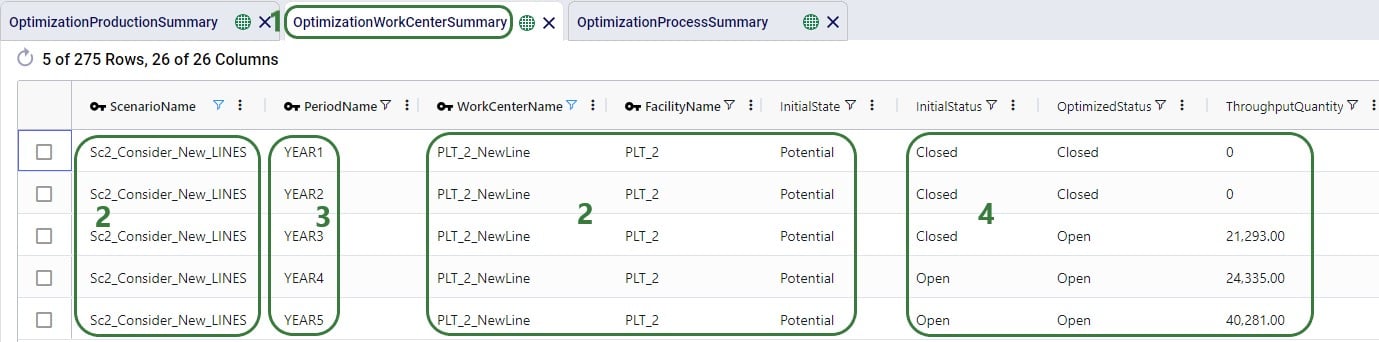

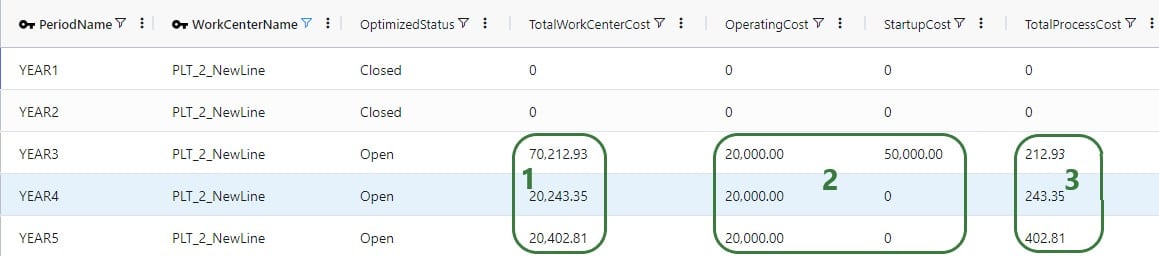

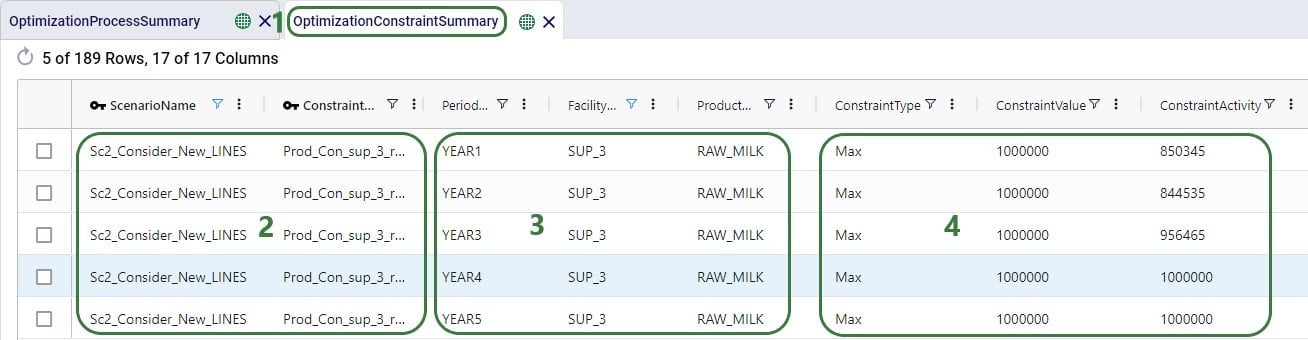

We will show how Hopper routes for the last leg of the network, from DCs to customers, can be taken into account during a Neo solve. Through a series of scenarios, we will explore if using multi-stop routes for this supply chain is beneficial:

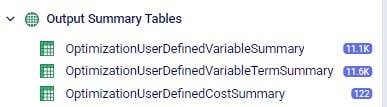

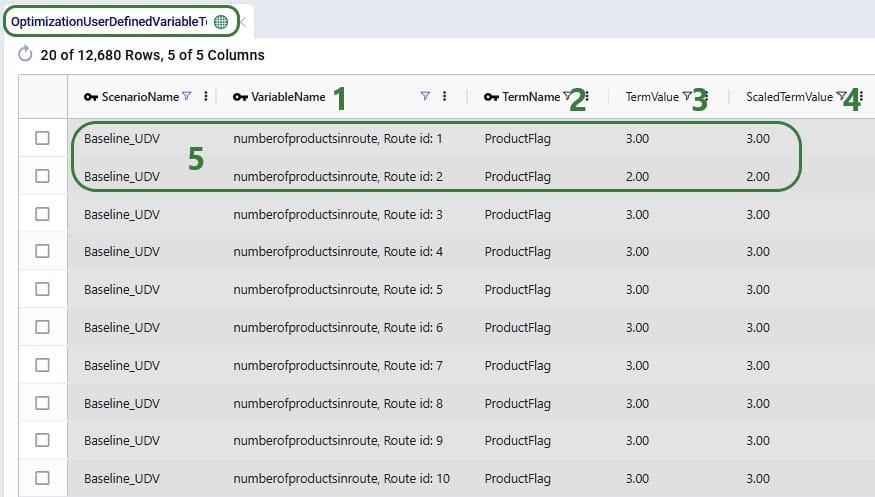

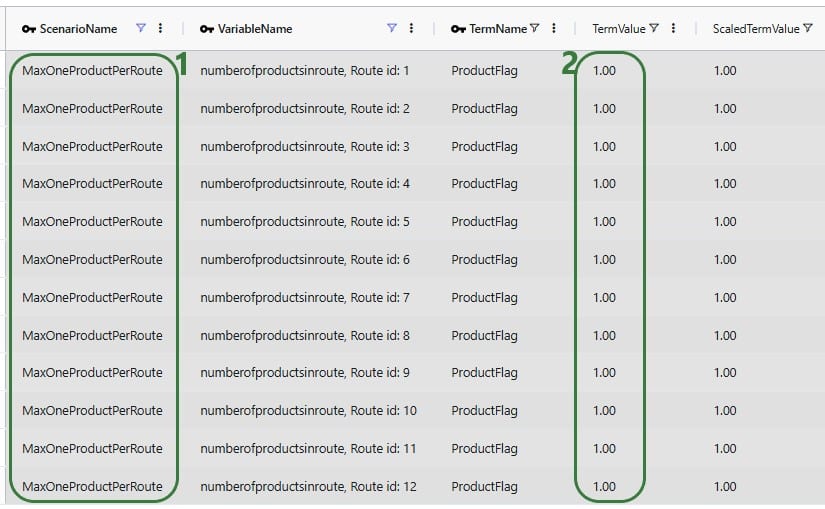

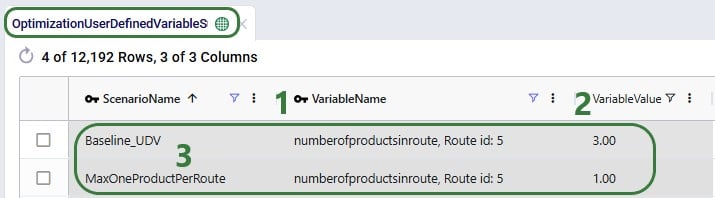

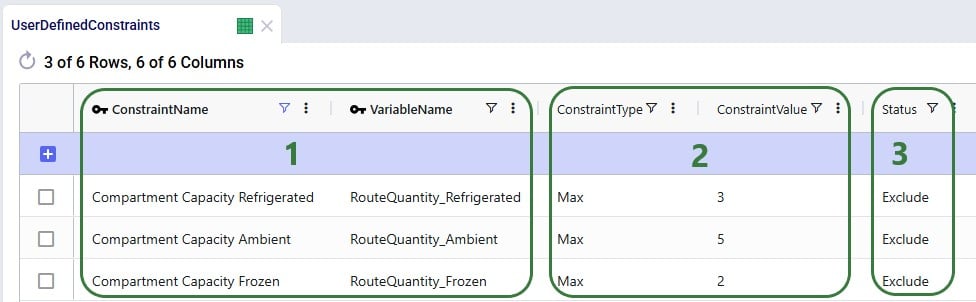

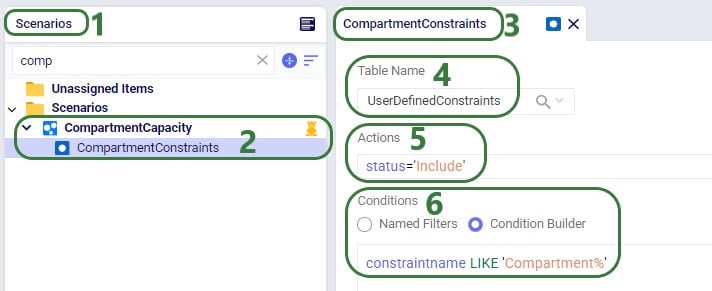

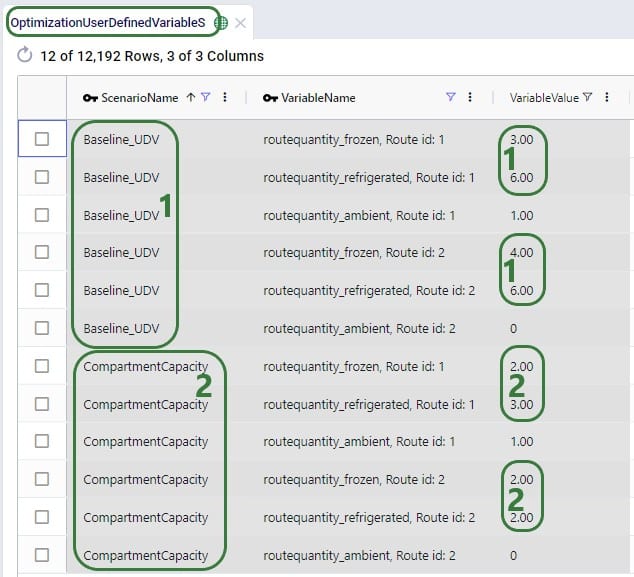

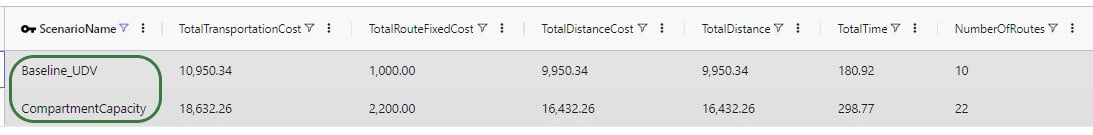

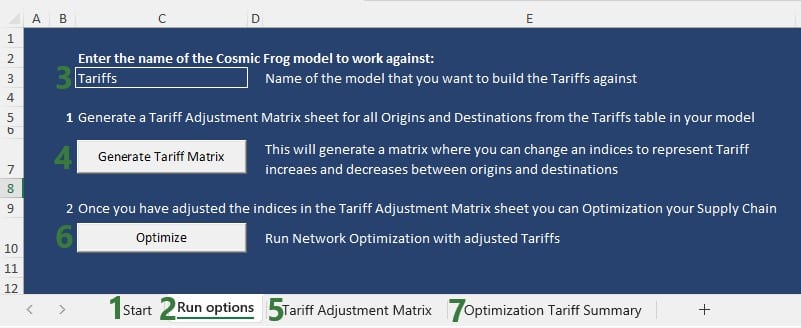

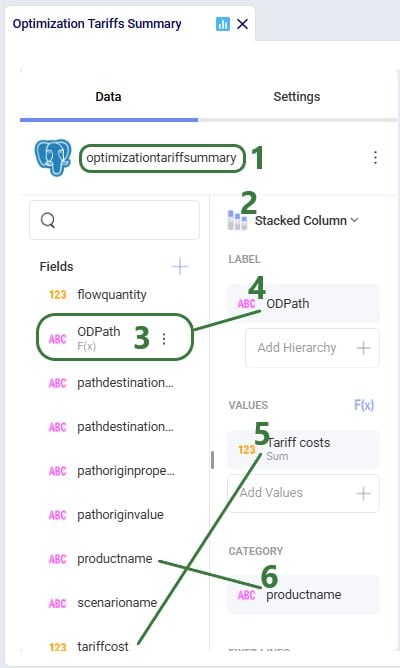

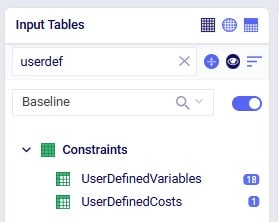

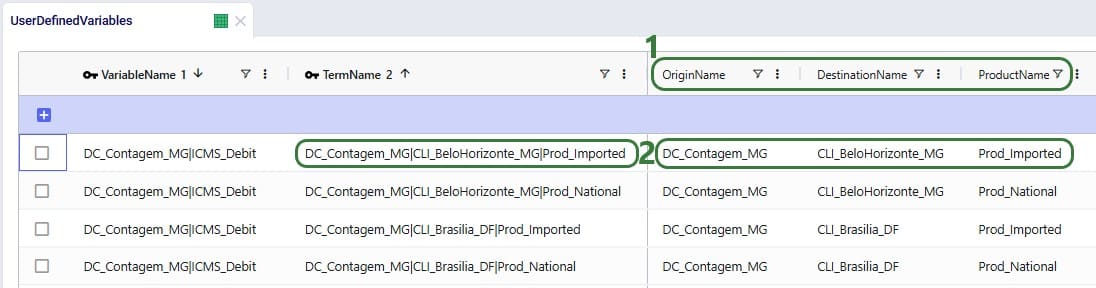

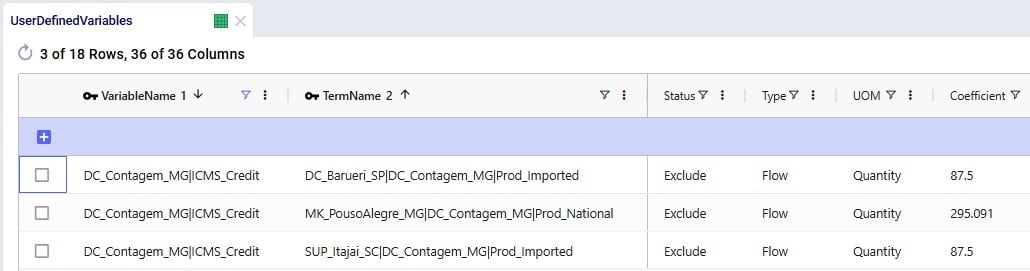

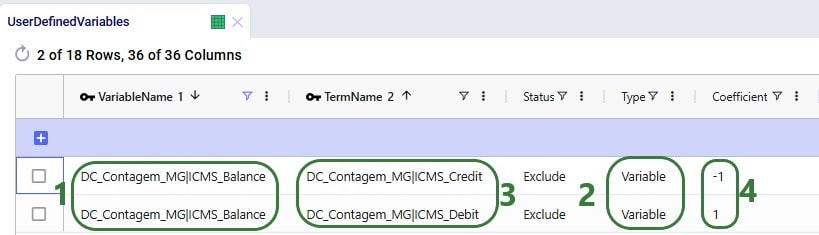

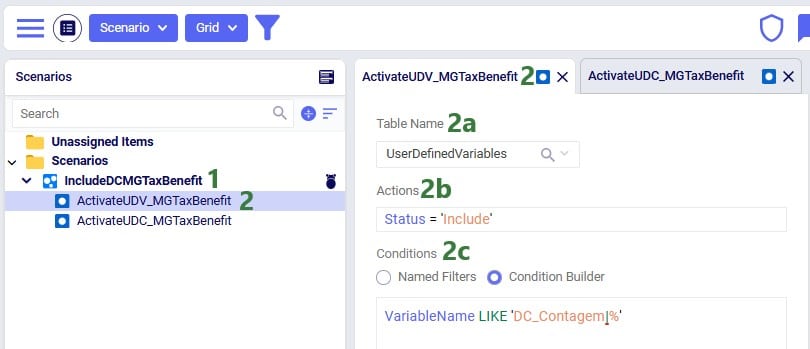

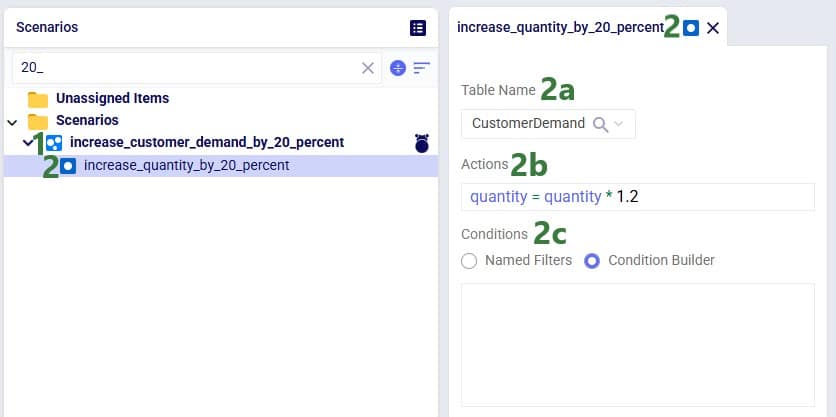

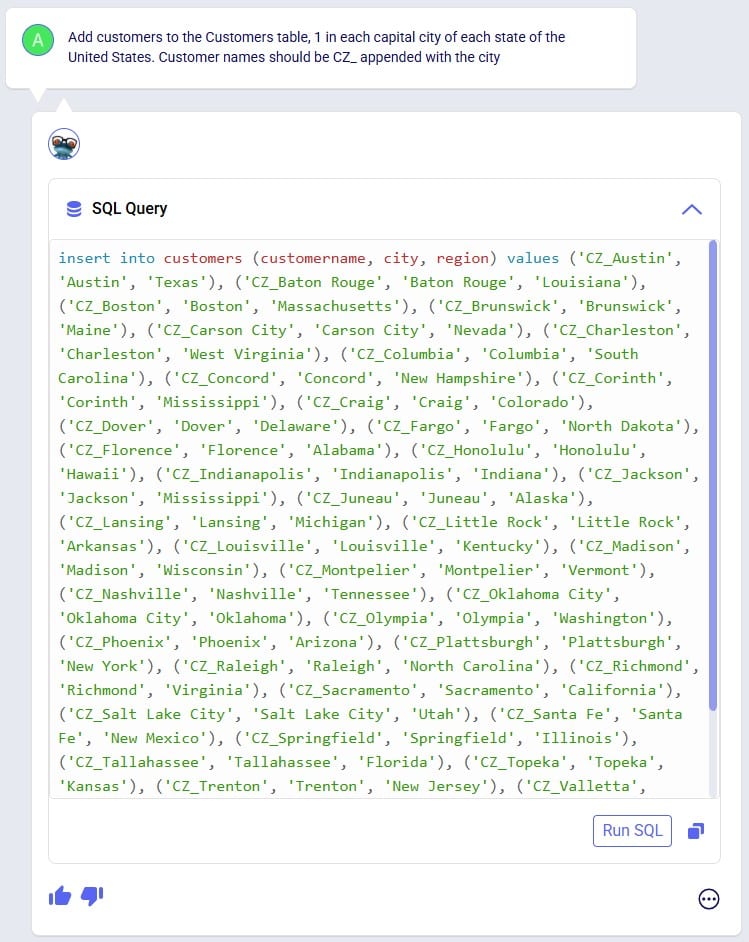

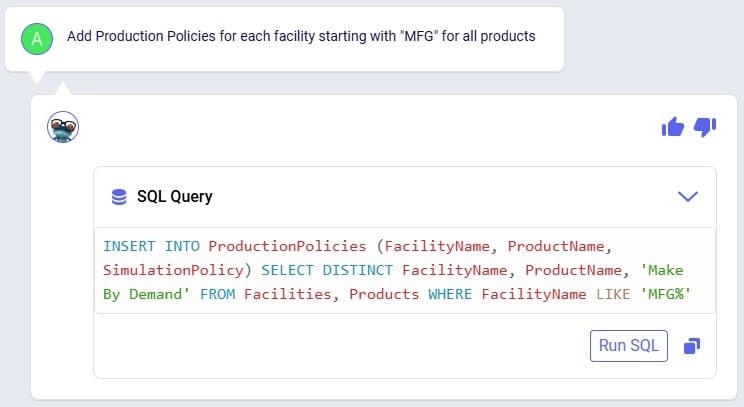

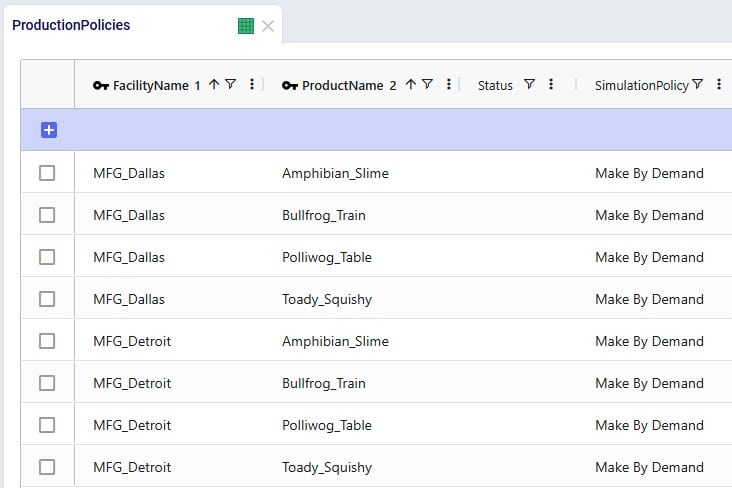

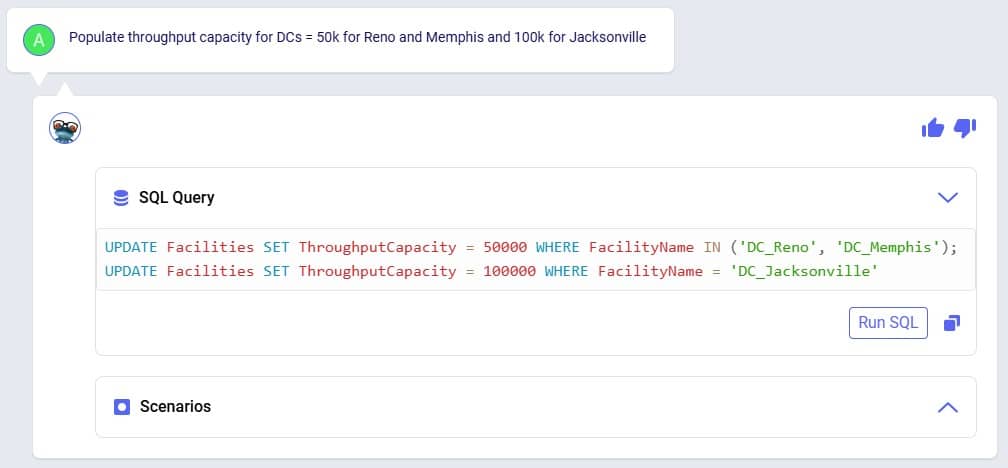

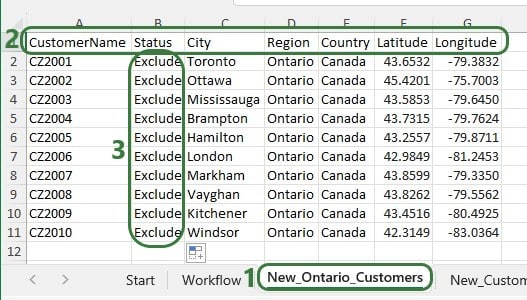

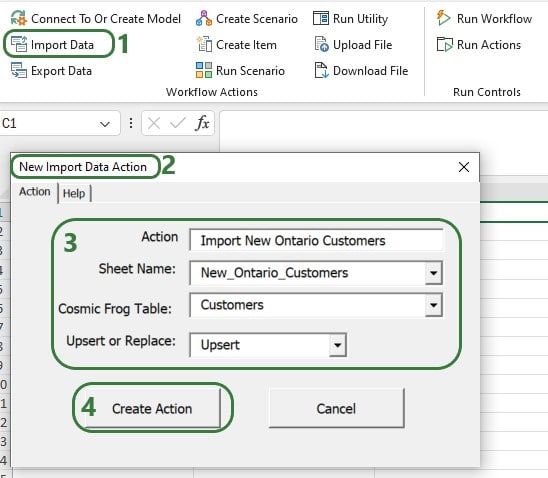

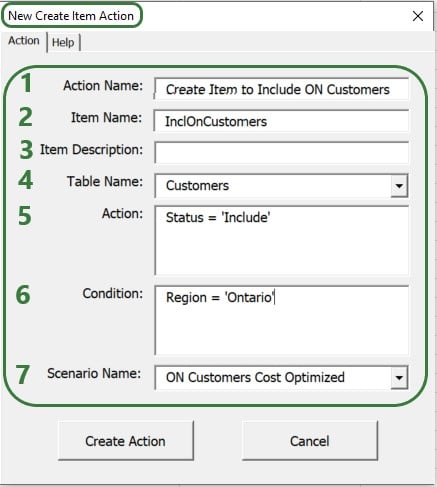

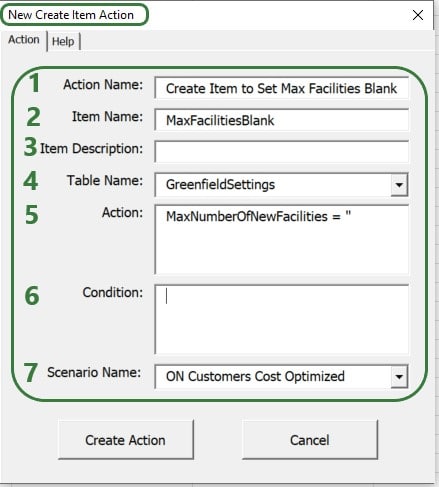

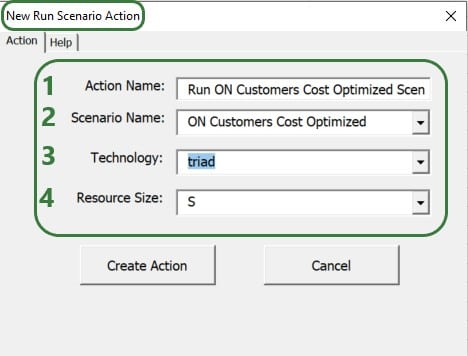

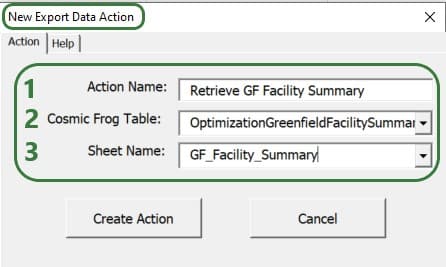

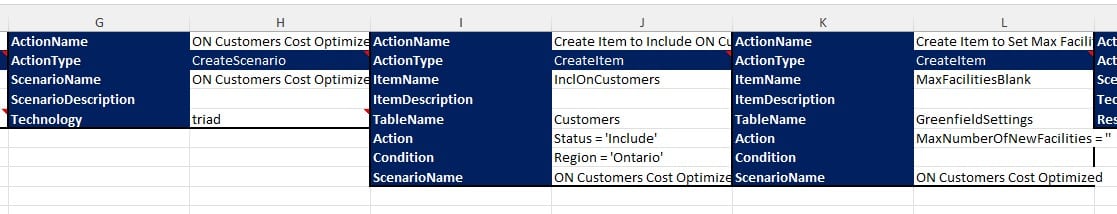

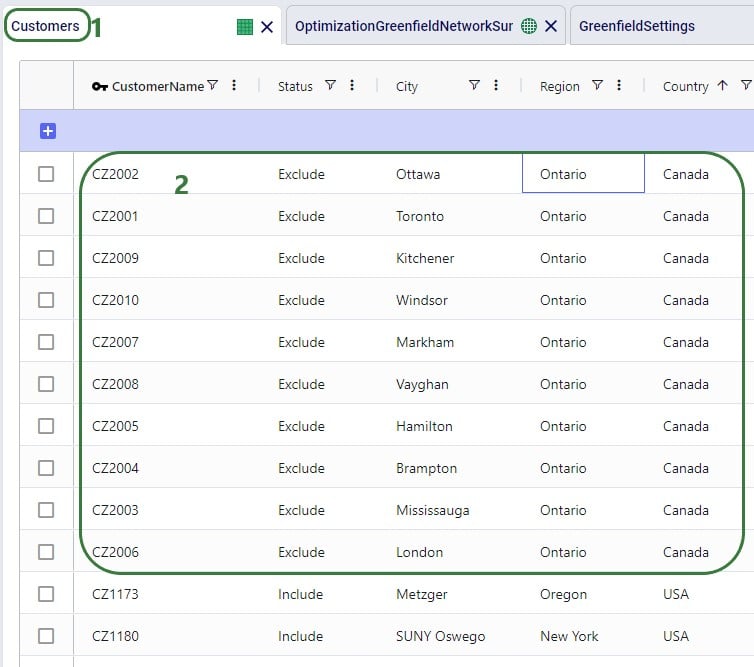

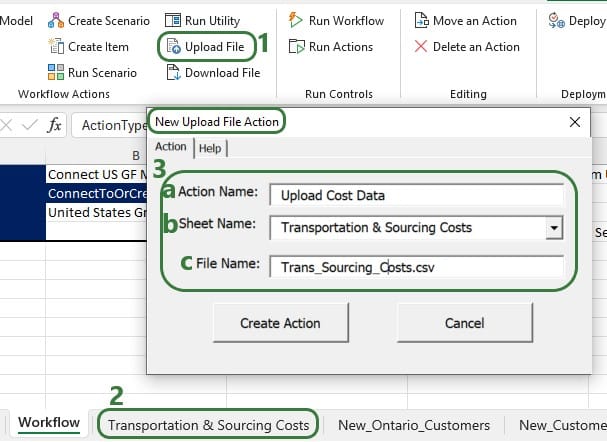

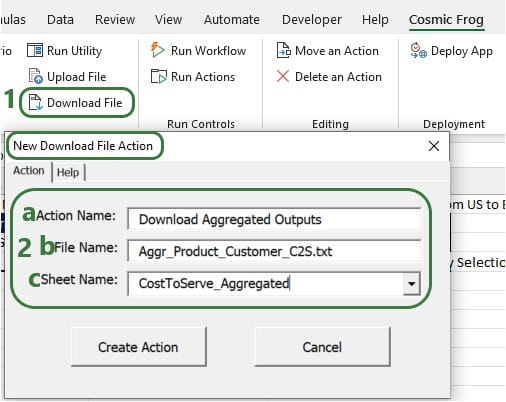

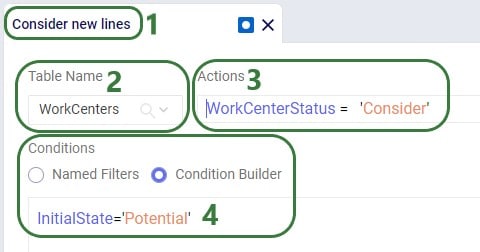

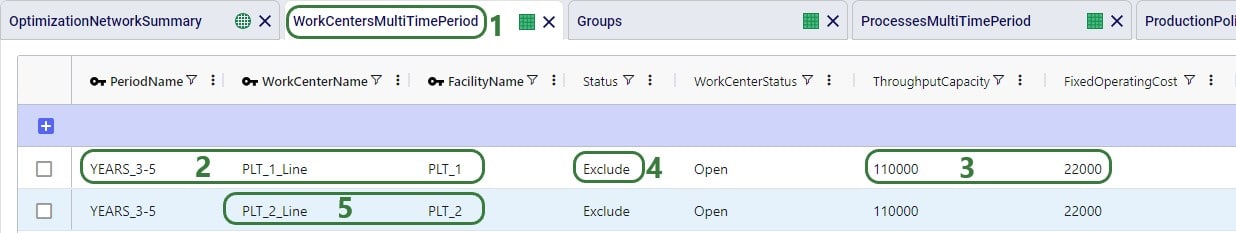

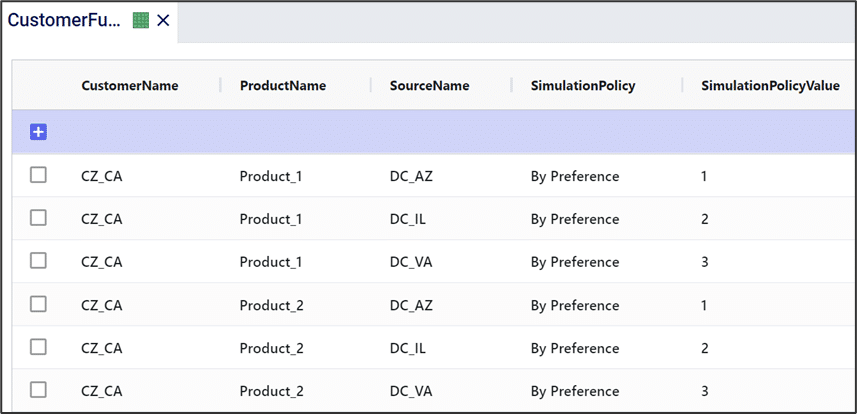

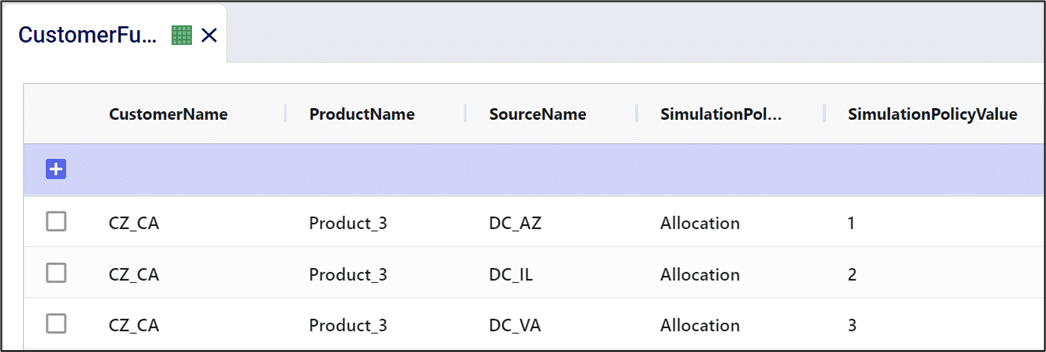

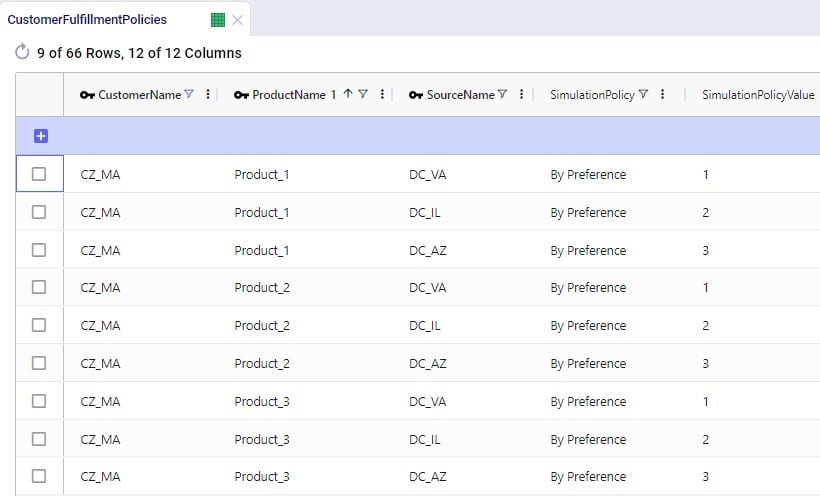

After copying the Global Supply Chain Strategy model from the Resource Library, the following changes/additions were made to be able to consider Hopper generated routes for the DC-CZ leg during the Neo solve. After listing these changes here, we will explain each in more detail through screenshots further below. Users can copy this modified model with the scenarios, outputs, and preconfigured maps from the Resource Library.

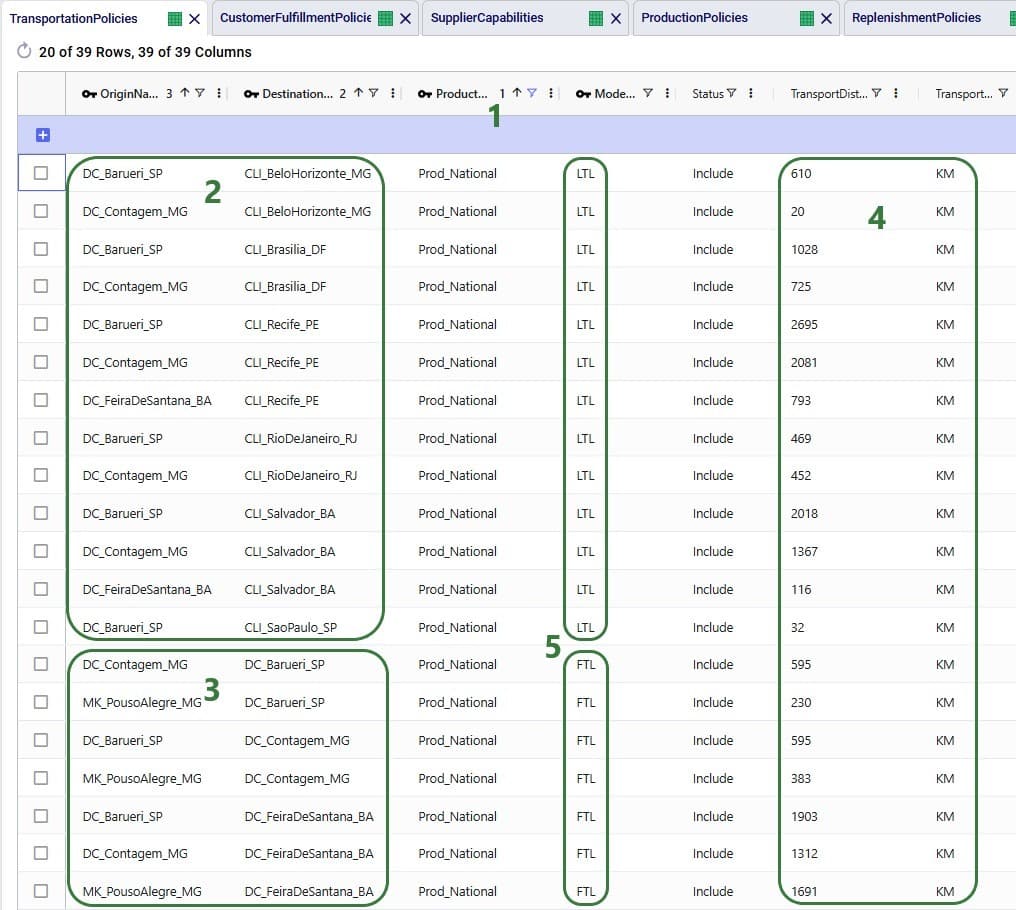

One record is added in this table where it is indicated that the route planner named "RoutePlanner_1" will use Hopper generated routes and is by default included during a Neo solve.

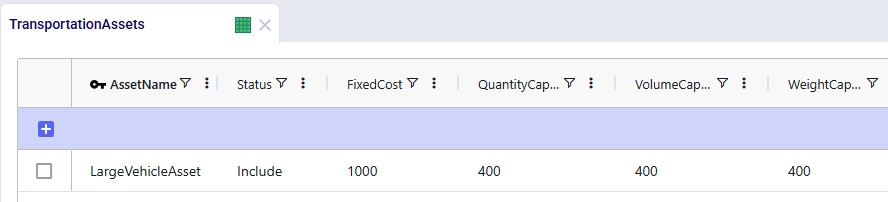

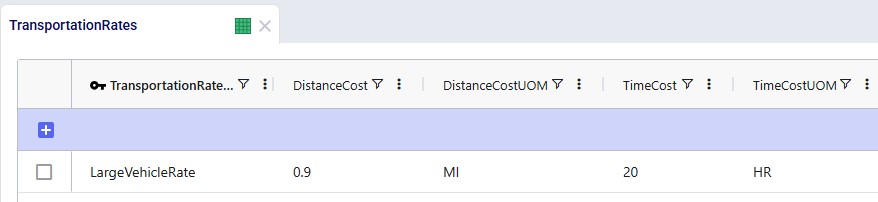

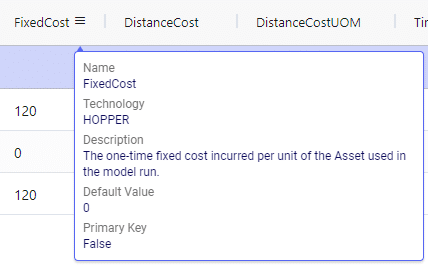

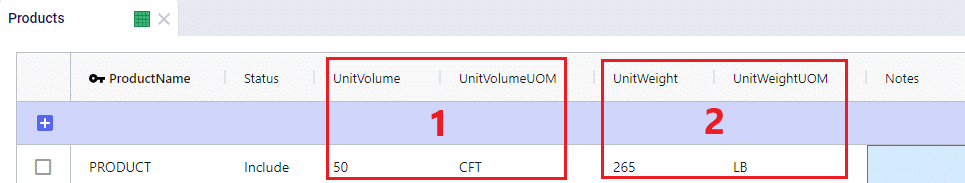

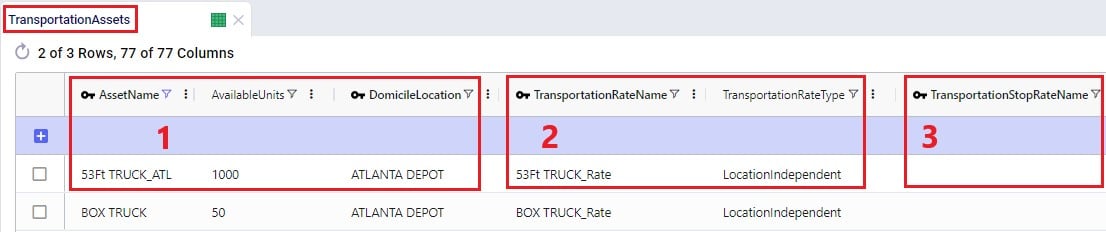

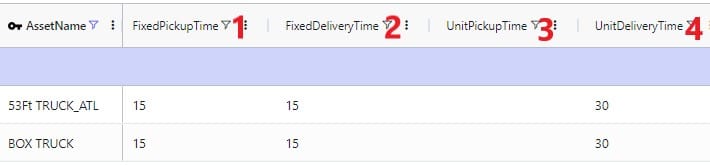

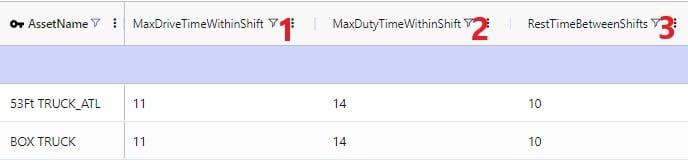

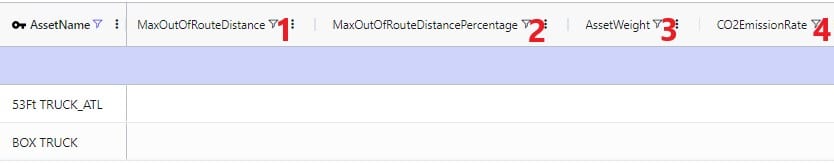

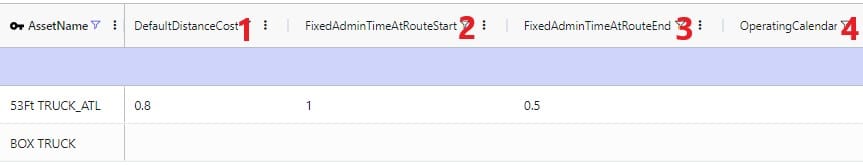

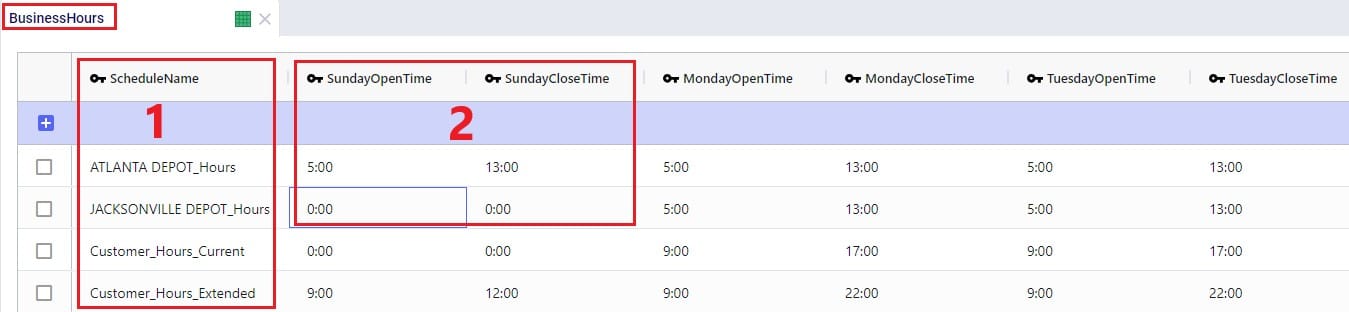

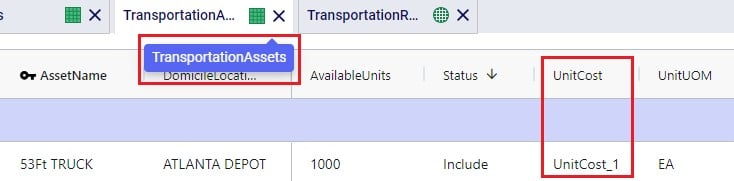

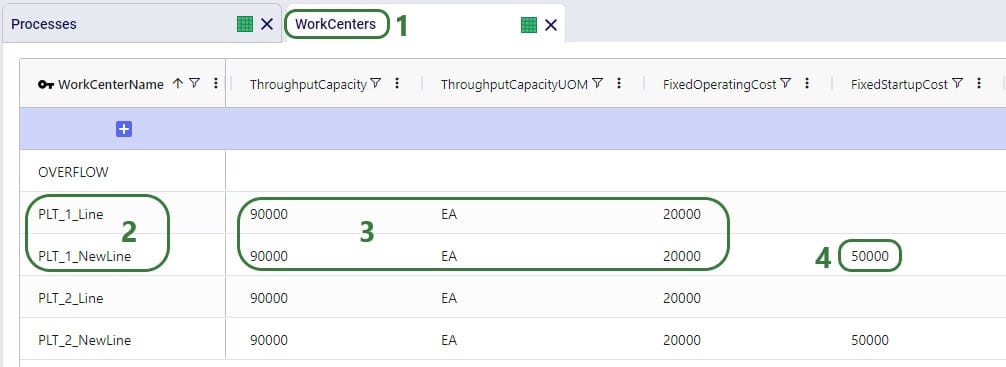

We choose to add our own asset instead of the 3 default ones that will be used if the Transportation Assets table is left blank. The characteristics of our large vehicle are shown in the next 2 screenshots:

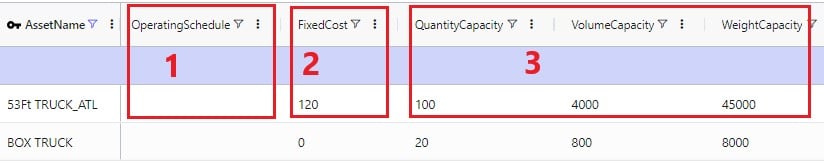

This large asset is included in the scenario runs as its Status = Include. The fixed cost of the asset is $1,000, with a capacity of 400 units / 400 cubic feet / 400 pounds.

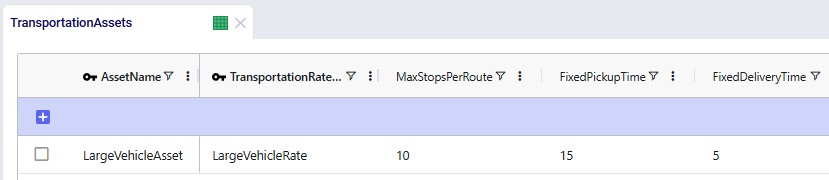

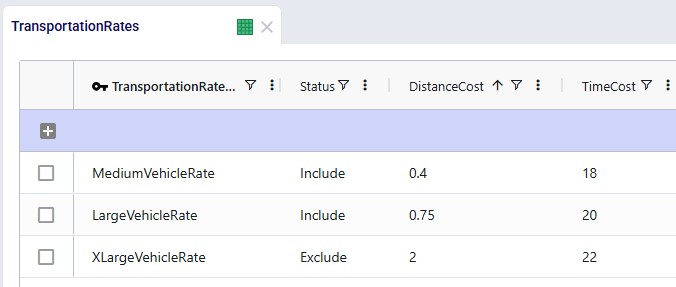

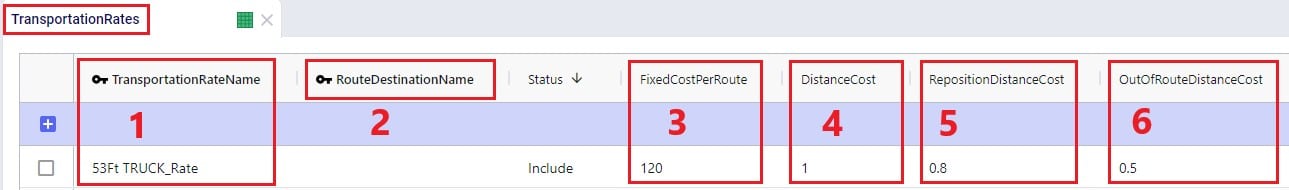

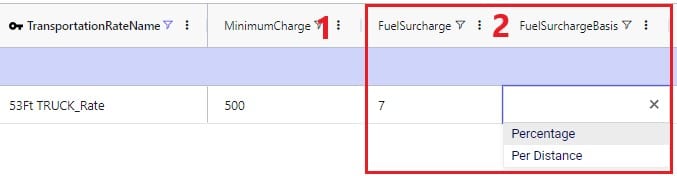

A rate record is set up in the Transportation Rates input table in order to model distance- and time-based costs. This is shown in the next screenshot. To use it, we link it to the asset by using the Transportation Rate Name field on the Transportation Assets table.

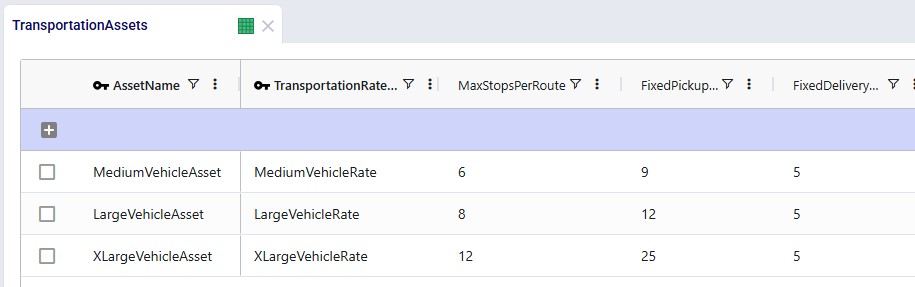

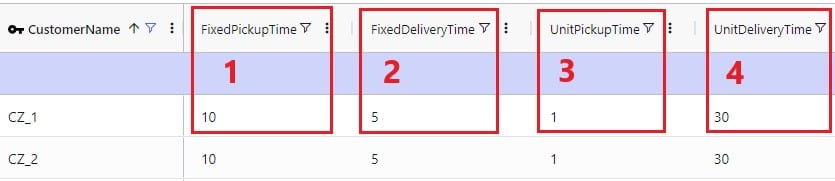

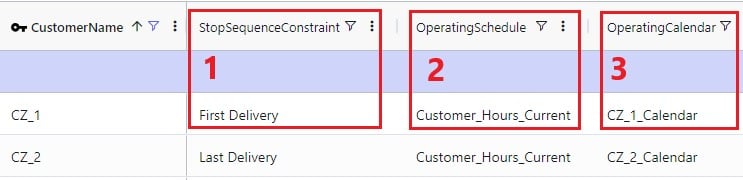

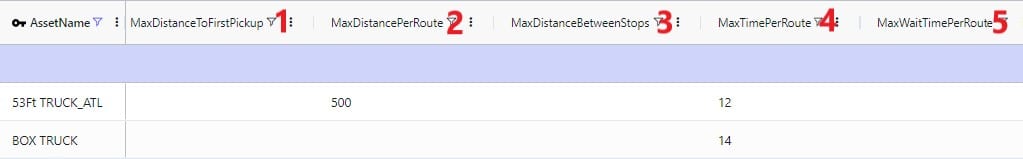

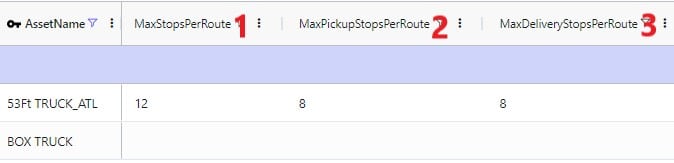

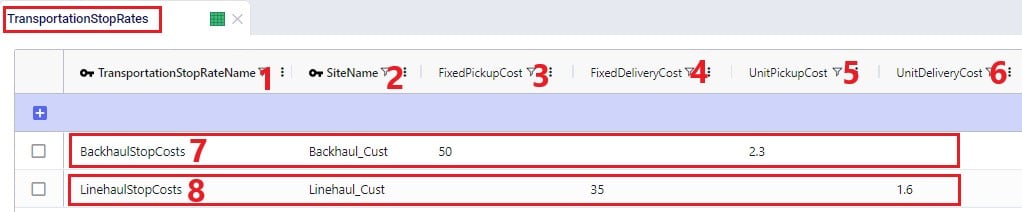

The Max Stops Per Route is set to 10. A Fixed Pickup Time of 15 minutes is entered, which will be applied once when picking up product at the DCs. A Fixed Delivery Time of 5 minutes is also set; this will be applied once at each customer when delivering product (the UOM fields are omitted in the screenshot; they are set to MIN).

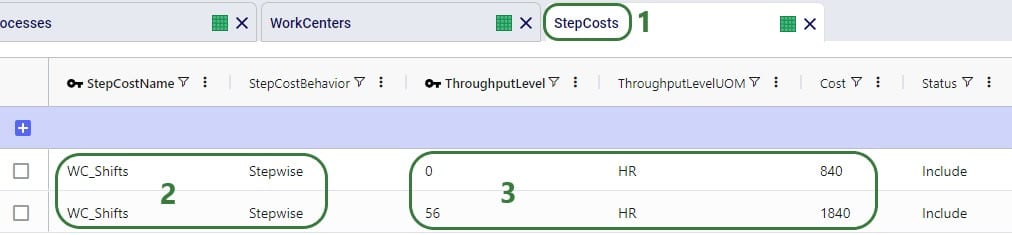

Besides the fixed cost for the asset as specified in the Transportation Assets table, we also want to apply both distance- and time-based costs to the routes:

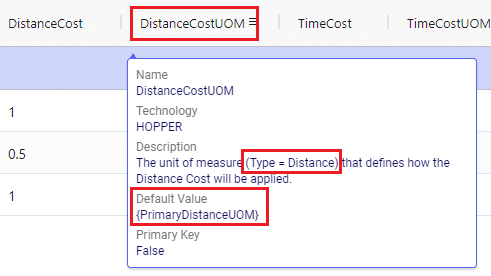

A record is set up in the Transportation Rates table where Transportation Rate Name is used in the Transportation Assets table to link the correct rate to the correct asset (e.g. LargeVehicleRate is used for the LargeVehicleAsset). The per distance cost is set to 0.9 per mile, and the time cost is set to 20 per hour; the latter mostly reflects the cost for the driver.
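To make the cost components concrete, here is a minimal sketch of how the cost of one candidate route could be put together from the settings above. It assumes that the route cost is simply the asset fixed cost plus a distance cost plus a time cost, and that the time cost also applies to the fixed pickup and delivery times; the actual calculation inside the solver may differ. The function name and the route distance, drive time, and stop count are hypothetical.

```python
def route_cost(distance_miles, drive_hours, num_stops,
               fixed_cost=1000.0,        # asset fixed cost (Transportation Assets)
               cost_per_mile=0.9,        # distance cost (Transportation Rates)
               cost_per_hour=20.0,       # time cost (Transportation Rates)
               fixed_pickup_min=15.0,    # applied once at the DC
               fixed_delivery_min=5.0):  # applied once per customer stop
    """Illustrative route cost: fixed + distance-based + time-based."""
    # Assumption: pickup and delivery times are charged at the hourly rate too.
    service_hours = (fixed_pickup_min + num_stops * fixed_delivery_min) / 60.0
    distance_part = cost_per_mile * distance_miles
    time_part = cost_per_hour * (drive_hours + service_hours)
    return fixed_cost + distance_part + time_part


# Hypothetical route: 250 miles, 5 hours of driving, 3 customer stops.
example = route_cost(distance_miles=250, drive_hours=5, num_stops=3)
print(round(example, 2))
```

For this made-up route the three parts are 1,000 (fixed), 225 (distance), and 110 (time, over 5.5 hours), for a total of 1,335.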

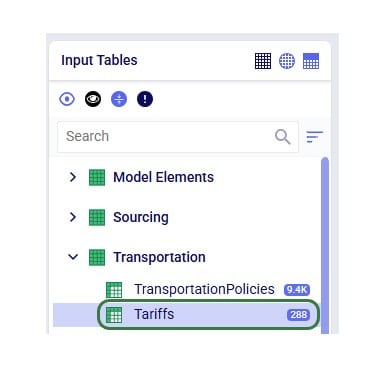

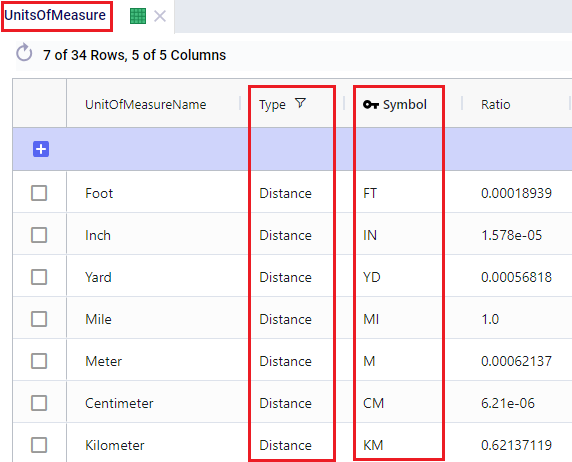

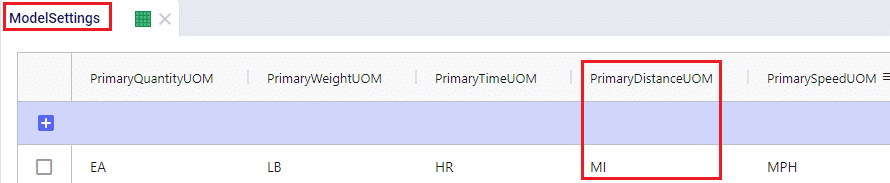

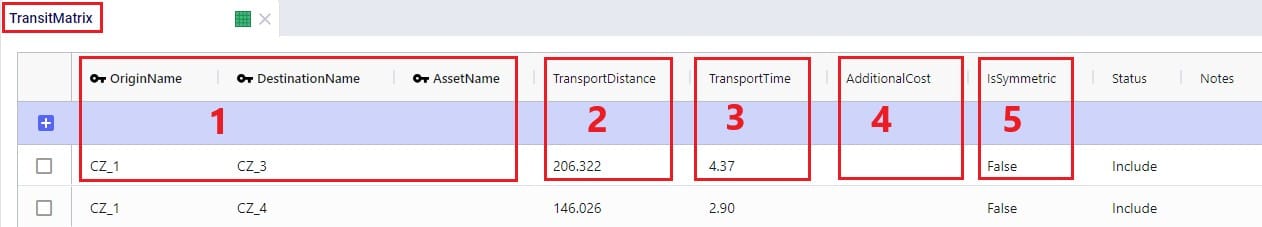

For the scenarios to use actual road distances and transport times, the Distance Lookup Utility in Cosmic Frog was used with the following parameters:

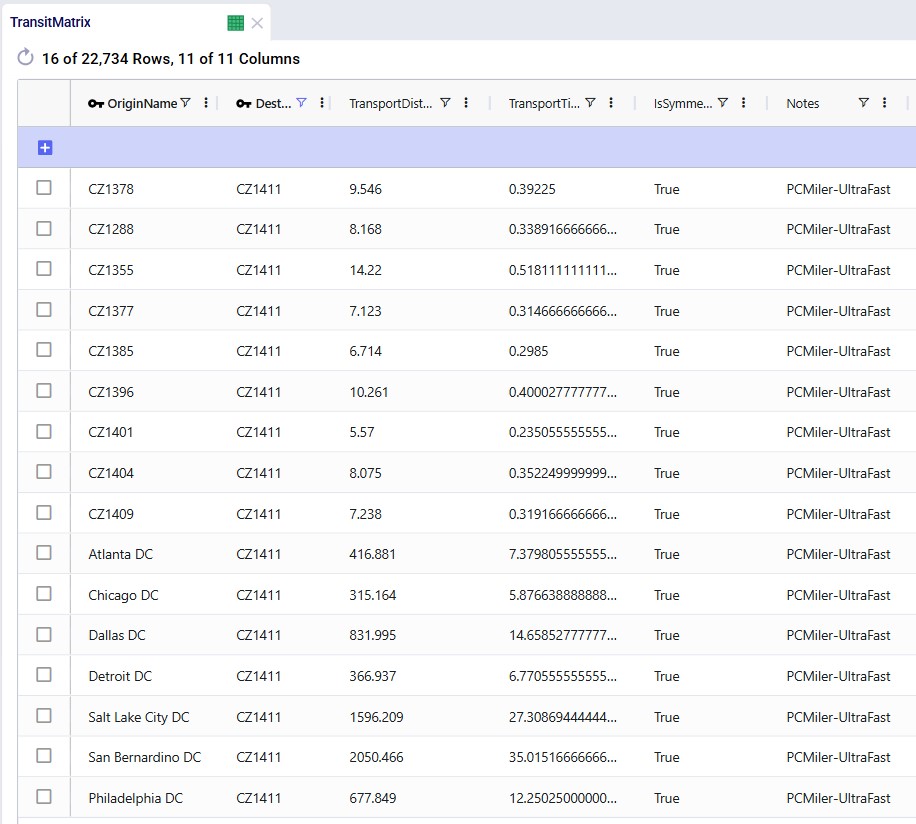

A subset of records filtered for 1 customer destination (CZ1411) is shown in this next screenshot where we see the distance and time from the 10 closest other customers to this customer, and also from all 7 DCs:

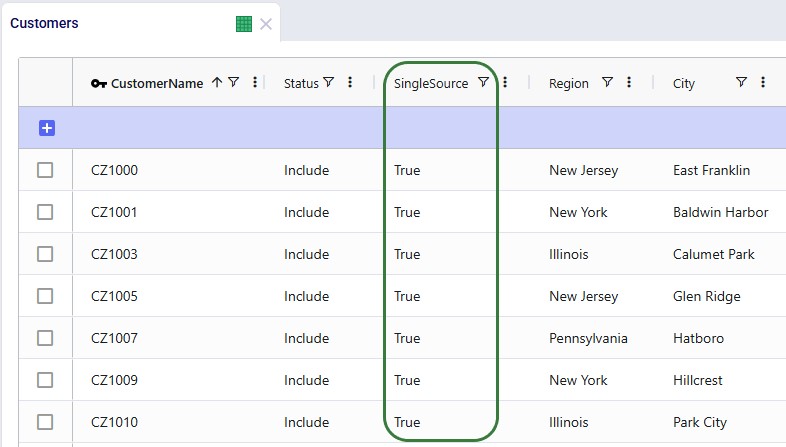

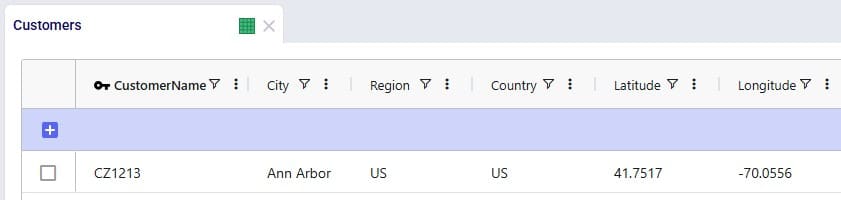

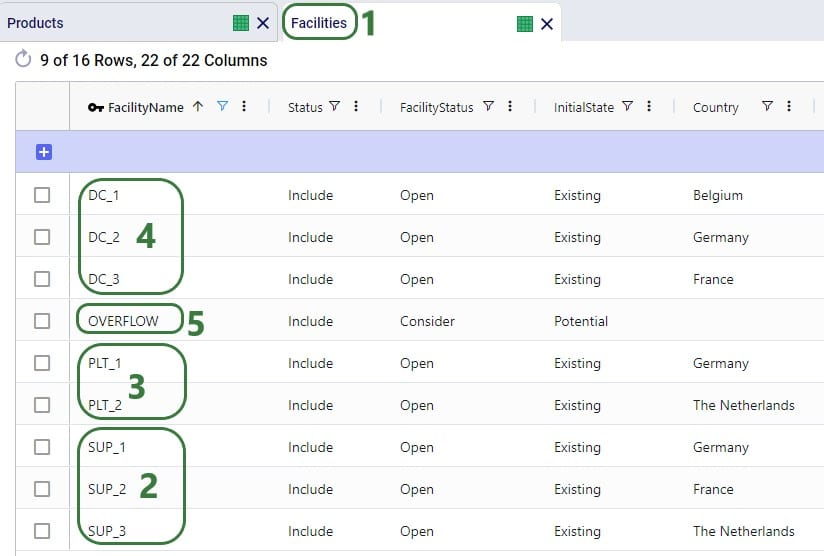

To remove potential dual sourcing, all customers are set to single sourcing (Single Source field is set to True on the Customers table), meaning they need to be served all product they demand from a single DC. In this model, setting customers to single sourcing also helps reduce the runtime of the scenarios:

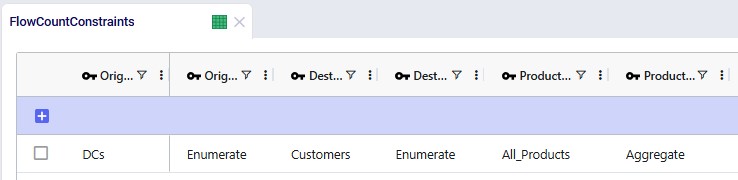

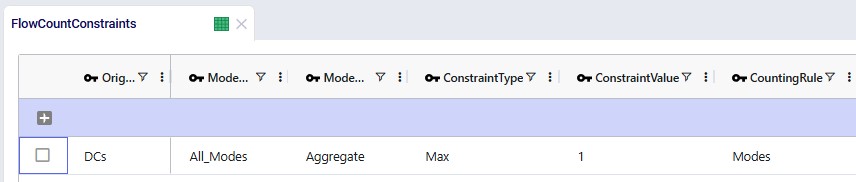

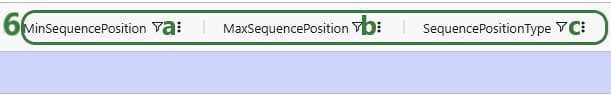

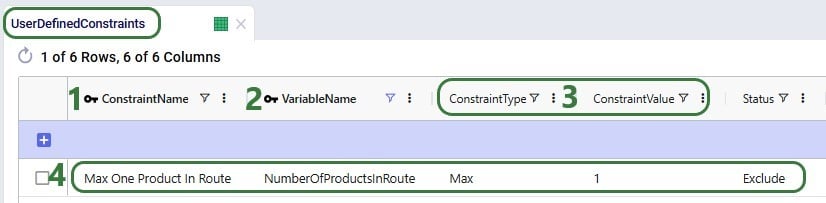

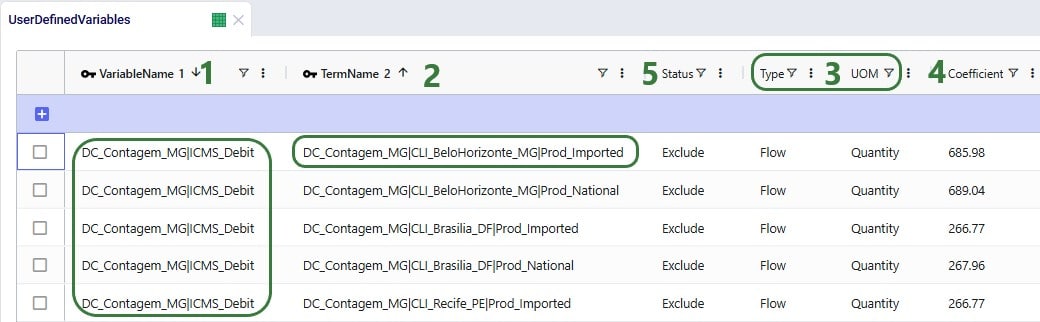

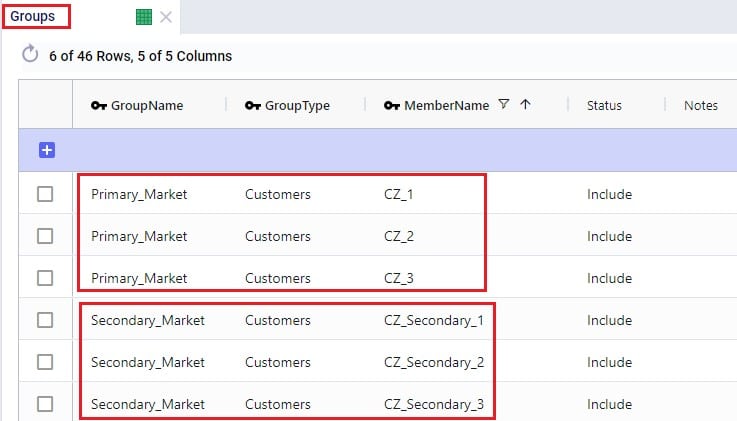

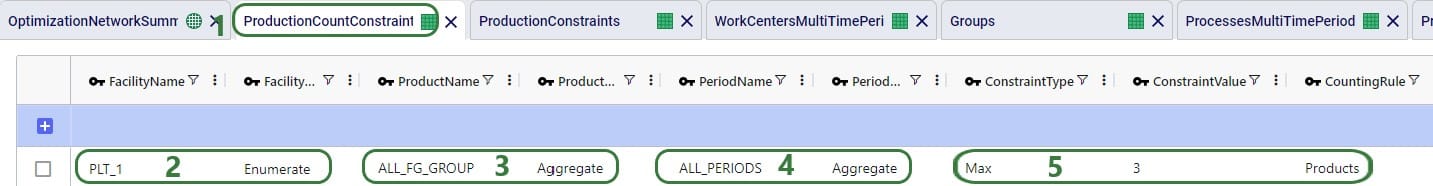

To further help reduce solve times, a flow count constraint that allows the selection of at maximum 1 mode to each customer is added:

Groups for all DCs, all Customers, all Products, and all Modes (the 2 to customers, Direct and MultiStop) are used in the Origin, Destination, Product, and Mode fields on the Flow Count Constraints table. The Group Behavior fields for Origin and Destination are set to Enumerate, meaning that under the hood, this constraint is expanded into individual constraints for each DC-Customer combination. The Group Behavior fields for Product and Mode, on the other hand, are set to Aggregate, meaning that the constraint applies to all products and modes together. The Counting Rule indicates the combination of entities that "is counted on", in this case the number of modes, which is not allowed to be more than 1 (Type = Max and Value = 1), i.e. only 1 mode can be selected on each DC-customer lane.
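The difference between the Enumerate and Aggregate group behaviors can be sketched as follows. This is an illustration only, not how Cosmic Frog implements constraints: the function names, the dictionary layout, and the sample locations and flows are hypothetical.

```python
from itertools import product


def expand_flow_count_constraint(origins, destinations, modes, max_value=1):
    """Enumerate origins and destinations: one constraint per DC-customer lane.
    Modes (and products) are aggregated: they stay together in each constraint."""
    return [
        {"origin": o, "destination": d, "modes": tuple(modes),
         "type": "Max", "value": max_value}
        for o, d in product(origins, destinations)
    ]


def violates(constraint, flows):
    """flows: iterable of (origin, destination, product, mode, quantity).
    Counts the distinct modes with flow on the lane, across all products."""
    used_modes = {
        mode for o, d, _prod, mode, qty in flows
        if o == constraint["origin"] and d == constraint["destination"]
        and mode in constraint["modes"] and qty > 0
    }
    return len(used_modes) > constraint["value"]


dcs = ["DC1", "DC2"]
customers = ["CZ1", "CZ2", "CZ3"]
constraints = expand_flow_count_constraint(dcs, customers, ["Direct", "MultiStop"])

# Hypothetical flows: lane DC1-CZ1 uses two modes, which breaks the Max 1 rule.
flows = [
    ("DC1", "CZ1", "Rockets", "Direct", 10),
    ("DC1", "CZ1", "ProductB", "MultiStop", 5),
    ("DC2", "CZ2", "Rockets", "MultiStop", 8),
]
violated = [(c["origin"], c["destination"]) for c in constraints if violates(c, flows)]
print(len(constraints), violated)
```

Two DCs and three customers expand into six lane-level constraints, and only the lane that mixes Direct and MultiStop is flagged.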

The Status field of the Flow Count Constraint is set to Exclude (not shown in the screenshots above), and the constraint will be included in 2 of the scenarios by changing the status through a scenario item.

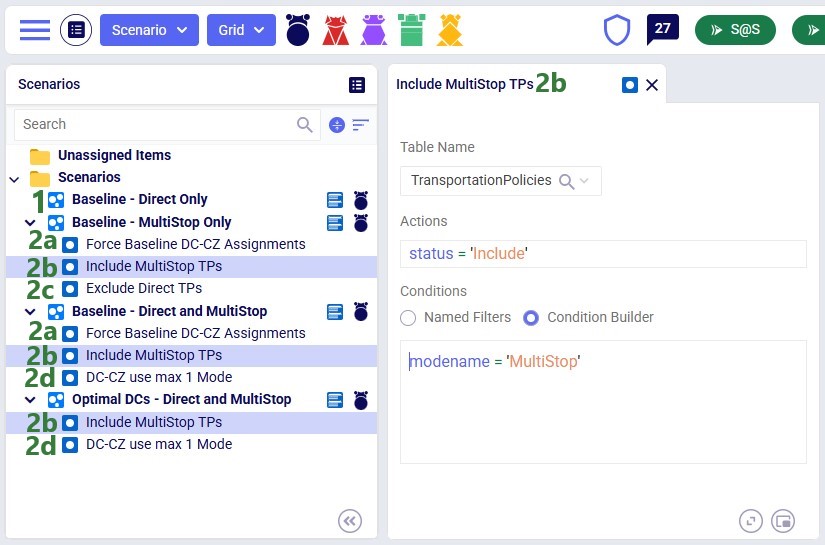

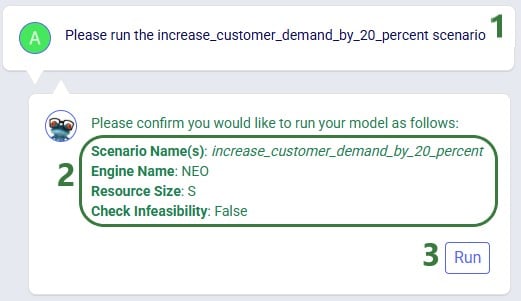

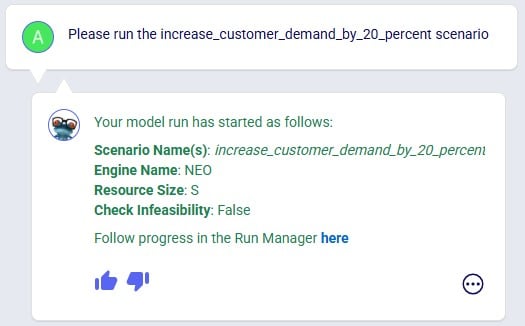

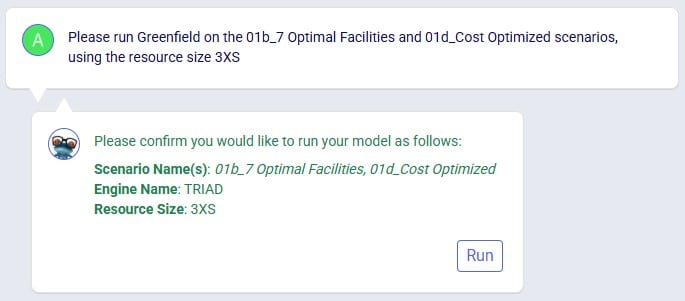

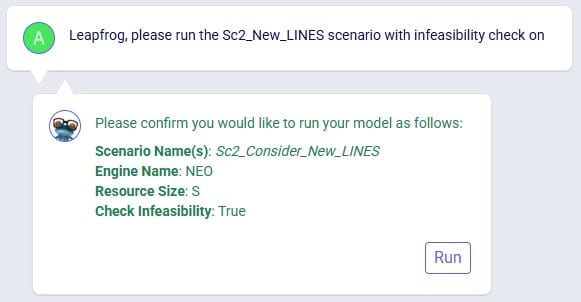

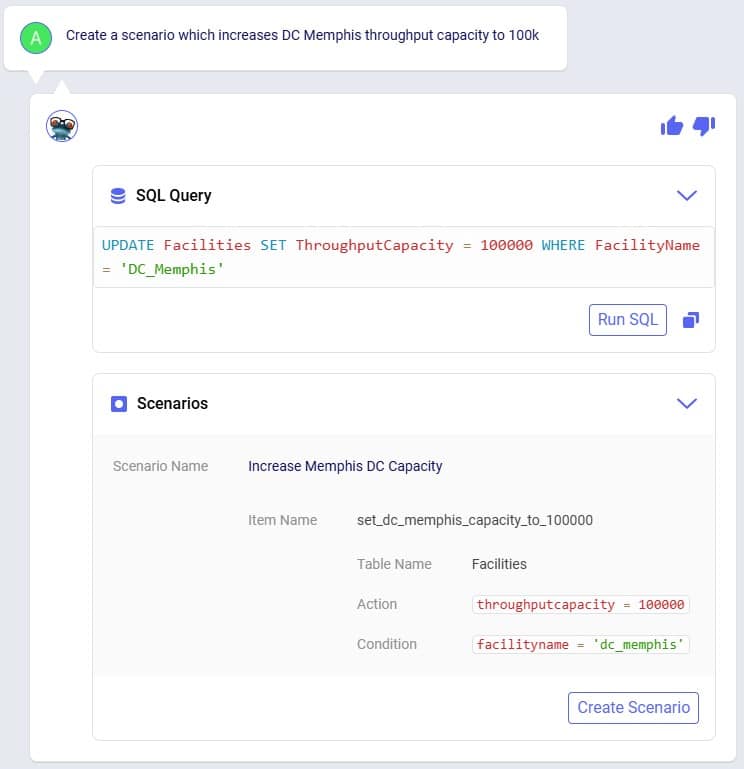

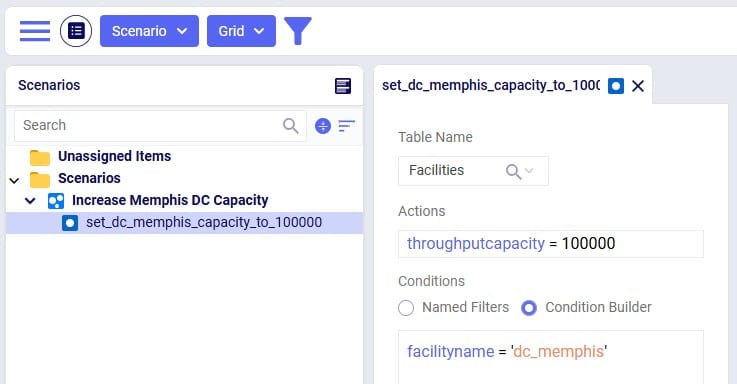

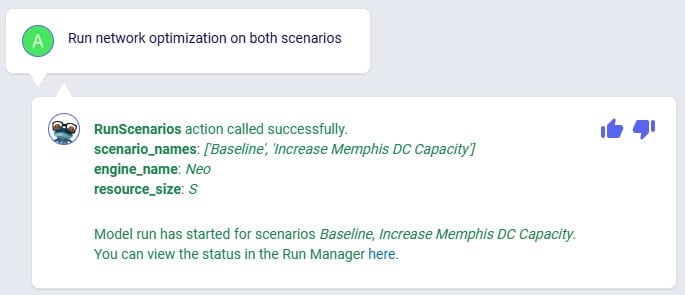

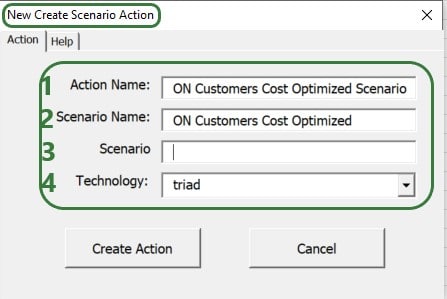

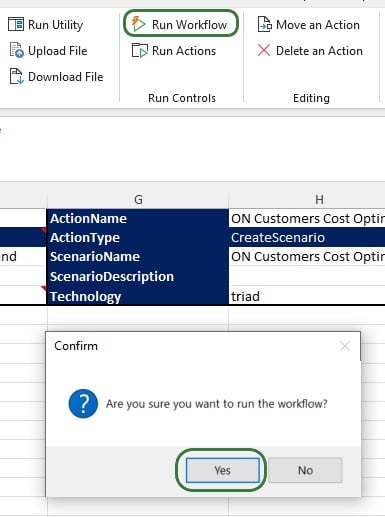

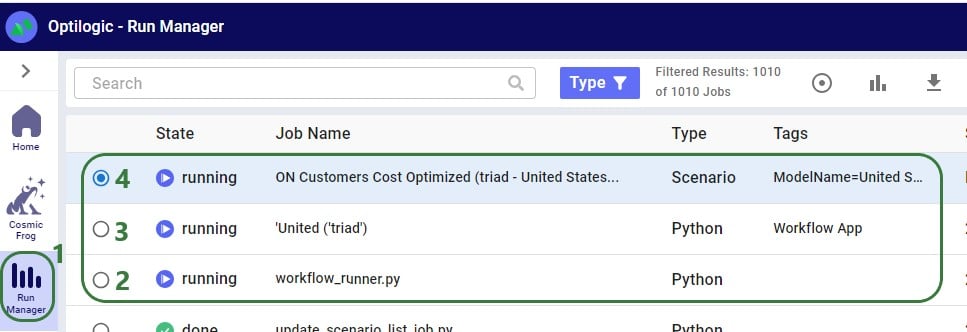

The following screenshot shows the scenarios and which scenario items are associated with each of them. To learn more about creating scenarios and their items, please see these 2 help center articles: "Creating Scenarios in Cosmic Frog" and "Getting Started with Scenarios".

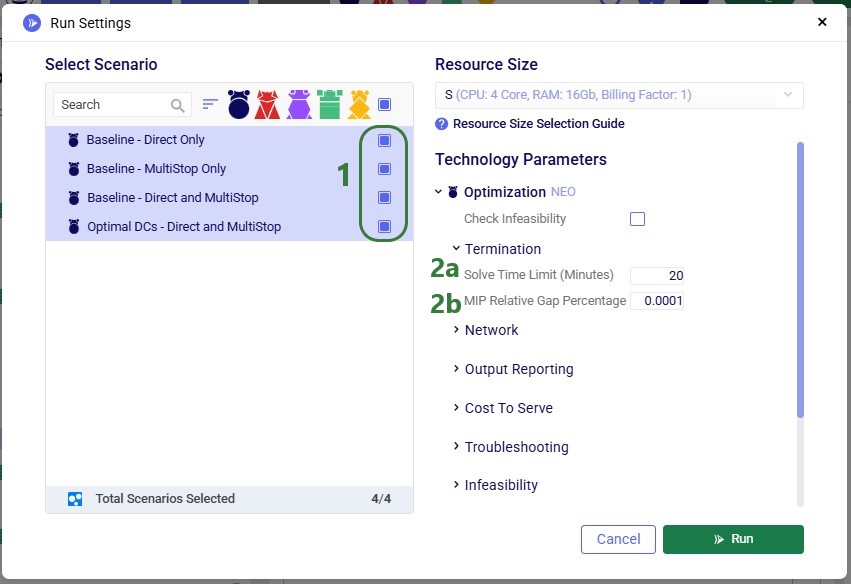

To find a balance between running the model to a low enough gap to reduce any suboptimality in the solution and running for a reasonable amount of time, the following is set on the Run Settings modal which comes up after clicking on the green Run button at the top right in Cosmic Frog:

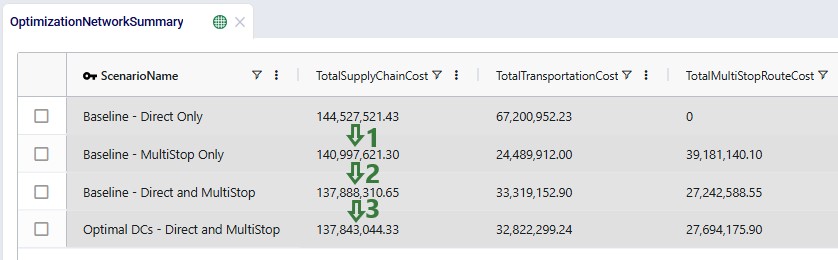

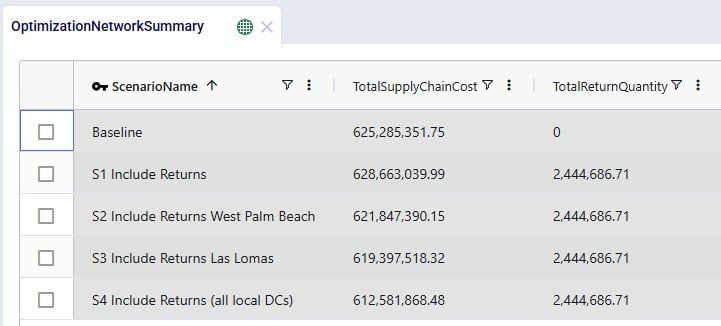

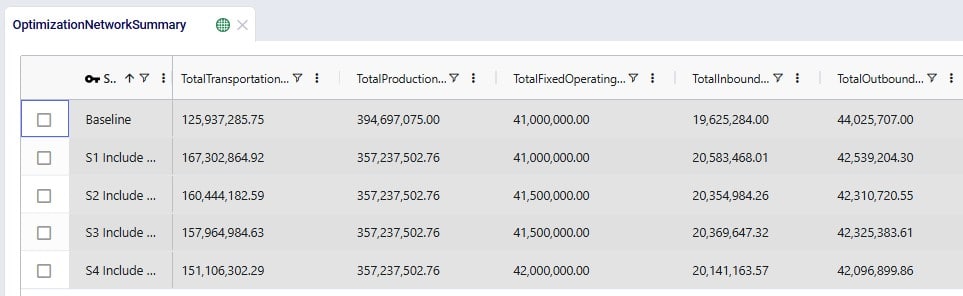

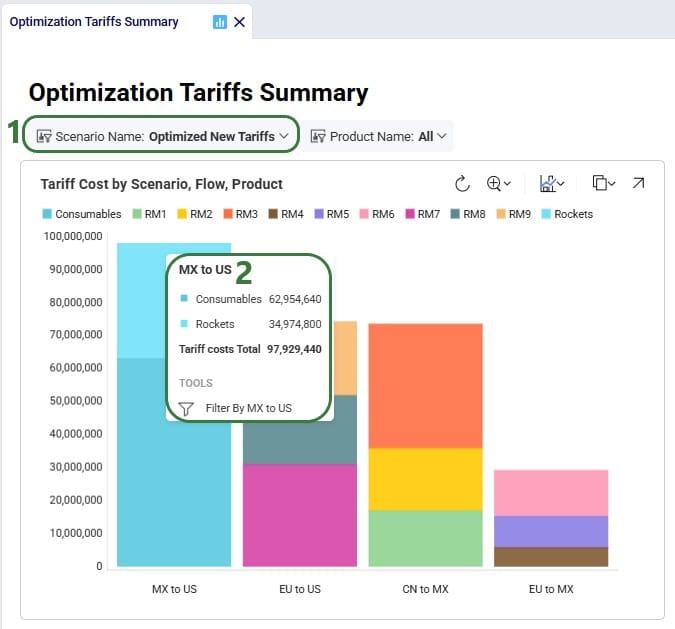

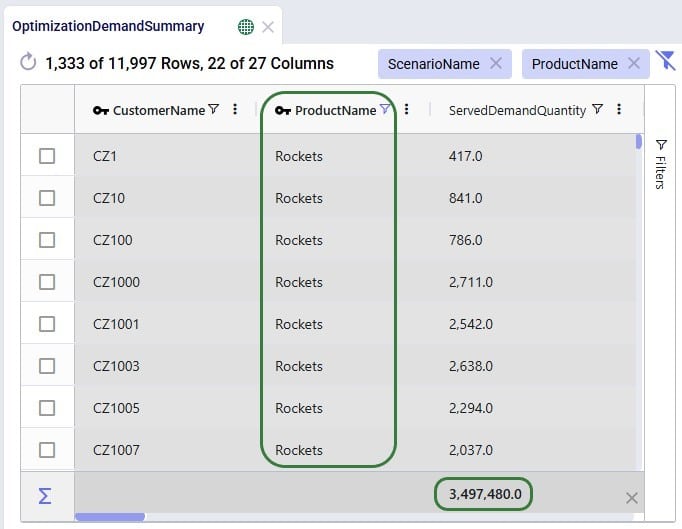

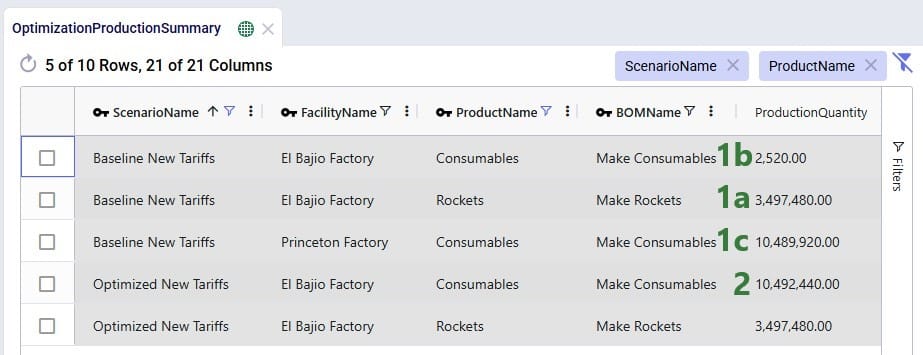

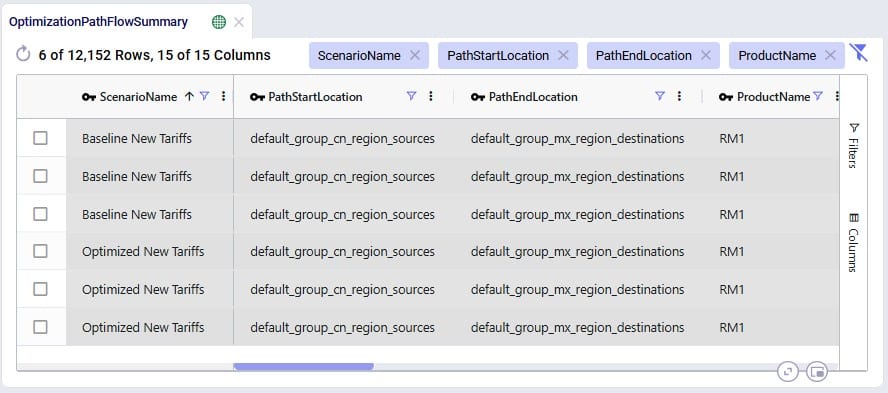

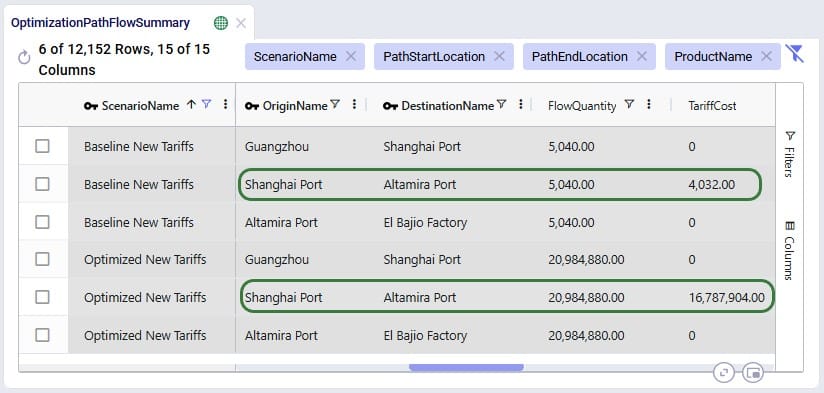

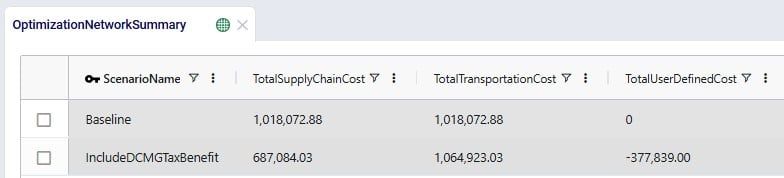

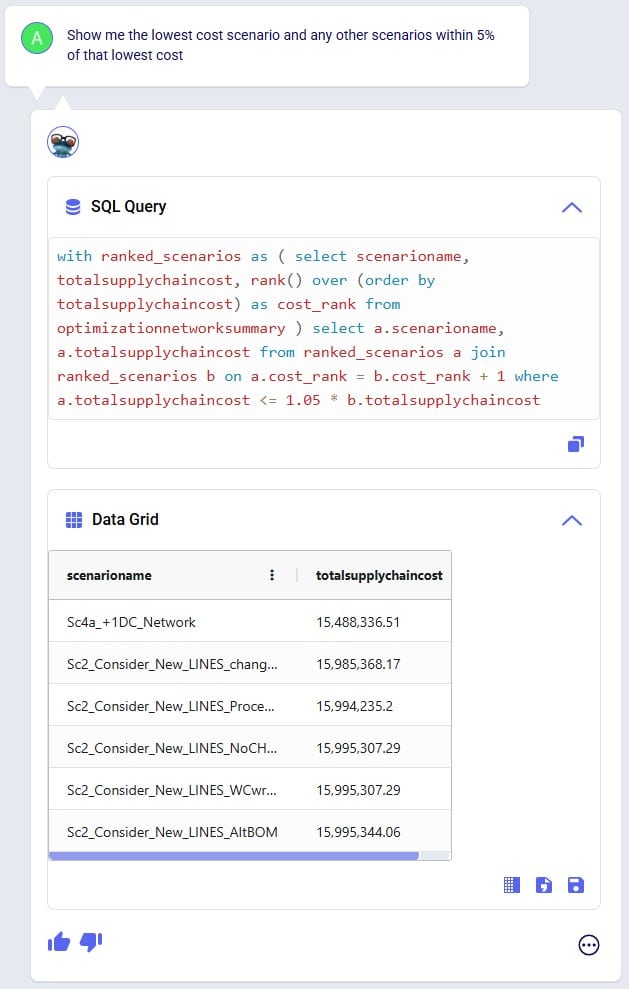

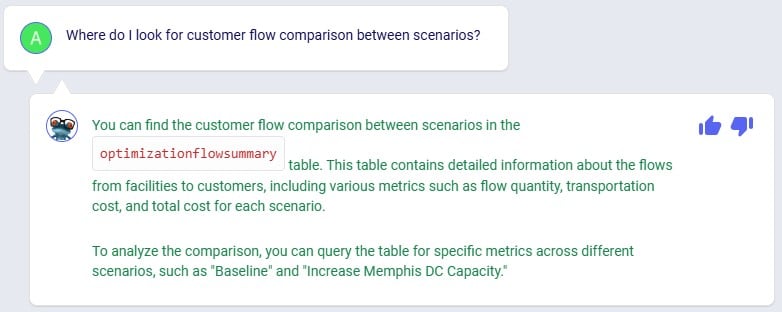

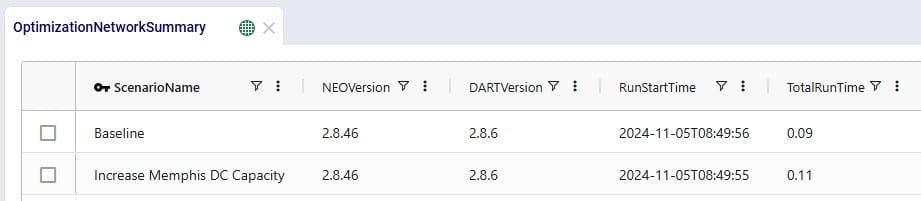

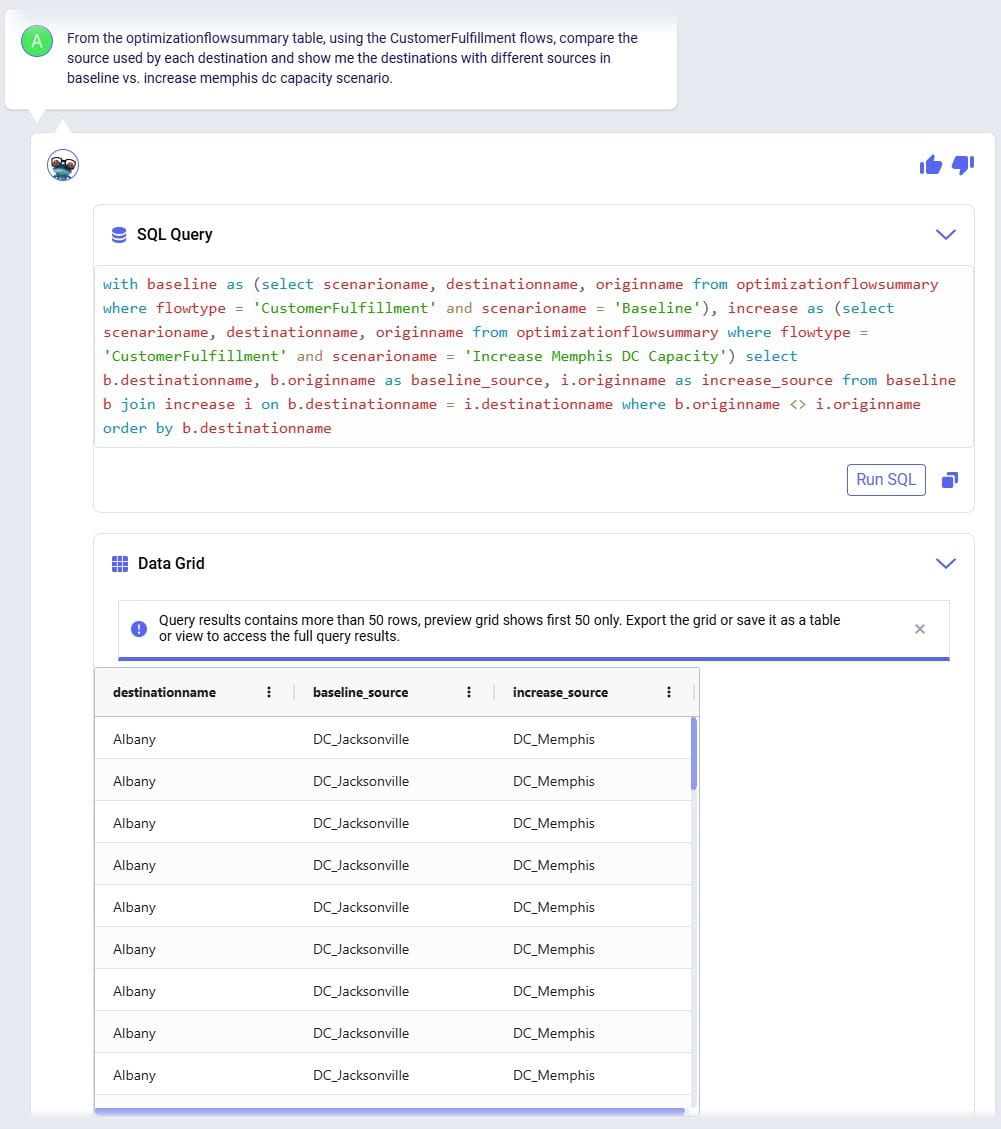

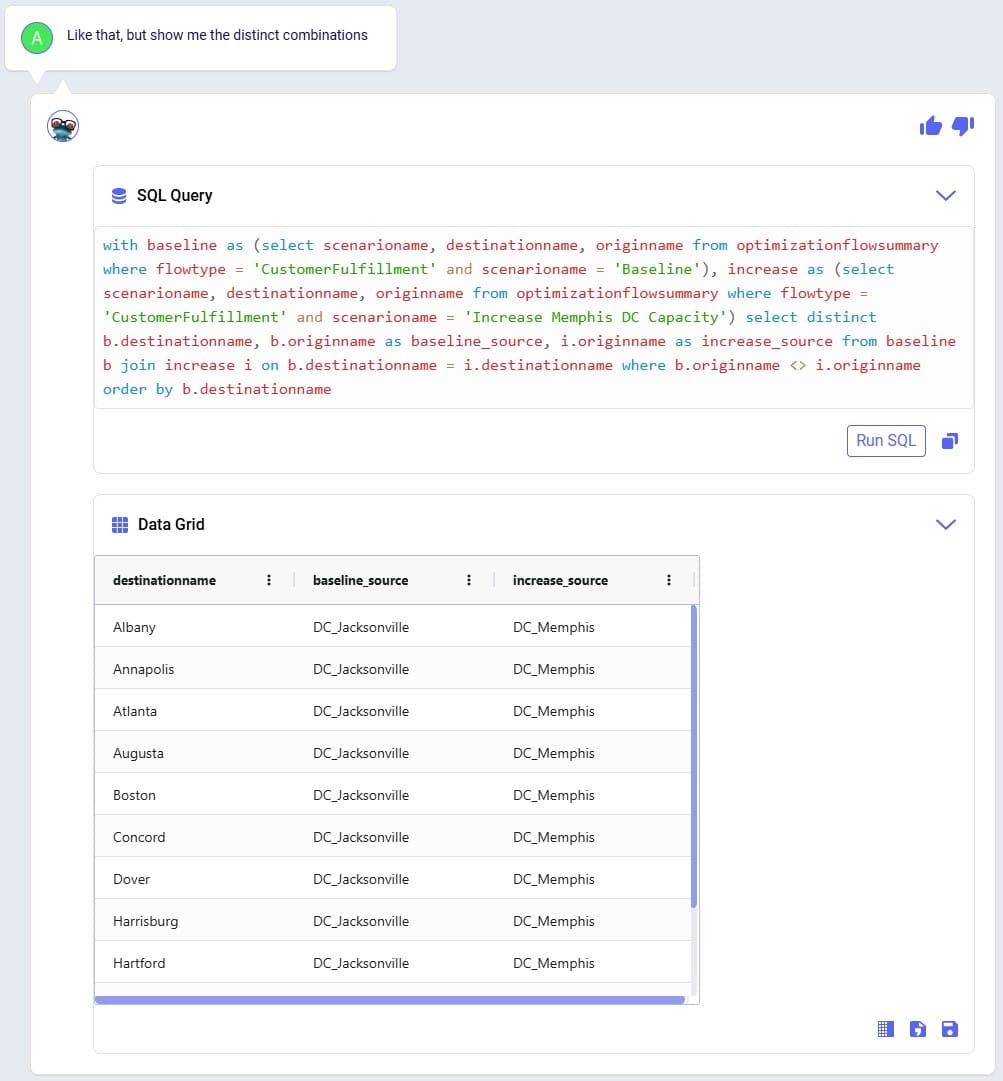

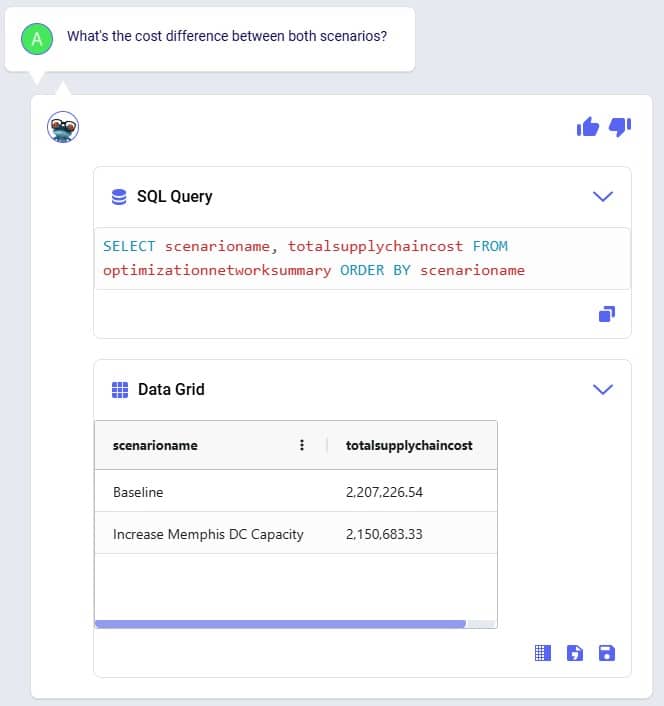

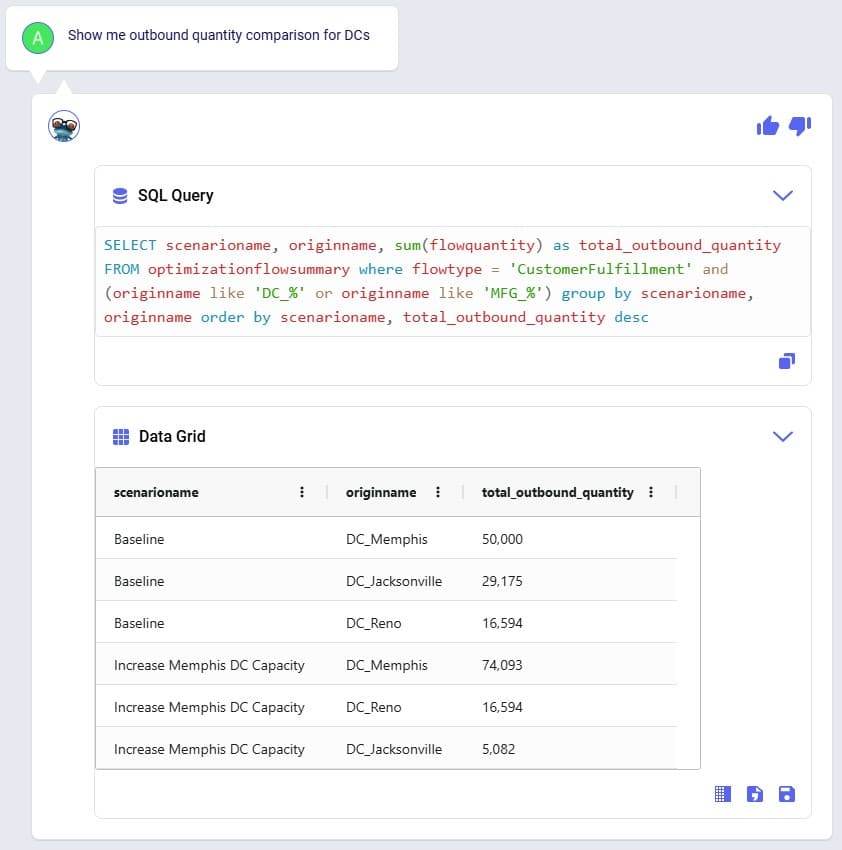

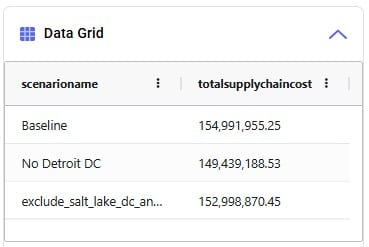

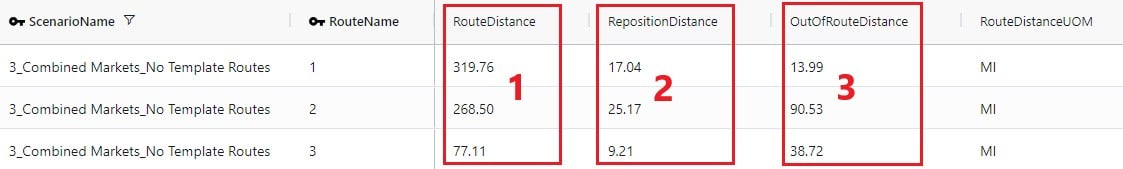

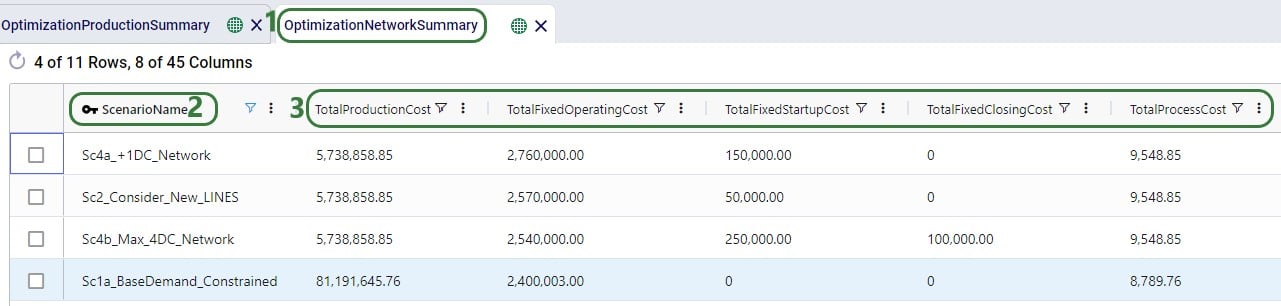

We will first look at the Optimization Network Summary and Optimization Flow Summary output tables, and next at the maps of the last 2 scenarios. We will start with the Optimization Network Summary output table. This screenshot does not show the Production and Fixed Operating Costs as they are the same across all scenarios, and we will focus on the transportation related costs:

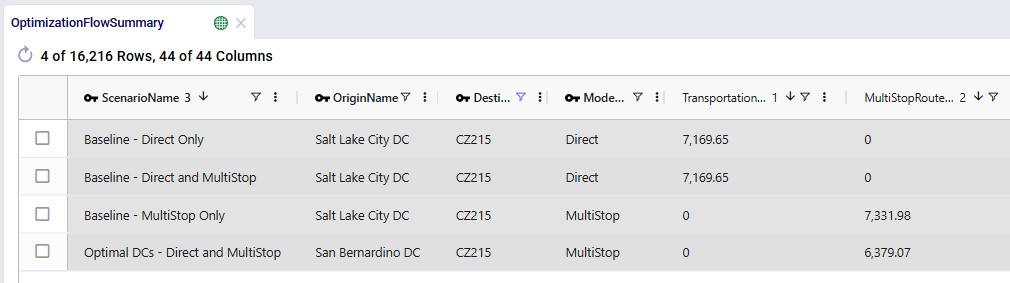

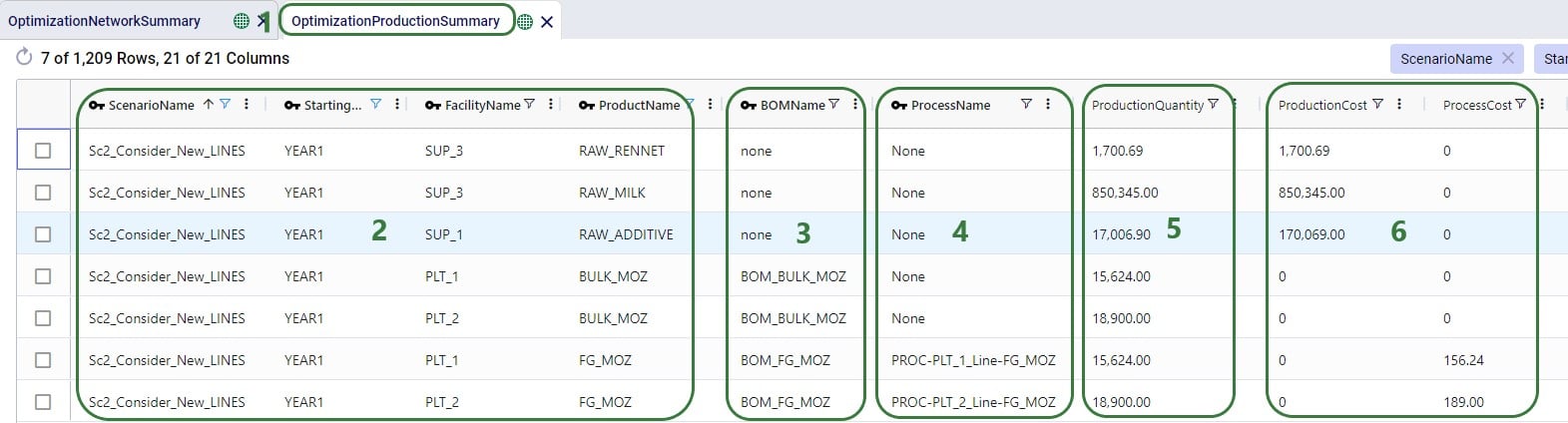

Next, the Optimization Flow Summary output table is filtered for 1 specific customer, CZ215, for which the DC assignment is changed in the final scenario. The table is also filtered for the Rockets product, for which the delivered quantity is the same for these 4 records (1,243 units):

Salt Lake City is the optimal DC to source from for this customer based on just the direct delivery option; the transportation cost is ~7.2k. We see from the "Baseline - MultiStop Only" scenario that using a multi-stop route from Salt Lake City to this customer is a little more expensive (7.3k), so the Direct mode is used in the "Baseline - Direct and MultiStop" scenario. In the "Optimal DCs - Direct and MultiStop" scenario, the customer swaps DC and is served using a multi-stop route from the San Bernardino DC. This results in a transportation cost of 6.4k, a reduction of almost $800 compared to going direct from the Salt Lake City DC.

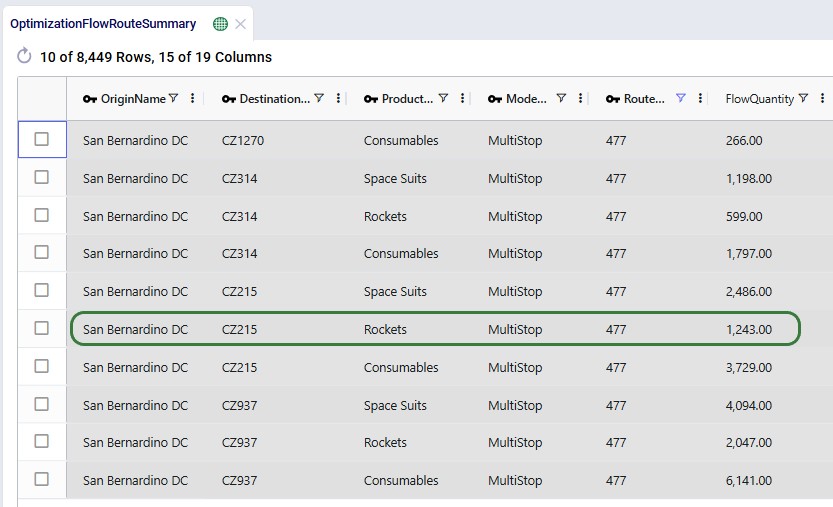

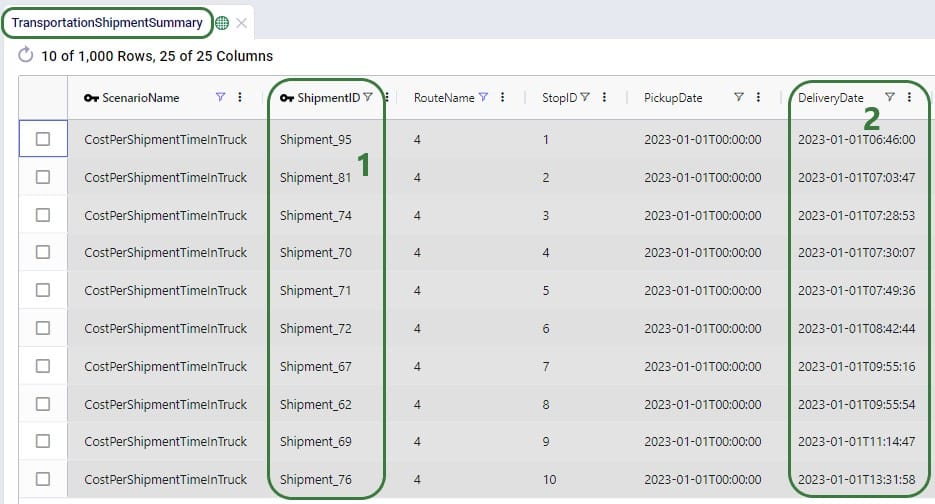

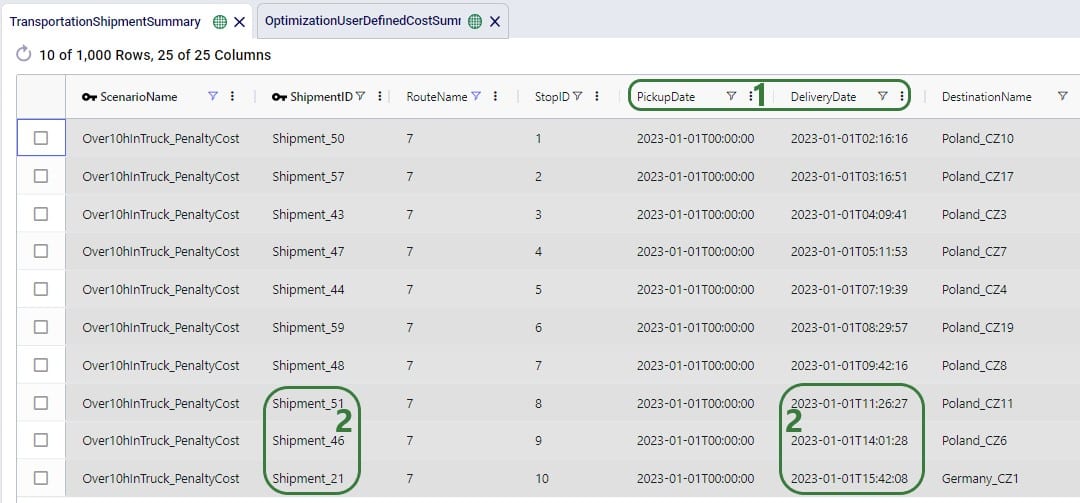

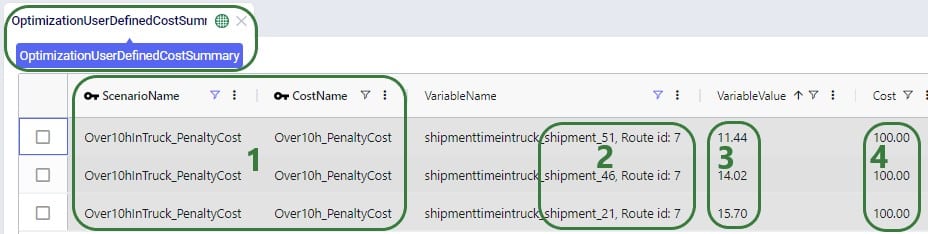

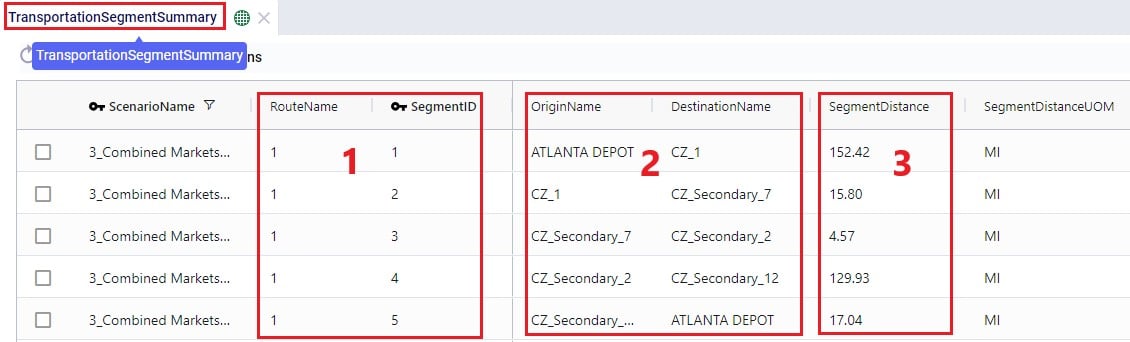

With a newly added output table named Optimization Flow Route Summary, we can see on which route from San Bernardino CZ215 is placed in this "Optimal" scenario:

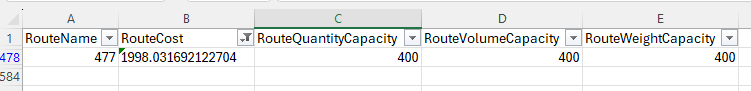

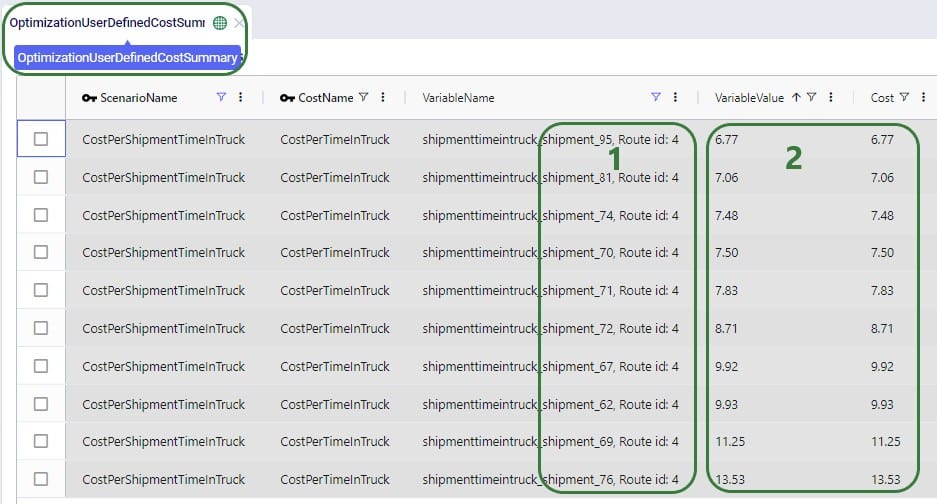

We see that CZ215 is a stop on route 477 from San Bernardino. The screenshot also shows the other stops on this route. Now we will retrace how the multi-stop route cost of $6.4k for this flow (see previous screenshot) is calculated:

When the possible routes are created during the model run, each customer is placed on up to 3 potential routes from its closest 2 DCs. These possible routes generated during preprocessing are listed in the HopperRouteSummary.csv input file which is generated when the "Write Input Solver" model run option (Troubleshooting section) is turned on. We can look up route 477 in it to see the cost for it:
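The idea that candidate routes are only built from a customer's closest 2 DCs can be illustrated with a small sketch. The location names and distances are made up and the function name is hypothetical; how the routes themselves are constructed during preprocessing is not covered here.

```python
def closest_dcs(distances, customer, k=2):
    """Return the k DCs with the shortest distance to the given customer.
    distances: dict mapping (dc, customer) -> distance."""
    candidates = [(dist, dc) for (dc, cust), dist in distances.items()
                  if cust == customer]
    return [dc for _dist, dc in sorted(candidates)[:k]]


# Hypothetical distances (miles) from three DCs to one customer.
distances = {
    ("DC_A", "CZ_X"): 310.0,
    ("DC_B", "CZ_X"): 420.0,
    ("DC_C", "CZ_X"): 1400.0,
}
nearest = closest_dcs(distances, "CZ_X")
print(nearest)
```

In this sketch only DC_A and DC_B would be considered as origins for candidate routes that include CZ_X.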

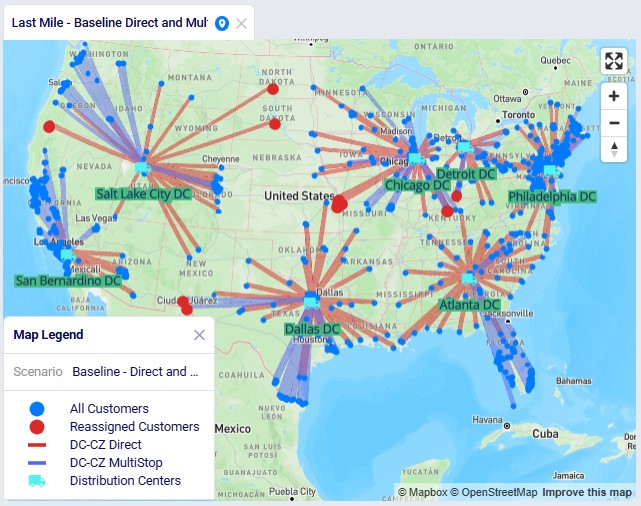

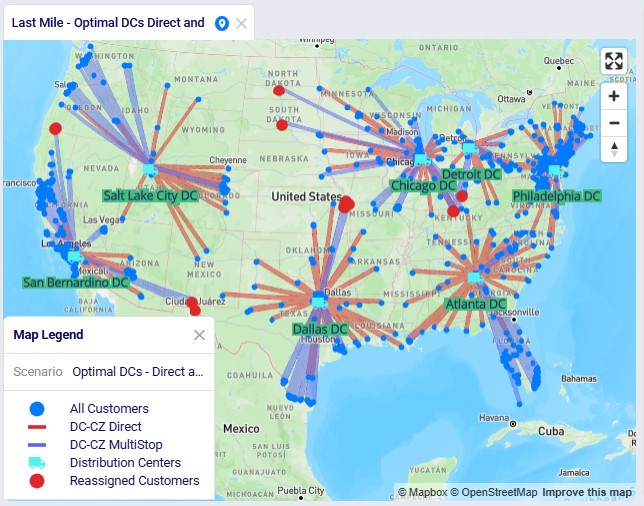

The following 2 maps show the DC-CZ flows for the "Baseline - Direct and MultiStop" and "Optimal DCs - Direct and MultiStop" scenarios. Multi-stop routes are shown with blue lines and direct deliveries with red lines. There are 15 customers that swap DCs and these are shown with larger red dots:

In total 15 CZs are swapping DCs in the "Optimal DCs - Direct and MultiStop" scenario. From west to east on the map:

The last 3 swaps cause some flow lines to be crossing each other on the map. This is due to the algorithm considering a finite number of possible routes.

Note that maps for the first 2 scenarios are also set up in this demo model.

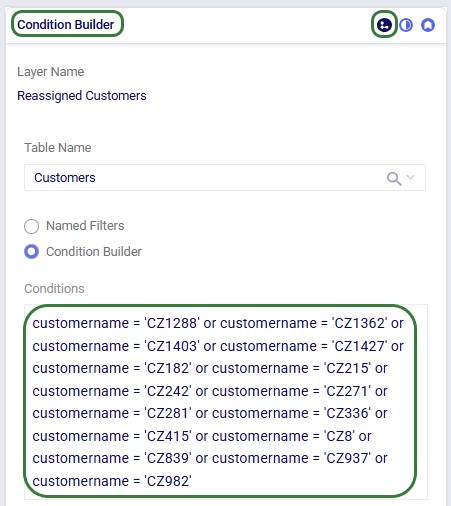

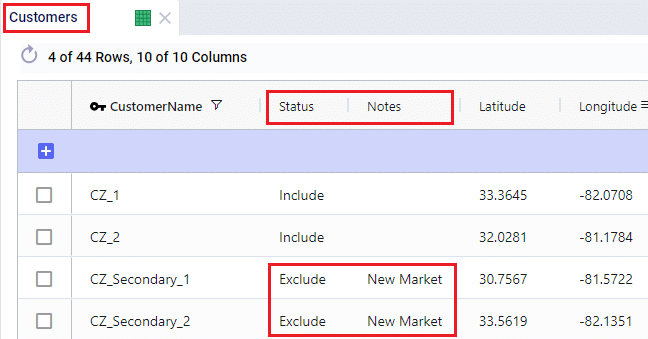

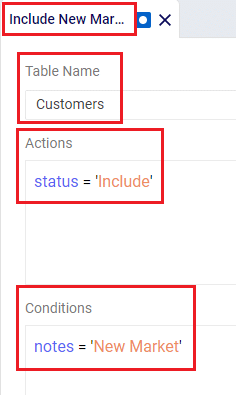

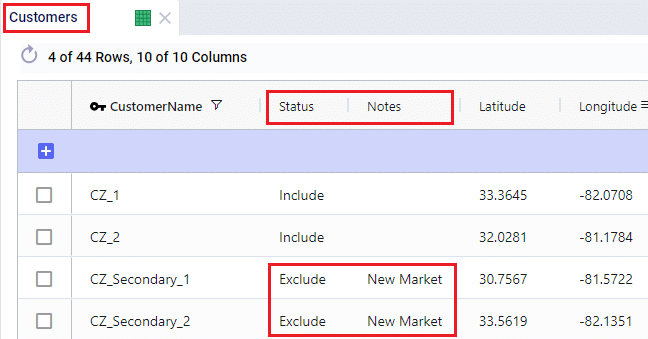

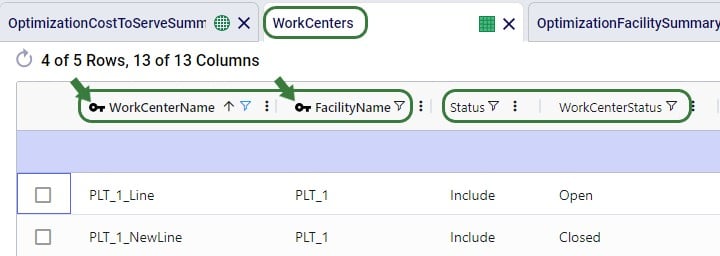

Re-running these scenarios in future with a newer solver and/or after making (small) changes to the model can change the outputs such that the set of reassigned customers which are shown in larger red dots on the map changes. To visualize these on the map in the same way as is done above, the filter in the Condition Builder panel of the "Reassigned Customers" map layer needs to be updated manually. Currently the filter is as follows:

To determine which customers are being reassigned and need to be used in this filter, users can take the following steps (which can be automated using, for example, DataStar):
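As one possible way to automate this comparison, the sketch below finds customers whose DC differs between two scenarios. It assumes the flow output has been exported to a list of records; the field names (`scenario`, `origin`, `destination`) and the second customer are hypothetical, while the scenario names and the CZ215 reassignment come from the example above. It relies on customers being single sourced, so each customer has exactly one DC per scenario.

```python
def dc_by_customer(flow_rows, scenario):
    """Map each customer to the DC serving it in the given scenario."""
    return {r["destination"]: r["origin"]
            for r in flow_rows if r["scenario"] == scenario}


def reassigned_customers(flow_rows, base_scenario, new_scenario):
    """Customers present in both scenarios whose DC is different."""
    base = dc_by_customer(flow_rows, base_scenario)
    new = dc_by_customer(flow_rows, new_scenario)
    return sorted(c for c in base if c in new and base[c] != new[c])


BASE = "Baseline - Direct and MultiStop"
OPTIMAL = "Optimal DCs - Direct and MultiStop"

flow_rows = [
    {"scenario": BASE, "origin": "Salt Lake City", "destination": "CZ215"},
    {"scenario": OPTIMAL, "origin": "San Bernardino", "destination": "CZ215"},
    # Hypothetical customer that keeps its DC.
    {"scenario": BASE, "origin": "Salt Lake City", "destination": "CZ_A"},
    {"scenario": OPTIMAL, "origin": "Salt Lake City", "destination": "CZ_A"},
]
reassigned = reassigned_customers(flow_rows, BASE, OPTIMAL)
print(reassigned)
```

The resulting list of customer names is what would then be entered in the map layer's filter.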

With this new functionality, a transportation optimization (Hopper) run is started immediately after a network optimization (Neo) run has completed, and the user sees this as one run. Underneath, the assignments on the last leg of the network (e.g. customer to DC assignments) that the Neo run determined to be optimal are fixed for the Hopper run, and multi-stop routes are then generated for this last leg during the Hopper run. All existing Hopper functionality is taken into account while determining the optimal multi-stop routes, and complete sets of outputs for both the network optimization and transportation optimization are generated at the end of a Hopper after Neo run. This saves users time because they do not need to go into the model to retrieve and manipulate Neo outputs to be used as input shipments for a subsequent Hopper run.

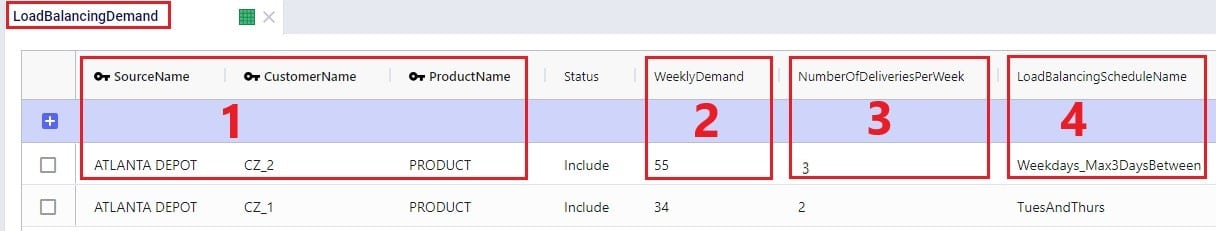

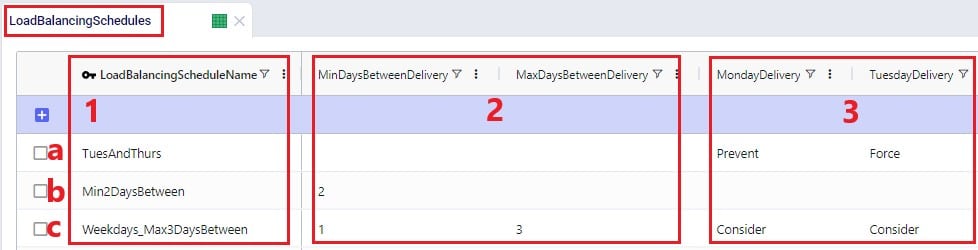

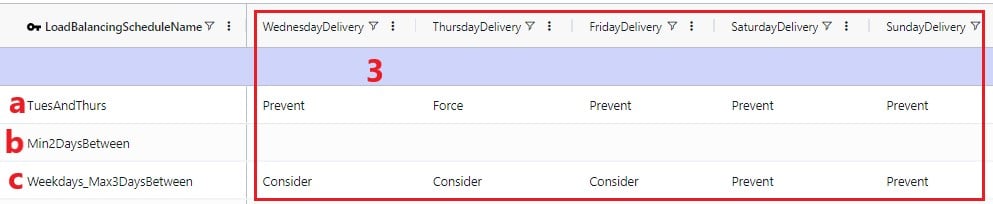

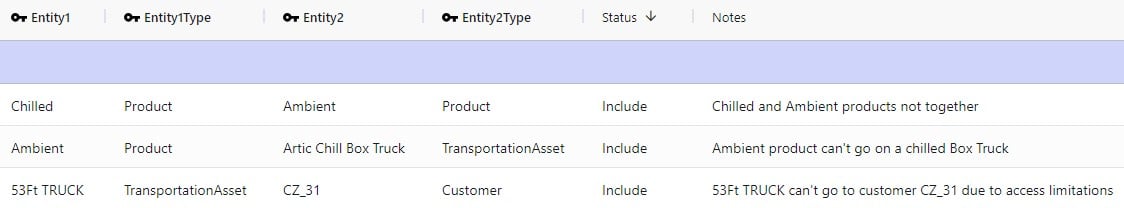

Besides needing the usual input for the network optimization (Neo) engine to be able to run, the Hopper after Neo algorithm needs some additional inputs in order to generate optimal multi-stop routes after the Neo run has completed. These include:

In addition, all populated Hopper fields used on any other Hopper table will be used during the Hopper part of the solve.

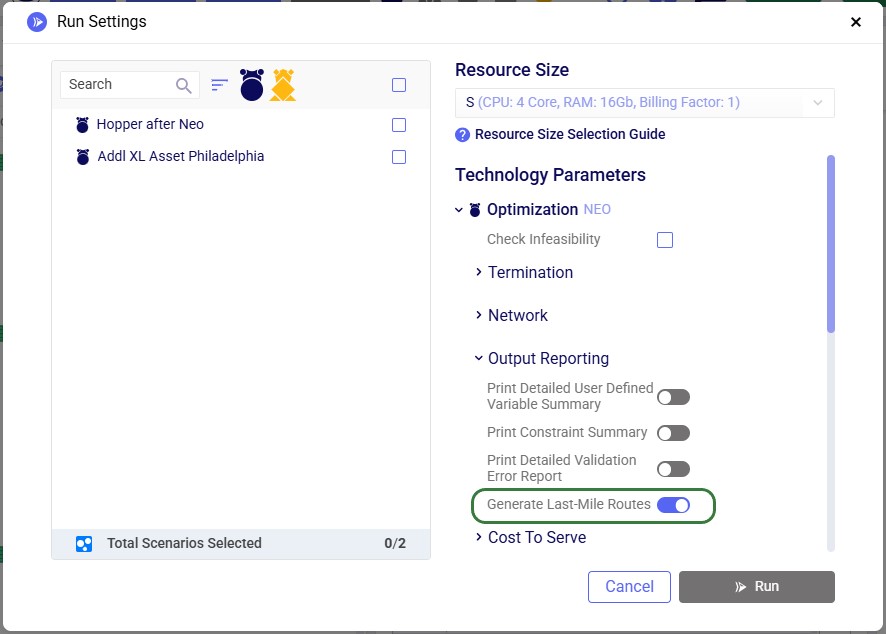

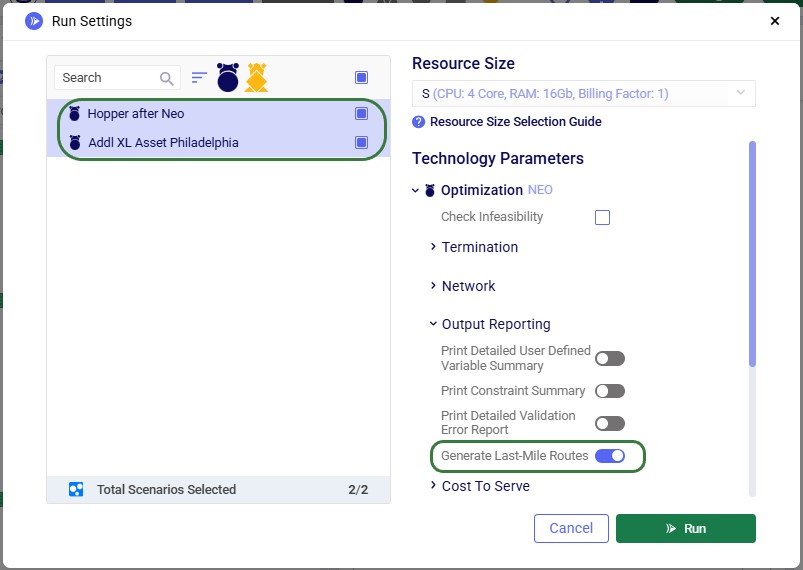

To turn on the Hopper after Neo functionality, enable the "Generate Last-Mile Routes" run setting in the Output Reporting section of the Optimization (NEO) Technology Parameters. These parameters are located on the right-hand side of the Run Settings modal which comes up after clicking on the green Run button at the top right in Cosmic Frog:

Please see the "Model used to showcase Hopper within/after Neo Features" section further above for the details of the starting point for the demo model for Hopper after Neo, which is the same one that was also the starting point for the Hopper within Neo demo model.

We will show how Hopper routes for the last leg of the network, from DCs to customers, can be created immediately after a Neo solve. Through 2 scenarios, we will explore which asset mix will be optimal for the generated multi-stop routes:

After copying the Global Supply Chain Strategy model from the Resource Library, the following changes/additions were made to be able to use Hopper for multi-stop route generation for the DC-CZ leg after the Neo solve. After listing these changes here, we will explain each in more detail through screenshots further below. Users can copy this modified model with the scenarios and outputs from the Resource Library.

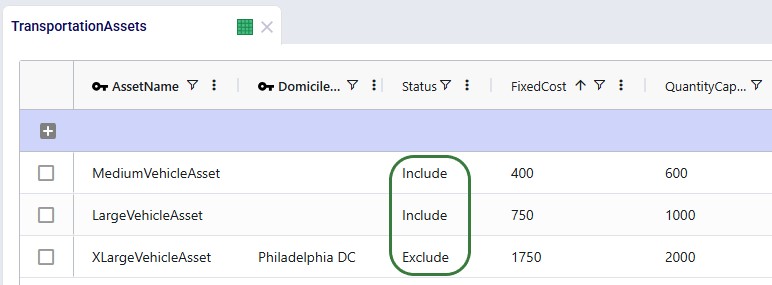

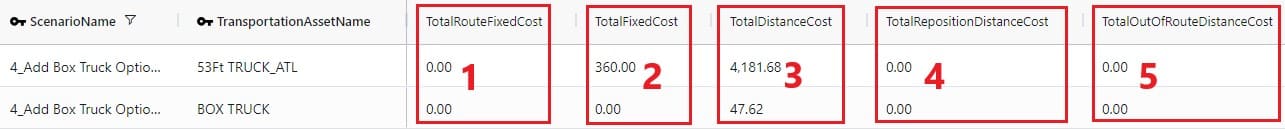

Instead of using the 1 large default vehicle when leaving the Transportation Assets table blank, we decide to use 2 user-defined assets initially: a large and medium one. One additional extra large asset will be added after running the first scenario with the 2 assets; it will be added to resolve unrouted shipments seen in the first scenario. The assets and their settings are shown in the following 2 screenshots:

We see that both the Medium and Large vehicles are available at all locations (Domicile Location is left blank) and their Status is set to "Include", whereas the Extra Large vehicle will only be available at the Philadelphia DC in the second scenario, and its initial Status is set to "Exclude". Fixed costs, quantity capacities, max number of stops per route, and fixed pickup times (in minutes) all increase with asset size. The fixed delivery time is the same for all: 5 minutes per stop.

For each asset, a transportation rate is specified in the Transportation Rates input table. This rate is used in the Transportation Rate Name field in the Transportation Assets table (see screenshot above). The Distance and Time Costs are specified as follows, where the distance-based rate increases with the size of the vehicle to account for higher fuel usage. The Time Cost increases a bit with the size of the vehicle too to reflect the level of experience of the driver needed for larger vehicles:

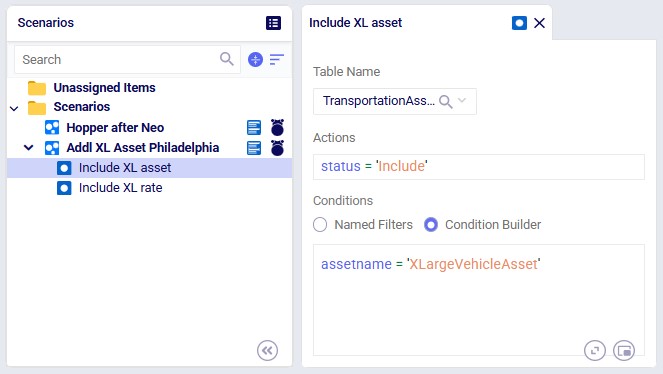

Two scenarios will be run in this model:

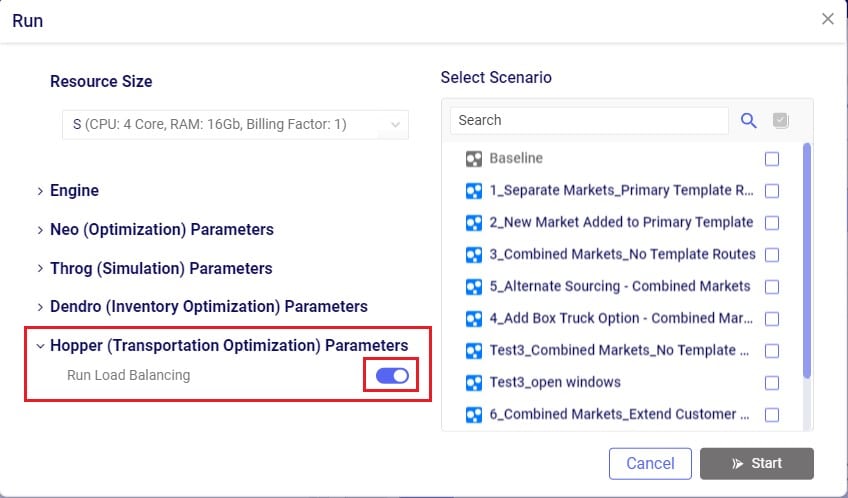

When running the scenarios, the only additional input required to indicate that the Hopper engine should be run immediately after the Neo run, while taking its customer assignment decisions into account, is to turn on the "Generate Last-Mile Routes" option in the Output Reporting section of the Optimization Technology Parameters:

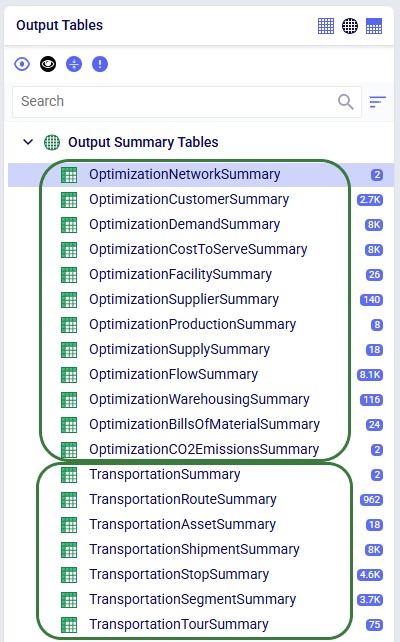

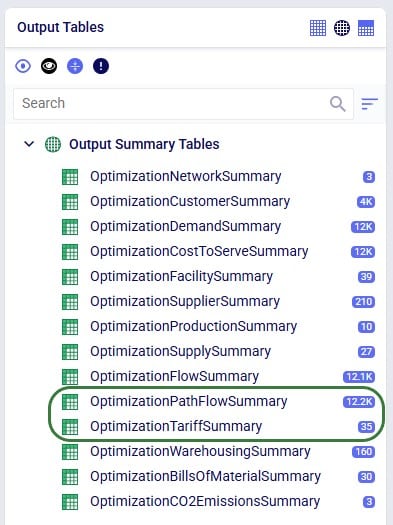

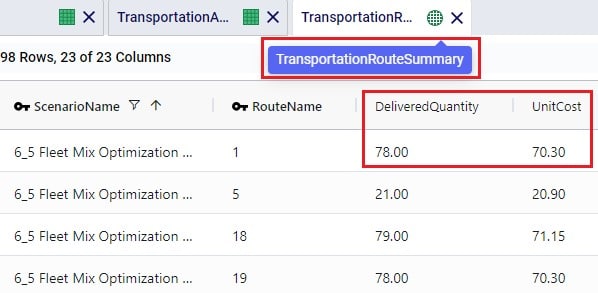

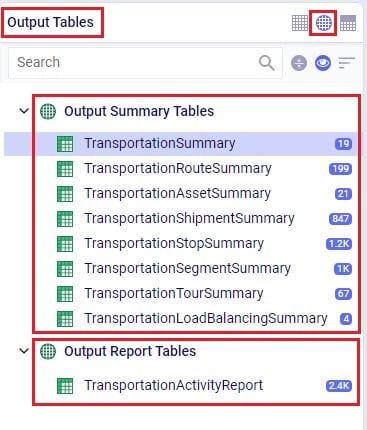

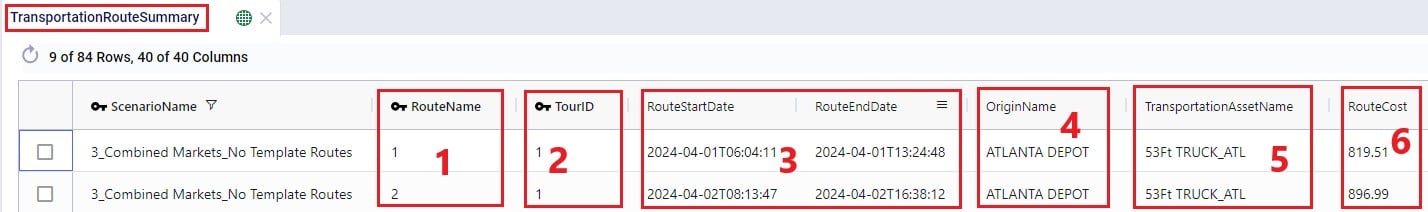

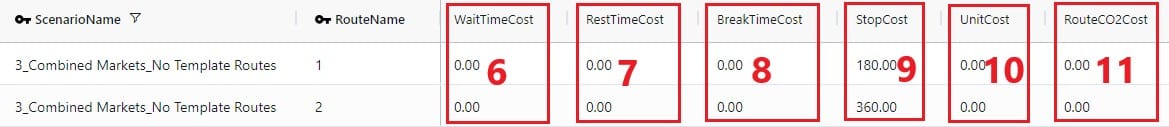

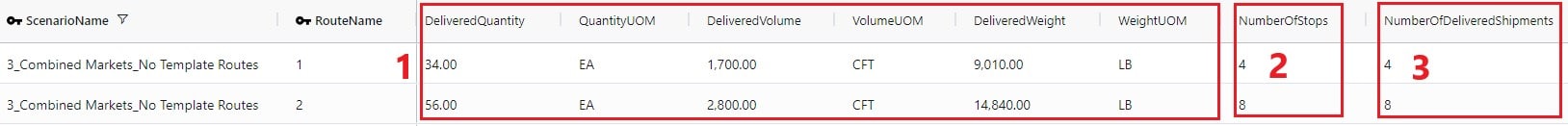

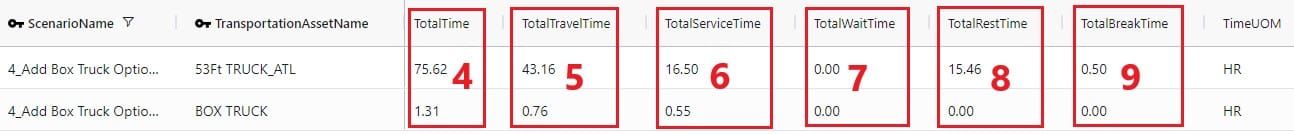

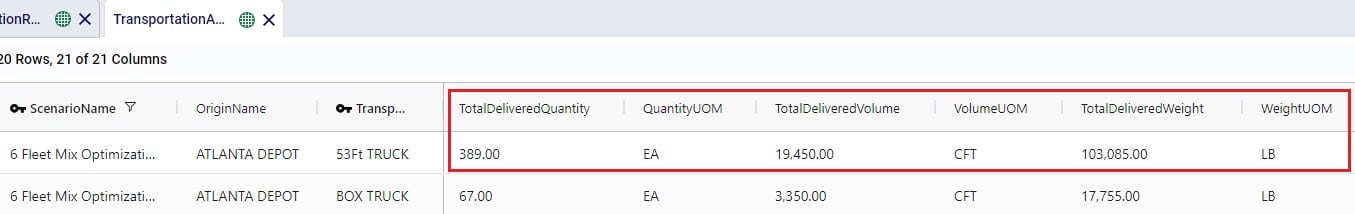

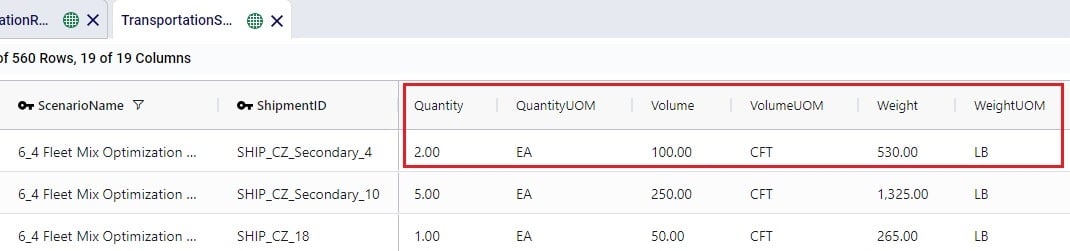

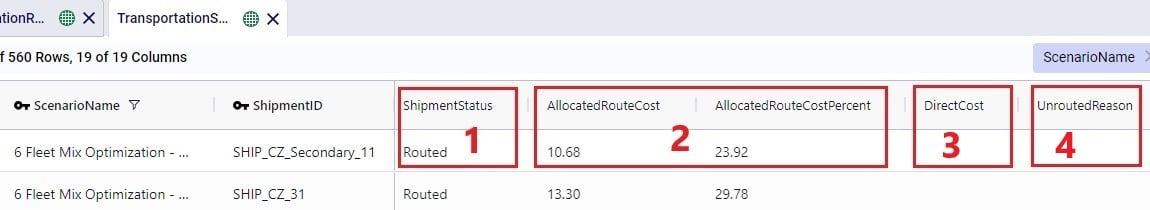

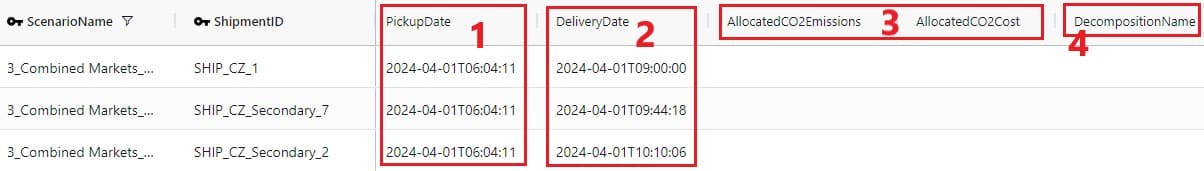

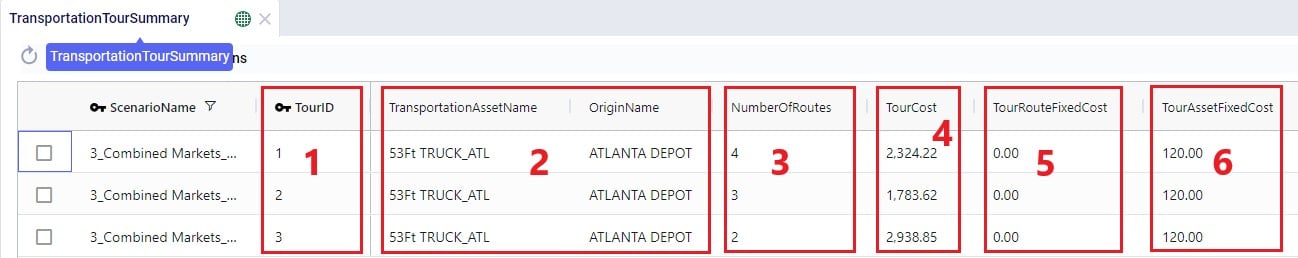

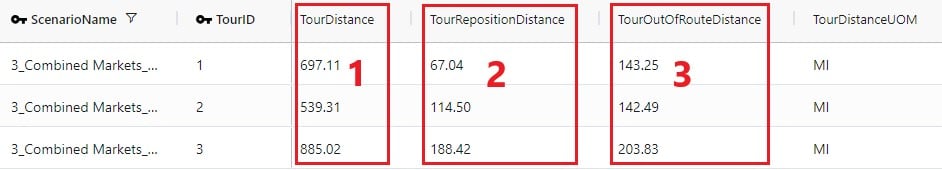

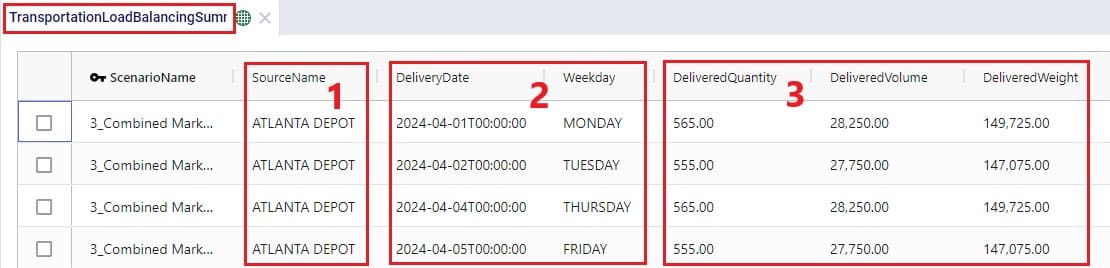

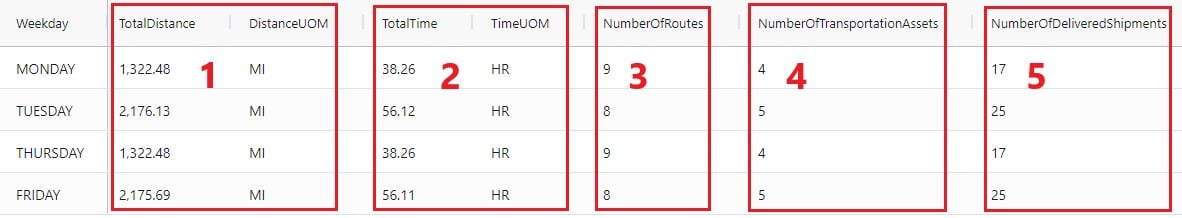

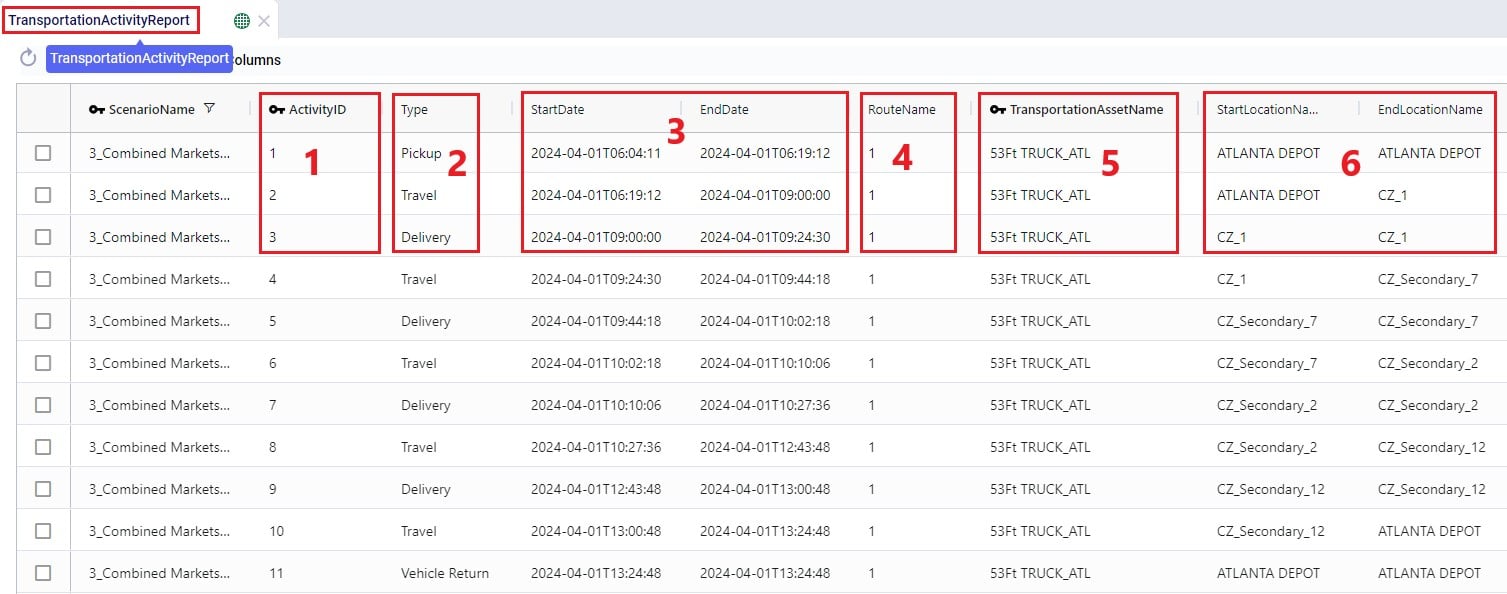

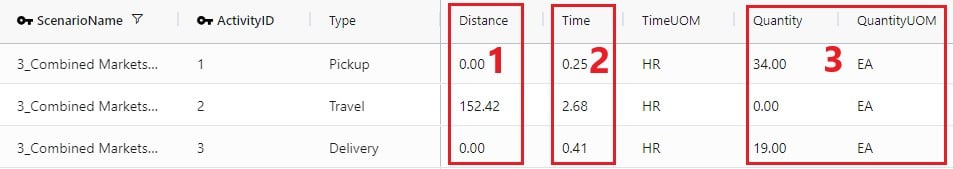

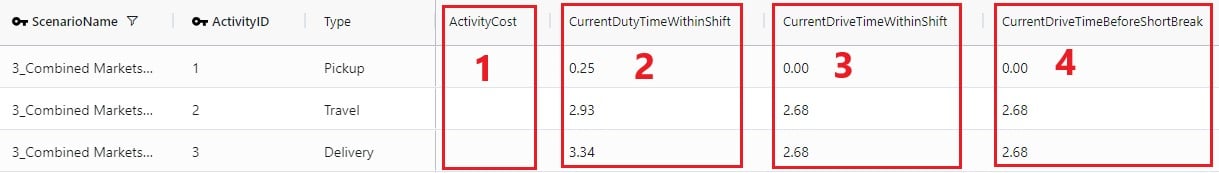

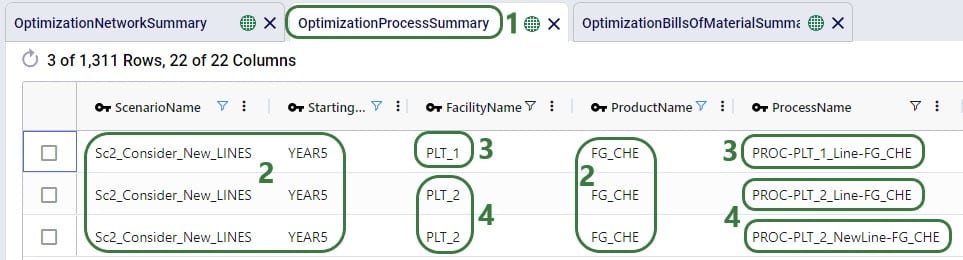

After running both scenarios, we see that full sets of outputs have been generated in the network optimization output tables and in the transportation optimization output tables:

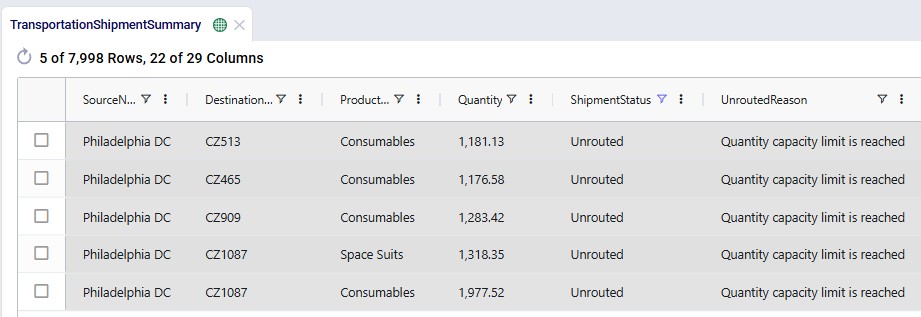

When looking through all outputs, we notice unrouted shipments in the Transportation Shipment Summary output table. Filtering for these, we find they are all shipments coming from the Philadelphia DC, and the Unrouted Reason for all of them is "Quantity capacity limit is reached" (see the next screenshot). The large vehicle asset has a capacity of 1000 units, which is not big enough to fit these shipments. Therefore, the XL asset is added at the Philadelphia DC in the second scenario.
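The capacity check behind this unrouted reason can be sketched in a few lines. The sketch assumes a shipment cannot be split across vehicles, so any shipment larger than the biggest available asset cannot be routed; the shipment records and field names are hypothetical, and only the 1000-unit capacity of the large asset comes from the model described here.

```python
LARGE_ASSET_CAPACITY = 1000  # units, capacity of the large vehicle


def shipments_exceeding_capacity(shipments, capacity):
    """Return shipments whose quantity does not fit on a single vehicle."""
    return [s for s in shipments if s["quantity"] > capacity]


# Hypothetical shipments leaving one DC.
shipments = [
    {"id": "S1", "quantity": 800},
    {"id": "S2", "quantity": 1200},
    {"id": "S3", "quantity": 1000},
]
too_big = shipments_exceeding_capacity(shipments, LARGE_ASSET_CAPACITY)
print([s["id"] for s in too_big])
```

A shipment that exactly matches the capacity still fits; only strictly larger shipments are flagged, which is why a bigger asset is needed to route them.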

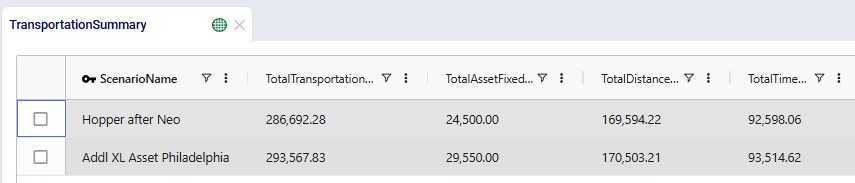

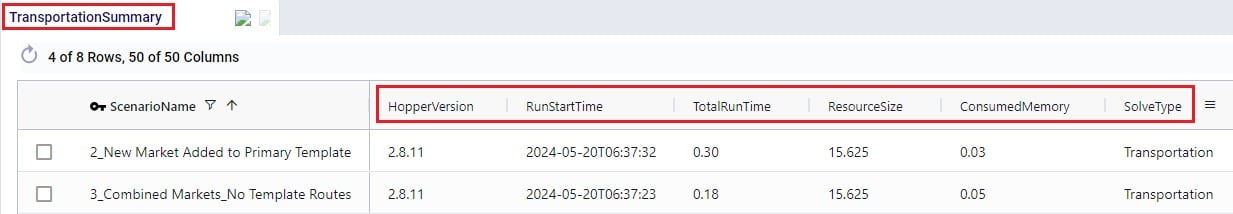

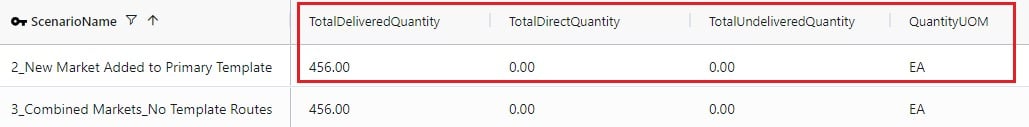

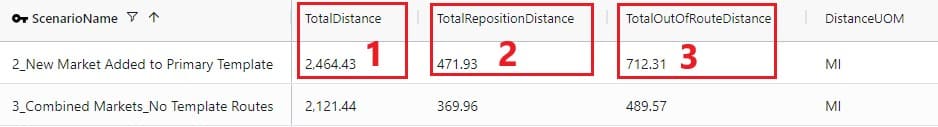

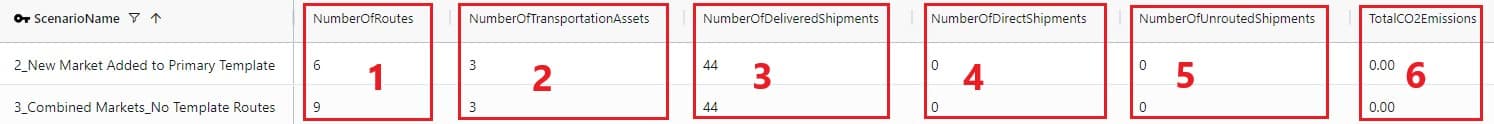

After running the second scenario, there are no more unrouted shipments and we take a look at the Transportation Summary output table which summarizes the Hopper part of the run at the scenario level. We can conclude from here that also delivering these 5 large shipments adds about $6.9k per week to the total transportation cost:

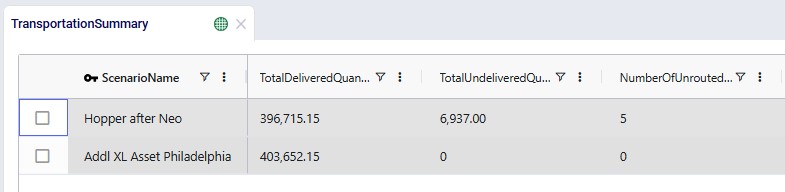

The following screenshot shows fields further to the right in the Transportation Summary output table, which confirm there are no more unrouted shipments in the second scenario, and therefore the total undelivered quantity is also 0 in this scenario:

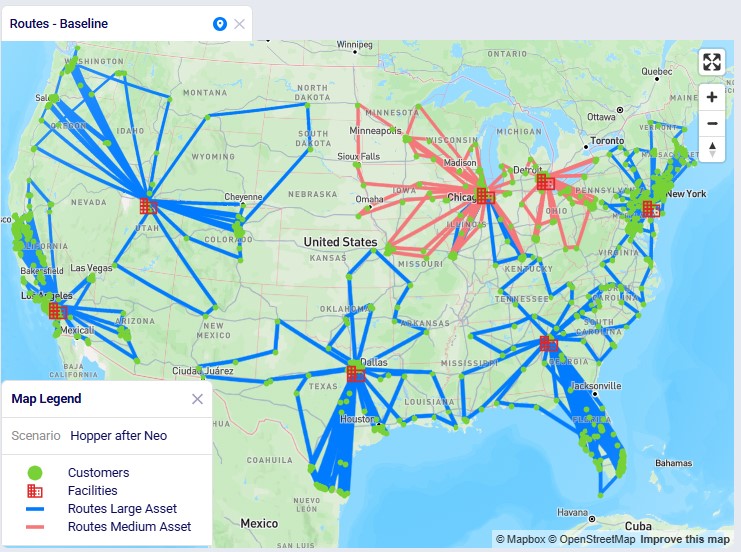

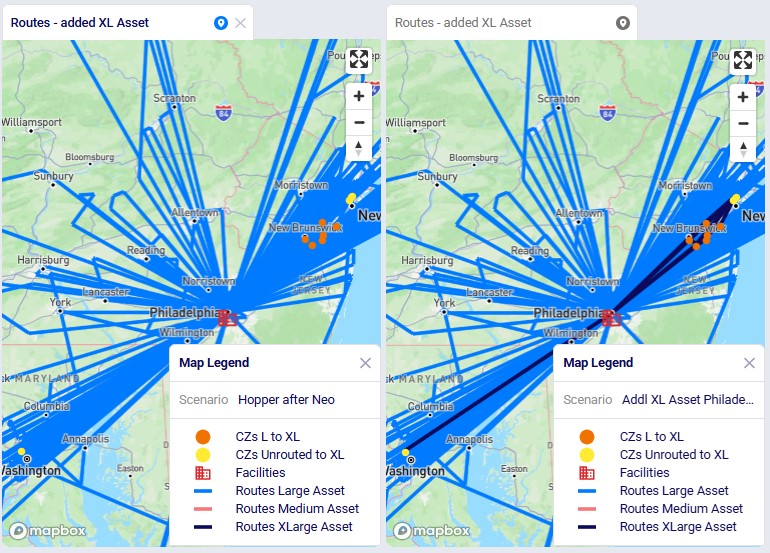

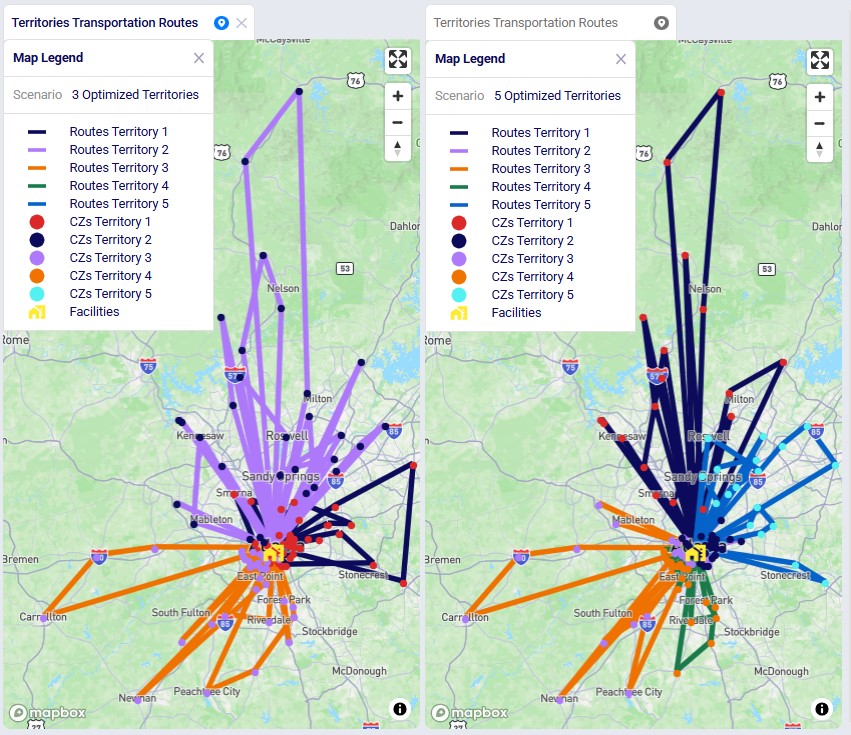

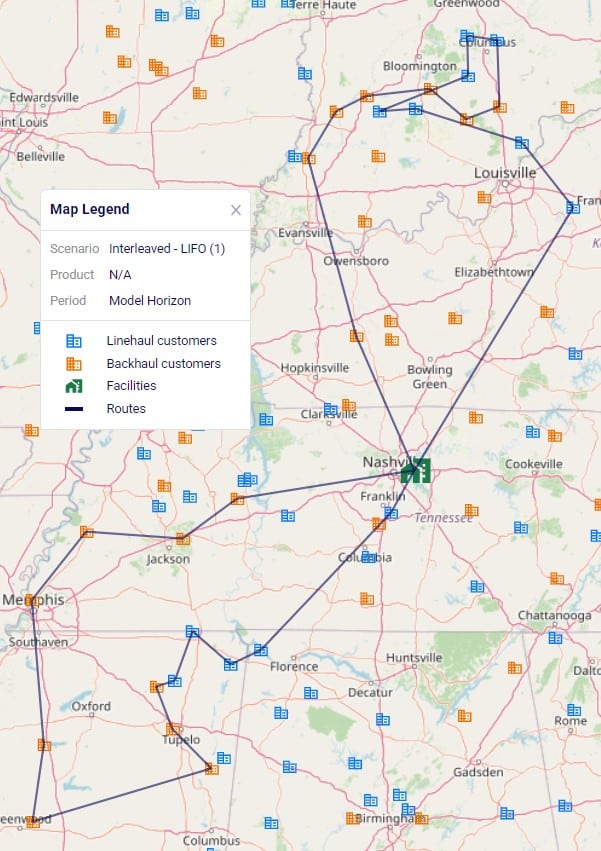

Looking on a map, we can visualize the routes. In this example, they are colored based on which vehicle is used on the route. This is the map for the "Hopper after Neo" scenario. The map is called "Routes - Baseline" and is pre-configured when copying this model from the Resource Library:

We see that the medium asset is used for most routes from the Chicago DC and all routes from the Detroit DC; the large asset is used on all other routes.

Finally, in the next screenshot we compare the 2 scenarios side-by-side, zoomed in on the Philadelphia DC and its routes. The darker routes are those that use the XL asset. Only the 10 customers which are served by the XL asset in the second scenario are shown on the map. Six of them were served by the large asset in the "Hopper after Neo" scenario; these are shown in orange on the map. The other 4 which are colored yellow had unrouted shipments for 1 or more products in the "Hopper after Neo" scenario; three of these are clustered together northeast of Philadelphia, while one is by itself southwest of Philadelphia:

Please note that the "CZs L to XL" and "CZs Unrouted to XL" map layers contain filters on the Condition Builder pane that have been manually added after analyzing and comparing the outputs of the 2 scenarios to determine which customers to include in these layers. If the scenarios are re-run in future with a newer solver and/or after making (small) changes to the model, the outputs may change, including which customers switch from a large to extra-large vehicle and/or those going from being unrouted to using the extra-large vehicle. In that case the current condition builder filters will need to be updated to visualize those changes on the map. See the notes at the end of the "Hopper within Neo-Outputs" section which apply here in a similar way.

To determine which customers initially have unrouted shipments and are served by the extra-large vehicle in the second scenario:

To determine which customers are served by a different asset in the second scenario as compared to the first:
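Both comparisons can be sketched with simple set operations. This is only an illustration under assumed inputs: the function name, the asset label `"XL"`, the customer names, and the data layout (a mapping from customer to the set of assets serving it, plus a set of customers with unrouted shipments in the first scenario) are all hypothetical.

```python
def customers_moving_to_xl(first_assets, second_assets, unrouted_first, xl_name="XL"):
    """Split the customers served by the XL asset in the second scenario into
    those that had unrouted shipments before and those that switched assets."""
    served_by_xl = {c for c, assets in second_assets.items() if xl_name in assets}
    unrouted_to_xl = sorted(served_by_xl & unrouted_first)
    switched_to_xl = sorted(
        c for c in served_by_xl - unrouted_first
        if xl_name not in first_assets.get(c, set())
    )
    return unrouted_to_xl, switched_to_xl


# Hypothetical outputs of the two scenarios.
first_assets = {"CZ_A": {"Large"}, "CZ_B": {"Large"}, "CZ_C": {"Large"}}
unrouted_first = {"CZ_B"}  # had unrouted shipments for 1 or more products
second_assets = {"CZ_A": {"XL"}, "CZ_B": {"XL"}, "CZ_C": {"Large"}}

unrouted_to_xl, switched_to_xl = customers_moving_to_xl(
    first_assets, second_assets, unrouted_first
)
print(unrouted_to_xl, switched_to_xl)
```

The two lists correspond to the customers to include in the "CZs Unrouted to XL" and "CZs L to XL" map layer filters, respectively.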

We hope this provides you with the guidance needed to start using the Hopper with Neo features yourself. In case of any questions, please do not hesitate to reach out to Optilogic's support team at support@optilogic.com.

Territory Planning is a new capability in the Transportation Optimization (Hopper) engine that automatically clusters customers into geographic regions - territories - and restricts routes and drivers to operate within those territory boundaries. This reduces operational complexity, improves route consistency, and enables delivery-promise logic for end consumers.

Territory Planning is available today in Cosmic Frog for all users and is powered by an enhanced high-precision Genetic Algorithm. Please note that all Hopper models, whether using the new Territory Planning features or not, can now be run using this high-precision mode.

This "How-To Tutorial: Territory Planning" video explains the new feature:

In this documentation we will first cover the benefits of Territory Planning, next explain how it works in Cosmic Frog, and then take you through an example model showcasing the new feature.

Traditional routing optimization focuses on building the most cost-efficient set of routes. In many real-world operations, however, drivers do not cross territories. A driver consistently serving the same neighborhoods knows the roads, customer patterns, and service requirements.

Key benefits include:

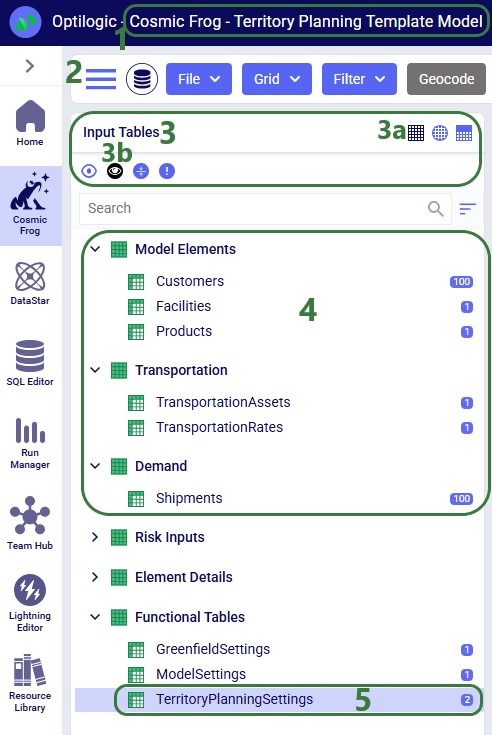

With the Territory Planning feature one new Hopper input table and two new Hopper output tables are introduced.

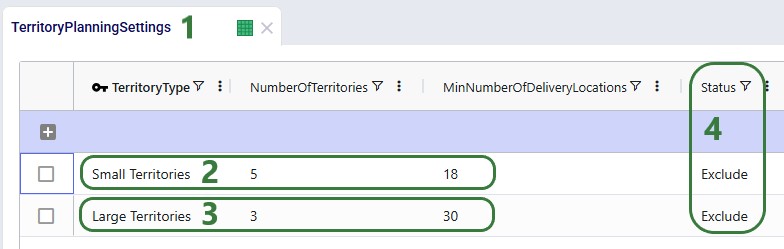

Territory Planning requires one new input table, Territory Planning Settings, while supporting all existing Hopper tables. This table defines the characteristics and constraints of the territories to be created during the Hopper solve, and can be found in the Functional Tables section:

1. Territory Type: A descriptive name for the territory configuration (e.g., "Large Territories", "Balanced Small Territories").

The universal compatibility with all other Hopper tables ensures you can add territory planning to any existing Hopper model without needing to restructure your data.

Territory Planning generates two new output tables, the Transportation Territory Planning Summary and the Transportation Territory Assignment Summary, in addition to all standard Hopper outputs. The Transportation Territory Planning Summary table provides one record per territory with aggregate KPIs:

This table is useful for:

The Transportation Territory Assignment Summary table shows the detailed assignment of each customer to a territory:

This table enables:

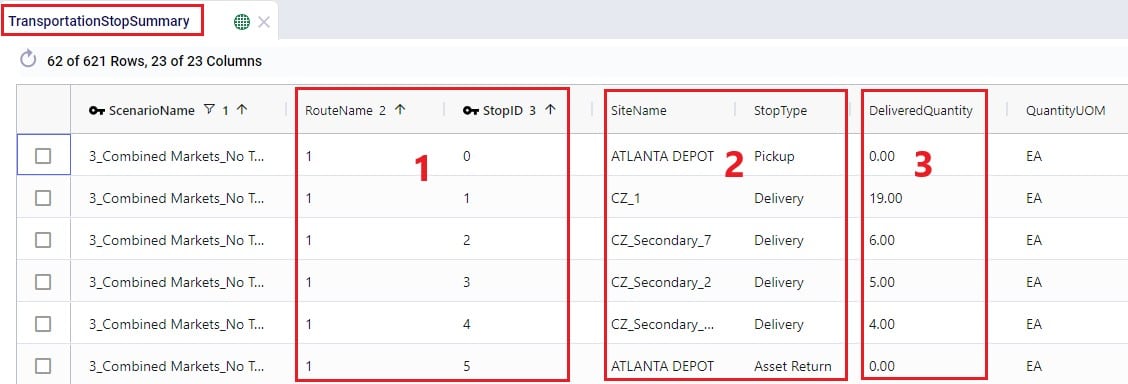

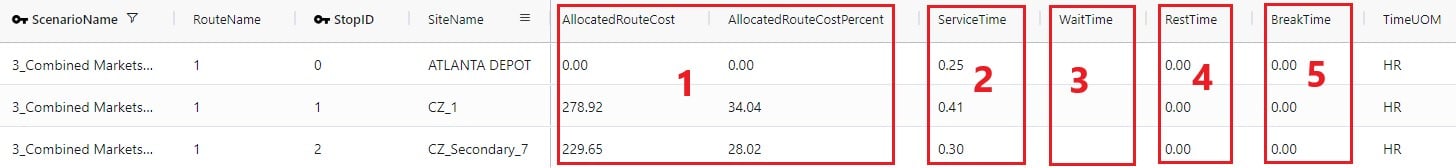

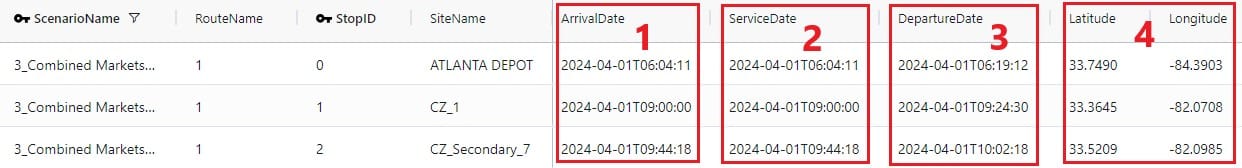

Besides the 2 new output tables, the following existing transportation output tables have 2 new fields, Territory Name and Territory Type, added to them: Routes Map Layer, Transportation Asset Summary, Transportation Segment Summary, and Transportation Stop Summary. This makes it possible to filter these tables on territories, for example to quickly see which assets are used in which territories.

Territory Planning uses Hopper's advanced genetic algorithm to simultaneously optimize multiple objectives:

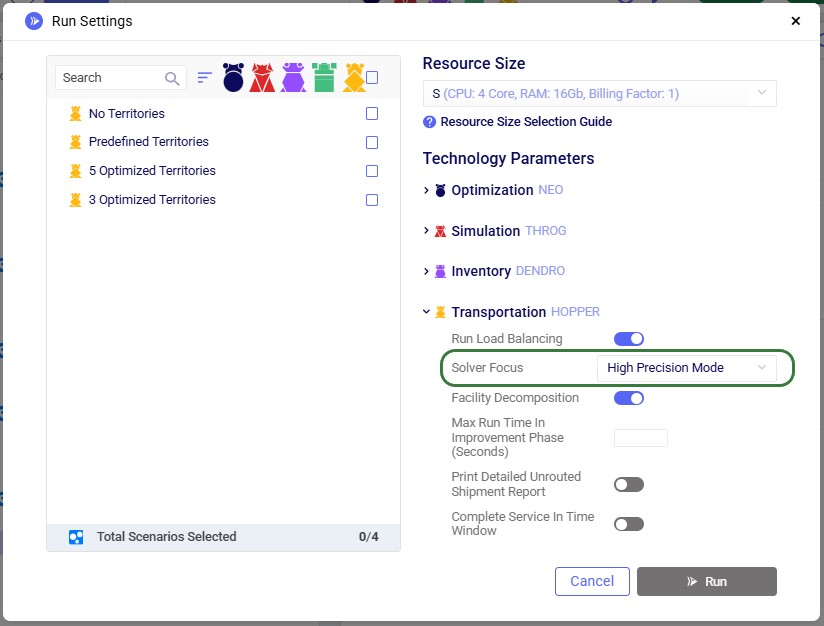

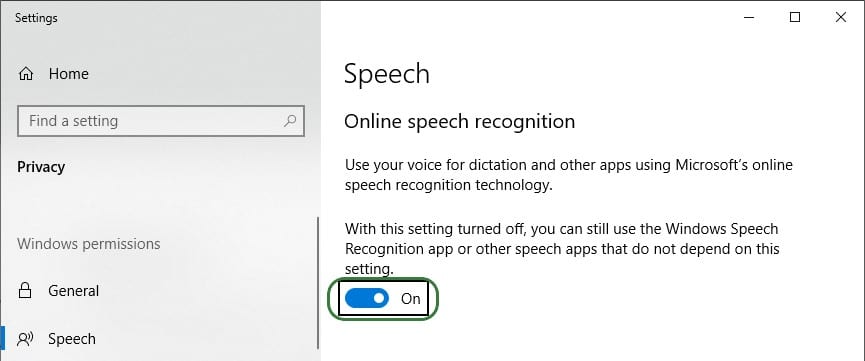

The genetic algorithm is available in Hopper's High Precision solver mode and powers all Hopper optimizations, not just territory planning. To turn on high precision mode, expand the Transportation (Hopper) section on the Run Settings modal which comes up after clicking on the green Run button at the right top in Cosmic Frog. Then select "High Precision mode" from the Solver Focus drop-down list:

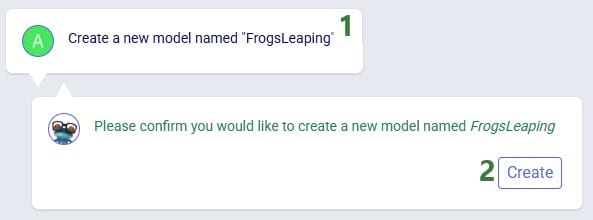

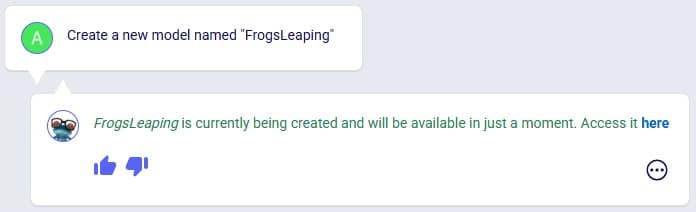

A model showcasing the Territory Planning capabilities can be found here on Optilogic's Resource Library. We will cover its features, scenario configuration, and outputs here. You can copy this model to your own Optilogic account by selecting the resource and then using the blue Copy to Account button on the right-hand side (see this "How to use the Resource Library" help center article for more details).

First, we will look at the input tables of this model:

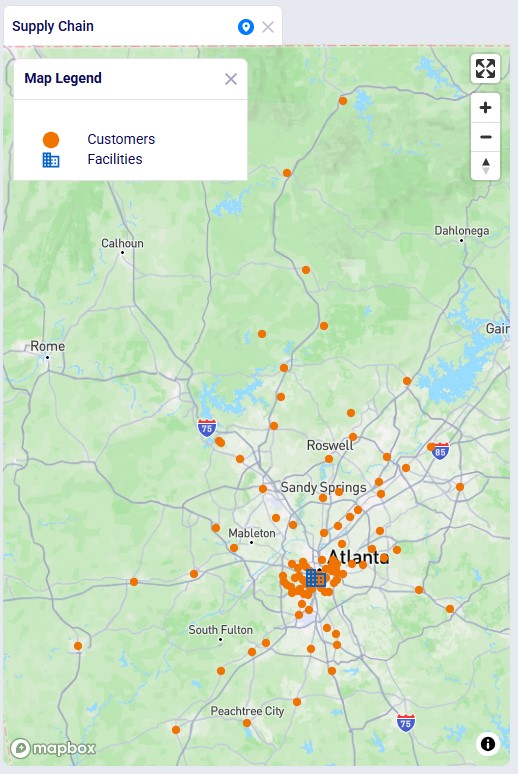

Let us also have a look at the customer and distribution center locations on the map before delving into the scenario details:

In this model, we will explore the following 4 scenarios:

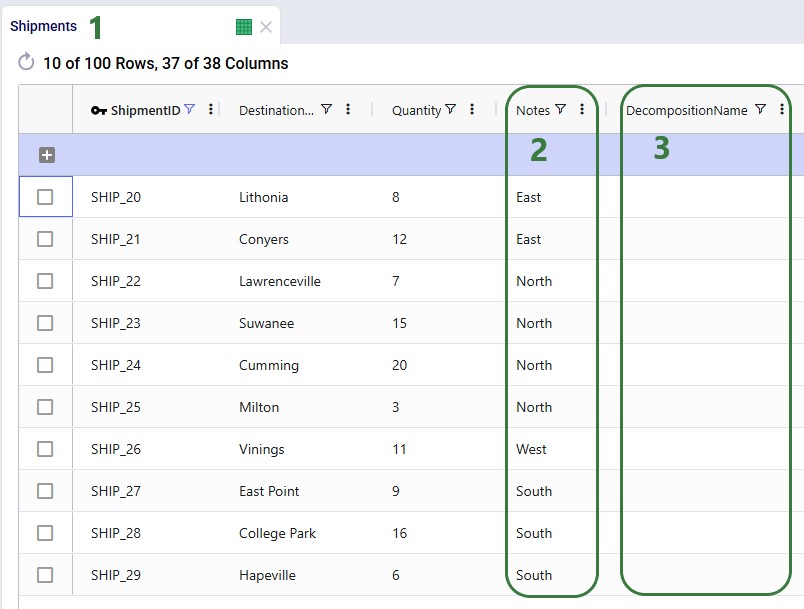

To set up the predefined territories scenario, the Notes and Decomposition Name fields on the Shipments table are used:

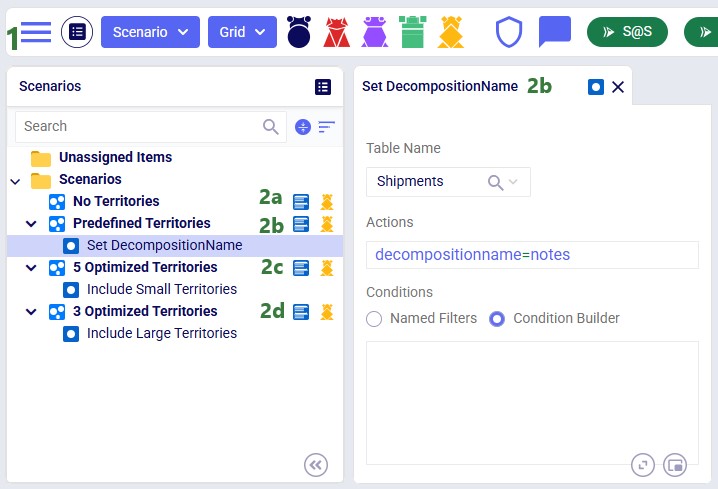

The configuration of the scenarios then looks as follows:

These scenarios are run with the Solver Focus Hopper model run option set to High Precision Mode as explained above.

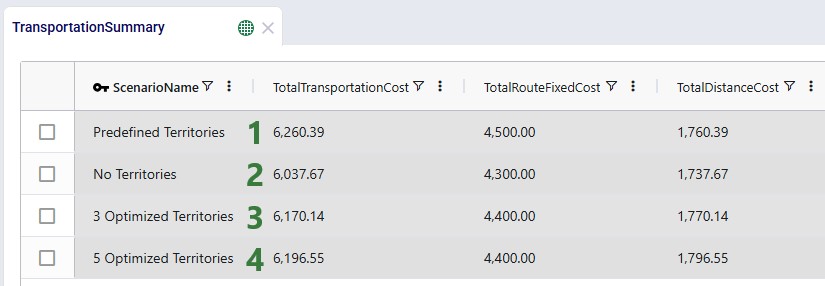

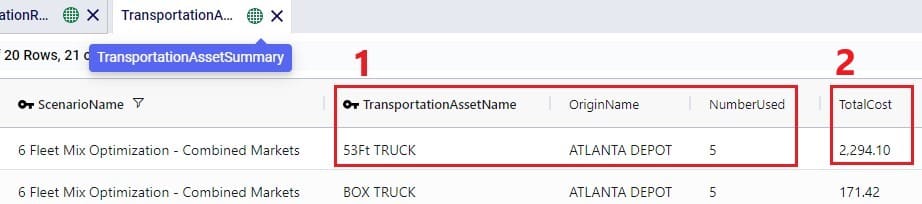

Now, we will have a look at the outputs of these 4 scenarios and compare them. First, we will look at several output tables, including the 2 new ones. We will start with the Transportation Summary output table, which summarizes costs and other KPIs at the scenario level:

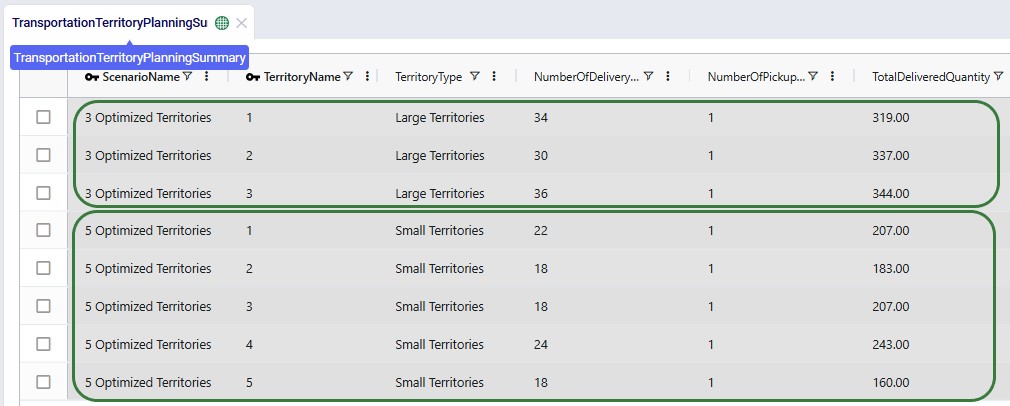

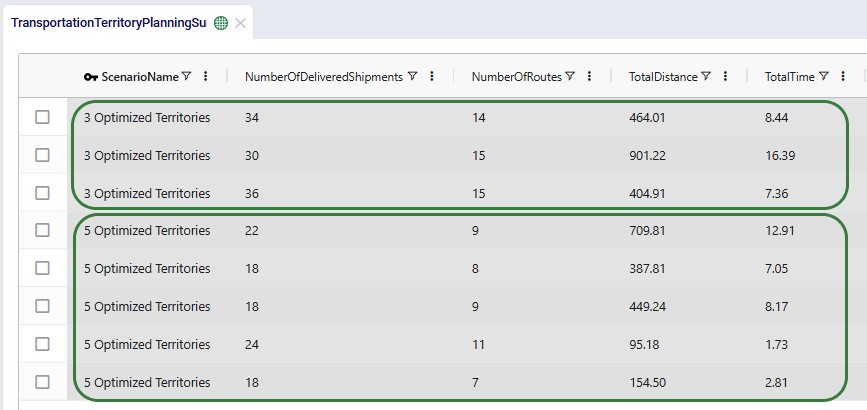

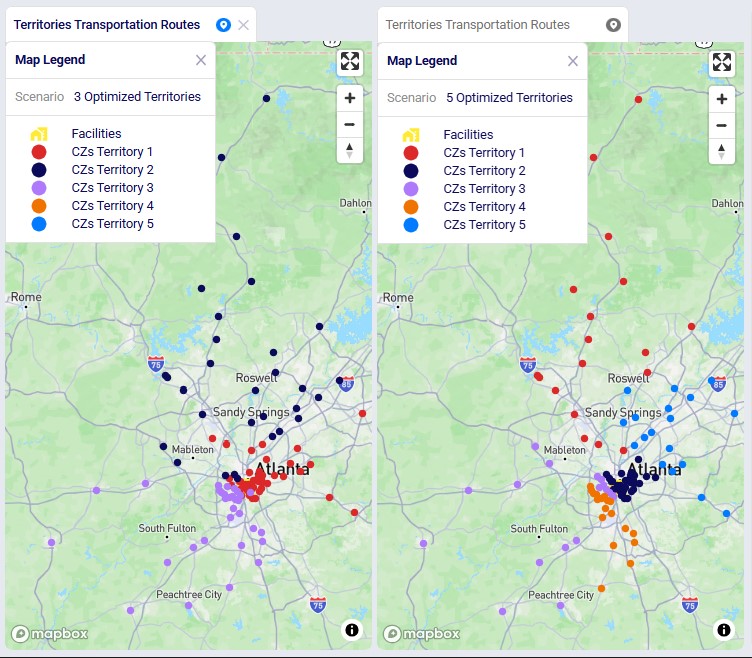

The next 2 screenshots are of the new Transportation Territory Planning Summary output table:

The top 3 records show the summarized outputs for each territory in the 3 Optimized Territories scenario, including the territory name and type, the number of delivery locations, the number of pickup locations, and the total delivered quantity. The bottom 5 records show the same outputs for the 5 territories of the 5 Optimized Territories scenario.

Scrolling right in this table shows additional outputs for each territory, including the number of delivered shipments, number of routes, total distance, and total time:

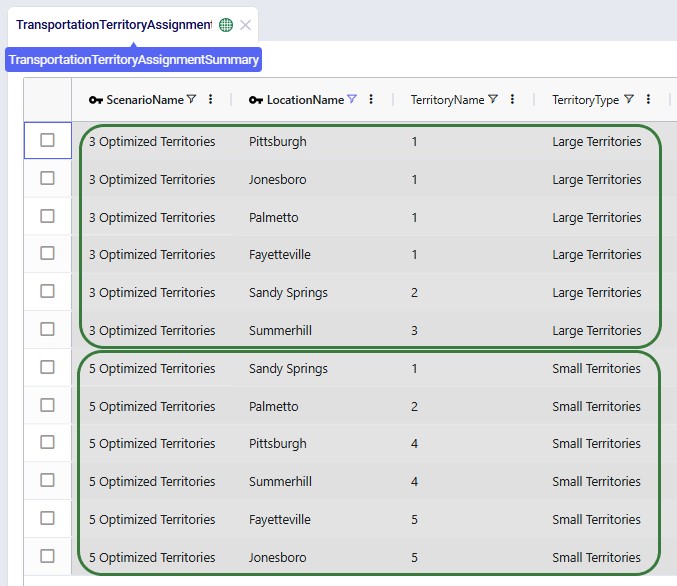

The other new output table, the Transportation Territory Assignment Summary table, contains the details of the assignments of customer locations to territories. The following screenshot shows the assignments of 6 customers in both the 3 Optimized Territories and 5 Optimized Territories scenarios:

Please note that this table also includes the latitudes and longitudes of all customer locations (not shown in the screenshot), to allow easy visualization on a map.

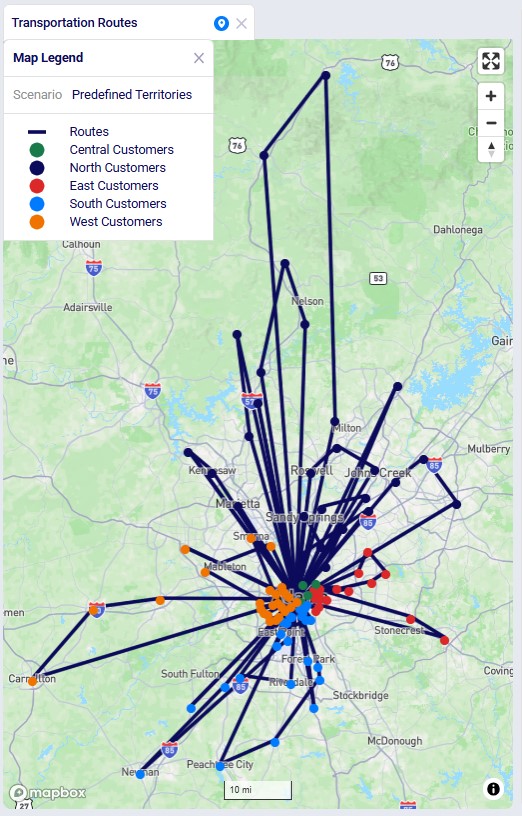

Next, we will have a look at the locations and routes on maps. These maps are also preconfigured in the template model that can be copied from the Resource Library, so you can have a look at them in Cosmic Frog yourself as well. The next screenshot shows the routes and locations of the Predefined Territories scenario on a map named Transportation Routes. The customers have been colored based on the predefined territory they belong to:

We can compare these routes to those of the No Territories scenario shown in the next screenshot. The customers are still colored based on the predefined territories, and if you zoom in a bit and pan through some routes, you will find several examples where customers from different predefined territories are now on a route together.

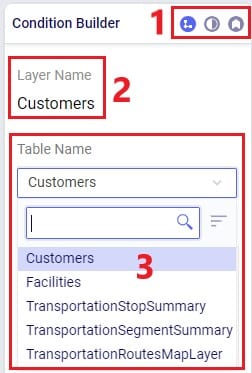

The following 2 screenshots compare the 3 and 5 Optimized Territories scenarios in a map called Territories Transportation Routes. The first shows the customers colored by territory. This is done by adding a layer to the map for each territory. The Transportation Stop Summary output table is used as the table to draw each of these "CZs Territory N" layers. Each layer's Condition Builder input uses "territoryname = 'N' and stoptype = 'Delivery'" as the filter; this is located on the layer's Condition Builder panel:

We see the customers clustered into their territories quite clearly in these visualizations. Some overlap between territories may occur, for example due to non-uniform shipment sizes, pickup / delivery time windows, or the use of actual road distances.
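The layer filter described above can also be reproduced outside Cosmic Frog, for example to check which delivery stops a given territory layer would draw. The following is a minimal Python sketch, assuming rows exported from the Transportation Stop Summary output table. The column names `territoryname` and `stoptype` are taken from the filter expression above; the `customername` column, the sample rows, and the helper function are hypothetical and only serve as illustration:

```python
# Hypothetical sample rows. Only territoryname and stoptype appear in the
# documented filter expression; all other names and values are made up.
stop_summary = [
    {"customername": "CZ_001", "territoryname": "1", "stoptype": "Delivery"},
    {"customername": "DC_A", "territoryname": "1", "stoptype": "Pickup"},
    {"customername": "CZ_002", "territoryname": "2", "stoptype": "Delivery"},
    {"customername": "CZ_003", "territoryname": "1", "stoptype": "Delivery"},
]


def delivery_stops_for_territory(rows, territory_name):
    """Keep rows where territoryname equals territory_name and stoptype is 'Delivery'."""
    return [
        row
        for row in rows
        if row["territoryname"] == territory_name and row["stoptype"] == "Delivery"
    ]


territory_1 = delivery_stops_for_territory(stop_summary, "1")
print([row["customername"] for row in territory_1])
```

Calling the helper once per territory name gives the same subsets that the individual "CZs Territory N" layers draw on the map.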

This last screenshot shows the routes of the territories too; they are color coded based on the territory they belong to. This is done by again adding 1 map layer for each territory, this time drawing from the Transportation Routes Map layer table, and filtering in the Condition Builder for "territoryname = 'N'":

The Auto-Archiving feature helps keep your account clean and efficient while ensuring Optilogic maintains a streamlined, cost-effective storage footprint. By automatically archiving inactive databases, we reduce unnecessary server load, improve overall performance, and free up space so you can always create new Cosmic Frog models or DataStar projects when you need them.

From your perspective, Auto-Archiving means less manual cleanup and more confidence that your account is organized, fast, and ready for your next project.

Archiving moves a database from an active state into long-term storage. Once archived:

Important: Auto-archiving does not delete your data. You are always in control and can restore an archived database back into an active state.

With Auto-Archiving, you do not need to manually track and archive inactive databases. Our system will automatically archive any database that has been inactive for 90 days.
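To make the 90-day rule concrete, here is a minimal Python sketch of an inactivity check. It is not Optilogic's implementation: the function name, the use of a last-activity timestamp, and the choice to treat exactly 90 days as already inactive are all assumptions made for illustration.

```python
from datetime import datetime, timedelta

# The 90-day threshold comes from the documentation; everything else is hypothetical.
INACTIVITY_THRESHOLD = timedelta(days=90)


def is_archive_candidate(last_activity, now):
    """Return True once the database has been inactive for 90 days or more (assumed boundary)."""
    return now - last_activity >= INACTIVITY_THRESHOLD


now = datetime(2025, 6, 1)
print(is_archive_candidate(datetime(2025, 1, 15), now))  # last activity 137 days ago
print(is_archive_candidate(datetime(2025, 5, 20), now))  # last activity 12 days ago
```

Any interaction that resets the inactivity timer corresponds to moving `last_activity` forward in this sketch.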

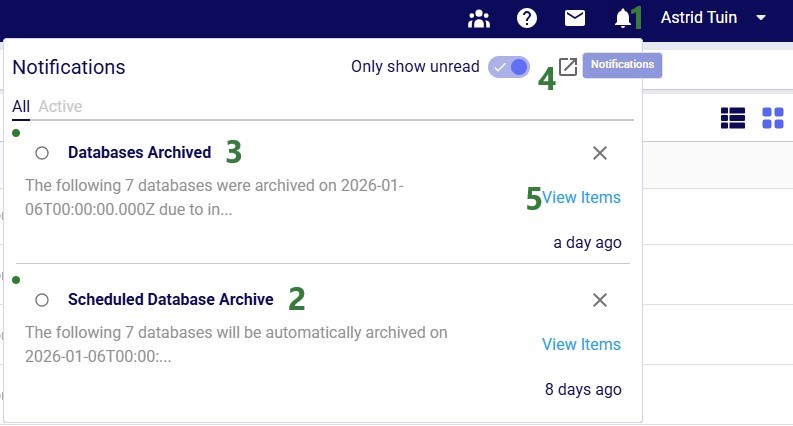

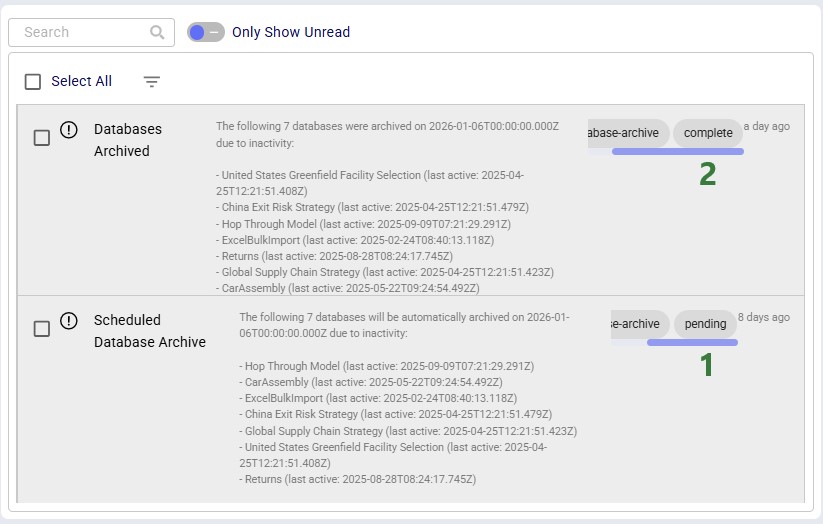

The screenshot below shows the notifications you will receive when 1) there are databases in your account that meet the criteria for an auto-archive event and 2) once databases have been archived:

Now, we will take a look at the Notifications Page, which is opened using the pop-out icon in the in-app notification, described under bullet #4 above:

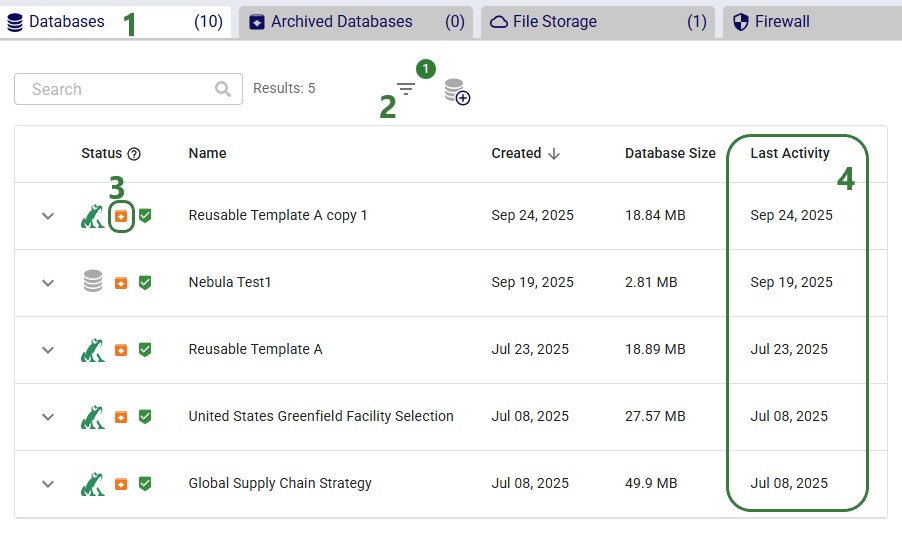

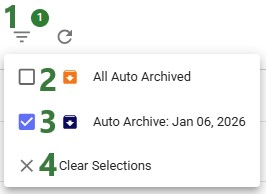

Clicking on the “View Items” link of a “Scheduled Database Archive” in-app notification (see bullet #5 of the first screenshot) will take you to a filtered view in the Cloud Storage application where you can see all the databases that will be auto-archived (note that this shows a different example, with different databases to be archived, than the previous screenshots):

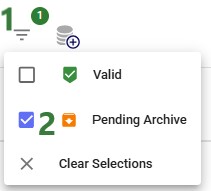

This next screenshot shows the filter that is applied on the Databases tab:

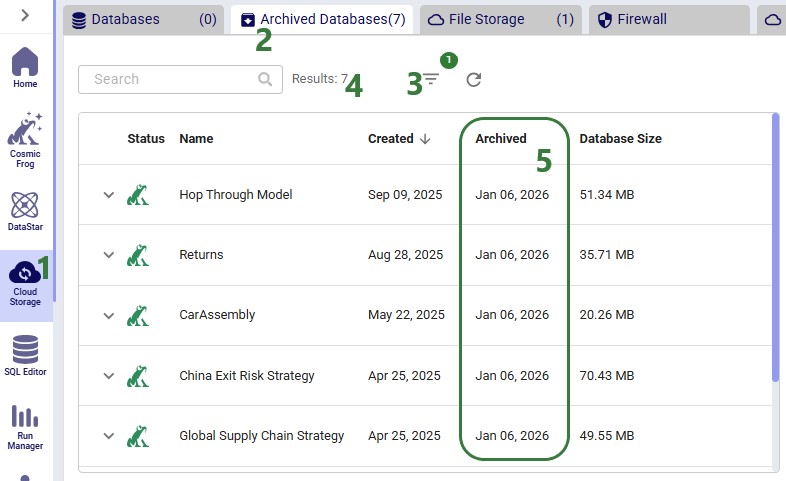

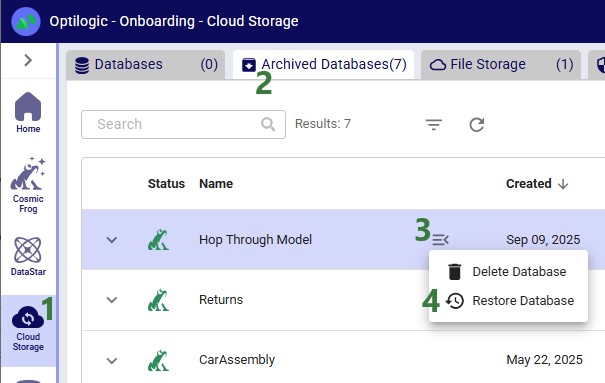

Clicking on the “View Items” link of a “Databases Archived” in-app notification (see bullet #5 of the first screenshot) will again take you to a filtered view in the Cloud Storage application where you can see the databases that have been auto-archived:

Finally, the following screenshot shows the filter that was applied on the Archived Databases tab:

Archiving is not just about organization — it also enhances performance across the platform. By reducing the number of idle databases consuming system resources, we lower the likelihood of “noisy neighbor” effects (when unused databases cause latency or compete with active ones).

With fewer inactive databases on high-availability servers, your active databases run faster and more reliably.

To keep a database active, simply interact with it. Any of the following actions will reset its inactivity timer:

Performing any of these actions ensures the database will not be archived.

Restoring an archived database is quick and straightforward:

The system will start a background job to restore the database. You can track progress at any time on the Account Activity page.

What to expect:

Quota reminder: To unarchive a database, you will need enough space in your database quota. If you have already reached your limit, you may need to archive or delete another database before restoring.

Auto-Archiving helps you:

It is a simple, automated way to ensure your workspace stays efficient while protecting your data.

As always, please feel free to reach out to the Optilogic support team at support@optilogic.com for any questions or feedback.

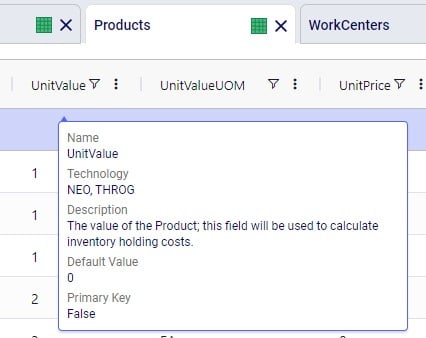

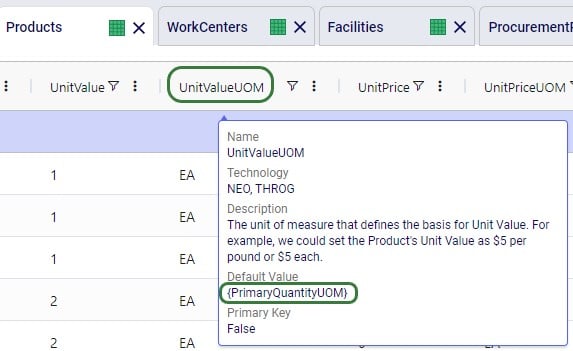

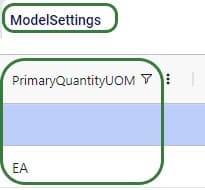

Cosmic Frog’s network optimization engine (Neo) can now account for shelf life and maturation time of products out of the box with the addition of several fields to the Products input table. The age of product that is used in production, product which flows between locations, and product sitting in inventory is now also reported in 3 new output tables, so users have 100% visibility into the age of their products across the operations.

In this documentation, we will give a brief overview of the new features first and then walk through a small demo model, which users can copy from the Resource Library, showing both shelf life and maturation time using 3 scenarios.

The new feature set consists of:

Please note that:

We will now showcase the use of both Shelf Life and Maturation Time in a small demo model. This model can be copied to your own Optilogic account from the Resource Library (see also the “How to use the Resource Library” Help Center article). This model has 3 locations which are shown on the map below:

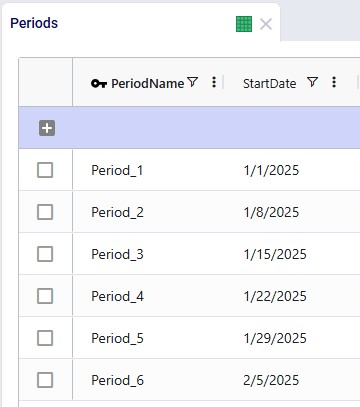

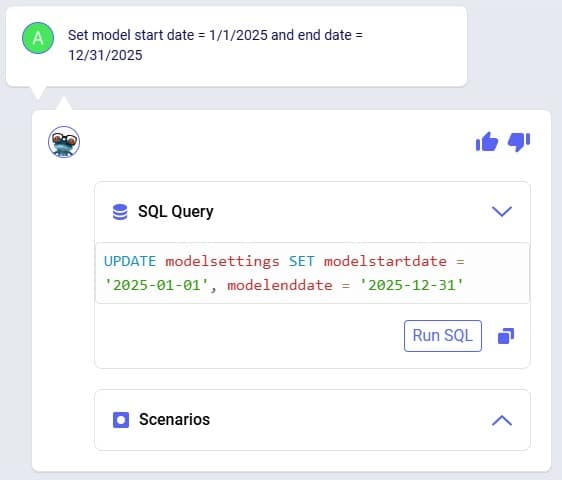

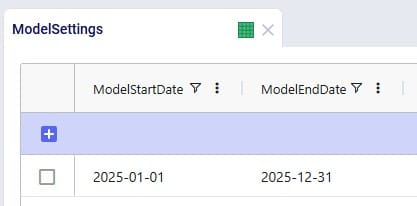

It is a multi-period model with 6 periods, which are each 1 week long (the Model End Date is set to February 12, 2025, on the Model Settings input table, not shown):

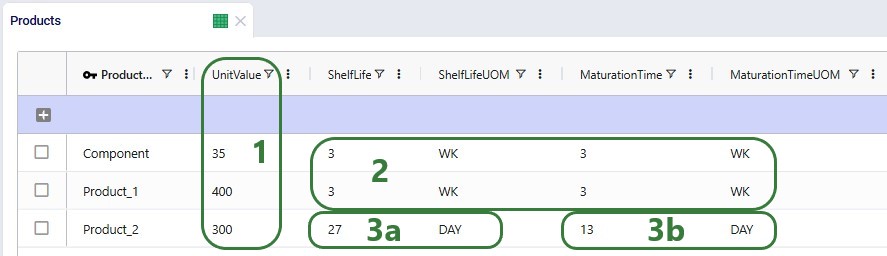

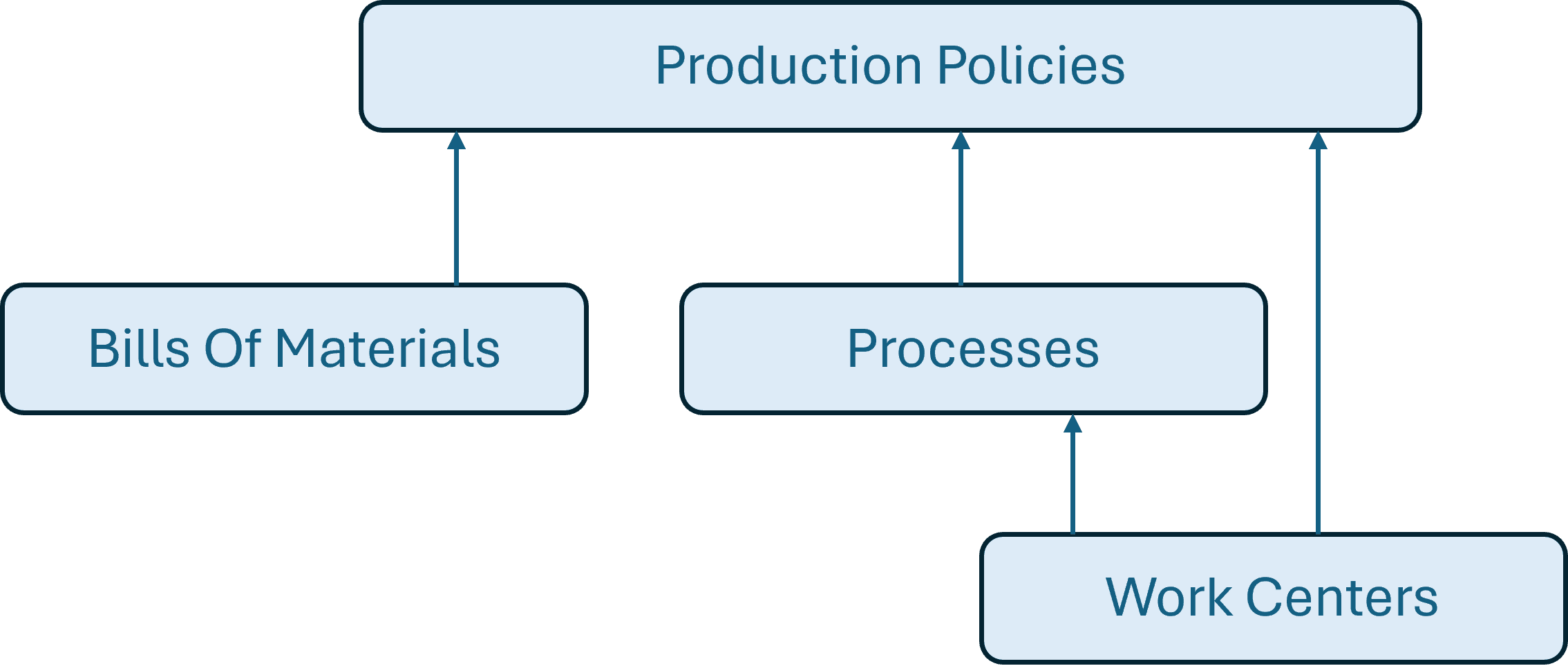

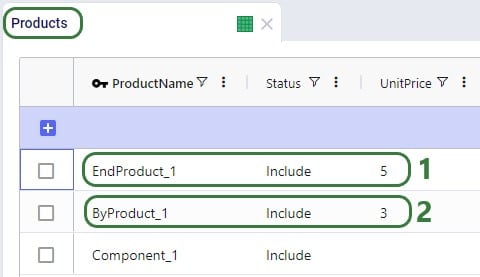

There are 3 products included in the model: 2 finished goods, Product_1 and Product_2, and 1 raw material, named Component. The component is used in a bill of materials to produce Product_1, as we will see in the screenshots after this one.

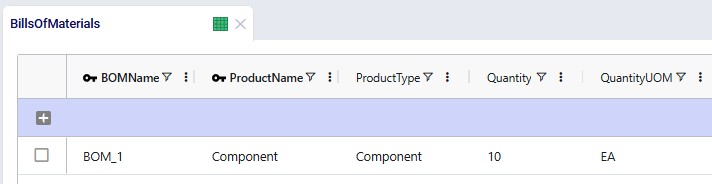

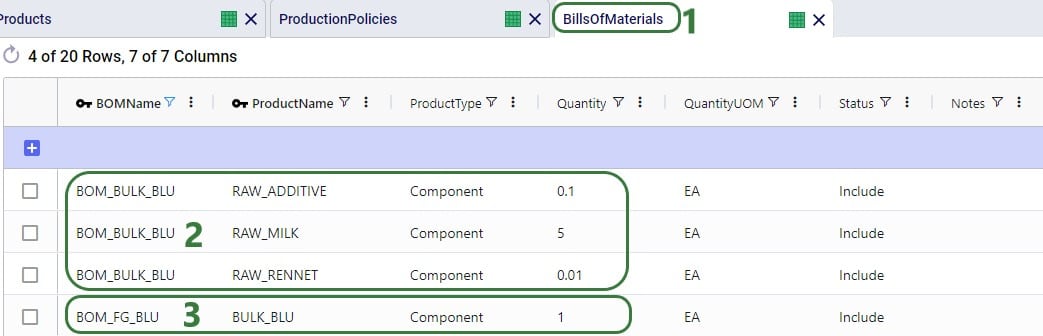

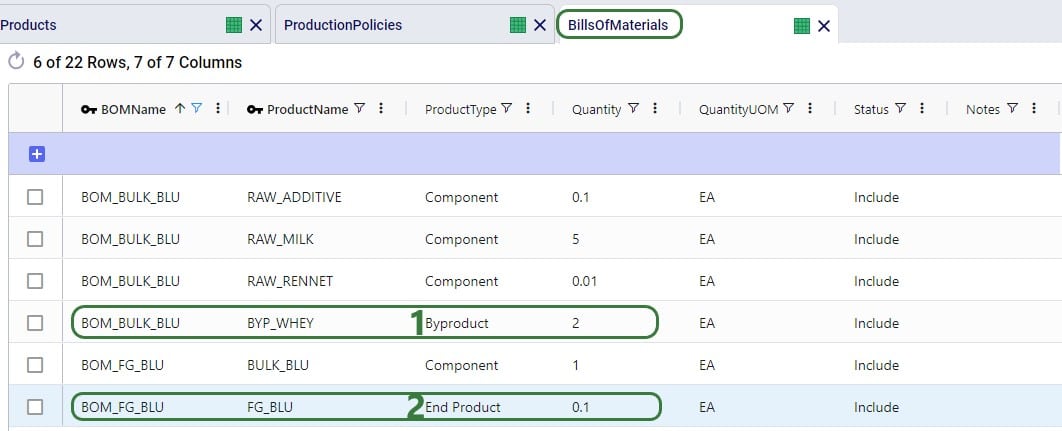

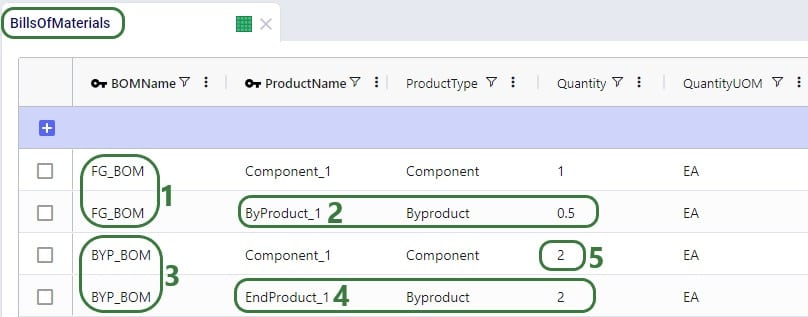

As mentioned above, a bill of materials is used to produce finished good Product_1:

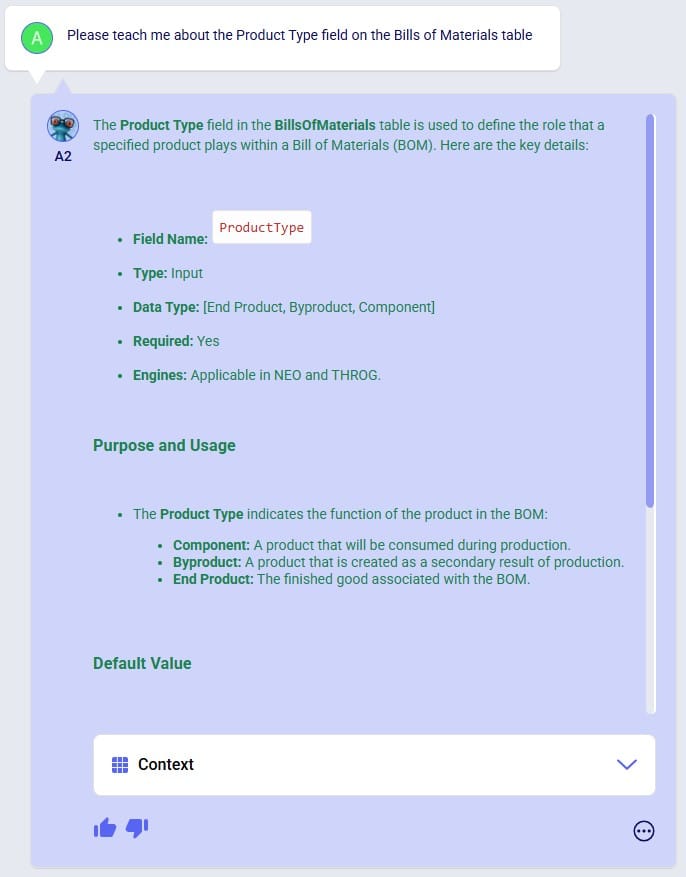

This bill of materials is named BOM_1 and it specifies that 10 units of the product named Component are used as an input (product type = Component) of this bill of materials. Note that the bill of materials does not indicate the end product that is produced with it. This is specified by associating production policies with a BOM. To learn more about detailed production modelling using the Neo engine, please see this Help Center article.

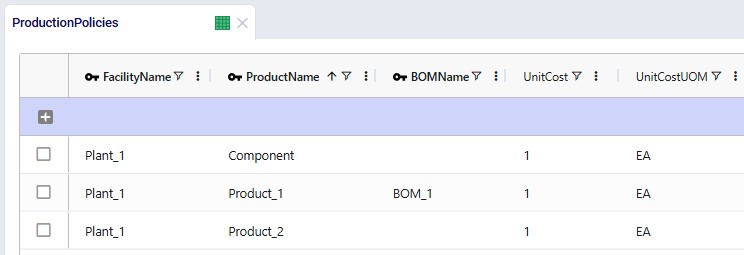

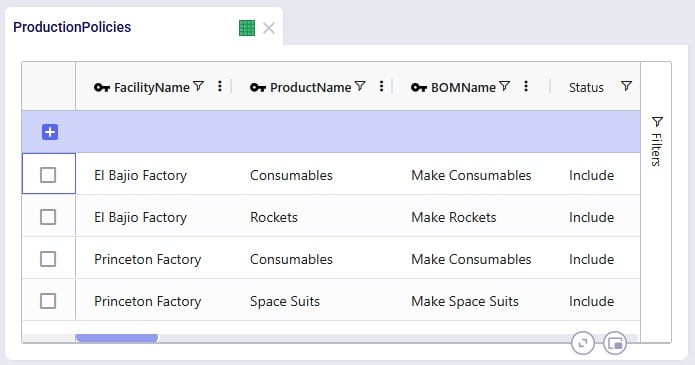

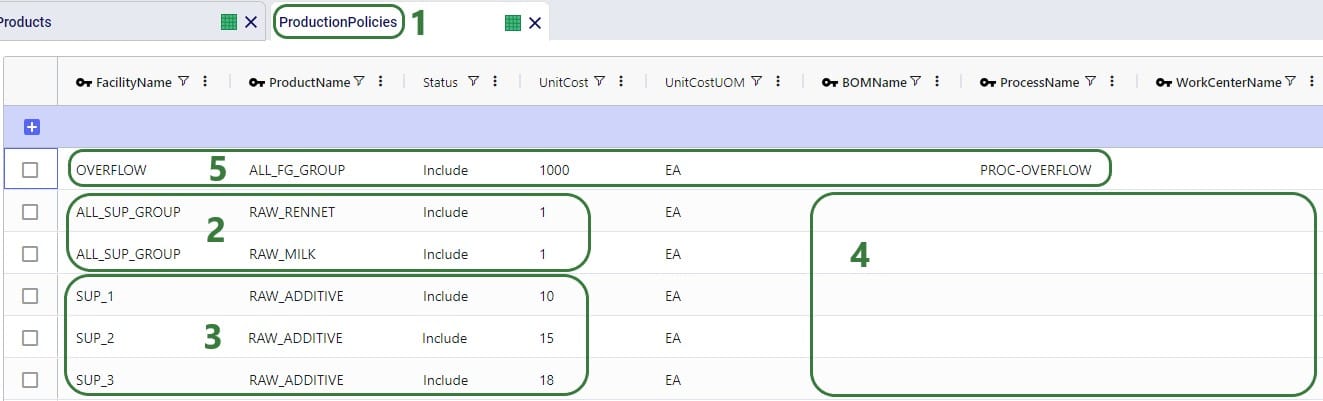

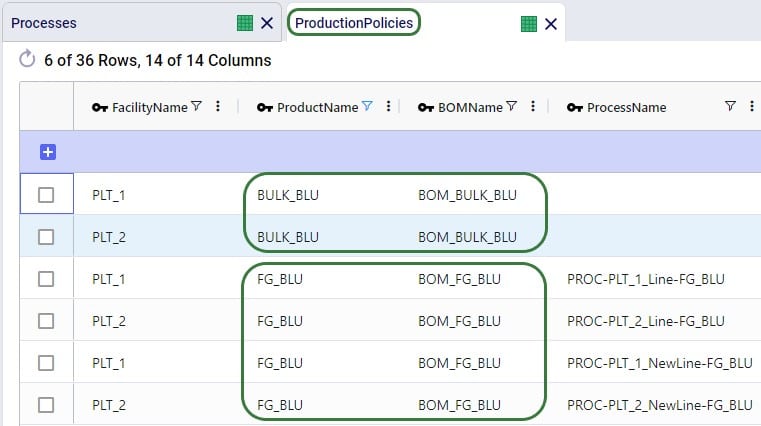

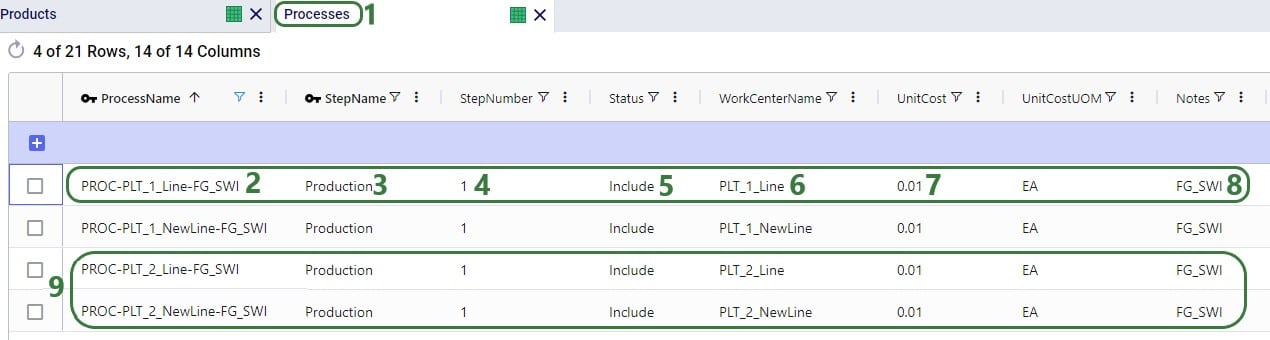

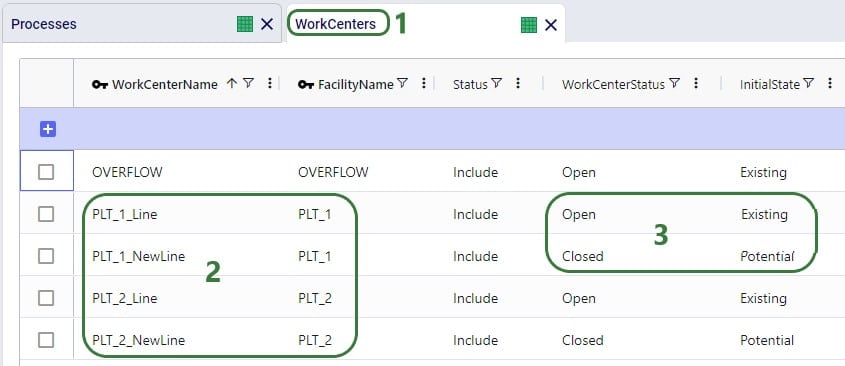

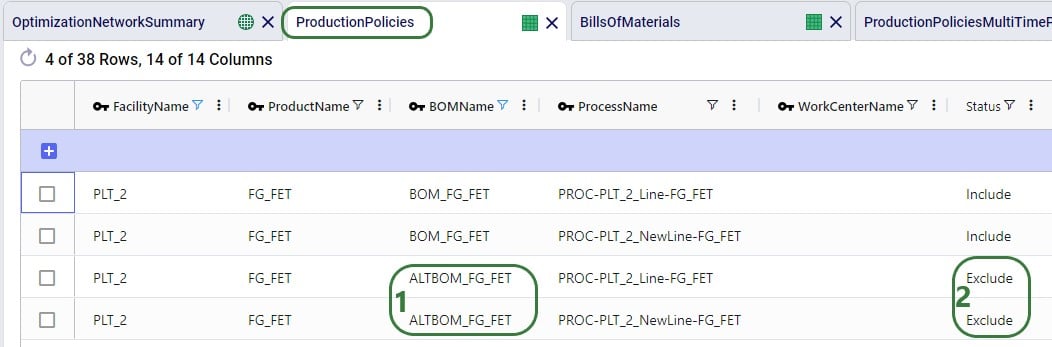

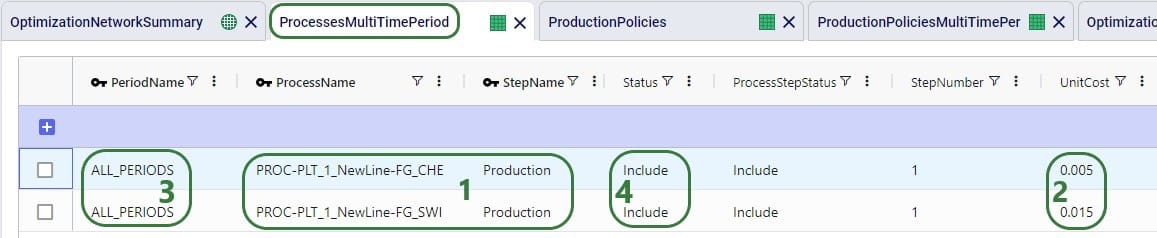

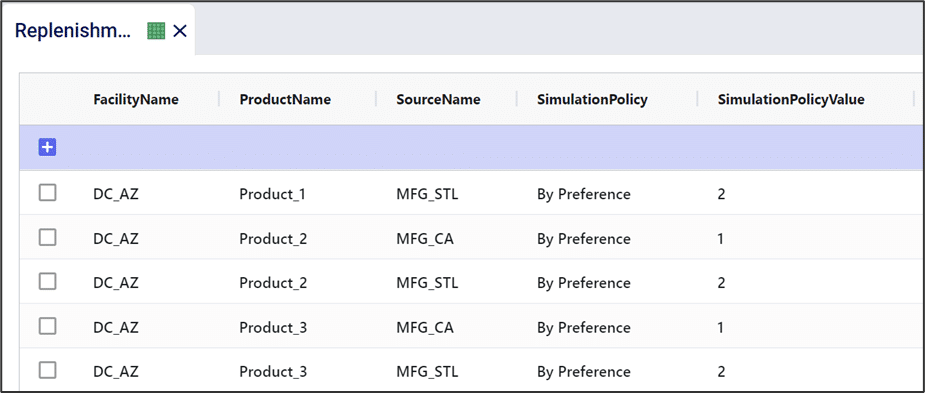

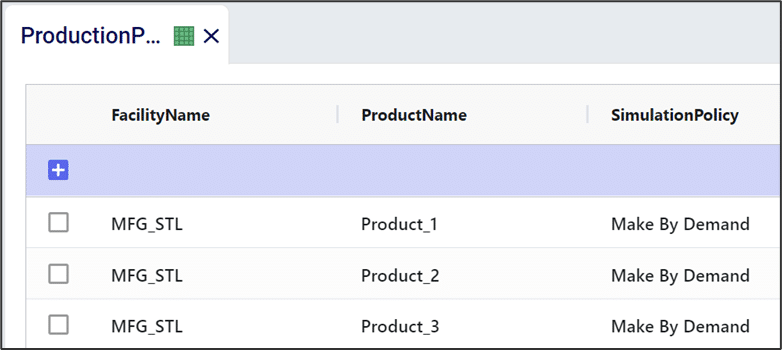

In the next screenshot of the production policies table, we see that the plant can produce all 3 products, and that for the production of Product_1, the bill of materials shown in the previous screenshot, BOM_1, is used. The cost per unit is set to 1 here for each product:

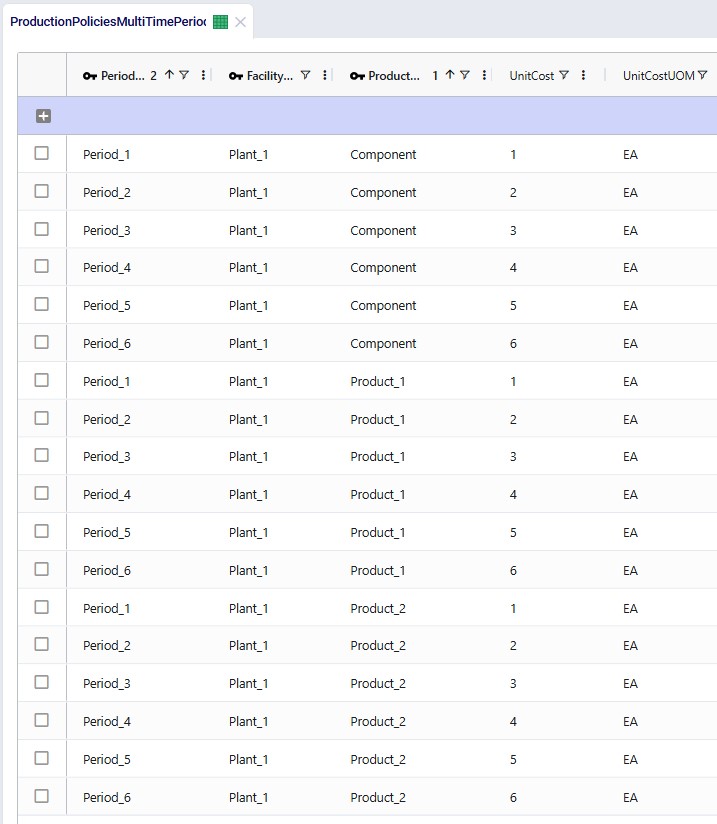

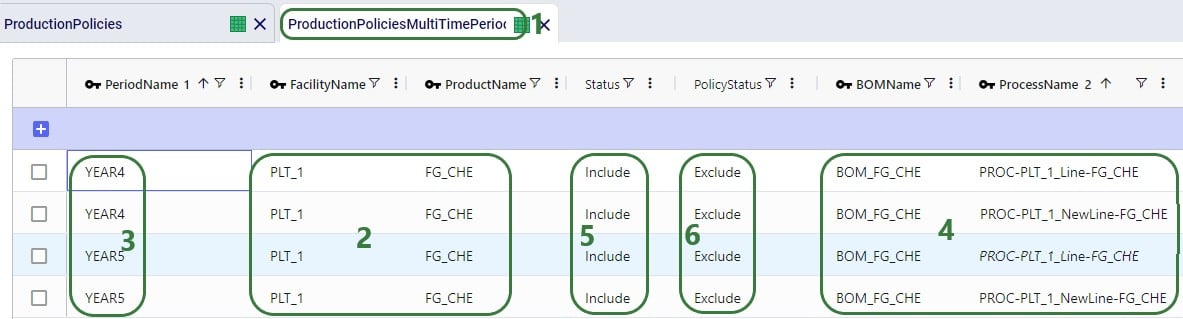

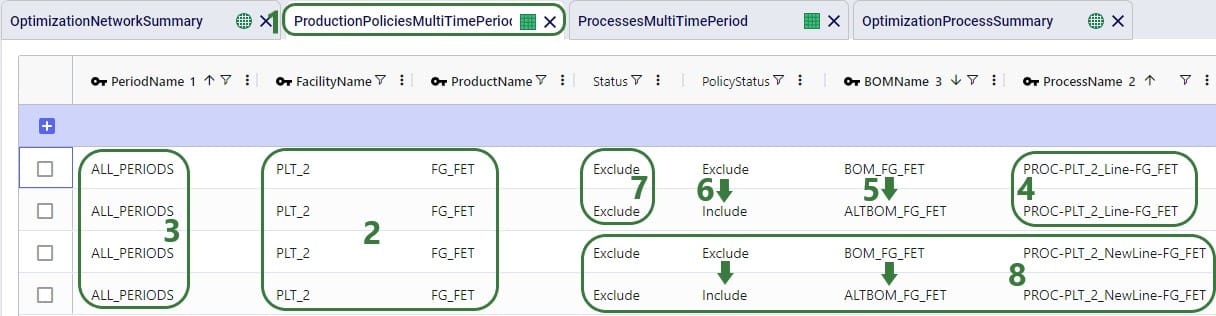

For purposes of showing how Shelf Life and Maturation Time work, we will use the Production Policies Multi-Time Period input table too. In here we override the production cost per unit that we just saw in the above screenshot to become increasingly expensive in later periods for all products, adding $1 per unit for each next period. So, to produce a unit of Product_1 in Period_1 costs $1, in Period_2 it costs $2, in Period_3 $3, etc. Same for Component and Product_2:

The production cost is increased here to encourage the model to produce product as early as possible, so that it incurs the lowest possible production cost. It will also still need to respect the shelf life and maturation time requirements. Note that this is also weighed against the increased inventory holding costs for producing earlier than possibly needed, as product will sit in inventory longer if produced earlier. So, the cost differential for production in different periods needs to be sufficiently big as compared to the increased inventory holding cost to see this behavior. We will explore this more through the scenarios that are run in this demo model.
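The cost schedule described above can be written out as a small Python sketch. The $1 base cost, the $1 increase per period, and the 6 periods come from the text; the function name and the batch size of 1,000 units are chosen for illustration, and inventory holding cost is deliberately left out because its exact calculation is not covered here.

```python
NUM_PERIODS = 6          # the demo model has 6 weekly periods
BASE_COST_PER_UNIT = 1   # production cost per unit in Period_1, in dollars
BATCH_UNITS = 1000       # illustrative batch size (assumption)


def unit_production_cost(period):
    """Cost per unit: $1 in Period_1, increasing by $1 for each later period."""
    return BASE_COST_PER_UNIT + (period - 1)


for period in range(1, NUM_PERIODS + 1):
    unit_cost = unit_production_cost(period)
    total = unit_cost * BATCH_UNITS
    print(f"Period_{period}: ${unit_cost} per unit, ${total} for {BATCH_UNITS} units")
```

Each period of delay adds $1 per unit, so producing earlier only pays off when the extra holding cost per unit per period stays below that amount.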

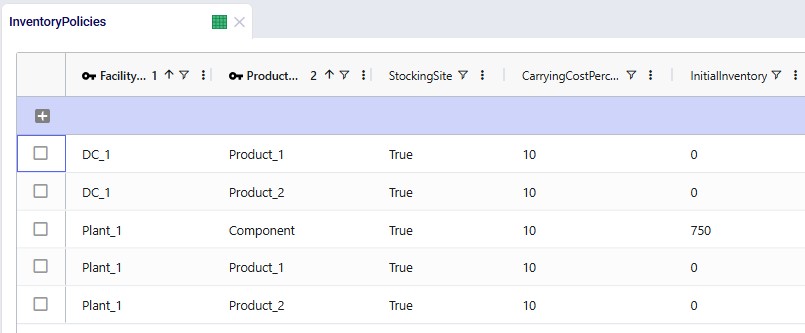

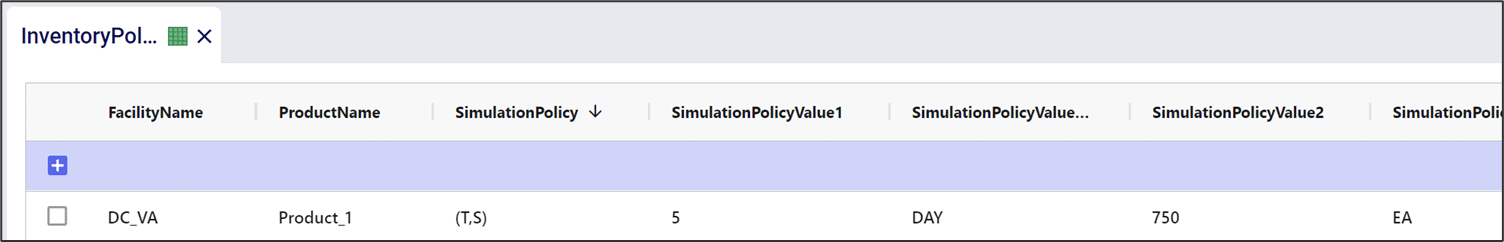

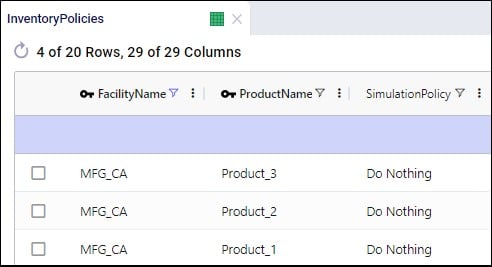

Since products will be spending some time in inventory, we need to have at least 1 inventory policy per product with Stocking Site = True. At the plant, all 3 products can be held in inventory, and there is an initial inventory of 750 units of the Component. At the DC, both finished goods can be held in inventory. The carrying cost percentage to calculate the inventory holding costs is set to 10% for all policies:

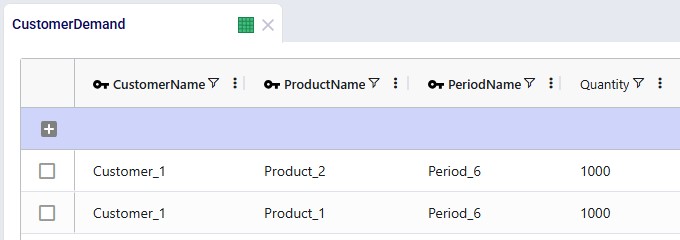

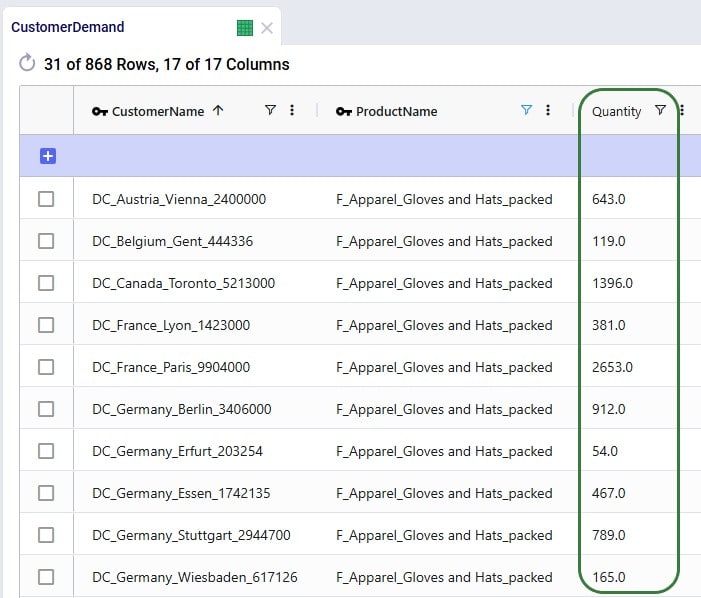

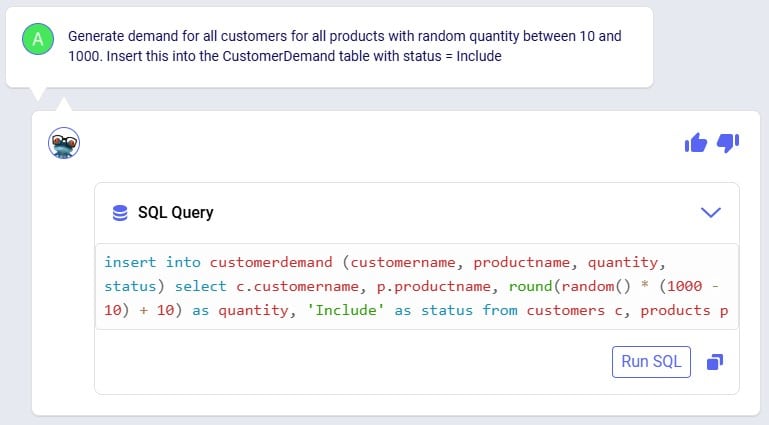

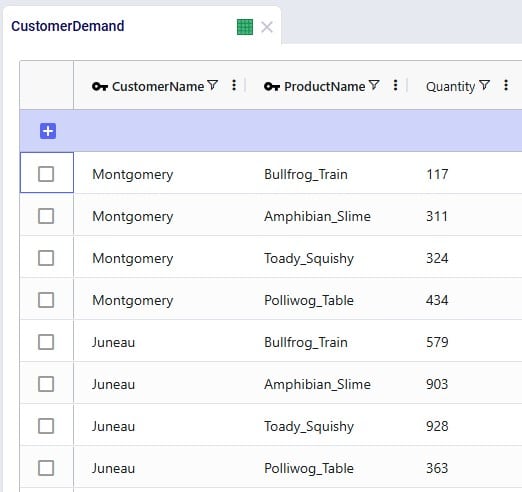

Lastly, we will show the demand that has been entered into the Customer Demand table. The customer demands 1,000 units each of the finished goods in period #6:

Other input tables that have populated records which have not been shown in a screenshot above are: Customers, Facilities, and Transportation Policies. The latter specifies that the plant can ship both finished goods to the DC, and the DC can ship both to the customer. The cost of transportation is set to 0.01 per unit per mile on both lanes.
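As a rough illustration of the per unit per mile rate, the following Python sketch computes the transportation cost for a single lane. The 0.01 rate is taken from the text; the function name, the 1,000-unit quantity, and the 250-mile lane distance are hypothetical.

```python
COST_PER_UNIT_PER_MILE = 0.01  # rate from the Transportation Policies table


def transportation_cost(units, miles):
    """Lane cost = rate * units shipped * lane distance in miles."""
    return COST_PER_UNIT_PER_MILE * units * miles


# Hypothetical shipment: 1,000 units over a 250-mile lane
print(round(transportation_cost(1000, 250), 2))
```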

The 3 costs that are modelled are therefore:

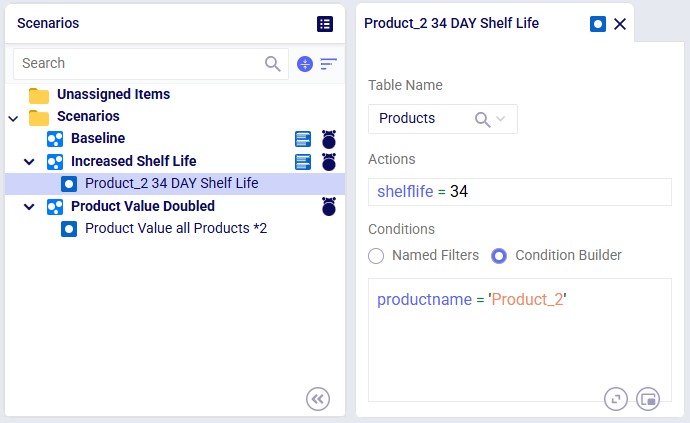

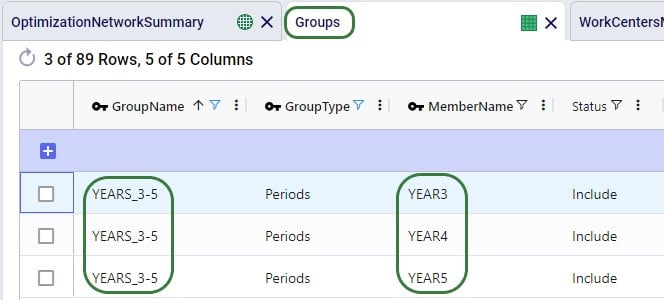

There are 3 scenarios run in this model, see also the screenshot below:

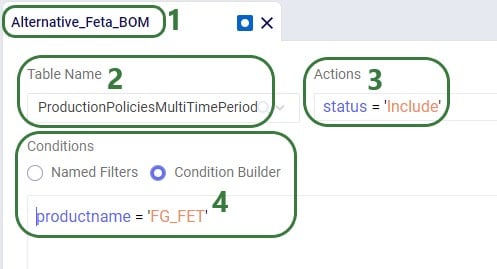

The screenshot shows the 3 scenarios on the left, where we see that the Increased Shelf Life and Product Value Doubled scenarios both contain 1 scenario item, whereas the Baseline does not contain any. On the right-hand side, the scenario item of the Increased Shelf Life scenario is shown where we can see that the Shelf Life value of Product_2 is set to 34. See the following Help Center articles for more details on Scenario building and syntax:

Two notes about the outputs before we dive into the details:

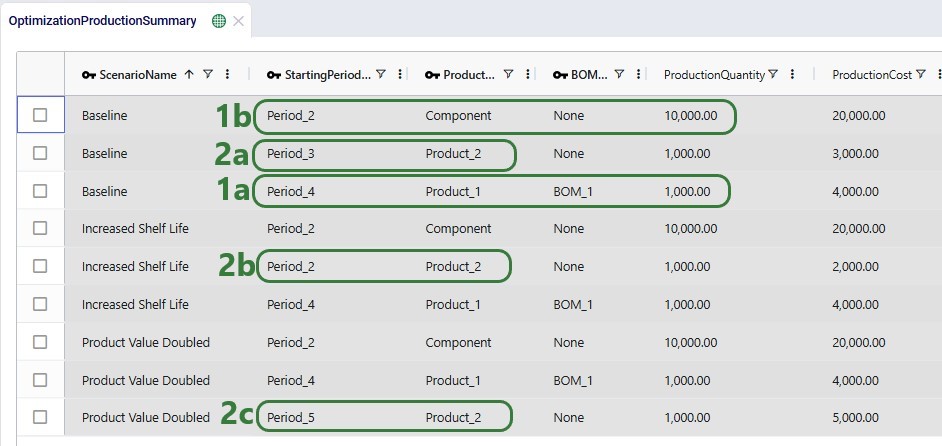

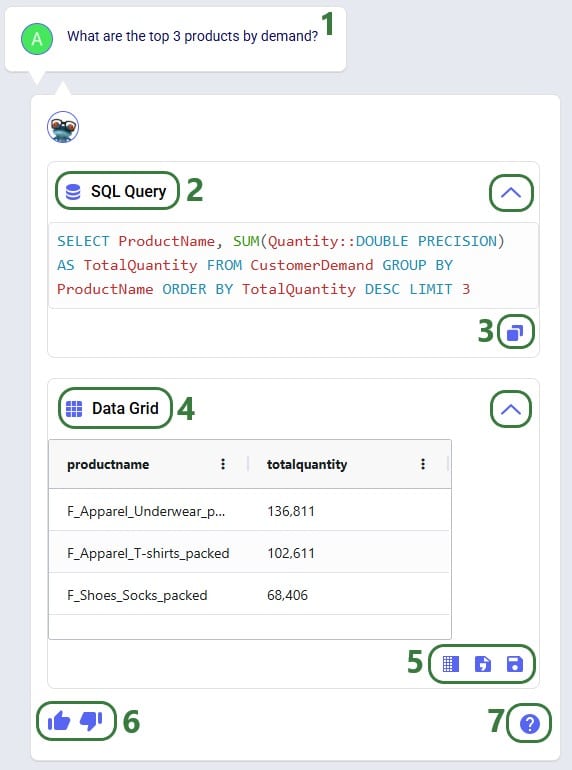

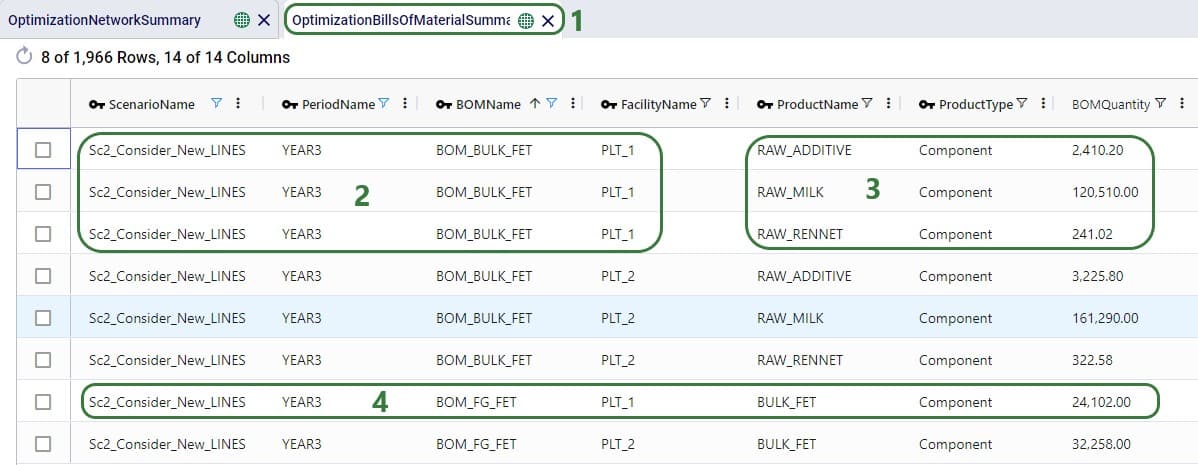

Let us first look at which product is produced in which period, using the Optimization Production Summary output table:

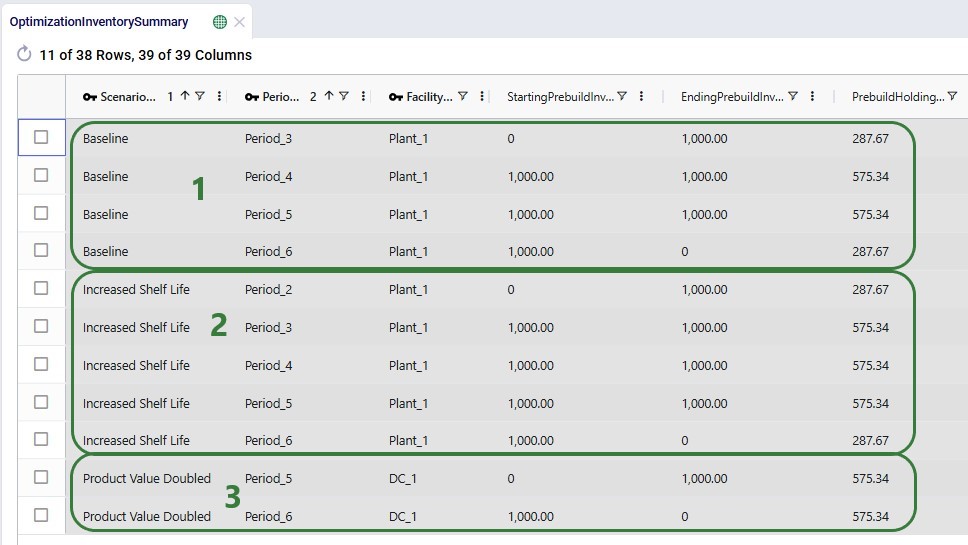

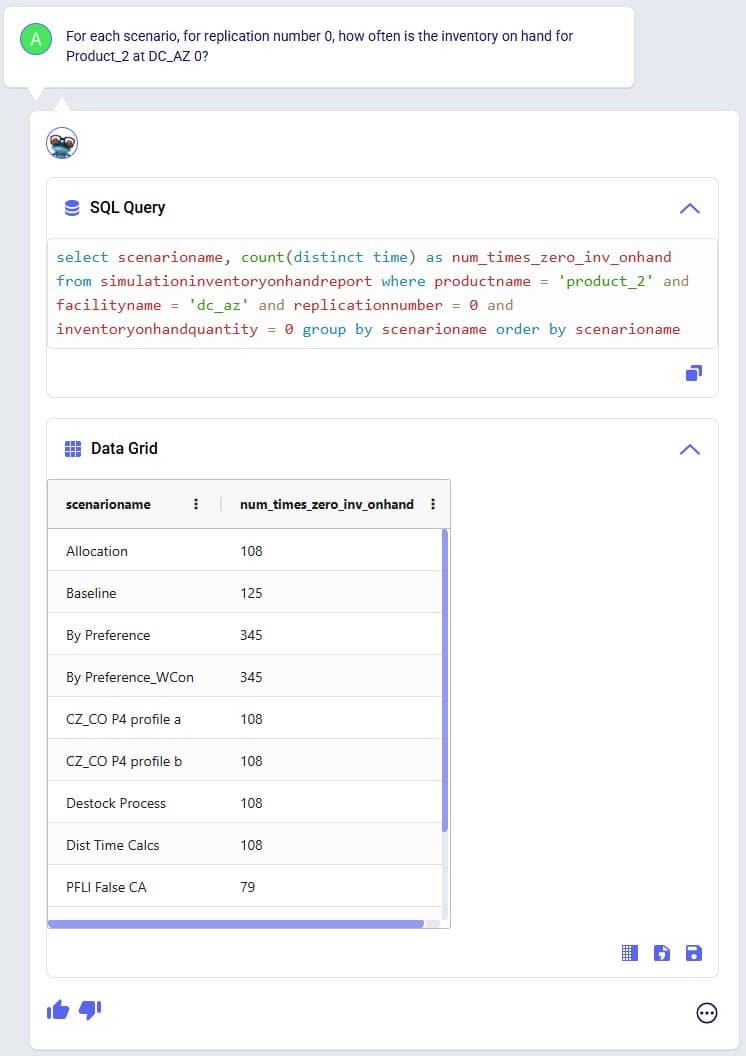

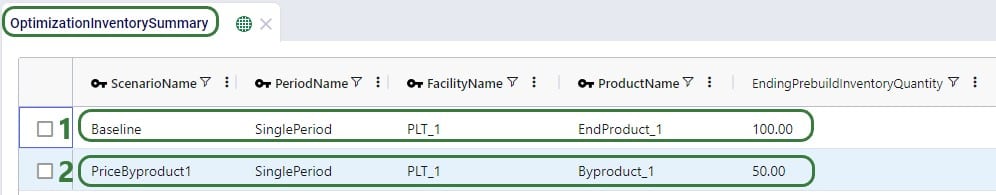

The next screenshot shows the Optimization Inventory Summary filtered for Product_2. Since we know it is produced in different periods for each of the 3 scenarios and that the demand occurs in Period_6, we expect to see the product sitting in inventory for a different number of periods in the different scenarios:

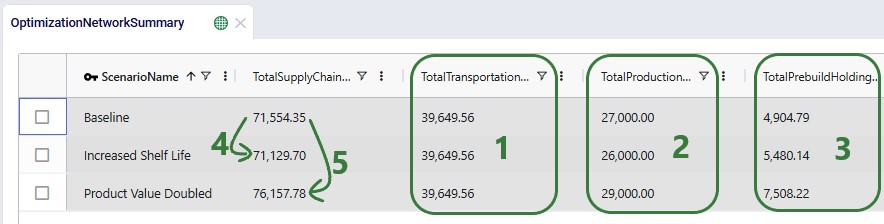

In the Optimization Network Summary output table, we can check the total cost by scenario and how the 3 costs modelled contribute to this total cost:

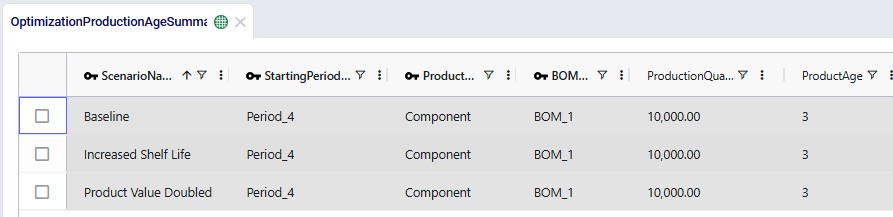

Next, we will take a look at the 3 new output tables, which detail the age of products that are used in production, of products that are transported, and of products that are sitting in inventory. We will start with the Optimization Production Age Summary output table:

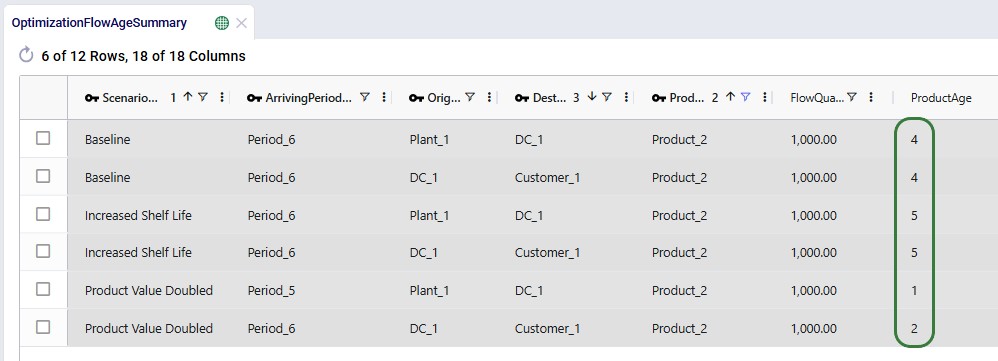

Next, we will look at the age of Product_2 when it is shipped between locations:

Lastly, we will look at 2 screenshots of the new Optimization Inventory Age Summary output table. This first one only looks at the ages of Product_1 and Component at Plant_1 in the Baseline scenario. The values for their inventory levels and ages are the same in the other 2 scenarios as the production of these 2 products occurs during the same periods for all 3 scenarios:

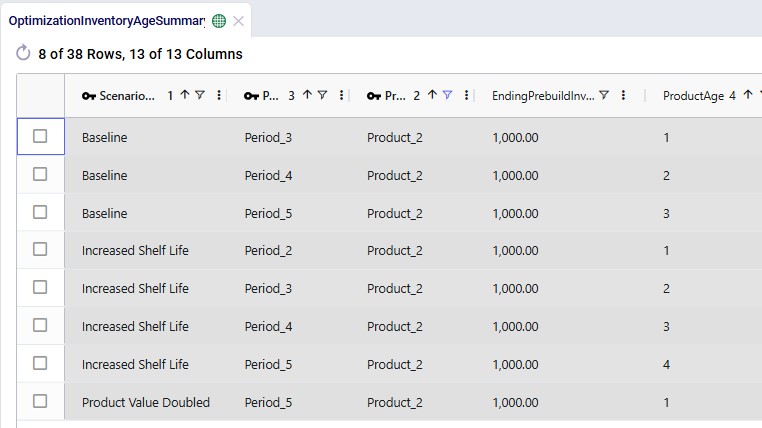

In the next screenshot, we look at the same output table, Optimization Inventory Age Summary, but now filtered for Product_2 and for all 3 scenarios:

For any questions on these new features, please do not hesitate to contact Optilogic support at support@optilogic.com.

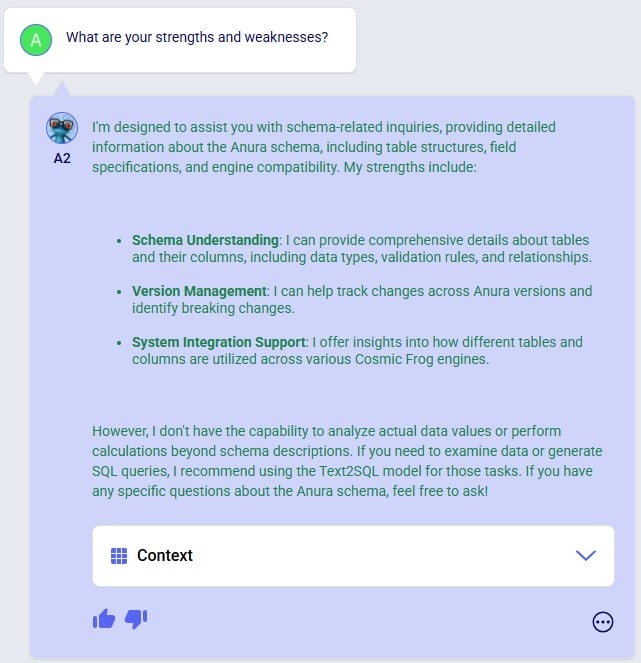

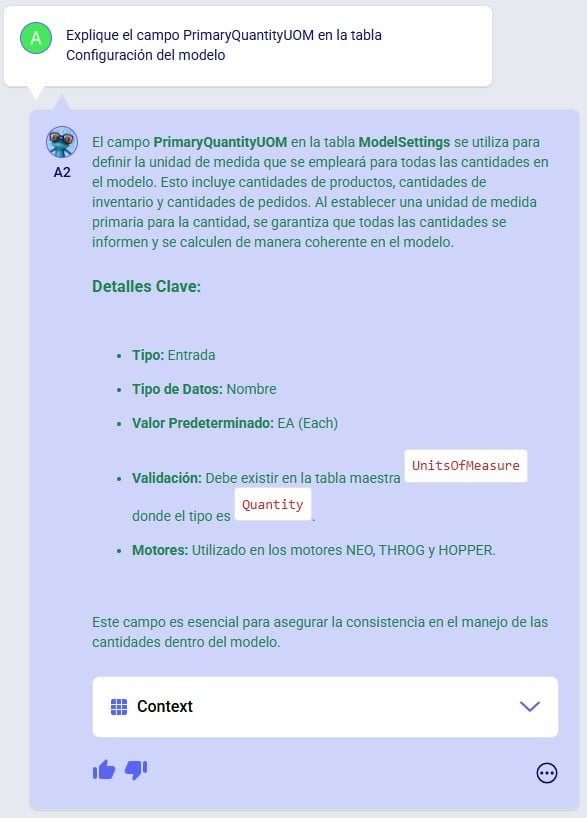

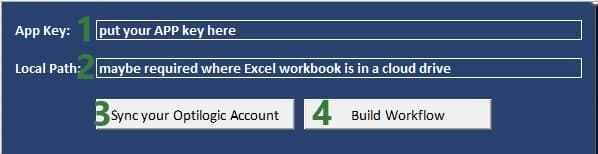

The best way to understand modeling in Cosmic Frog is to understand the data model and structure. The following link provides a downloadable (Excel) template with the documentation and explanation for every input table and field in the modeling schema.

A downloadable template describing the fields in the output tables can be downloaded from the Downloadable Anura Data Structure - Outputs Help Center article.

For a brief review of how to use the template file, please watch the following video.

The following link provides a downloadable (Excel) template describing the fields included in the output tables for Neo (Optimization), Throg (Simulation), Triad (Greenfield), and Hopper (Routing).

Anura 2.8 is the current schema.

A downloadable template describing the fields in the input tables can be downloaded from the Downloadable Anura Data Structure - Inputs Help Center article.

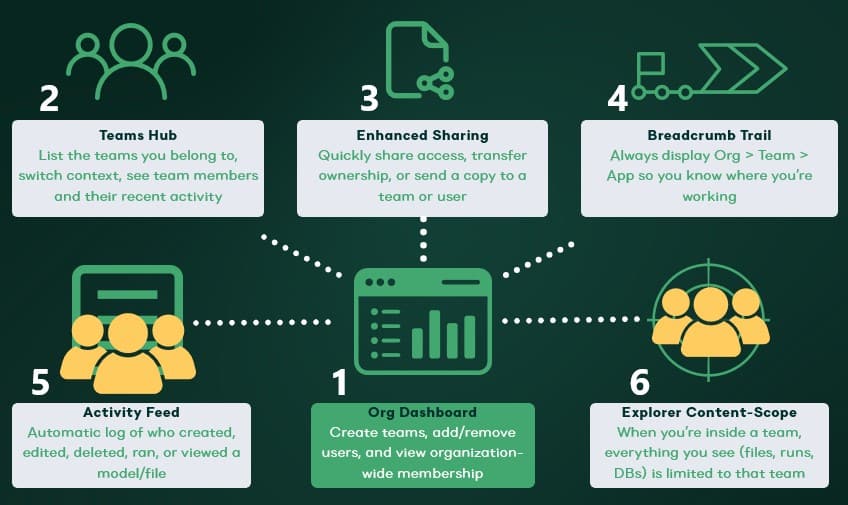

Users of the Optilogic platform can easily access all files they have in their Optilogic account and perform common tasks like opening, copying, and sharing them by using the built-in Explorer application. This application sits across all other applications on the Optilogic platform.

This documentation will walk users through how to access the Explorer, explain its folder and file structure, how to quickly find files of interest, and how to perform common actions.

By default, the Explorer is closed when users log into the Optilogic platform. They can open it at the top of the applications list:

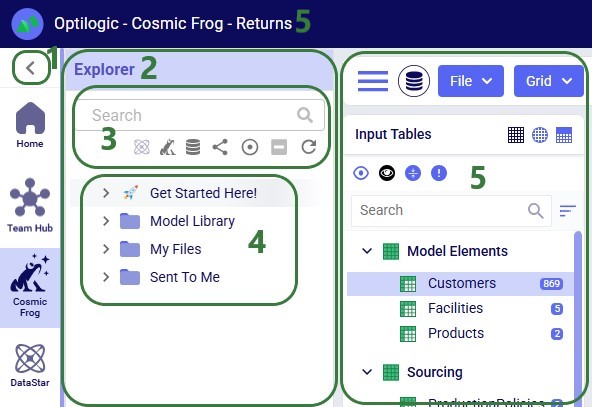

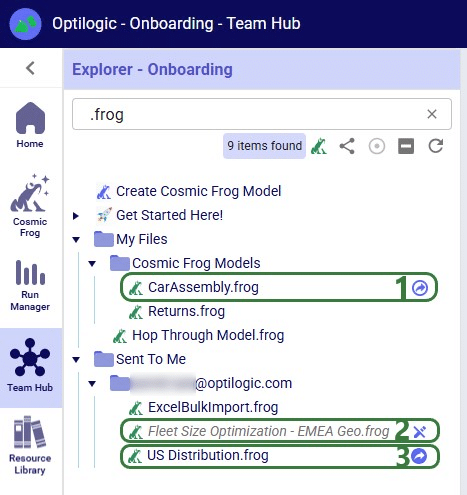

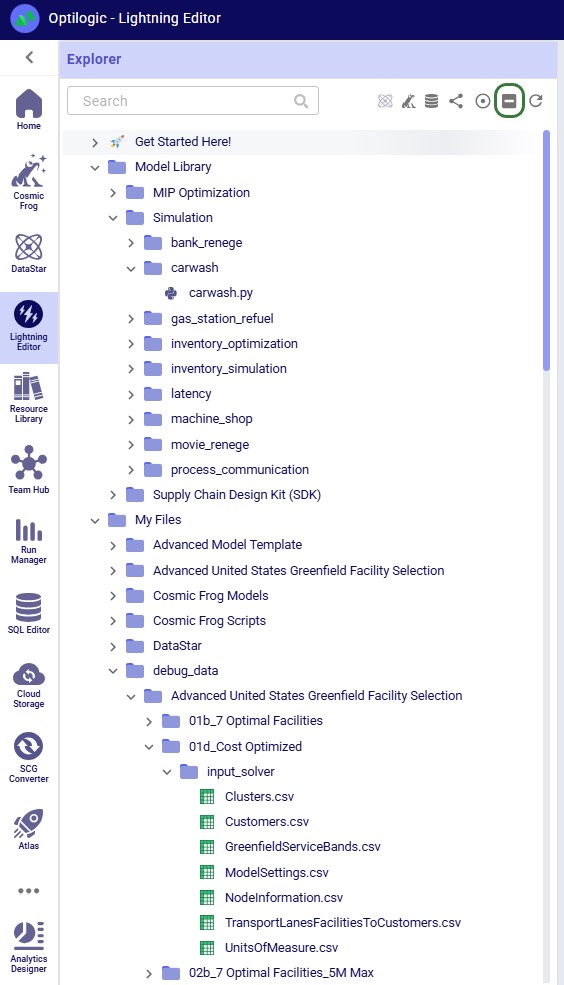

Once the Explorer is open, your screen will look similar to the following screenshot:

This next screenshot shows the Explorer when it is open while the user is working inside the workspace of one of the teams they are part of, and not in their My Account workspace:

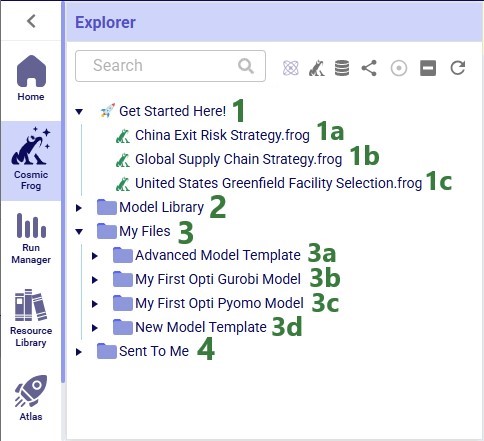

When a new user logs into their Optilogic account and opens the Explorer, they will find there are quite a few folders and files present in their account already. The next screenshot shows the expanded top-level folders:

As you may have noticed already, different file types can be recognized by the different icons to the left of the file’s name. The following table summarizes some of the common file types users may have in their accounts, shows the icon used for these in the Explorer, and indicates which application the file will be opened in when (left-)clicking on the file:

*When clicking on files of these types, the Lightning Editor application will be opened and a message stating that the file is potentially unsupported will be displayed. Users can click on a “Load Anyway” button to attempt to load the file in the Lightning Editor. If the user chooses to do so, the file will be loaded, but the result will usually be unintelligible for these file types.

Some file types can be opened in other applications on the Optilogic platform too. These options are available from the right-click context menus, see the “Right-click Context Menus” section further below.

Icons to the right of names of Cosmic Frog models in the Explorer indicate if the model is a shared one and if so, what type of access the user / team has to it. Hovering over these icons will show text describing the type of share too.

Learn more about sharing models and the details of read-write vs read-only access in the “Model Sharing & Backups for Multi-user Collaboration in Cosmic Frog” help center article.

While working on the Optilogic platform, additional files and folders can be created in / added to a user’s account. In this section we will discuss which applications create what types of files and where in the folder structure they can be found in the Explorer.

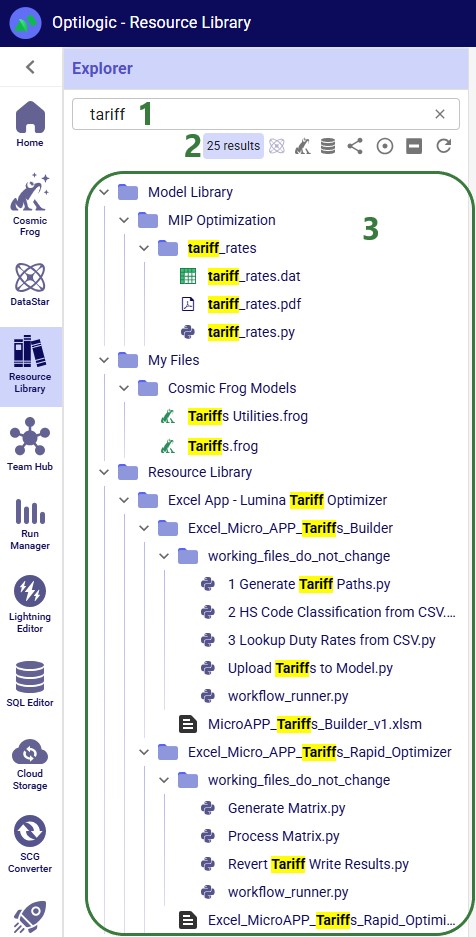

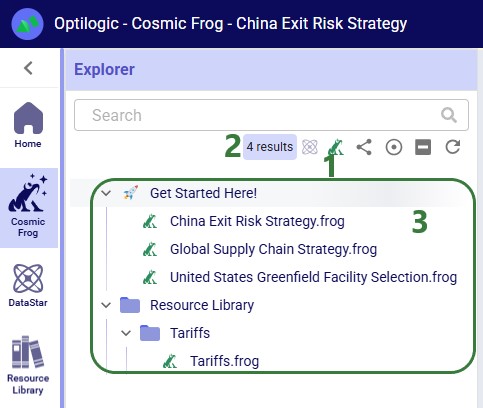

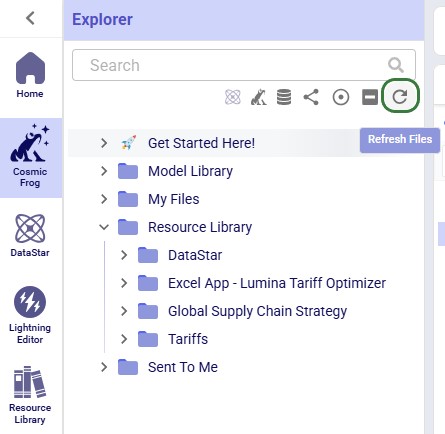

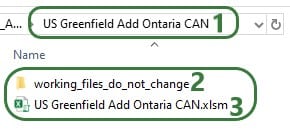

The Resource Library on the Optilogic platform contains example Cosmic Frog models, DataStar template projects, Cosmic Frog for Excel Apps, Python scripts, reference data, utilities, and additional tools to help make Optilogic platform users successful. Users can browse the Resource Library and copy content from there to their own account to explore further (see the “How to use the Resource Library” help center article for more details):

Please note that Cosmic Frog models copied from the Resource Library are placed into a subfolder with the model’s name under the Resource Library folder; they can be recognized in the Explorer by their frog icon to the left of the model’s name and the .frog extension.

In addition, please note that previously, files copied from the Resource Library were placed in a different location in users’ accounts and not in the Resource Library folder and its subfolders. The old location was a subfolder with the resource’s name under the My Files folder. Users who have been using the Optilogic platform for a while will likely still see this file structure for files copied from the Resource Library before this change was made.

Users can create new Cosmic Frog models from Cosmic Frog’s start page (see this help center article); these will be placed in a subfolder named “Cosmic Frog Models”, which sits under the My Files folder:

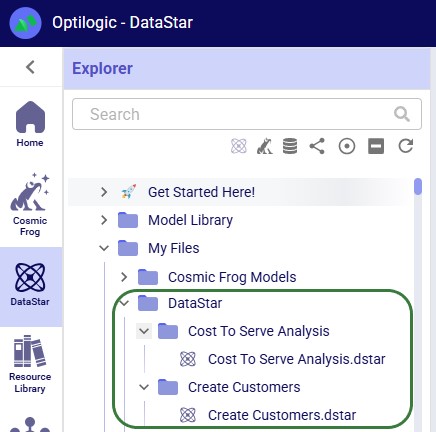

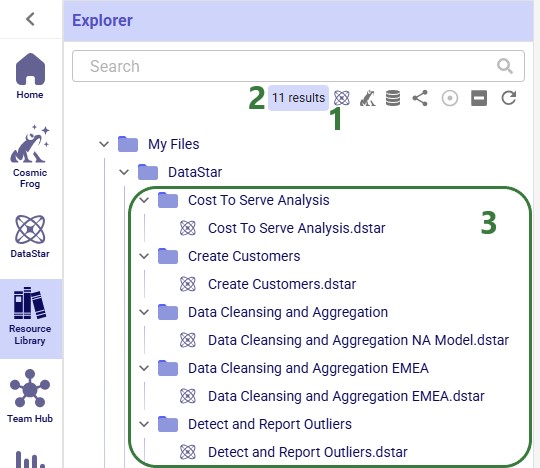

Users can create new DataStar projects from DataStar's start page (see this help center article); these will be placed in a subfolder named “DataStar”, which sits under the My Files folder. Within this DataStar folder, sub-folders with the names of the DataStar projects are created and the .dstar project files are located in these folders. In the following screenshot, we are showing 2 DataStar projects, 1 named "Cost to Serve Analysis" and 1 named "Create Customers":

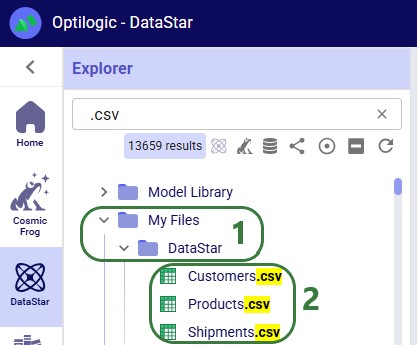

DataStar users may upload files to use with their data connections through the DataStar application (see this help center article). These uploaded files are also placed in the /My Files/DataStar folder:

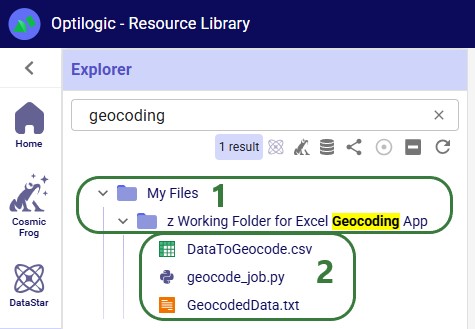

When working with any of the Cosmic Frog for Excel Apps (see also this help center article), the working files for these will be placed in subfolders under the My Files folder. These are named “z Working Folder for … App”:

In addition to the above-mentioned subfolders (Resource Library, Cosmic Frog Models, DataStar, and “z Working Folder for … App” folders) which are often present under the My Files top-level folder in a user’s Optilogic account, there are several other folders worth covering here:

Now that we have covered the folder and file structure of the Explorer including the default and common files and folders users may find here, it is time to cover how users can quickly find what they need using the options towards the top of the Explorer application.

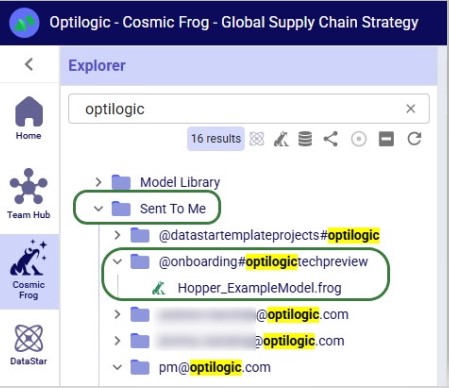

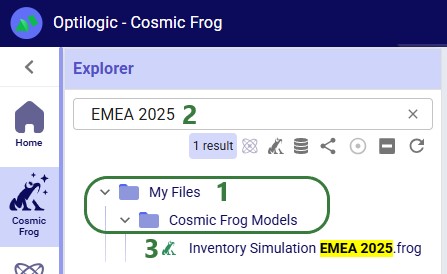

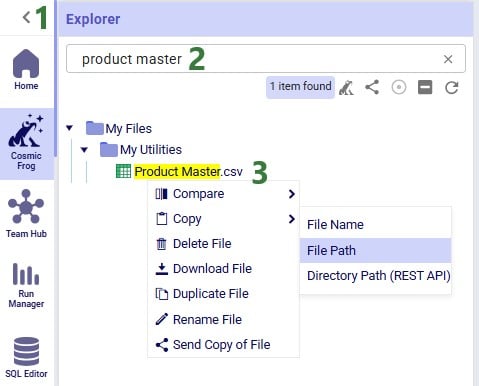

There is a free-text search box at the top of the Explorer application, which users can use to quickly find files and folders that contain the typed text in their names:

There is a quick search option to find all DataStar projects in the user’s account:

Similarly, there is a quick search option to find all Cosmic Frog models in the user’s account:

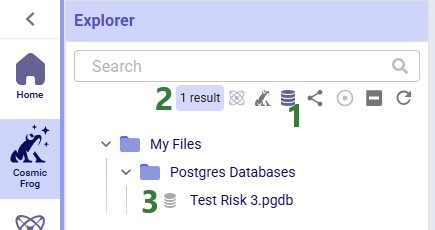

There is also a quick filter function to find all PostgreSQL databases in a user's account:

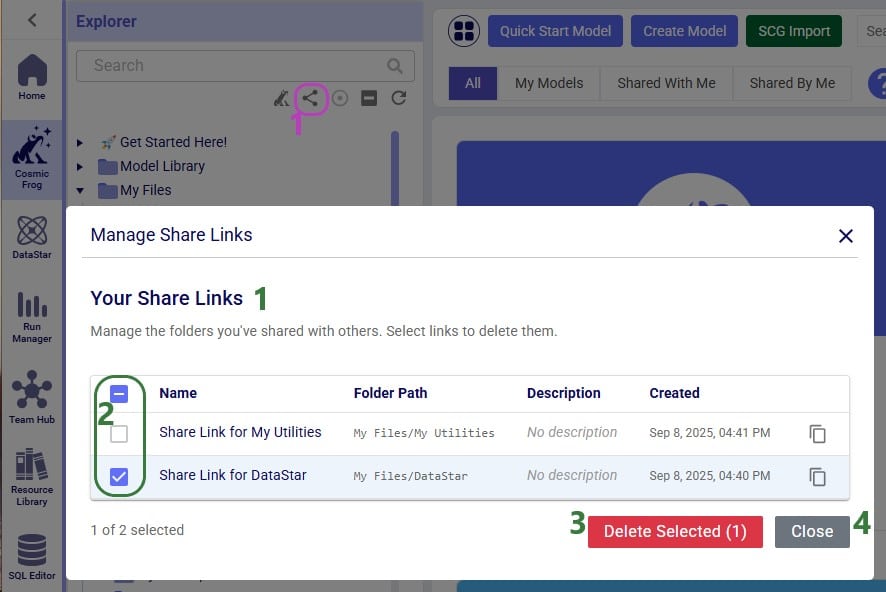

Users can create share links for folders in their Optilogic account to send a copy of the folder and all its contents to other users. See this “Folder Sharing” section in the “Model Sharing & Backups for Multi-User Collaboration in Cosmic Frog” help center article on how to create and use share links. If a user has created any share links for folders in their account, these can be managed by clicking on the View Share Links icon:

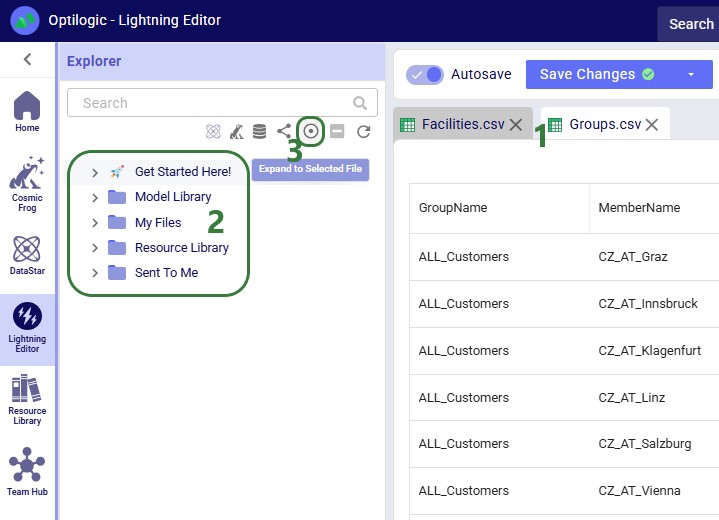

When browsing through the files and folders in your Optilogic account, you may collapse and expand quite a few different folders and their subfolders. Users can at times lose track of where the file they had selected is located. To help with this, users have the “Expand to Selected File” option available to them:

In addition to using the Expand to Selected File option, please note that switching to another file in the Lightning Editor, for example by clicking on the Facilities.csv file, will further expand the Explorer to show that file in the list too. If needed, the Explorer will also automatically scroll up or down to show the active file in the center of the list.

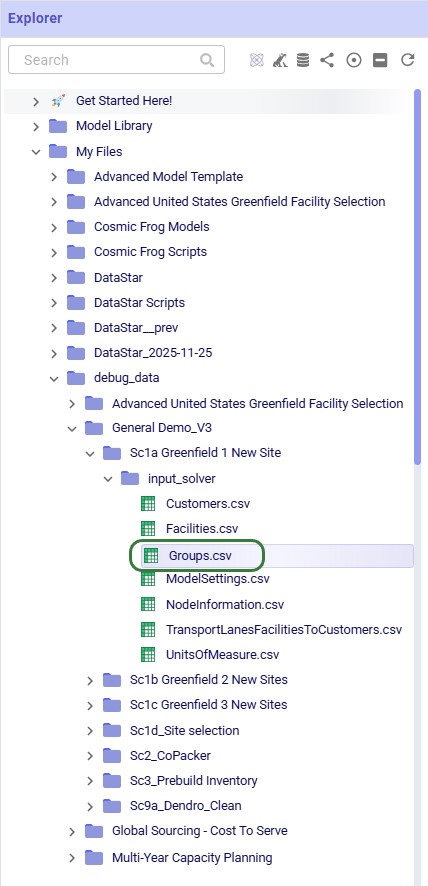

If you have many folders and subfolders expanded, it can be tedious to collapse them all one by one again. Therefore, users also have a “Collapse All” option at their disposal when working with the Explorer. The following screenshot shows the state of the Explorer before clicking on the Collapse All icon, which is the 6th of the 7 icons to the right of the Search box in the following screenshot:

The user then clicks on the Collapse All icon and the following screenshot shows the state of the Explorer after doing so:

Note that the Collapse All icon has now become inactive and will remain so until any folders are expanded again.

Sometimes when deleting, copying, or adding files or folders to a user’s account, these changes may not be immediately reflected in the Explorer files & folders list as they may take a bit of time. The last of the icons to the right of / underneath the Search box provides users with a “Refresh Files” option. Clicking on this icon will update the files and folders list such that all the latest are showing in the Explorer:

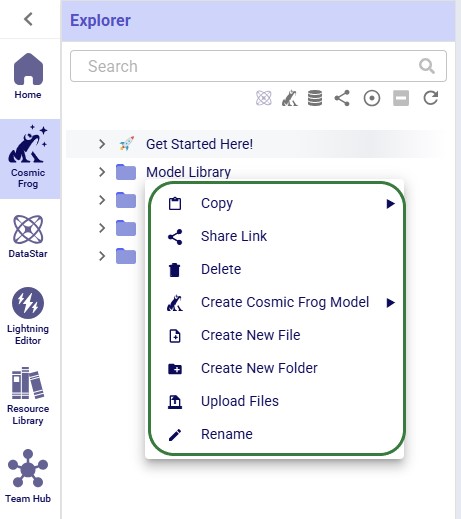

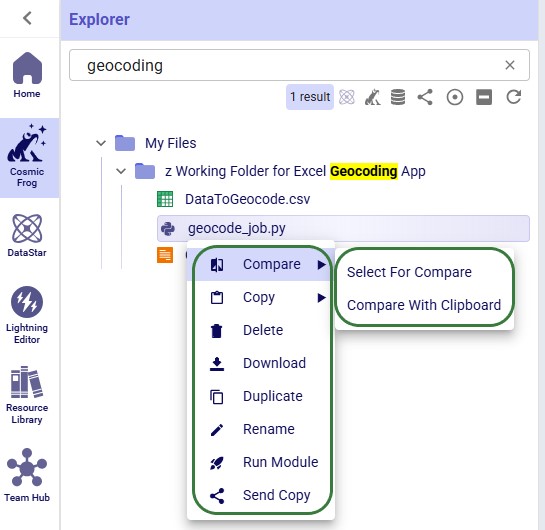

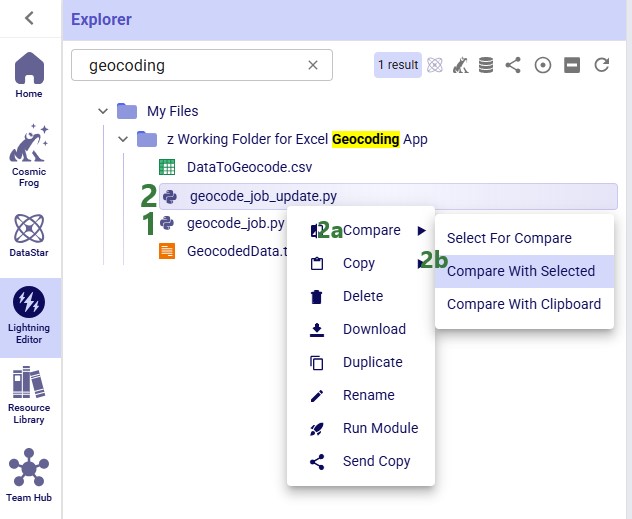

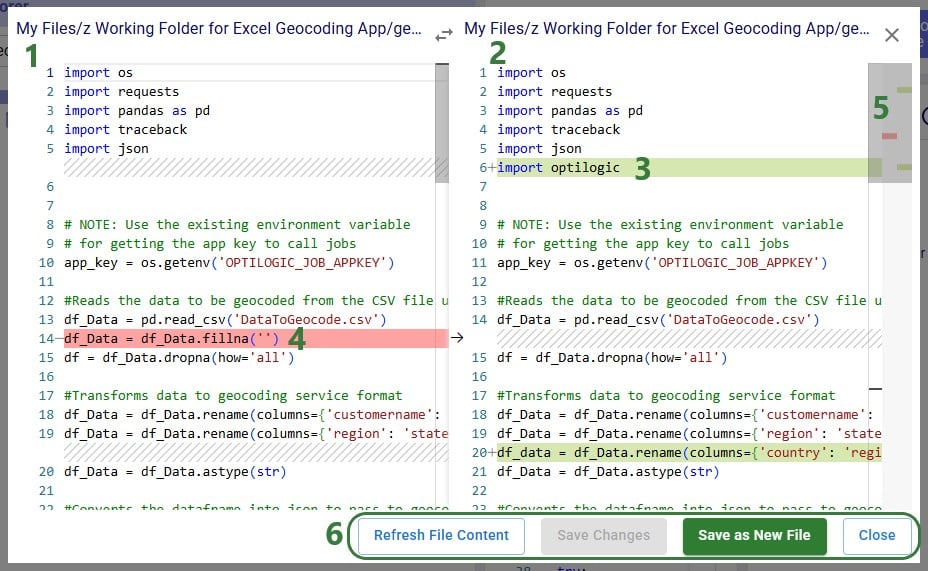

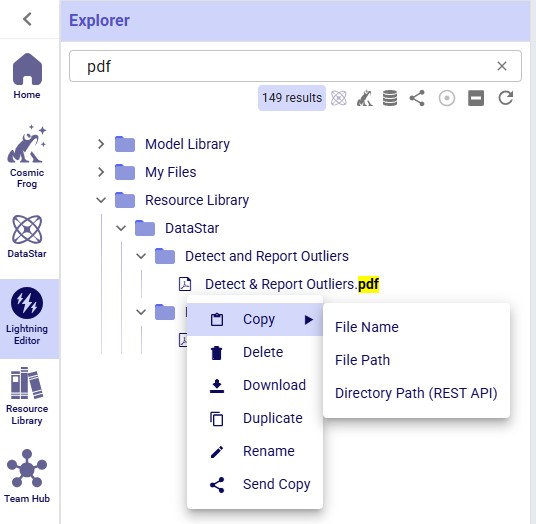

In this final section of the Explorer documentation, we will cover the options users have from the context menus that come up when right-clicking on files and folders in the Explorer. Screenshots and text will explain the options in the context menus for folders, Cosmic Frog models, text-based files, and all other files.

When right-clicking on a folder in the Explorer, users will see the following context menu come up (here the user right-clicked on the Model Library folder):

The options from this context menu are, from top to bottom:

Note that right-clicking on the Get Started Here folder gives fewer options: just the Copy (with the same 3 options as above), Share Link, and Delete Folder options are available for this folder.

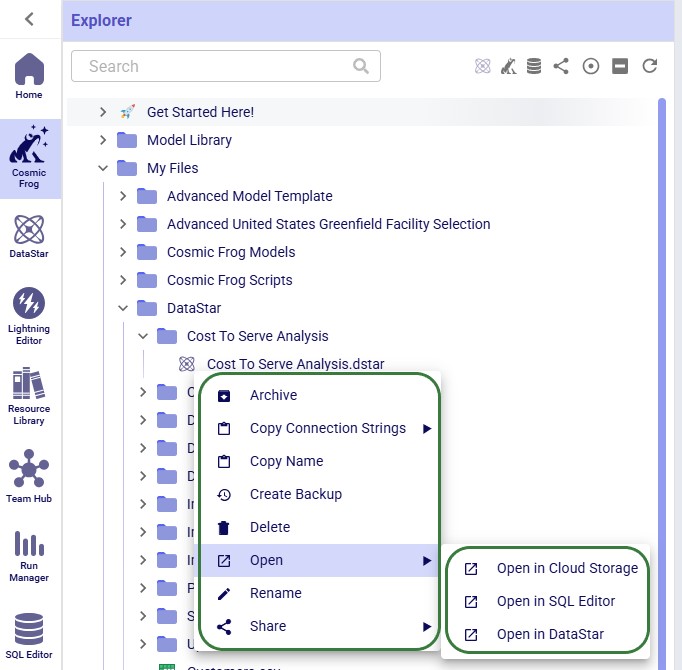

Now, we will cover the options available from the context menu when right-clicking on different types of files, starting with Cosmic Frog models:

The options, from top to bottom, are:

Please note that the Cosmic Frog models listed in the Explorer are not actual databases, but pointer files. These are essentially empty placeholder files to let users visualize and interact with models inside the Explorer. Due to this, actions like downloading are not possible; working directly with the Cosmic Frog model databases can be done through Cosmic Frog or the SQL Editor.

Next, we will look at the right-click context menu for DataStar projects. The options here are very similar to those of Cosmic Frog models:

The options, from top to bottom, are:

When right-clicking on a Python script file, the following context menu will open:

The options, from top to bottom, are:

The next 2 screenshots show what it looks like when comparing 2 text-based files with each other:

Other text-based files, such as those with extensions of .csv, .txt, .md and .html have the same options in their context menus as those for Python script files, with the exception that they do not have a Run Module option. The next screenshot shows the context menu that comes up when right-clicking on a .txt file:

Other files, such as those with extensions of .pdf, .xls, .xlsx, .xlsm, .png, .jpg, .twb and .yxmd, have the same options from their context menus as Python scripts, minus the Compare and Run Module options. The following screenshot shows the context menu of a .pdf file:

As always, please feel free to let us know of any questions or feedback by contacting Optilogic support at support@optilogic.com.

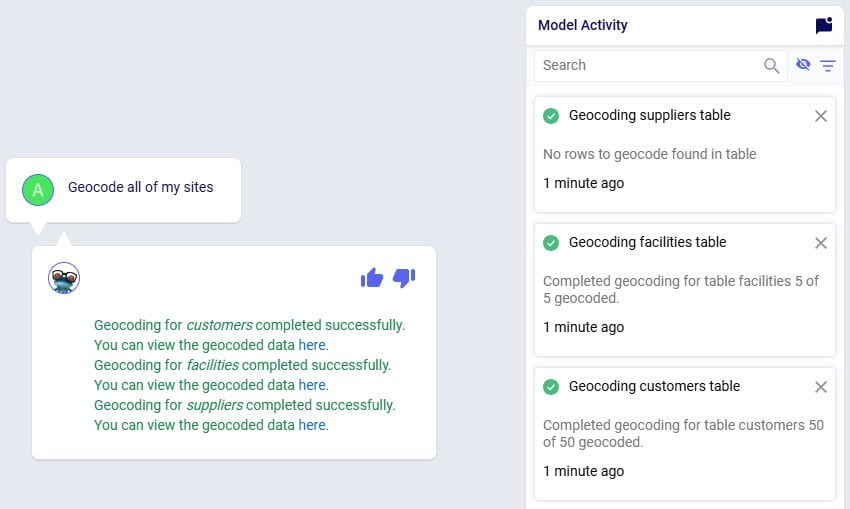

This documentation covers which geo providers one can use with Cosmic Frog, and how they can be used for geocoding and distance and time calculations.

Currently, there are 5 geo providers that can be used for geocoding locations in Cosmic Frog: MapBox, Bing, Google, PTV, and PC*Miler. MapBox is the default provider and comes free of cost with Cosmic Frog. To use any of the other 4 providers, you will need to obtain a license key from the company and add this to Cosmic Frog through your Account. The steps to do so are described in this help article “Using Alternate Geocoding Providers”.

Different geocoding providers may specialize in different geographies; refer to your provider for guidelines.

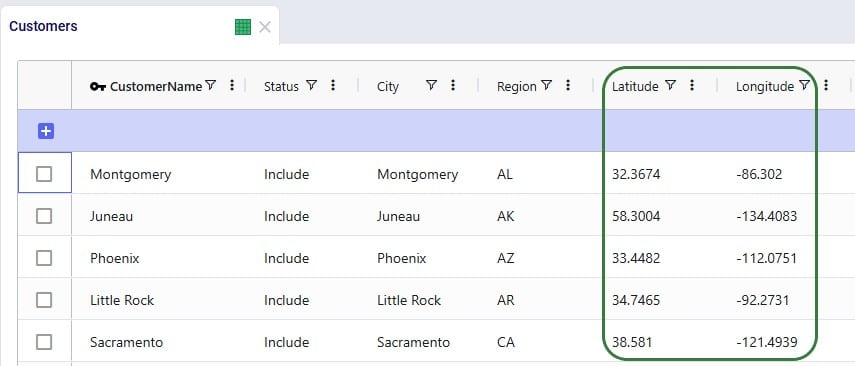

Geocoding a location (e.g. a customer, facility or supplier) means finding the latitude and longitude for it. Once a location is geocoded it can be shown on a map in the correct location which helps with visualizing the network itself and building a visual story using model inputs and outputs that are shown on maps.

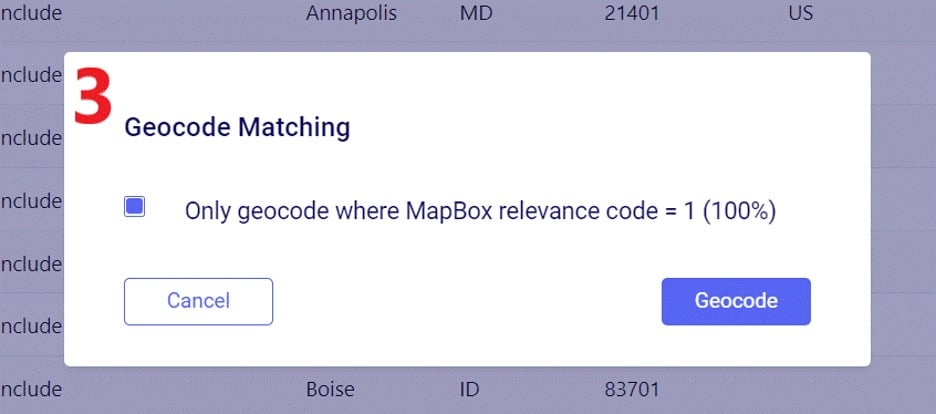

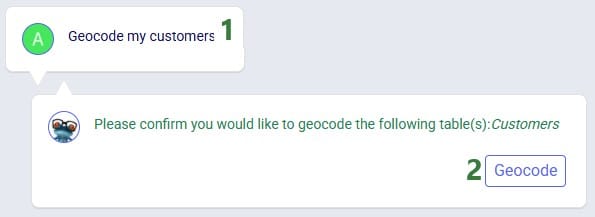

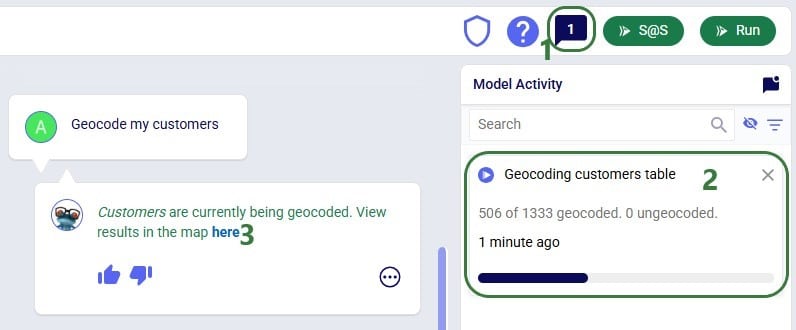

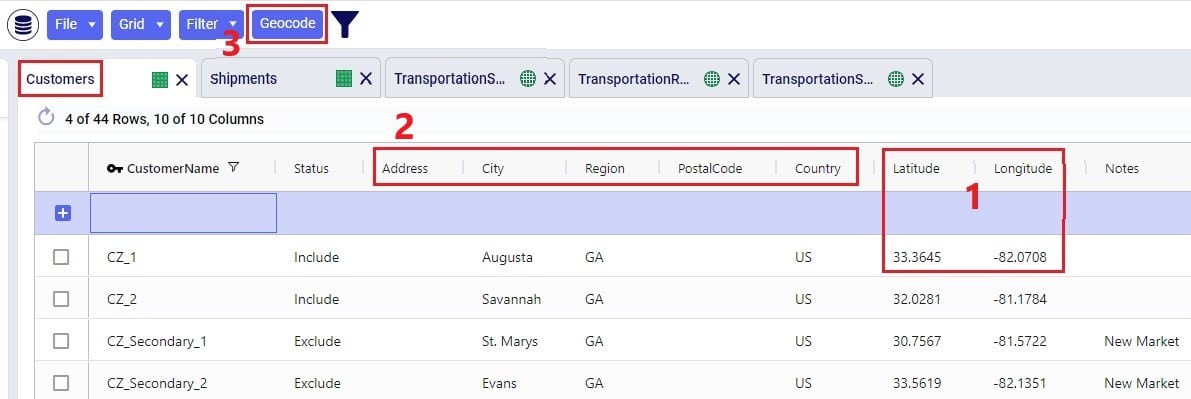

To geocode a location:

For costs and capacities to be calculated correctly, it may be necessary to add transport distances and transport times to Cosmic Frog models. Defaults are used if nothing is entered into the model; alternatively, users can populate these fields, either themselves or by using the Distance Lookup Utility. This section explains the tables where distances and times can be entered, what happens if nothing has been entered, and how users can utilize the Distance Lookup Utility.

There are multiple Cosmic Frog input tables that have input fields related to Transport Distance, and Transport Time, including Speed which can also be used to calculate transport time from a Transport Distance (time = distance / speed). These all have their own accompanying UOM (unit of measure) field. Here is an overview of the tables which contain Distance, Time and/or Speed fields:

For Optimization (Neo), this is the order of precedence that is applied when multiple tables and fields are used:

For Transportation (Hopper) models, this is the order of precedence when multiple tables and fields are being used:

To populate these input tables and their pertinent fields, users have the following options:

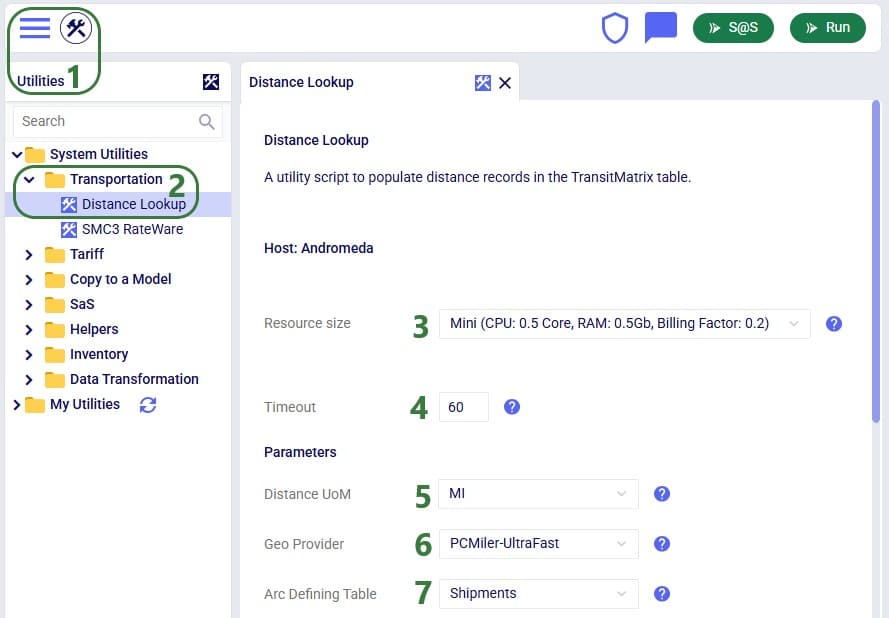

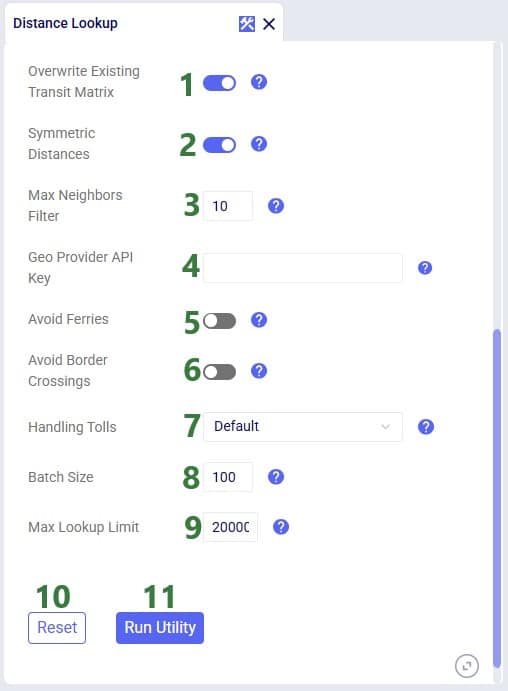

Cosmic Frog users can find multiple handy utilities in the Utilities section of Cosmic Frog; here we will cover the Distance Lookup utility. This utility looks up transportation distances and times for origin-destination pairs and populates the Transit Matrix table. Bing, PC Miler, and Azure can be used as Geo Providers if the user has a license key for them. In addition, there is a free PC Miler-UltraFast option which can look up accurate road distances within the EU and North America without needing a license key; it is also a very fast way to look up distances. A new free provider, OLRouting, has been added as well. It leverages Valhalla, an open source routing engine for OpenStreetMap, has global coverage, and also performs lookups very fast. Lastly, the Great Circle Geo Provider option calculates the straight-line distance for origin-destination pairs based on their latitudes and longitudes. We will look at the configuration options of the utility using the next 2 screenshots:

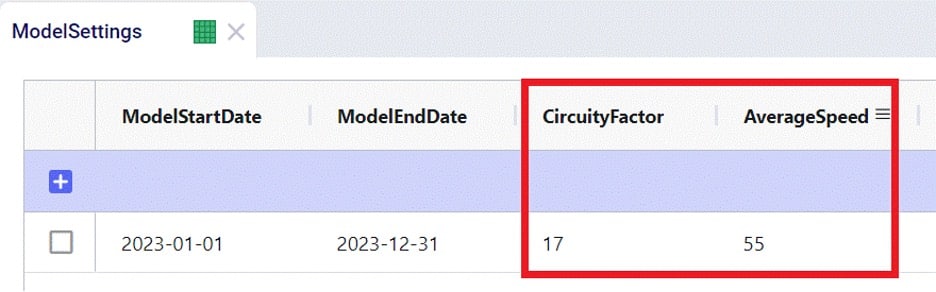

Note that when using the Great Circle geo provider for Distance calculations, only the Transport Distance field in the Transit Matrix table will be populated. The Transport Time will be calculated at run time using the Average Speed on the Model Settings table.
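To make the Great Circle option more concrete, here is a minimal Python sketch of a straight-line distance calculation followed by the time = distance / speed step. It assumes the haversine formula and a mean Earth radius of 3,958.8 miles; the function names, coordinates, and the 50 mph average speed are hypothetical, and Cosmic Frog's internal calculation may differ.

```python
import math

EARTH_RADIUS_MI = 3958.8  # mean Earth radius in miles (assumption)


def great_circle_miles(lat1, lon1, lat2, lon2):
    """Straight-line (great circle) distance via the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_MI * math.asin(math.sqrt(a))


def transport_time_hours(distance_miles, average_speed_mph):
    """Transport time derived from distance and speed (time = distance / speed)."""
    return distance_miles / average_speed_mph


# Hypothetical origin and destination coordinates (latitude, longitude)
origin = (39.10, -84.51)
destination = (40.72, -74.04)

distance = great_circle_miles(*origin, *destination)
time_hours = transport_time_hours(distance, average_speed_mph=50)  # hypothetical speed
print(f"{distance:.1f} miles, {time_hours:.1f} hours")
```

Because a great circle distance ignores the road network, it will generally be shorter than the road distance returned by a routing provider.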

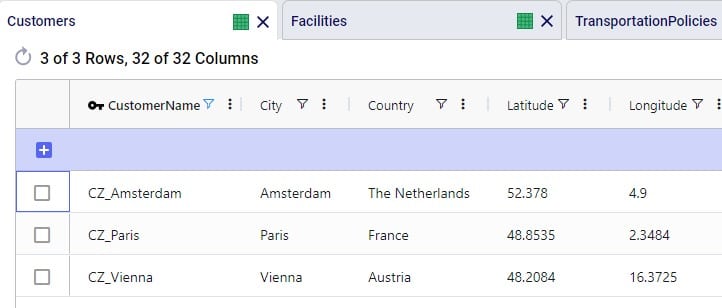

To finish up, we will walk through an example of using the Distance Lookup utility on a simple model with 3 customers (CZs) and 2 distribution centers (DCs), which are shown in the following 2 screenshots of the Customers and Facilities tables:

We can use Groups and/or Named Table Filters in the Transportation Policies table if we want to make 1 policy that represents all possible lanes from the DCs to the customers:

Next, we run the Distance Lookup utility with the following settings:

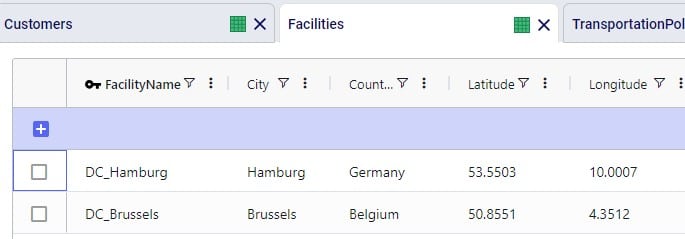

This results in the following 6 records being added to the Transit Matrix table - 1 for each possible DC-CZ origin-destination pair:

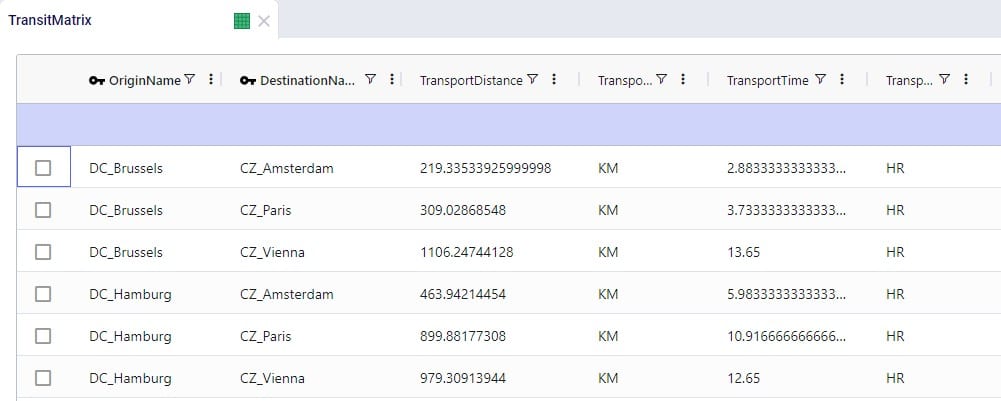

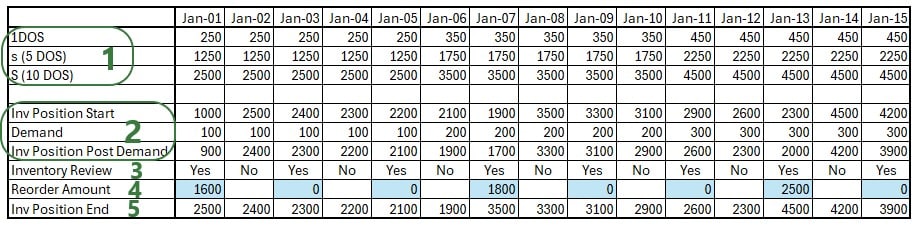

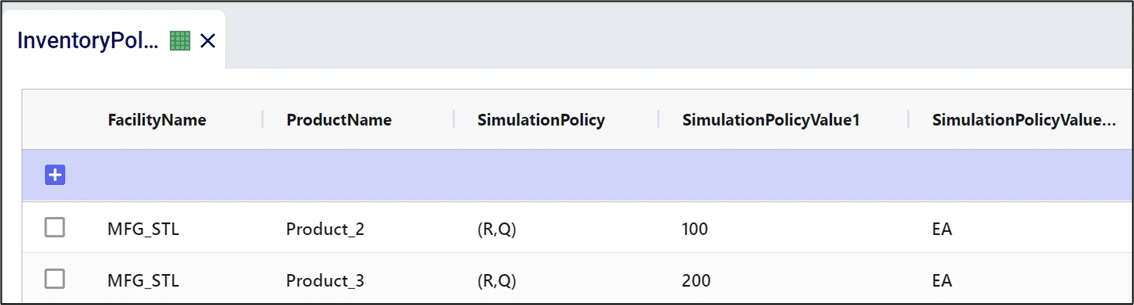

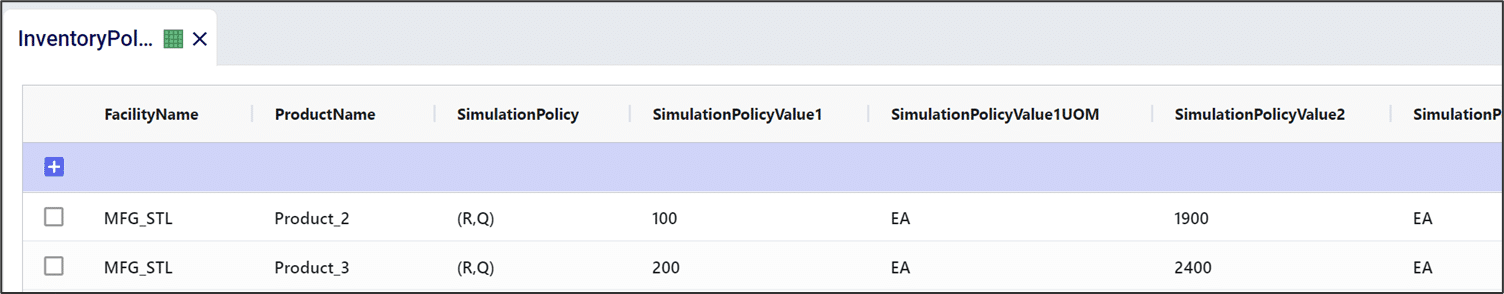

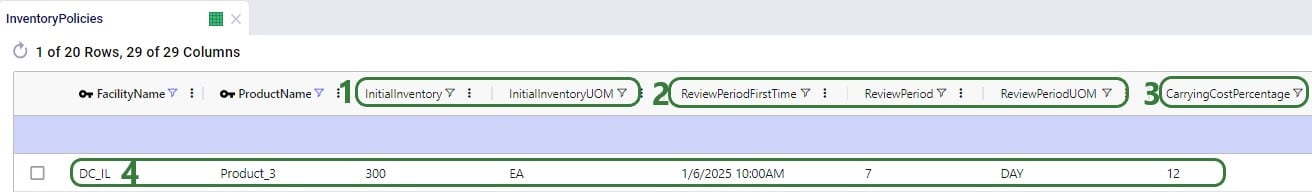
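The count of 6 records in the example above follows from pairing each of the 2 DCs with each of the 3 customers. As a small illustration in Python (the location names are hypothetical placeholders, not the names used in the example model):

```python
from itertools import product

# Hypothetical location names standing in for the 2 DCs and 3 customers
dcs = ["DC_1", "DC_2"]
customers = ["CZ_1", "CZ_2", "CZ_3"]

# One origin-destination pair per DC-customer combination
od_pairs = list(product(dcs, customers))

for origin, destination in od_pairs:
    print(origin, "->", destination)

print(len(od_pairs), "origin-destination pairs")
```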

When demand fluctuates, for example due to seasonality, it can be beneficial to manage inventory dynamically. This means that when the demand (or forecasted demand) goes up or down, the inventory levels go up or down accordingly. To support this in Cosmic Frog models, inventory policies can be set up in terms of days of supply (DOS): for example, for the (s,S) inventory policy, the Simulation Policy Value 1 UOM and Simulation Policy Value 2 UOM fields can be set to DOS. Say that reorder point s and order up to quantity S are set to 5 DOS and 10 DOS, respectively. This means that if the inventory falls to or below the level that is the equivalent of 5 days of supply, a replenishment order is placed for the amount of inventory needed to bring the level up to the equivalent of 10 days of supply. In this documentation we will cover the DOS-specific inputs on the Inventory Policies table, explain how the equivalent in units of 1 day of supply is calculated from these, and walk through a numbers example.

In short, using DOS lets users be flexible with policy parameters; it is a good starting point for estimating/making assumptions about how inventory is managed in practice.
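The (s,S) logic with DOS-based parameters described above can be sketched in a few lines of Python. This is only an illustration: the function name, the value of 120 units for 1 DOS, and the on-hand quantities are hypothetical, and the sketch checks on-hand inventory only, which is a simplifying assumption.

```python
def replenishment_order(on_hand_units, one_dos_units, s_dos=5, S_dos=10):
    """Order quantity under an (s,S) policy whose parameters are given in DOS.

    one_dos_units is the equivalent in units of 1 day of supply.
    """
    reorder_point = s_dos * one_dos_units   # s expressed in units
    order_up_to = S_dos * one_dos_units     # S expressed in units
    if on_hand_units <= reorder_point:
        # Inventory is at or below s: order enough to get back up to S
        return order_up_to - on_hand_units
    return 0


# Hypothetical value: 1 DOS is equivalent to 120 units
one_dos = 120
print(replenishment_order(on_hand_units=1000, one_dos_units=one_dos))  # above s = 600
print(replenishment_order(on_hand_units=550, one_dos_units=one_dos))   # at or below s = 600
```

When the value of 1 DOS changes with demand, the same 5 DOS and 10 DOS settings translate into different reorder points and order up to quantities in units, which is what makes the policy dynamic.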

It is recommended that you are already familiar with the Inventory Policies table in Cosmic Frog before diving into the details of this help article.

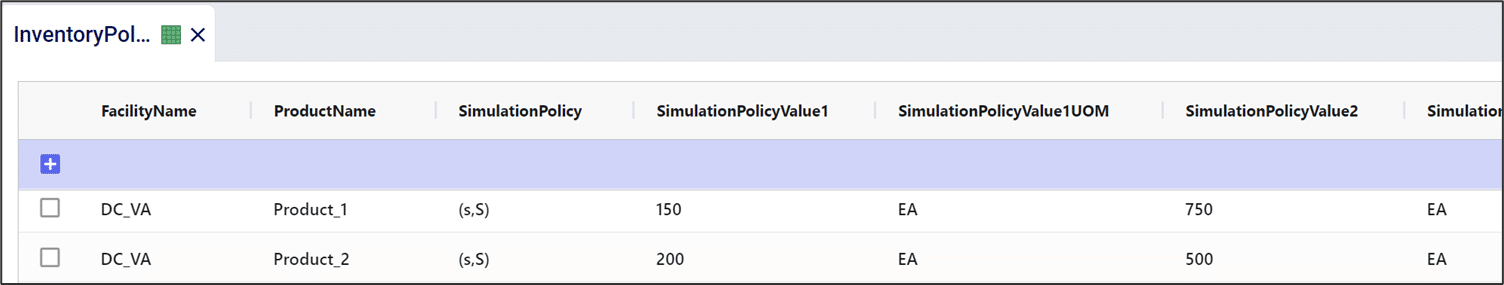

The following screenshot shows the fields that set the simulation inventory policy and its parameters:

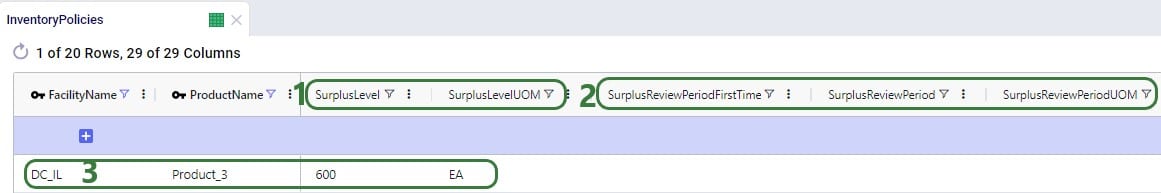

For the same inventory policy, the next screenshot shows the DOS-related fields on the Inventory Policies table; note that the UOM fields are omitted in this screenshot:

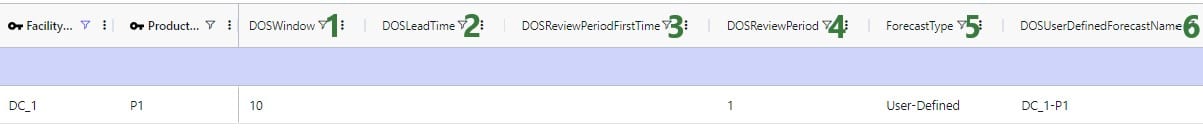

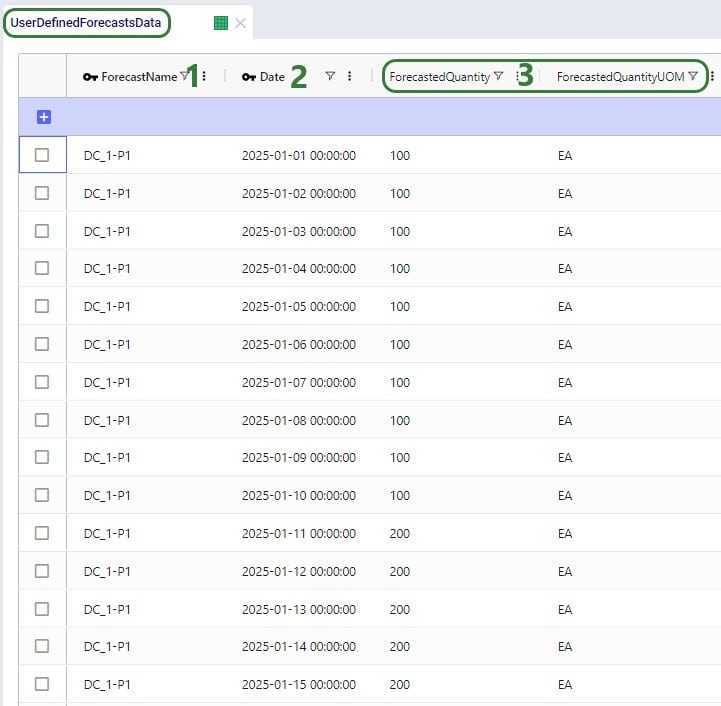

As mentioned above, when using forecasted demand for the DOS calculations, this forecasted demand needs to be specified in the User Defined Forecasts Data and User Defined Forecasts tables, which we will discuss here. This next screenshot shows the first 15 example records in the User Defined Forecasts Data table:

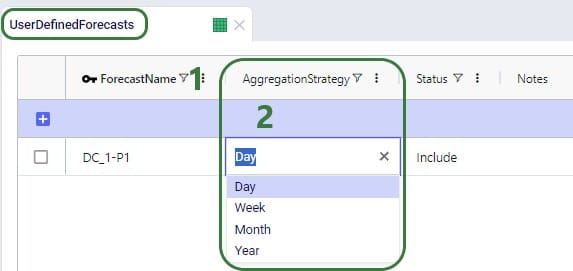

Next, the User Defined Forecasts table lets a user configure the time-period to which a forecast is aggregated:

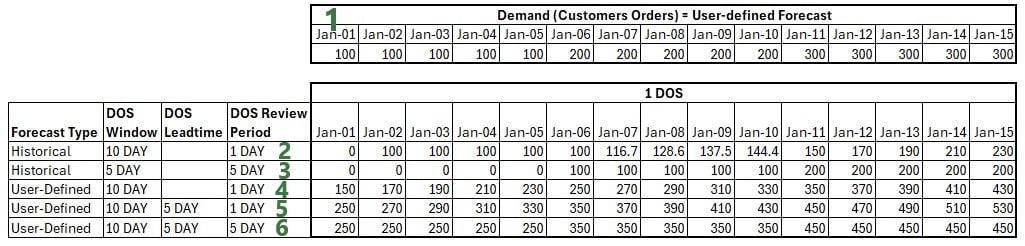

Let us now explain how the DOS calculations work for different DOS settings through the examples shown in the next screenshot. Note that for all these examples the DOS Review Period First Time field has been left blank, meaning that the first 1 DOS equivalent calculation occurs at the start of this model (on January 1st) for each of these examples:

Now that we know how to calculate the value of 1 DOS, we can apply this to inventory policies which use DOS as their UOM for the simulation policy value fields. We will do a numbers example with the one shown in the screenshot above (in the Days of Supply Settings section) where reorder point s is 5 DOS and order up to quantity S is 10 DOS. Let us assume the same settings as in the last example for the 1 DOS calculations in the screenshot above, explained in bullet #6 above: forecasted demand is used with a 10 day DOS Window, a 5 day DOS Leadtime, and a 5 day DOS Review Period, so the calculations for the equivalent of 1 DOS are the numbers in the last row shown in the screenshot, which we will use in our example below. In addition to this, we will assume a 2 day Review Period for the inventory policy, meaning inventory levels are checked every other day to see if a replenishment order needs to be placed. DC_1 also has 1,000 units of P1 on hand at the start of the simulation (specified in the Initial Inventory field):

Cosmic Frog’s new breakpoints feature enables users to create maps which relay even more supply chain data in just one glance. Lines and points can now be styled based on field values from the underlying input or output table the lines/points are drawn from.

In this Help Center article, we will cover where to find the breakpoints feature for both point and line layers and how to configure them. A basic knowledge of how to configure maps and their layers in Cosmic Frog is assumed; users unfamiliar with maps in Cosmic Frog are encouraged to first read the “Getting Started with Maps” Help Center article.

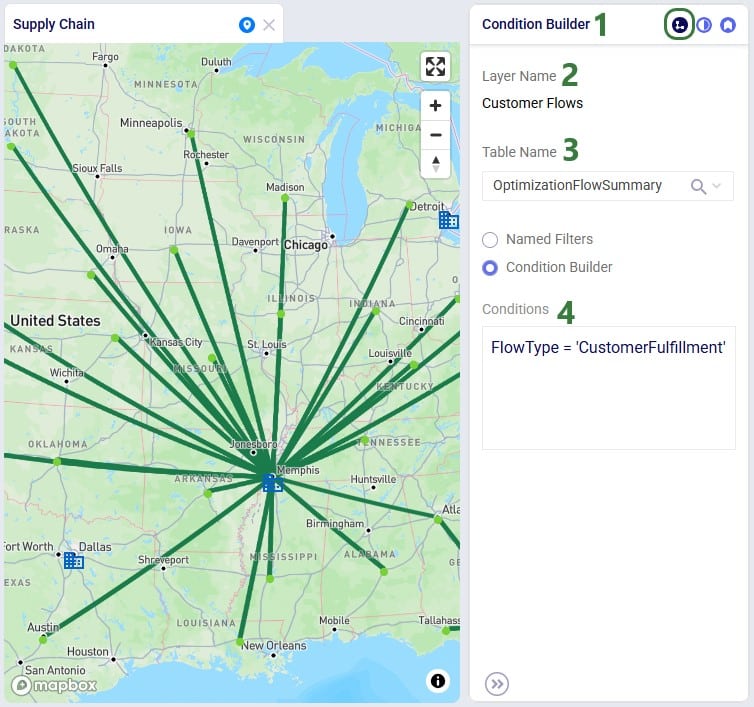

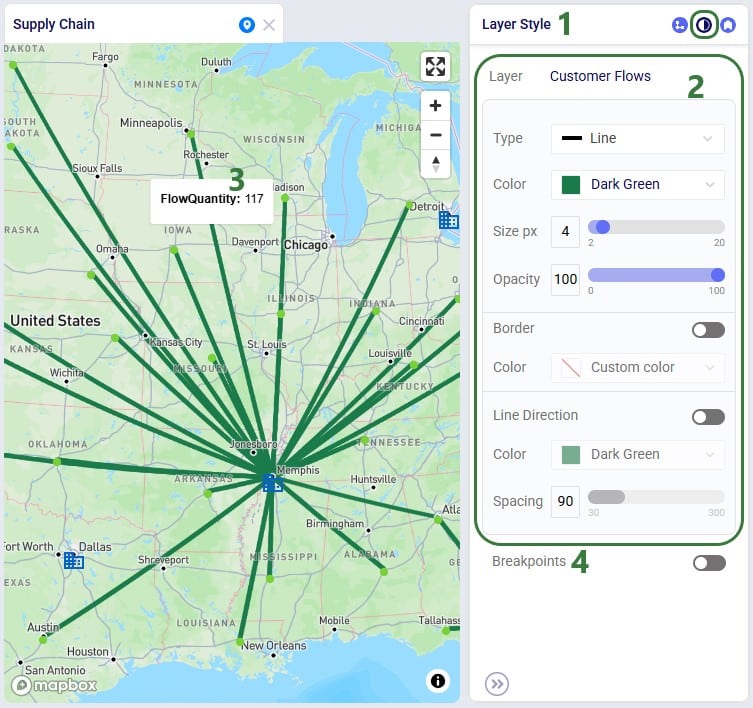

First, we will walk through how to apply breakpoints to map layers of type = line, which are often used to show flows between locations. With breakpoints we can style the lines between origins and destinations for example based on how much is flowing in terms of quantity, volume or weight. It is also possible to style the lines on other numeric fields, like costs, distances or time.

Consider the following map showing flows (dark green lines) to customers (light green circles):

Next, we will go to the Layer Style pane on which breakpoints can be turned on and configured:

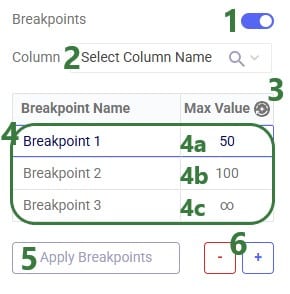

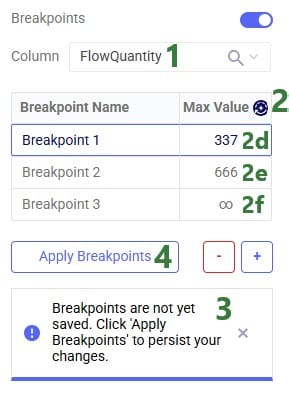

Once the Breakpoints toggle has been turned on (slide right, the color turns blue), the breakpoint configuration options become visible:

Note also that the Tab key can be used to navigate through the cells in the Breakpoints table.

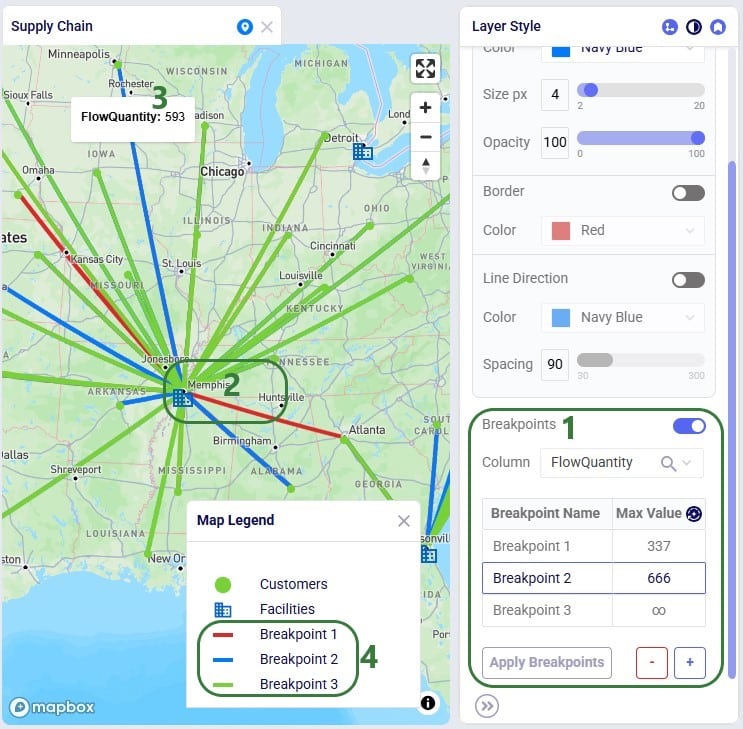

The next screenshot shows breakpoints based on the Flow Quantity field (in the Optimization Flow Summary) for which the Max Values have been auto generated:

Users can customize the style of each individual breakpoint:

Please note:

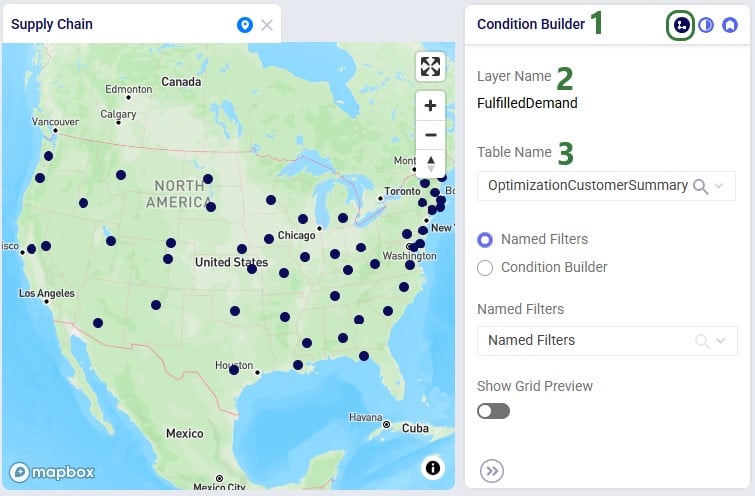

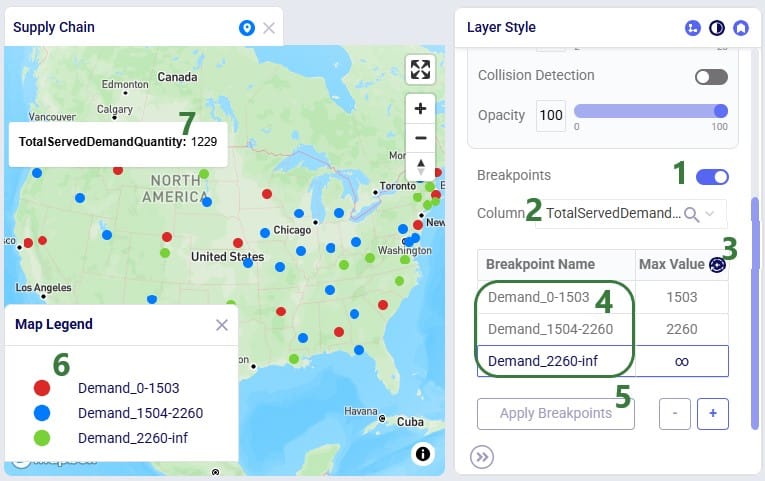

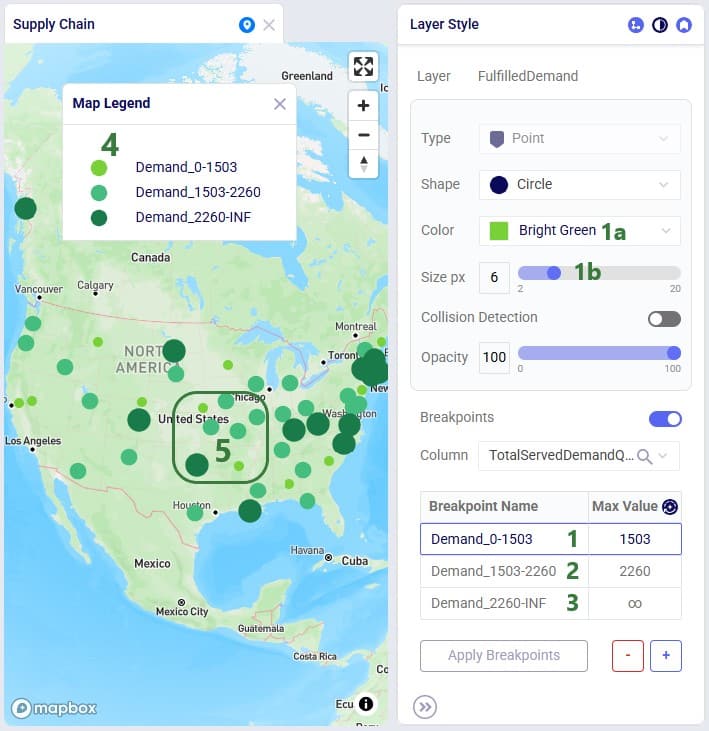
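The way breakpoints group a numeric field such as Flow Quantity can be sketched as follows. This Python sketch assumes that a value belongs to the first breakpoint whose Max Value is greater than or equal to it, and that values above the highest Max Value fall into the last breakpoint; both rules, as well as the Max Values and line widths used, are assumptions for illustration and may not match the exact behavior of Cosmic Frog.

```python
from bisect import bisect_left


def breakpoint_index(value, max_values):
    """Index of the breakpoint a value falls into.

    max_values must be sorted in ascending order. Values above the highest
    Max Value are placed in the last breakpoint (assumption).
    """
    index = bisect_left(max_values, value)  # first Max Value >= value
    return min(index, len(max_values) - 1)


# Hypothetical Max Values and line widths for three breakpoints
max_values = [1000, 5000, 20000]
line_widths = [1, 3, 6]

for flow_quantity in (250, 1000, 7500, 50000):
    index = breakpoint_index(flow_quantity, max_values)
    print(flow_quantity, "-> line width", line_widths[index])
```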

Configuring and applying breakpoints on point layers is very similar to doing so on line layers. We will walk through the steps in the next 4 screenshots in slightly less detail. In this example we will base the size of the customer locations on the map on the total demand they have been served:

Next, we again look at the Layer Style pane of the layer:

Lastly, the user would like the customer circles to gradually change from light to dark green and from small to bigger, based on the breakpoint each customer belongs to:

As always, please feel free to reach out to Optilogic support at support@optilogic.com should you have any questions.

For various reasons, many supply chains need to deal with returns. This can for example be due to packaging materials coming back to be reused at plants or DCs, retail customers returning finished goods that they are not happy with, defective products, etc. Previously, these returns could mostly be modelled within Cosmic Frog Neo (Network Optimization) models by using some tricks and workarounds. With the latest Cosmic Frog release, returns are now supported natively. This makes it easy to take into account the reuse, repurposing, or recycling of returned products, which can help companies reduce costs, minimize waste, and improve overall supply chain efficiency.

This documentation will first provide an overview of how returns work in a Cosmic Frog model and then walk through an example model of a retailer which includes modelling the returns of finished goods. The appendix details all the new returns-related fields in several new tables and some of the existing tables.

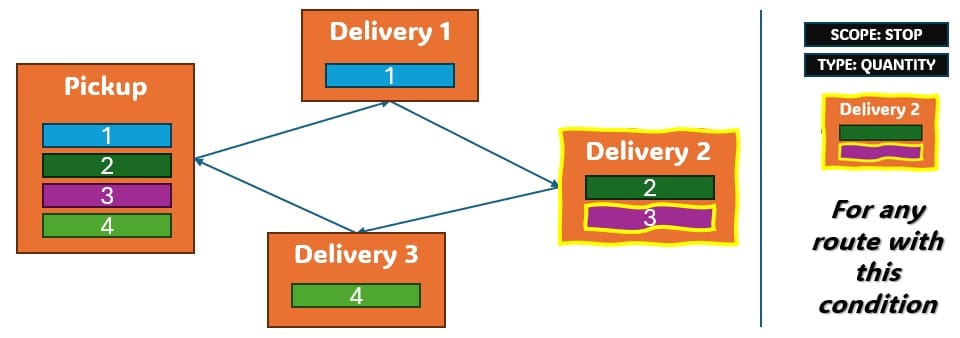

When modelling returns in Cosmic Frog:

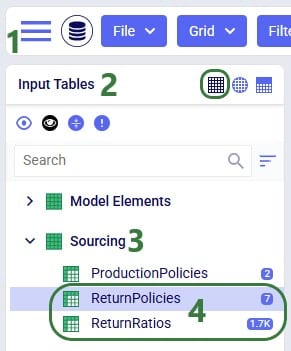

Users need to use 2 new input tables to set up returns:

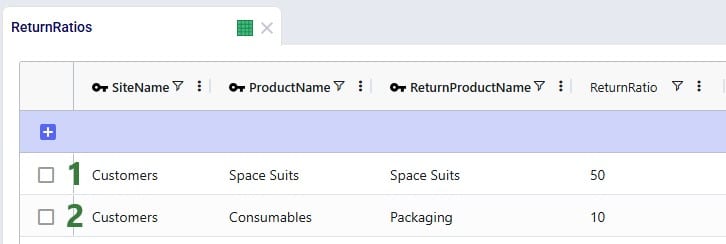

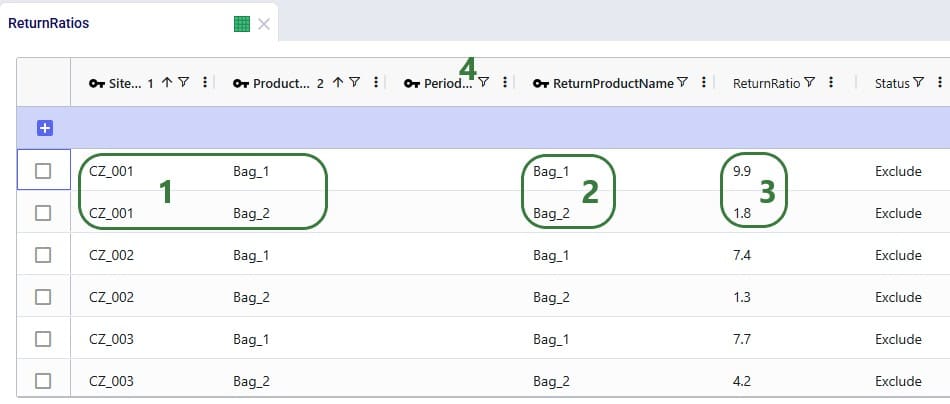

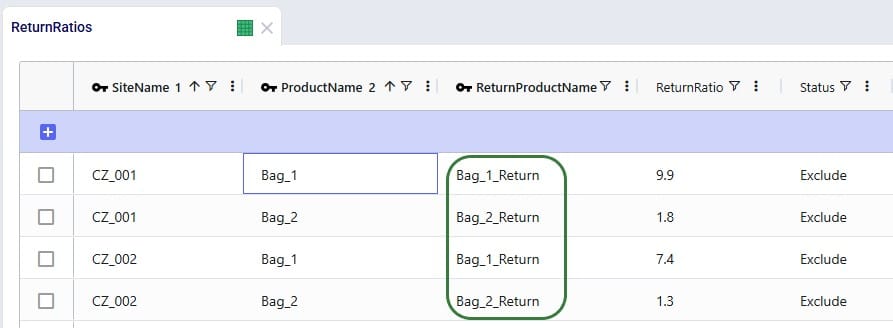

The Return Ratios table contains the information on how much return-product is returned for a certain amount of product delivered to a certain destination:

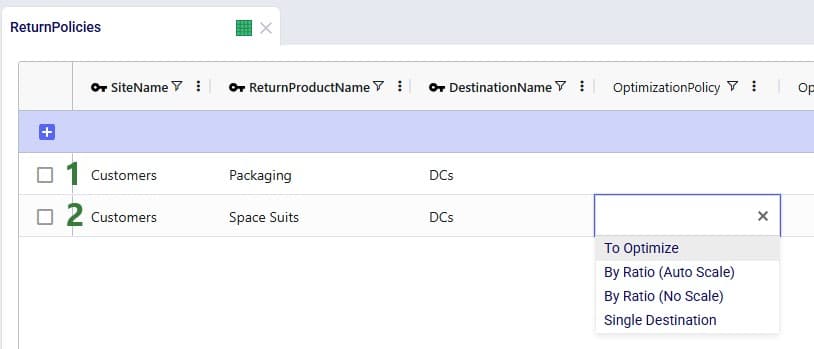

The Return Policies table is used to indicate where returned products need to go to and the rules around multiple possible destinations. Optionally, costs can be associated with the returns here and a maximum distance allowed for returns can be entered on this table too.

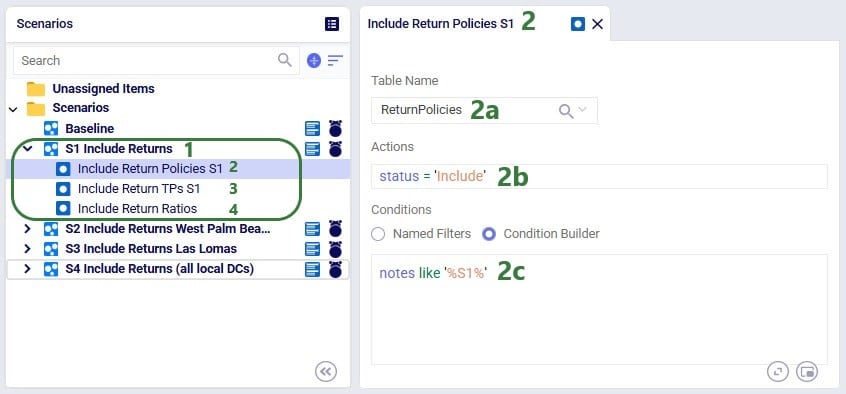

Note that both these tables have Status and Notes fields (not shown in the screenshots), like most Cosmic Frog input tables have. These are often used for scenario creation where the Status is set to Exclude in the table itself and changed to Include in select scenarios based on text in the Notes field.

All columns on these 2 returns-related input tables are explained in more detail in the appendix.

In addition to populating the Return Policies and Return Ratios tables, users need to be aware that additional model structure needed for the returned products may need to be put in place:

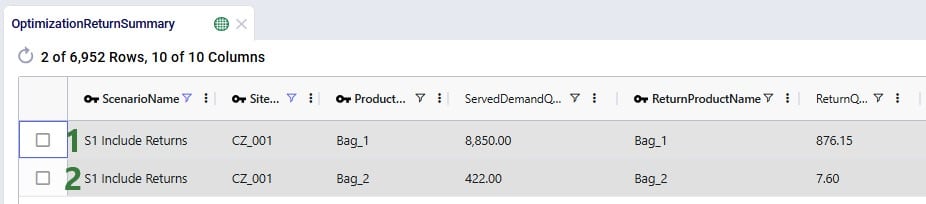

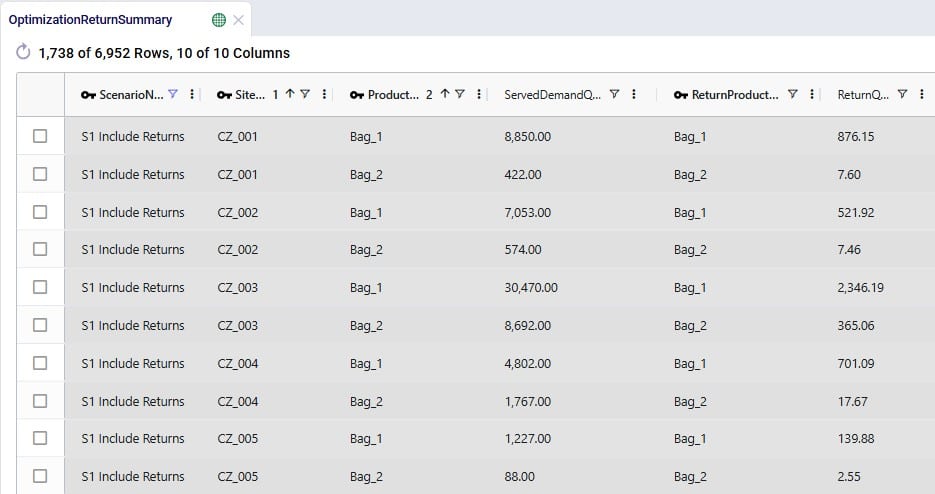

The Optimization Return Summary output table is a new output table that will be generated for Neo runs if returns are included in the modelling:

This table and all its fields are explained in detail in the appendix.

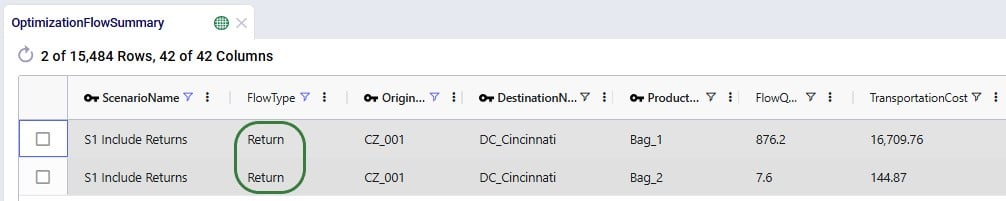

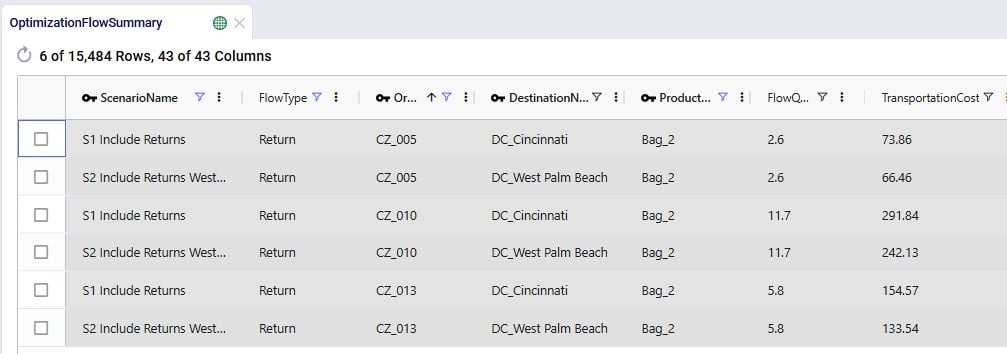

The Optimization Flow Summary output table will contain additional records for models that include returns; they can be identified by filtering the Flow Type field for “Return”:

These 2 records show the return flows and associated transportation costs for the Bag_1 and Bag_2 products from CZ_001, going to DC_Cincinnati, that we saw in the Optimization Return Summary table screenshot above.

In addition to the new Optimization Return Summary output table, and the new records of Flow Type = Return in the Optimization Flow Summary output table, the following existing output tables now contain additional fields related to returns:

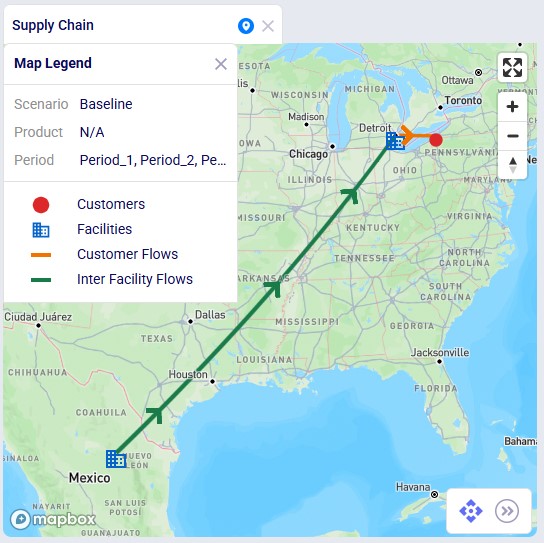

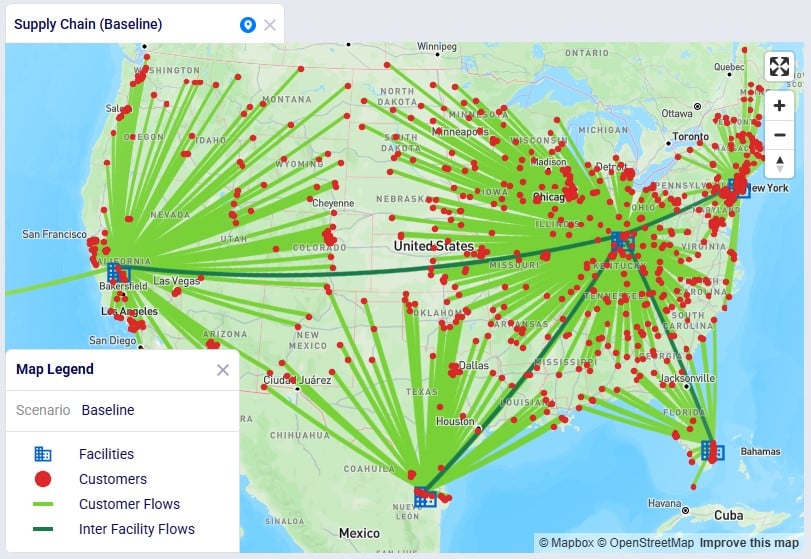

The example Returns model can be copied from the Resource Library to a user’s Optilogic account (see this help center article on how to use the Resource Library). It models the US supply chain of a fashion bag retailer. The model’s locations and flows both to customers and between DCs are shown in this screenshot (returns are not yet included here):

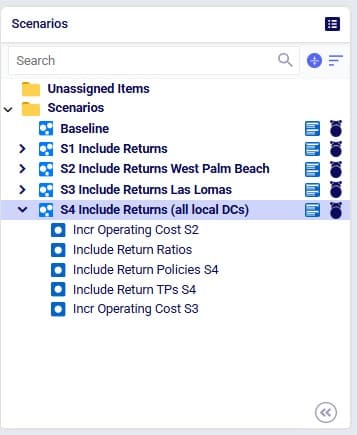

Historically, the retailer had 1 main DC in Cincinnati, Ohio, where all products were received and from which all 869 customers were served. Over time, 4 secondary DCs were added based on Greenfield analysis: 2 bigger ones in Clovis, California, and Jersey City, New Jersey, and 2 smaller ones in West Palm Beach, Florida, and Las Lomas, Texas. These secondary DCs receive product from the Cincinnati DC and serve their own set of customers. The main DC in Cincinnati and the 2 bigger secondary DCs (Clovis, CA, and Jersey City, NJ) can currently handle returns: returns are received there and re-used to fulfill demand. However, until now, these returns had not been taken into account in the modelling. In this model we will explore the following scenarios:

Other model features:

Please note that in this model the order of columns in the tables has sometimes been changed to put those containing data together on the left-hand side of the table. All columns are still present in the table but may be in a different position than you are used to. Columns can be reset to their default position by choosing “Reset Columns” from the menu that comes up when clicking on the icon with 3 vertical dots to the right of a column name.

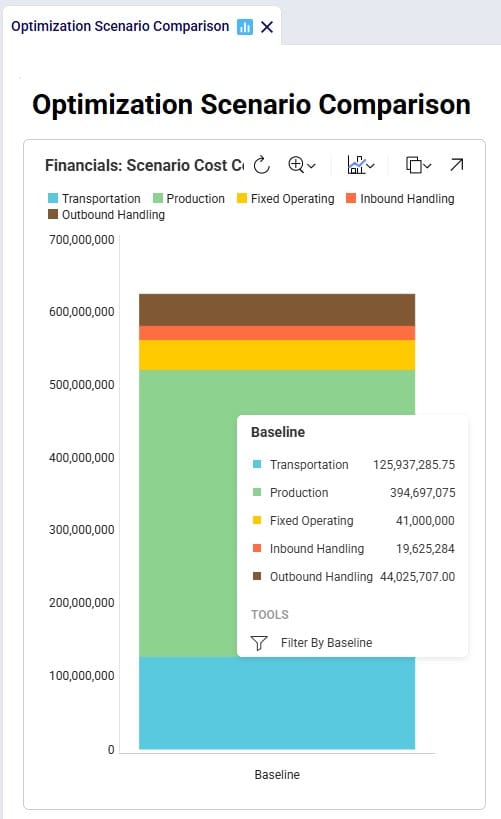

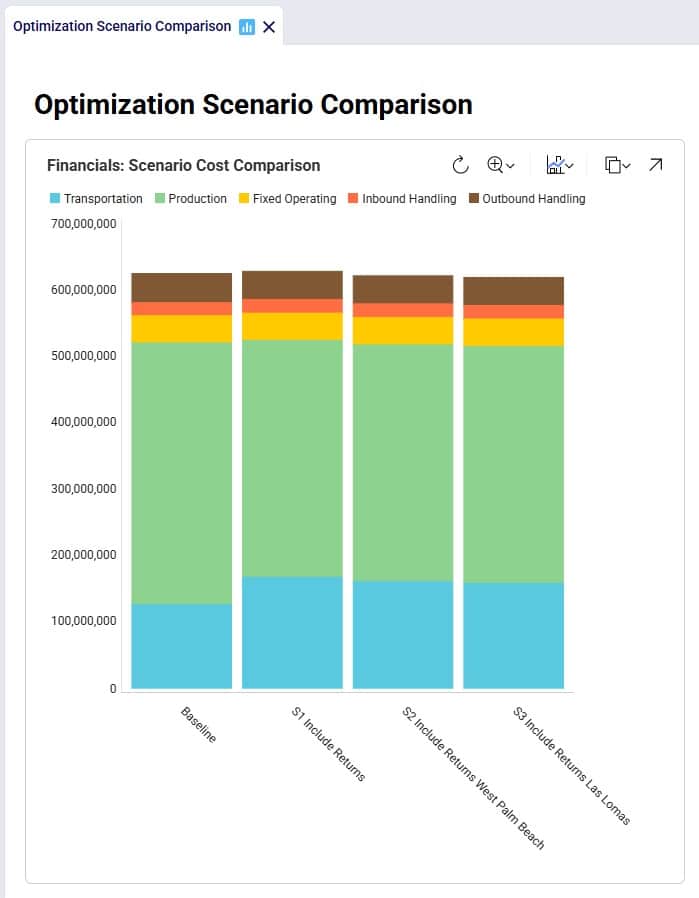

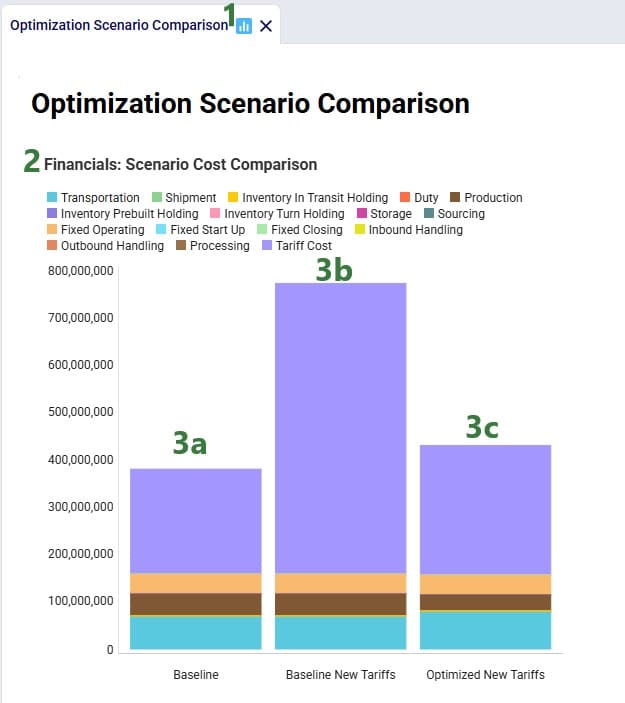

After running the baseline scenario (which does not include returns), we take a look at the Financials: Scenario Cost Comparison chart in the Optimization Scenario Comparison dashboard (in Cosmic Frog’s Analytics module):

We see that the biggest cost currently is the production cost at 394.7M (= procurement of all product into Cincinnati), followed by transportation costs at 125.9M. The total supply chain cost of this scenario is 625.3M.

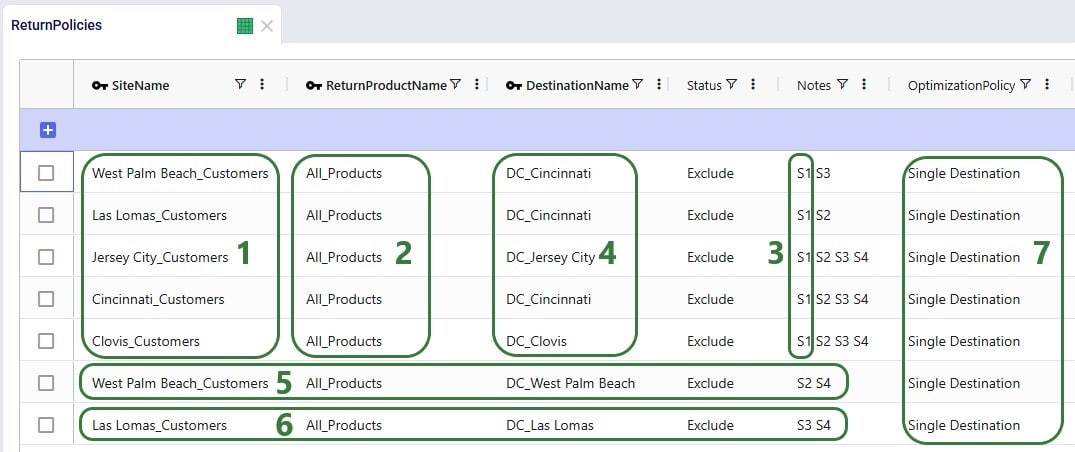

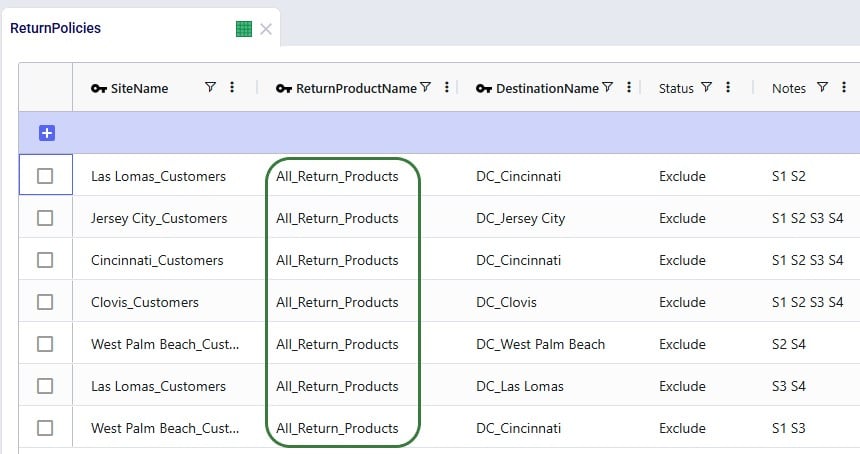

In this scenario we want to include how returns currently work: Cincinnati, Clovis, and Jersey City customers return their products to their local DCs whereas West Palm Beach and Las Lomas customers return their products to the main DC in Cincinnati. To set this up, we need to add records to the Return Policies, Return Ratios, and Transportation Policies input tables. To not change the Baseline scenario, all new records will be added with Status = Exclude, and the Notes field populated so it can be used to filter on in scenario items that change the Status to Include for subsets of records. Starting with the Return Policies table:

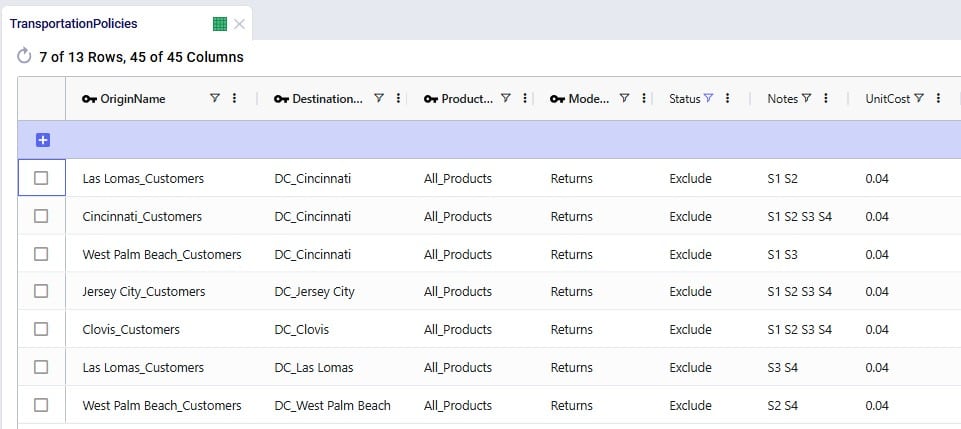

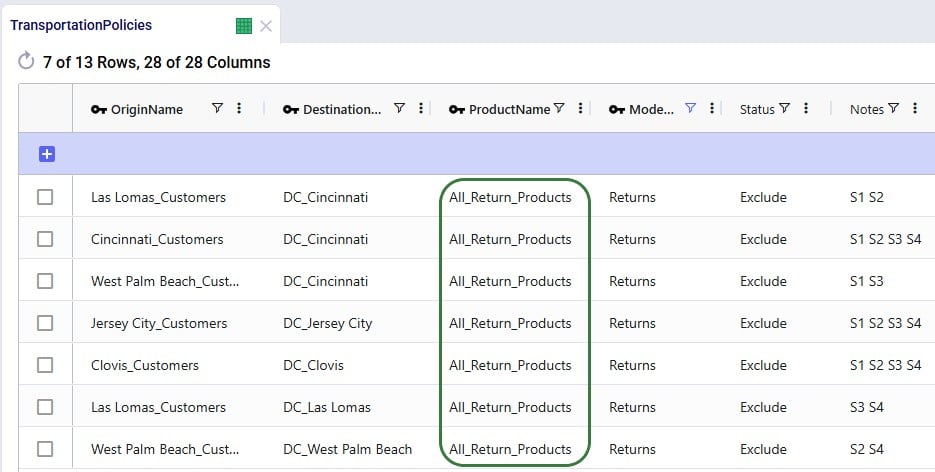

Next, we need to add records to the Transportation Policies table so that there is at least 1 lane available for each site-product-destination combination set up in the return policies table. For this example, we add records to the Transportation Policies table that match the ones added to the Return Policies table exactly, while additionally setting Mode Name = Returns, Unit Cost = 0.04 and Unit Cost UOM = EA-MI (the latter is not shown in the screenshot below), which means the transportation cost on return lanes is 0.04 per unit per mile:

Finally, we also need to indicate how much product is returned in the Return Ratios table. Since we want to model different ratios by individual customer and individual product, this table does not use any groups. Groups can however be used in this table too for the Site Name, Product Name, Period Name, and Return Product Name fields.

Please note that adding records to these 3 tables and including them in the scenarios is sufficient to capture returns in this example model. For other models it is possible that additional tables may need to be used, see the Other Input Tables section above.
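As a quick illustration of the per-unit-per-mile cost on the return lanes described above (Unit Cost = 0.04 with Unit Cost UOM = EA-MI), the transportation cost of a return flow can be sketched in Python as quantity times distance times unit cost. The function name and the example quantity and distance are hypothetical.

```python
RETURN_UNIT_COST = 0.04  # cost per unit per mile (EA-MI), from the return lanes above


def return_lane_cost(units, miles, unit_cost=RETURN_UNIT_COST):
    """Transportation cost of a return flow charged per unit per mile."""
    return units * miles * unit_cost


# Hypothetical return flow: 100 units travelling 500 miles
cost = return_lane_cost(units=100, miles=500)
print(f"Return transportation cost: {cost:,.2f}")
```

Since the cost scales linearly with distance, returns travelling long distances quickly become expensive relative to returns going to a nearby DC.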

Now that we have populated the input tables to capture returns, we can set up scenario S1 which will change the Status of the appropriate records in these tables from Exclude to Include:

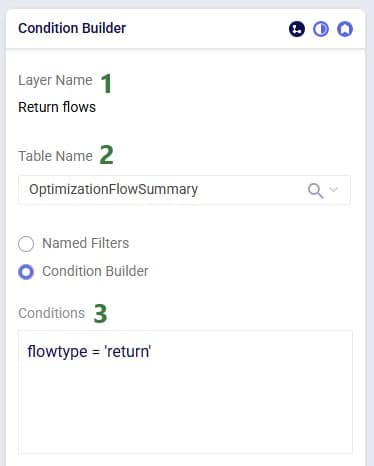

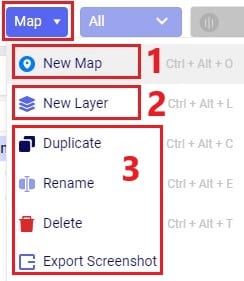

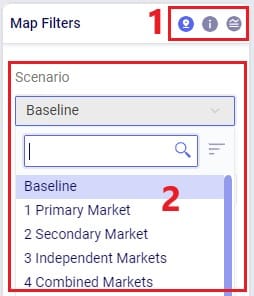

After running scenario S1, we first look at the map, which shows the DCs, Customers, and the Return Flows for scenario S1. This has been set up in the map named Supply Chain (S1) in the model from the Resource Library. To set this map up, we first copied the Supply Chain (Baseline) map and renamed it to Supply Chain (S1). We then clicked on the map’s name (Supply Chain (S1)) to open it and, in the Map Filters form on the right-hand side of the screen, changed the scenario to “S1 Include Returns” in the Scenario drop-down. To configure the Return Flows, we added a new Map Layer and configured its Condition Builder form as follows (learn more about Maps and how to configure them in this Help Center article):

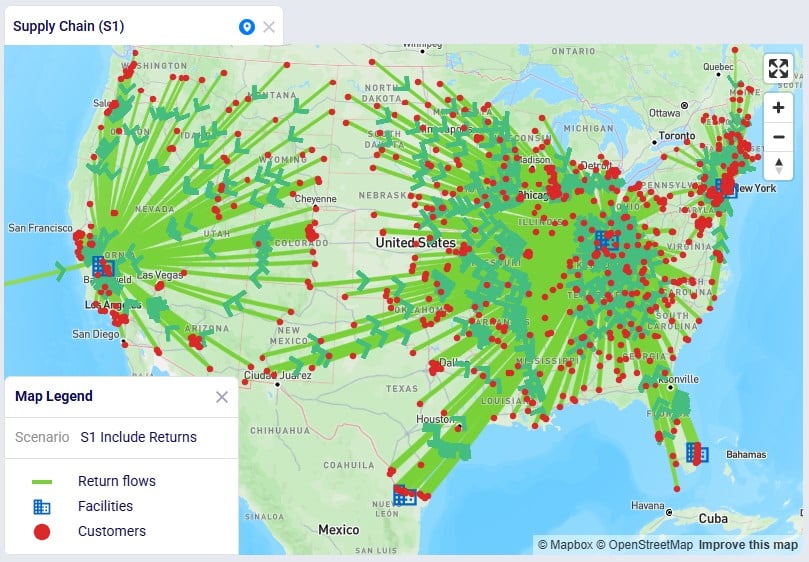

The resulting map is shown in this next screenshot:

We see that, as expected, the bulk of the returns are going back to the main DC in Cincinnati: from its local customers, but also from the customers served by the 2 smaller DCs in Las Lomas and West Palm Beach. The customers served by the Clovis and Jersey City DCs return their products to their local DCs.

To assess the financial impact of including returns in the model, we again look at the Financials: Scenario Cost Comparison chart in the Optimization Scenario Comparison dashboard, comparing the S1 scenario to the Baseline scenario:

We see that including returns in S1 leads to:

The main driver of the higher overall supply chain costs when including returns is the high transportation cost of returning products, especially for those travelling long distances from the Las Lomas and West Palm Beach customers to the Cincinnati DC. This sparks the idea to explore whether it would be more beneficial for the Las Lomas and/or West Palm Beach customers to return their products to their local DC, rather than to the Cincinnati DC. This will be modelled in the next three scenarios.

Building upon scenario S1, we will run 2 scenarios (S2 and S3) which examine whether it is beneficial cost-wise for West Palm Beach customers to return their products to their local West Palm Beach DC (S2) and for Las Lomas customers to return their products to their local Las Lomas DC (S3), rather than to the Cincinnati DC. To be able to handle returns, the fixed operating costs at these DCs are increased by 0.5M, from 3M to 3.5M:

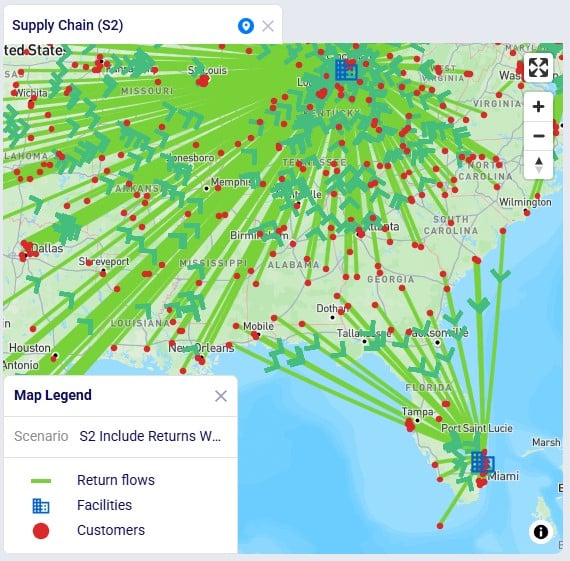

Scenarios S2 and S3 are run, and first we look at the map to check the return flows for the West Palm Beach and Las Lomas customers, respectively. To create these maps, we copied the map for S1, renamed it, and then changed the scenario by clicking on the map’s name and selecting the S2/S3 scenario from the Scenario drop-down in the Map Filters pane on the right-hand side:

As expected, due to how we set up these scenarios, now all returns from these customers go to their local DC, rather than to DC-Cincinnati which was the case in scenario S1.

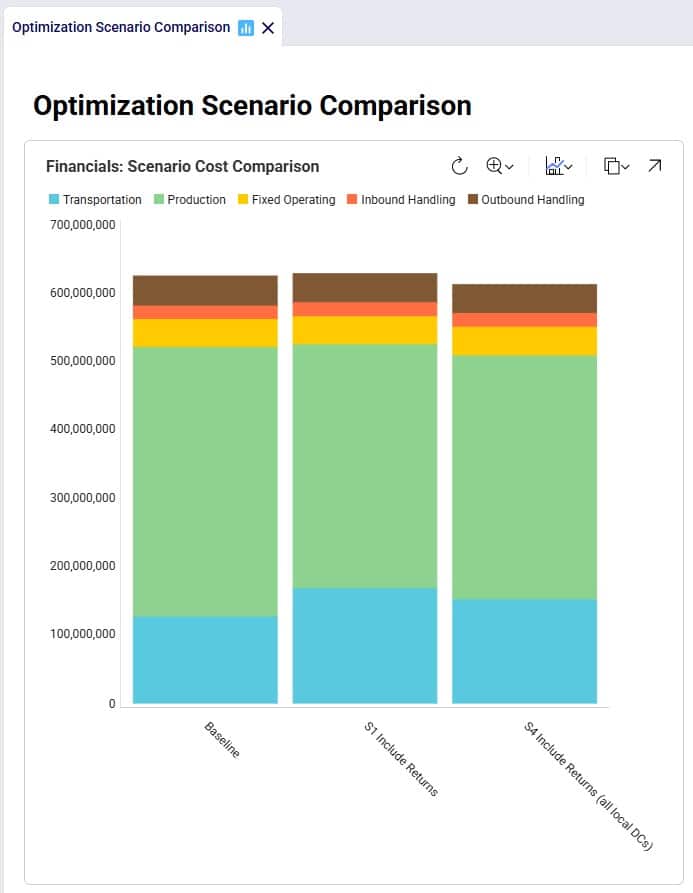

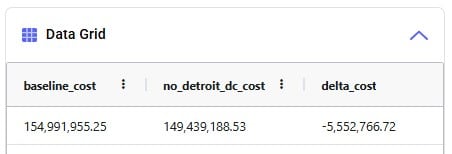

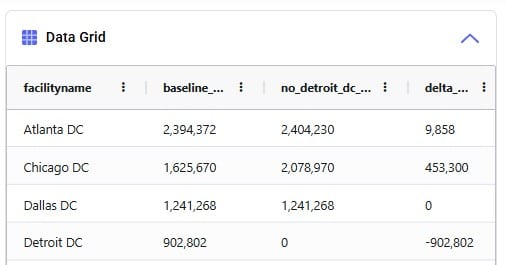

Let us next look at the overall costs for these 2 scenarios and compare them back to the S1 and Baseline scenarios:

Besides some smaller reductions in the inbound and outbound costs in S2 and S3 as compared to S1, the transportation costs are reduced by sizeable amounts: 6.9M (S2 compared to S1) and 9.4M (S3 compared to S1), while the production (= procurement) costs are the same across these 3 scenarios. The reduction in transportation costs outweighs the 0.5M increase in fixed operating costs to be able to handle returns at the West Palm Beach and Las Lomas DCs. Also note that both scenario S2 and S3 have a lower total cost than the Baseline scenario.
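The trade-off described above can be checked with a small calculation. This Python sketch uses only the figures quoted in the text (in millions) and, as a simplification, ignores the smaller reductions in inbound and outbound costs, so the actual net savings are somewhat higher than the values it computes.

```python
FIXED_COST_INCREASE_M = 0.5  # increase in fixed operating costs, in millions

# Reduction in transportation costs compared to S1, in millions
transport_reduction_m = {"S2": 6.9, "S3": 9.4}

# Net saving per scenario, ignoring the smaller inbound/outbound cost reductions
net_saving_m = {
    scenario: round(reduction - FIXED_COST_INCREASE_M, 1)
    for scenario, reduction in transport_reduction_m.items()
}

for scenario, saving in net_saving_m.items():
    print(f"{scenario}: net saving of about {saving}M compared to S1")
```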

Since it is beneficial to have the West Palm Beach and Las Lomas DCs handle returns, scenario S4 where this capability is included for both DCs is set up and run:

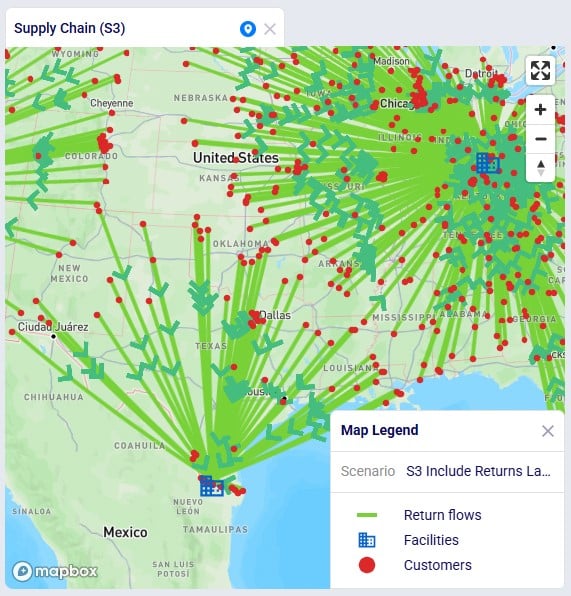

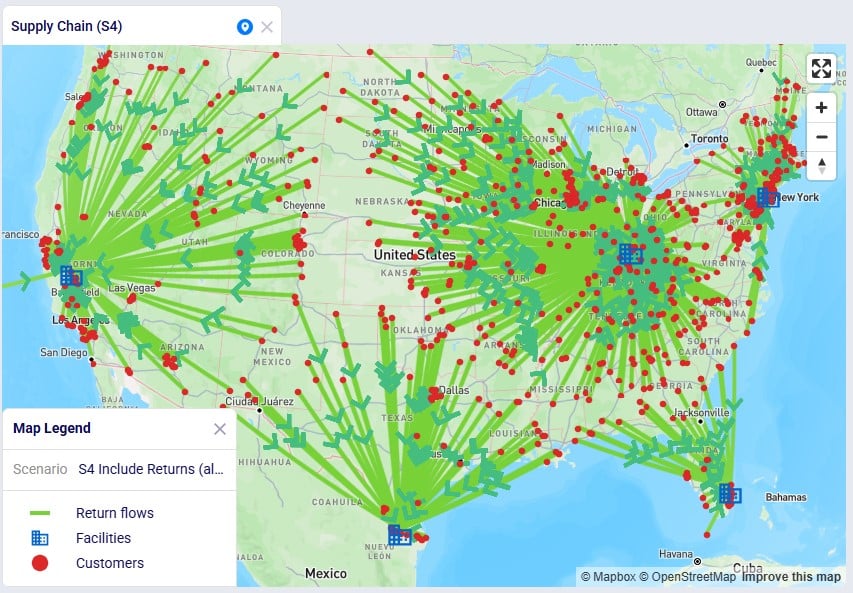

The S4 scenario increases the fixed operating costs at both these DCs from 3M to 3.5M (scenario items “Incr Operating Cost S2” and “Incr Operating Cost S3”), sets the Status of all records on the Return Ratios table to Include (the Include Return Ratios scenario item), and sets the Status to Include for records on the Return Policies and Transportation Policies tables where the Notes field contains the text “S4” (the “Include Return Policies S4” and “Include Return TPs S4” items), which are records where customers all ship their returns back to their local DC. We first check on the map if this is working as expected after running the S4 scenario:

We notice that indeed there are no more returns going back to the Cincinnati DC from Las Lomas or West Palm Beach customers.

Finally, we expect the costs of this scenario to be the lowest overall since we should see the combined reductions of scenarios S2 and S3:

Between S1 and S4:

In addition to looking at maps or graphs, users can also use the output tables to understand the overall costs and flows, including those of the returns included in the network.

Often, users will start by looking at the overall cost picture using the Optimization Network Summary output table, which summarizes total costs and quantities at the scenario level:

For each scenario, we are showing the Total Supply Chain Cost and Total Return Quantity fields here. As mentioned, the Baseline did not include any returns, whereas scenarios S1-4 did, which is reflected in the Total Return Quantity values. There are many more fields available on this output table, but in the next screenshot we are just showing the individual cost buckets that are used in this model (all other cost fields are 0):

How these costs increase/decrease between scenarios has been discussed above when looking at the “Financials: Scenario Cost Comparison” chart in the “Optimization Scenario Comparison” dashboard. In summary:

Please note that on this table, there is also a Total Return Cost field. It is 0 in this example model. It would be > 0 if the Unit Cost field on the Return Policies table had been populated, which is a field where any specific cost related to the return can be captured. In our example Returns model, the return costs are entirely captured by the transportation costs and fixed operating costs specified.

The Optimization Return Summary output table is a new output table that has been added to summarize returns at the scenario-returning site-product-return product-period level:

Looking at the first record here, we understand that in the S1 scenario, CZ_001 was served 8,850 units of Bag_1, while 876.15 units of Bag_1 were returned.
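The relationship between the delivered and returned quantities in this record can be verified with a short Python calculation. It assumes that the return quantity equals the delivered quantity multiplied by the return ratio; the ratio of 0.099 is inferred from the two quantities in this record and is not read from the Return Ratios table.

```python
import math


def return_quantity(delivered_units, return_ratio):
    """Returned units for a given delivered quantity and return ratio (assumed relationship)."""
    return delivered_units * return_ratio


delivered = 8850    # units of Bag_1 served to CZ_001 in S1
returned = 876.15   # units of Bag_1 returned by CZ_001 in S1

implied_ratio = returned / delivered
print(f"Implied return ratio: {implied_ratio:.3f}")

# Applying the inferred ratio reproduces the returned quantity
assert math.isclose(return_quantity(delivered, 0.099), returned)
```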

Lastly, we can also see individual return flows in the Optimization Flow Summary table by filtering the Flow Type field for “Return”:

Note that the product name for these flows is that of the product being returned.

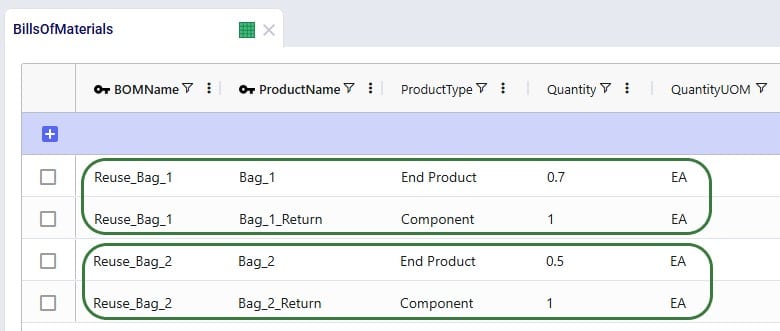

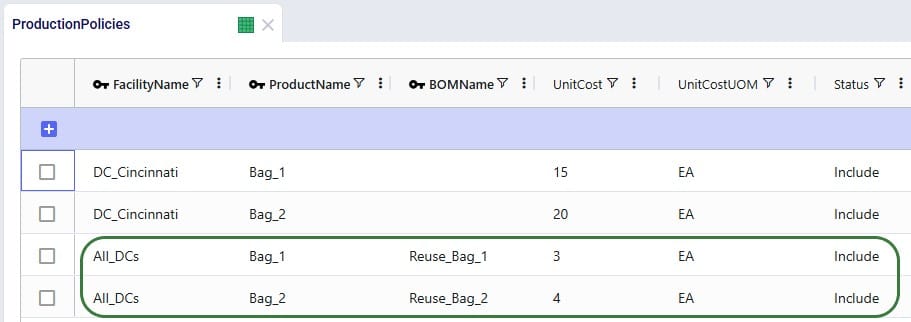

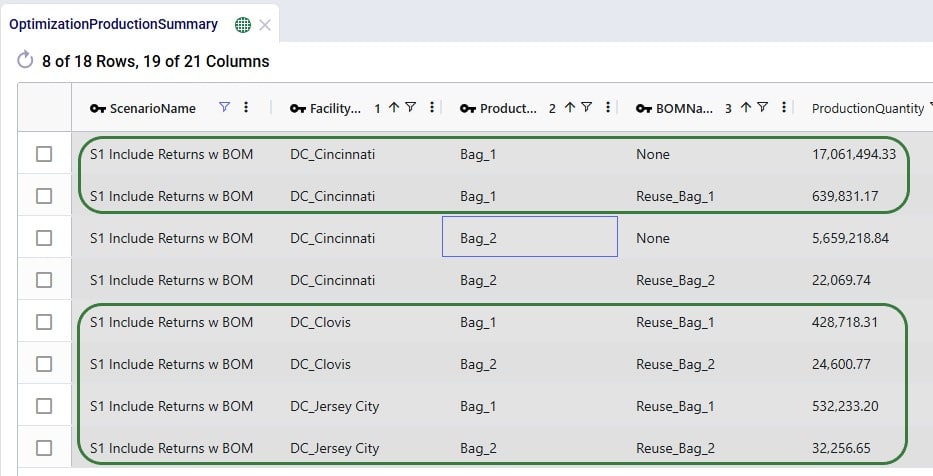

The example Returns model described above assumes that 100% of the returned Bag_1 and Bag_2 products can be reused. Here we will discuss through screenshots how the model can be adjusted to take into account that only 70% of Bag_1 returns and 50% of Bag_2 returns can be reused. To achieve this, we will need to add an additional “return” product for each finished good, set up bills of materials, and add records to the policies tables for the required additional model structure.

The tables that will be updated and for which we will see a screenshot each below are: Products, Groups, Return Policies, Return Ratios, Transportation Policies, Warehousing Policies, Bills of Materials, and Production Policies.
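Before going through the tables, the effect of the reuse percentages can be illustrated with a small Python sketch: only 70% of returned Bag_1 units and 50% of returned Bag_2 units become sellable again. The function name and the returned quantities are hypothetical; in the model itself this conversion is set up through the tables listed above rather than through code.

```python
# Share of returned units that can be reused, per finished good (from the text above)
REUSABLE_SHARE = {"Bag_1": 0.70, "Bag_2": 0.50}


def reusable_units(product, returned_units):
    """Returned units that can be converted back into sellable finished goods."""
    return returned_units * REUSABLE_SHARE[product]


# Hypothetical returned quantities
for product, returned in (("Bag_1", 1000), ("Bag_2", 1000)):
    print(product, "returned:", returned, "reusable:", reusable_units(product, returned))
```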

Two products are added here, 1 for each finished good: Bag_1_Return and Bag_2_Return. This way we can distinguish the return product from the sellable finished goods, apply different policies/costs to them, and convert a percentage back into the sellable items. The naming convention of adding “_Return” to the finished good name makes for easy filtering and provides clarity around what the product’s role is in the model. Of course, users can use different naming conventions.

The same unit value as for the finished goods is used for the return products, so that inventory carrying cost calculations are consistent. A unit price (again, same as the finished goods) has been entered too, but this will not actually be used by the model as these “_Return” products are not used to serve customer demand.

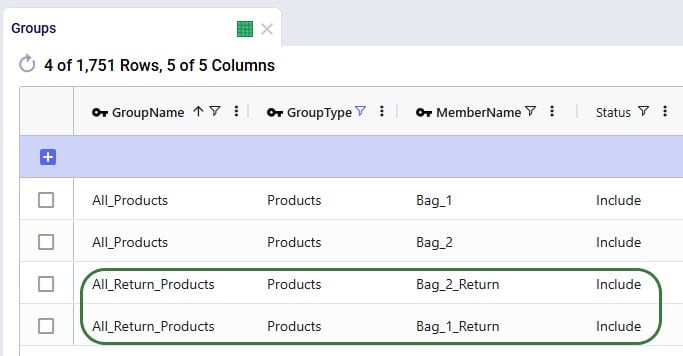

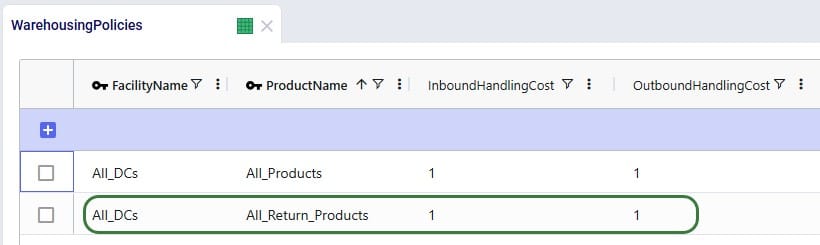

To facilitate setting up policies where the return products behave the same (e.g. same lanes, same costs, etc.), we add an “All_Return_Products” group to the Groups table, which consists of the 2 return products:

In the Return Policies table, the Return Product Name column needs to be updated to reflect that the products that are being returned are the “_Return” products. Previously, the Return Product Name was set to the All_Products group for each record, and it is now updated to the All_Return_Products group. Updating a field in all records or a subset of filtered records to the same value can be done using the Bulk Update Column functionality, which can be accessed by clicking on the icon with 3 vertical dots to the right of the column name and then choosing “Bulk Update this Column” in the list of options that comes up.

We keep the ratios of how much product comes back for each unit of Bag_1 / Bag_2 sold the same. However, we need to update the Return Product Name field on all records to reflect that it is the “_Return” product that comes back. Since this table does not use groups because the return ratios are different for different customer-finished good combinations, the best way to update this table is to also use the bulk update column functionality: