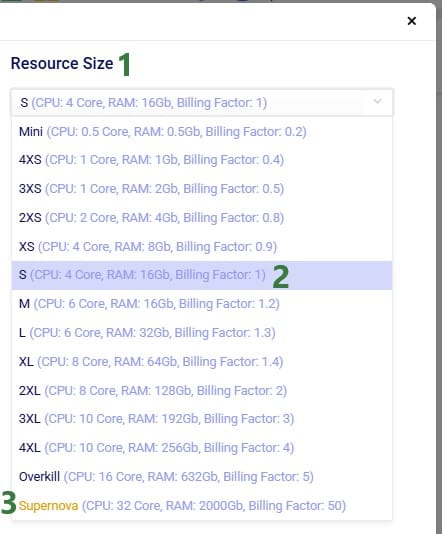

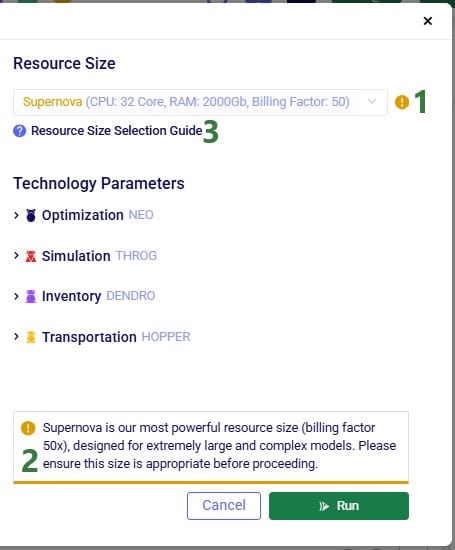

When running models in Cosmic Frog, users can choose the size of the resource that the model’s scenario(s) will run on, in terms of available memory (RAM in GB) and number of CPU cores. Depending on its complexity and the number of elements, policies, and constraints it contains, a model needs a certain amount of memory to run to completion. Bigger, more complex models typically need a resource with more memory (RAM) than smaller, less complex models. The bigger the resource, the higher its billing factor, and the more of the customer’s cloud compute hours a run consumes (the total amount of cloud compute time available is part of the customer’s Master License Agreement with Optilogic). Ideally, users choose a resource size that is just big enough to run their scenario(s) without running out of memory, which minimizes the amount of cloud compute time used. This document guides users in choosing an initial resource size and periodically re-evaluating it to make the best use of the customer’s available cloud compute time.

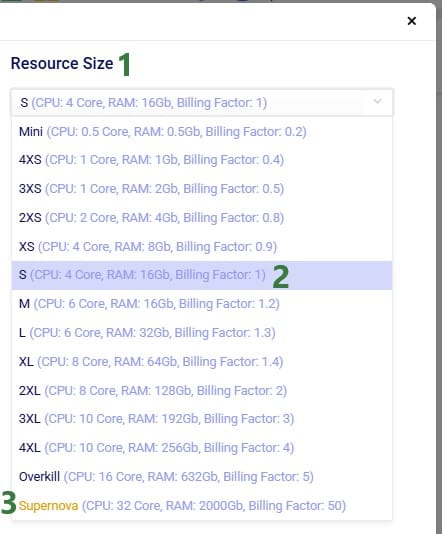

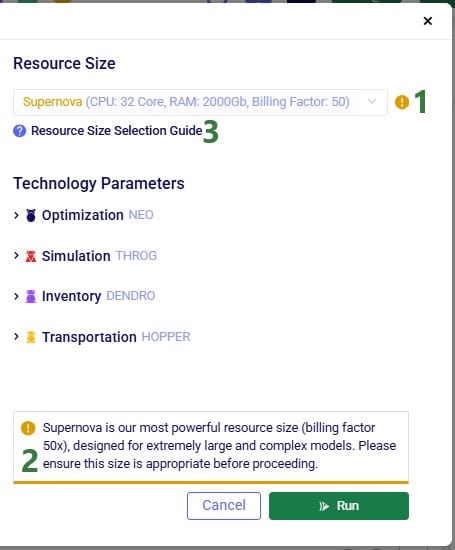

Once a model has been built and the user is ready to run one or more scenarios, they can click the green Run button at the top right of Cosmic Frog, which opens the Run Settings screen. The Run Settings screen is documented in the "Running Models & Scenarios in Cosmic Frog" Help Center article. On the right-hand side of the Run Settings screen, users can select the Resource Size to use for the scenario(s) being run:

In this section, we guide users in choosing an initial resource size for the different engines in Cosmic Frog, based on a few model properties. Before diving in, please keep the following in mind:

Quite a few model factors influence how much memory a scenario needs to solve in a Neo run. These include the number of model elements, policies, periods, and constraints. The type(s) of constraints used may play a role too. The main factors, in order of impact on memory usage, are:

These numbers are those after expansion of any grouped records and application of scenario items, if any.

The number of lanes can depend on the Lane Creation Rule setting in the Neo (Optimization) Parameters:

Note that for lane creation, the expansion of grouped records and the application of scenario item(s) also need to be taken into account to arrive at the number of lanes considered in the scenario run.

Users can use the following list to choose an initial resource size for Neo runs. First, multiply the number of demand records by the number of lanes in your model (both counted after expansion of grouped records and application of scenario items). Next, find the matching range in the list and use the associated recommended initial resource size:

# demand records * # lanes: Recommended Initial Resource Size
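The calculation described above can be sketched in a few lines of Python. This is only an illustration: the function name `neo_sizing_metric` and the example counts are hypothetical, and the counts are assumed to have been taken after grouped records are expanded and scenario items applied.

```python
def neo_sizing_metric(demand_records: int, lanes: int) -> int:
    """Return (# demand records * # lanes), the value to look up in the list."""
    if demand_records < 0 or lanes < 0:
        raise ValueError("counts must be non-negative")
    return demand_records * lanes


# Hypothetical counts for illustration only.
metric = neo_sizing_metric(demand_records=5_000, lanes=1_200)
print(f"{metric:,}")  # 6,000,000
```

The resulting number is then compared against the ranges in the list to find the recommended initial resource size.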

For Throg and Dendro runs, a good basis for selecting the initial resource size is the order of magnitude of the total number of policies in the model. To estimate this total, add up the number of policies contained in all policies tables. There are 5 policies tables in the Sourcing category (Customer Fulfillment Policies, Replenishment Policies, Production Policies, Procurement Policies, and Return Policies), 4 in the Inventory category (Inventory Policies, Warehousing Policies, Order Fulfillment Policies, and Inventory Policies Advanced), and the Transportation Policies table in the Transportation category. The policy count of each table should be the count after expansion of any grouped records and application of scenario items, if any. The list below shows the minimum recommended initial resource size for solving models with the Throg or Dendro engine, based on the total number of policies in the model.

Number of Policies: Minimum Resource
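As a rough sketch of the policy count described above, the per-table counts can be summed and reduced to an order of magnitude. The table names come from this article; the function names and the example counts are hypothetical, and each count is assumed to be taken after grouped records are expanded and scenario items applied.

```python
from typing import Mapping

# The 10 policies tables named in this article (Sourcing, Inventory, Transportation).
POLICY_TABLES = (
    "Customer Fulfillment Policies", "Replenishment Policies",
    "Production Policies", "Procurement Policies", "Return Policies",
    "Inventory Policies", "Warehousing Policies",
    "Order Fulfillment Policies", "Inventory Policies Advanced",
    "Transportation Policies",
)


def total_policies(counts: Mapping[str, int]) -> int:
    """Sum policy counts over all policies tables; missing tables count as 0."""
    unknown = set(counts) - set(POLICY_TABLES)
    if unknown:
        raise KeyError(f"not a policies table: {sorted(unknown)}")
    return sum(counts.get(table, 0) for table in POLICY_TABLES)


def order_of_magnitude(n: int) -> int:
    """Return floor(log10(n)) for positive n, computed exactly on integers."""
    return 0 if n <= 0 else len(str(n)) - 1


# Hypothetical counts for illustration only.
counts = {
    "Customer Fulfillment Policies": 8_000,
    "Replenishment Policies": 1_500,
    "Production Policies": 300,
    "Inventory Policies": 2_400,
    "Transportation Policies": 230,
}
total = total_policies(counts)
print(total, order_of_magnitude(total))  # 12430 4
```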

For Hopper runs, memory is the most important factor in choosing the right resource, and the main driver of memory requirements is the number of origin-destination (OD) pairs in the model. OD pairs are determined primarily by all possible facility-to-customer, facility-to-facility, and customer-to-customer lane combinations.

Most Hopper models have many more customers than facilities, so the number of customers in a model can often be used as a guide for resource size. The list below shows the minimum recommended initial resource size to solve models using Hopper.

Customers: Minimum Resource Size
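To see why the customer count is a reasonable proxy, the OD pair count can be estimated under simplifying assumptions. The sketch below is not how Hopper itself builds its OD pairs: it assumes every facility-to-customer, facility-to-facility, and customer-to-customer combination is possible, counts ordered pairs, and excludes a location paired with itself. The function name and the example counts are hypothetical.

```python
def estimate_od_pairs(facilities: int, customers: int) -> dict:
    """Upper-bound estimate of OD pairs, assuming all lane combinations exist."""
    f2c = facilities * customers          # facility-to-customer
    f2f = facilities * (facilities - 1)   # facility-to-facility, no self-pairs
    c2c = customers * (customers - 1)     # customer-to-customer, no self-pairs
    return {
        "facility_to_customer": f2c,
        "facility_to_facility": f2f,
        "customer_to_customer": c2c,
        "total": f2c + f2f + c2c,
    }


# Hypothetical model with 5 facilities and 1,000 customers.
pairs = estimate_od_pairs(facilities=5, customers=1_000)
share = pairs["customer_to_customer"] / pairs["total"]
print(pairs["total"])   # 1004020
print(f"{share:.1%}")   # 99.5%
```

Under these assumptions the customer-to-customer term grows with the square of the customer count and dominates the total, which is consistent with using the number of customers as the sizing guide.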

Most Triad models should solve very quickly, typically under 10 minutes. Still, choosing the right resource size will ensure your Triad model solves successfully, without paying for unneeded compute resources.

As with Hopper, memory is the most important factor in resource selection. In Triad, the main driver of memory requirements is the number of customers, with a smaller secondary effect from the number of greenfield facilities.

The list below shows the minimum recommended initial resource size to solve models using Triad where the number of facilities is assumed to be between 1 and 10:

Customers: Minimum Resource Size

Please note:

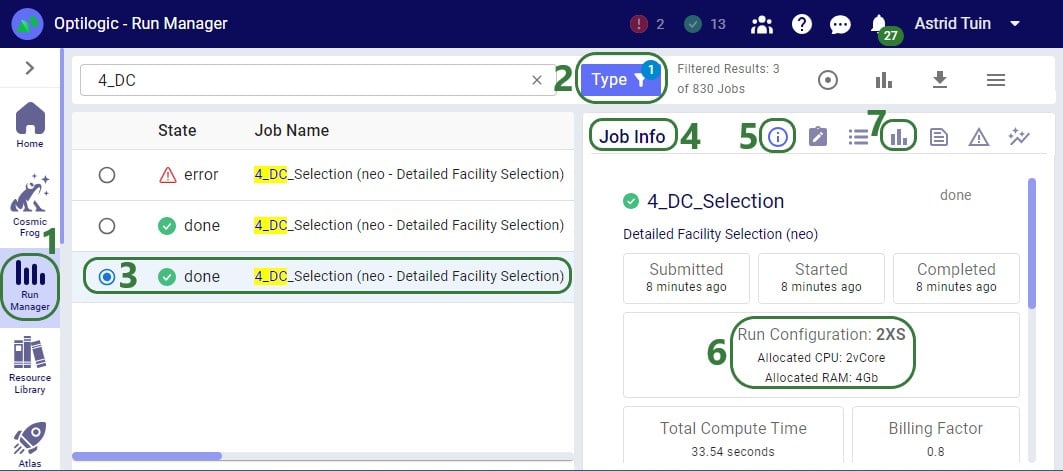

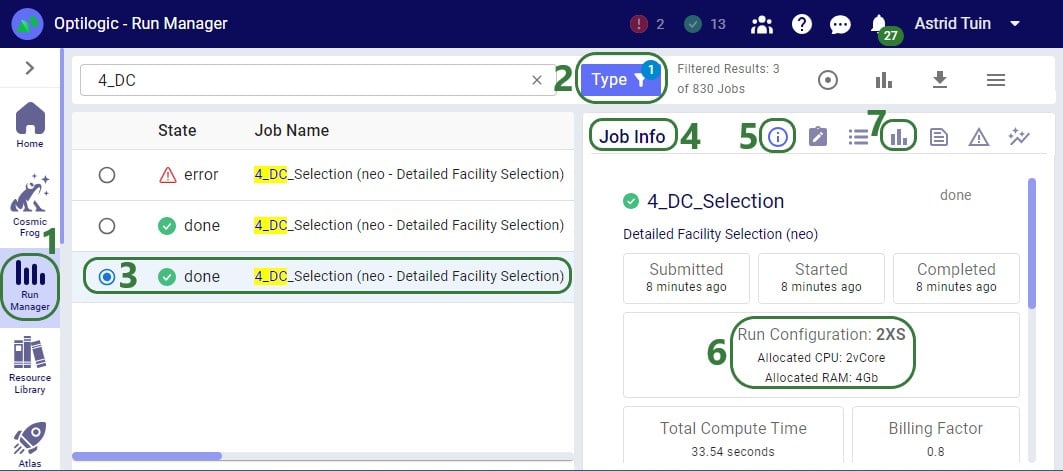

After running a scenario with the initially selected resource size, users can evaluate whether it is the best resource size to use or whether a smaller or larger one is more appropriate. The Run Manager application on Optilogic’s platform can be used to assess resource size:

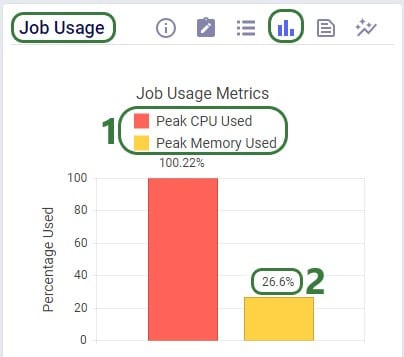

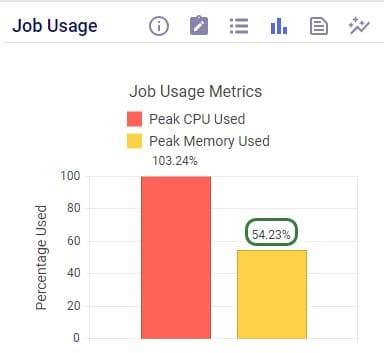

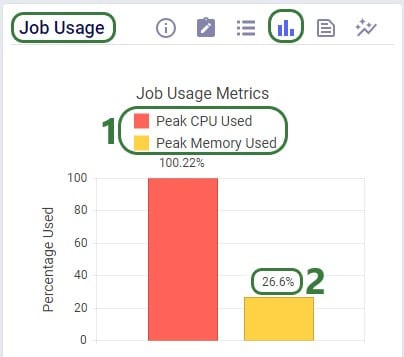

Knowing that the RAM required at peak usage is just over 1 GB, we can conclude that going down to resource size 3XS, which has 2 GB of RAM available, should still work for this scenario. Going further down to 4XS, which has 1 GB of RAM available, is expected to fail because the scenario will likely run out of memory. We can test this with 2 additional runs. These are the Job Usage Metrics after running with resource size 3XS:

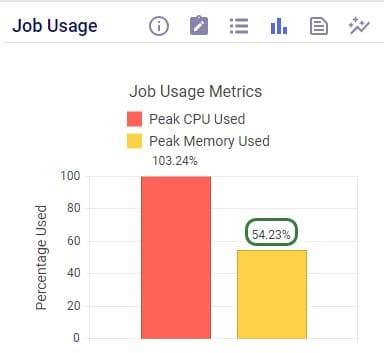

As expected, the scenario runs fine, and the memory usage is now at about 54% (of 2 GB) at peak usage.

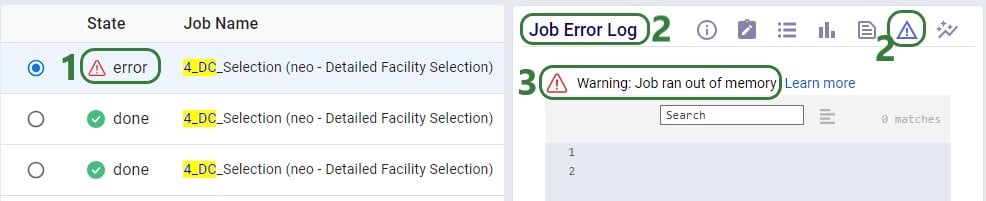

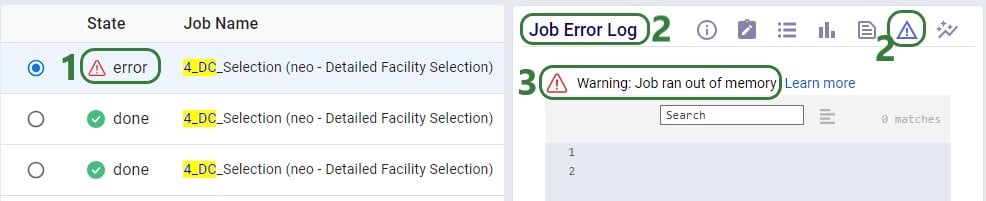

Trying with resource size 4XS results in an error:

Note that when a scenario runs out of memory like this one, there are no results for it in the output tables in Cosmic Frog if it is the first time the scenario is run. If the scenario has been run successfully before, the previous results will still be in the output tables. To confirm that a scenario has run successfully within Cosmic Frog, users can check the timestamp of the outputs in the Optimization Network Summary (Neo), Transportation Summary (Hopper), or Optimization Greenfield Output Summary (Triad) output tables, or review the number of error jobs versus done jobs at the top of Cosmic Frog (see next screenshot). If either of these 2 checks indicates that the scenario may not have run, double-check in the Run Manager and review the logs there to find the cause.

In the status bar at the top of Cosmic Frog, users can see that there were 2 error jobs and 13 done jobs within the last 24 hours.

In conclusion, for this scenario we started with a 2XS resource size. Using the Run Manager, we reviewed the percentage of memory used at peak usage in the Job Usage Metrics and concluded that a smaller 3XS resource size with 2 GB of RAM should still work fine for this scenario, but an even smaller 4XS resource size with 1 GB of RAM would be too small. Test runs using the 3XS and 4XS resource sizes confirmed this.
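The reasoning in this walkthrough can be sketched as a small calculation: convert the peak memory percentage reported in the Job Usage Metrics into GB, then pick the smallest resource size whose RAM exceeds that peak. The sketch below is illustrative only. It lists just the two sizes whose RAM is stated in this article (4XS with 1 GB and 3XS with 2 GB), the function names are hypothetical, and it assumes peak usage stays the same when the scenario is rerun on a different size, with no extra headroom added.

```python
from typing import Mapping, Optional

# Only the resource sizes whose RAM is stated in this article.
RESOURCE_RAM_GB = {"4XS": 1.0, "3XS": 2.0}


def peak_memory_gb(percent_used: float, resource_ram_gb: float) -> float:
    """Convert peak memory usage in percent of a resource's RAM into GB."""
    return percent_used / 100 * resource_ram_gb


def smallest_fitting_size(peak_gb: float, sizes: Mapping[str, float]) -> Optional[str]:
    """Return the size with the least RAM that still exceeds peak_gb, if any."""
    fitting = [(ram, name) for name, ram in sizes.items() if ram > peak_gb]
    return min(fitting)[1] if fitting else None


# About 54% of the 2 GB available on 3XS was used at peak.
peak = peak_memory_gb(percent_used=54, resource_ram_gb=RESOURCE_RAM_GB["3XS"])
print(f"{peak:.2f} GB")                              # 1.08 GB
print(smallest_fitting_size(peak, RESOURCE_RAM_GB))  # 3XS
```

In practice, leaving some headroom above the observed peak may be prudent, since memory usage can change when the model or scenario changes.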
