Since Leapfrog's creation, the system has continuously evolved with the addition of new specialized agents. The platform now features a comprehensive agent library built on a robust toolkit framework, enabling sophisticated multi-agent workflows and autonomous task execution.

AI agents are software systems that use a large language model (LLM) as a reasoning engine but go beyond chat by taking actions in an environment. Instead of only generating text, an agent can interpret a goal, decide what to do next, call external capabilities (tools), observe the results, and iterate until the objective is achieved.

In practice, an "agent" is not a single model call - it is a control system wrapped around an LLM.

This architecture matters because it turns the LLM from a passive text generator into an adaptive problem-solver that can call tools, observe the results, and adjust its next step accordingly.

An agent is not just a chat model. A chat model produces responses; an agent operates. Think of an AI agent as a smart assistant that can run commands, fetch data, write artifacts, and iterate autonomously within defined constraints.

Agents are most useful when tasks are multi-step, partially specified, and feedback-driven.

If a task is single-shot and fully specified (e.g., "summarize this paragraph"), a non-agent LLM call is often simpler and cheaper.

Most agents follow a ReAct-style loop (Reason + Act), sometimes with explicit planning: the model reasons about what to do next, acts by calling a tool, observes the result, and repeats.

A useful way to think about the loop is that each iteration should:

Well-behaved agents stop for explicit, identifiable reasons rather than running until they are interrupted.
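To make the loop and its stop conditions concrete, here is a minimal sketch. It is not Leapfrog's implementation: `run_agent`, `StopReason`, the `"finish"` convention, and the scripted policy that stands in for the LLM are all hypothetical names chosen for illustration, and the three stop reasons shown are only common examples.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Tuple


class StopReason(Enum):
    GOAL_REACHED = "goal_reached"   # the policy declared the goal met
    STEP_LIMIT = "step_limit"       # the step budget ran out
    TOOL_ERROR = "tool_error"       # the policy asked for an unknown tool


@dataclass
class Step:
    thought: str
    tool: str
    argument: str
    observation: str


# A policy maps (goal, history) to (thought, tool name, argument).
# Returning the tool name "finish" signals that the goal is met (assumed convention).
Policy = Callable[[str, List[Step]], Tuple[str, str, str]]
Tools = Dict[str, Callable[[str], str]]


def run_agent(goal: str, policy: Policy, tools: Tools,
              max_steps: int = 5) -> Tuple[StopReason, List[Step]]:
    """Reason, act, observe, and repeat until an explicit stop reason applies."""
    history: List[Step] = []
    for _ in range(max_steps):
        thought, tool, argument = policy(goal, history)      # reason
        if tool == "finish":
            return StopReason.GOAL_REACHED, history
        if tool not in tools:
            return StopReason.TOOL_ERROR, history
        observation = tools[tool](argument)                   # act
        history.append(Step(thought, tool, argument, observation))  # observe
    return StopReason.STEP_LIMIT, history


def scripted_policy(goal: str, history: List[Step]) -> Tuple[str, str, str]:
    """Stand-in for an LLM call: one tool call, then finish."""
    if not history:
        return ("I need the row count first.", "count_rows", "sales.csv")
    return ("I have what I need.", "finish", "")


# Hypothetical tool that returns a canned observation.
TOOLS: Tools = {"count_rows": lambda path: f"{path} has 120 rows"}

reason, steps = run_agent("Report the size of sales.csv", scripted_policy, TOOLS)
print(reason.value, len(steps))
```

Returning the stop reason alongside the history makes it easy for a caller to tell a finished run from one that merely ran out of budget.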


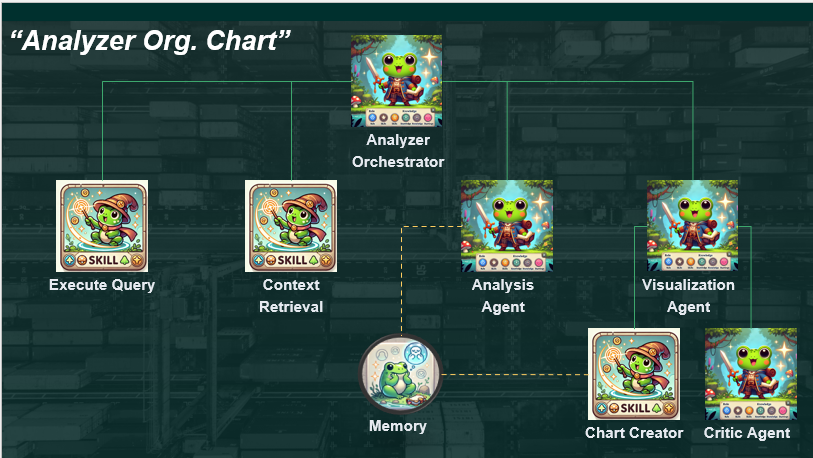

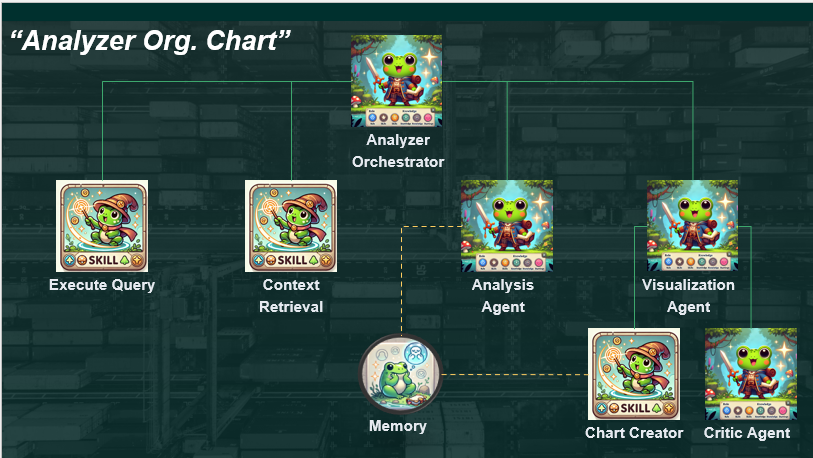

The Leapfrog ecosystem includes many specialized agents (some of which are shown in the image above), each designed for specific analytical and reporting tasks.

Why specialization helps:

A common pattern is an orchestrator (or "manager") agent that routes work to sub-agents and integrates their outputs into a final deliverable.
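The orchestrator pattern can be sketched in a few lines. The sub-agent names (`trend_agent`, `report_agent`) and the `orchestrate` function below are hypothetical placeholders, not agents from the Leapfrog library; in a real system each sub-agent would itself wrap an LLM.

```python
from typing import Callable, Dict, List, Tuple

# A sub-agent is modelled here as a plain function from task text to output text.
SubAgent = Callable[[str], str]


def trend_agent(task: str) -> str:
    return f"[trend] analysed: {task}"


def report_agent(task: str) -> str:
    return f"[report] drafted: {task}"


SUB_AGENTS: Dict[str, SubAgent] = {"trend": trend_agent, "report": report_agent}


def orchestrate(subtasks: List[Tuple[str, str]]) -> str:
    """Route each (agent name, task) pair to a sub-agent and merge the outputs."""
    outputs: List[str] = []
    for agent_name, task in subtasks:
        agent = SUB_AGENTS.get(agent_name)
        if agent is None:
            # Surface routing failures instead of silently dropping work.
            outputs.append(f"[unrouted] {task}")
            continue
        outputs.append(agent(task))
    return "\n".join(outputs)


deliverable = orchestrate([
    ("trend", "monthly sales"),
    ("report", "executive summary"),
])
print(deliverable)
```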

The agent toolkit is built on four foundational concepts that enable flexible and powerful agent development:

The agent is the core reasoning component: a large language model equipped with specialized skills and capabilities.

In addition to the model itself, an agent definition typically includes:

A skill is a versatile building block that packages how to do something. This modularity allows agents to be composed and extended dynamically.

A skill may combine one or more tools with guidance on when and how to use them.

A mechanism for injecting domain-specific expertise into agents at runtime, enabling them to operate effectively in specialized fields without requiring model retraining.
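One common way to inject expertise at runtime is to add domain notes to the agent's instructions before each run. The sketch below assumes that approach; `DOMAIN_NOTES`, `build_system_prompt`, and the example facts are invented for illustration and do not describe how Leapfrog stores or injects knowledge.

```python
from typing import Dict, List

# Hypothetical knowledge base: domain name -> short factual notes.
DOMAIN_NOTES: Dict[str, List[str]] = {
    "finance": [
        "Revenue is reported net of returns.",
        "Fiscal year starts in April.",
    ],
}


def build_system_prompt(base: str, domain: str,
                        notes: Dict[str, List[str]] = DOMAIN_NOTES) -> str:
    """Append domain notes to the base instructions; the model itself is unchanged."""
    facts = notes.get(domain, [])
    if not facts:
        return base
    bullet_list = "\n".join(f"- {fact}" for fact in facts)
    return f"{base}\n\nDomain notes ({domain}):\n{bullet_list}"


prompt = build_system_prompt("You are a careful analyst.", "finance")
print(prompt)
```

Because the notes are plain data, they can be updated or swapped per task without retraining the model.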

Memory is an intelligent storage system that helps agents overcome context-management challenges by preserving important information for future use, enabling continuity across interactions.
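As a rough illustration of the idea, the sketch below persists notes to a JSON file so that a later session can recall them. The `MemoryStore` class and its `remember`/`recall` methods are hypothetical, and a production memory system would likely use richer retrieval than exact topic matching.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


class MemoryStore:
    """Tiny file-backed memory: notes survive after the object that wrote them is gone."""

    def __init__(self, path: Path) -> None:
        self.path = path
        # Load notes from a previous session if the file already exists.
        self.notes: List[Dict[str, str]] = (
            json.loads(path.read_text()) if path.exists() else []
        )

    def remember(self, topic: str, fact: str) -> None:
        self.notes.append({"topic": topic, "fact": fact})
        self.path.write_text(json.dumps(self.notes))

    def recall(self, topic: str) -> List[str]:
        return [note["fact"] for note in self.notes if note["topic"] == topic]


with tempfile.TemporaryDirectory() as tmp:
    store_path = Path(tmp) / "memory.json"

    first_session = MemoryStore(store_path)
    first_session.remember("client", "Prefers quarterly summaries")

    # A fresh object simulates a later session reading the same file.
    second_session = MemoryStore(store_path)
    recalled = second_session.recall("client")

print(recalled)
```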

The current implementation supports several advanced capabilities enabled by the agent toolkit:

Agents can build structured plans that improve the accuracy and quality of final outputs through systematic decomposition of complex tasks.
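A structured plan can be represented as steps with dependencies, which fixes a valid execution order before any work starts. The sketch below uses Python's standard `graphlib` module for the ordering; the plan contents are invented, and nothing here reflects the plan format Leapfrog agents actually produce.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical plan: each step maps to the set of steps it depends on.
plan: Dict[str, Set[str]] = {
    "load data": set(),
    "clean data": {"load data"},
    "compute metrics": {"clean data"},
    "write report": {"compute metrics"},
}


def execution_order(steps: Dict[str, Set[str]]) -> List[str]:
    """Return the steps in an order that respects every dependency."""
    return list(TopologicalSorter(steps).static_order())


ordered_steps = execution_order(plan)
for number, step in enumerate(ordered_steps, start=1):
    print(f"{number}. {step}")
```

Decomposing the task this way lets each step be checked on its own before the next one begins.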

The system supports custom tools provided by users, allowing agents to integrate with existing workflows and data infrastructure.
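A user-provided tool is typically an ordinary function registered under a name and a description the agent can read. The decorator-based registry below is one plausible shape for this, offered as an assumption; `tool`, `TOOL_REGISTRY`, and `row_count` are hypothetical and are not part of any documented Leapfrog API.

```python
from typing import Any, Callable, Dict

# Hypothetical registry: tool name -> description and callable.
TOOL_REGISTRY: Dict[str, Dict[str, Any]] = {}


def tool(name: str, description: str) -> Callable[[Callable], Callable]:
    """Register a function so an agent could look it up by name."""
    def decorator(fn: Callable) -> Callable:
        TOOL_REGISTRY[name] = {"description": description, "run": fn}
        return fn
    return decorator


@tool("row_count", "Count data rows in CSV text, excluding the header.")
def row_count(csv_text: str) -> int:
    lines = [line for line in csv_text.splitlines() if line.strip()]
    return max(len(lines) - 1, 0)


# The agent side only needs the name and the argument.
result = TOOL_REGISTRY["row_count"]["run"]("a,b\n1,2\n3,4\n")
print(result)
```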

Persistent memory enables agents to maintain context and track important information across extended work sessions.

Complex tasks can be delegated to specialized sub-agents, allowing for an efficient division of labor and targeted use of expertise.

The system intelligently manages context so that agents have access to relevant information while staying within context window limits.
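One simple context-management strategy is to keep the system message and as many of the most recent messages as fit within a budget. The sketch below assumes that strategy and counts whitespace-separated words as a crude stand-in for tokens; `fit_to_budget` is a hypothetical helper, and the actual system may use summarization or retrieval instead.

```python
from typing import List


def rough_token_count(text: str) -> int:
    """Crude proxy for tokens: whitespace-separated words (an assumption)."""
    return len(text.split())


def fit_to_budget(messages: List[str], budget: int) -> List[str]:
    """Keep the first (system) message plus the newest messages that fit the budget."""
    if not messages:
        return []
    system, rest = messages[0], messages[1:]
    used = rough_token_count(system)
    kept: List[str] = []
    for message in reversed(rest):          # walk from newest to oldest
        cost = rough_token_count(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return [system] + kept[::-1]            # restore chronological order


conversation = ["You are an analyst.", "one two three", "four five", "six"]
trimmed = fit_to_budget(conversation, budget=7)
print(trimmed)
```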

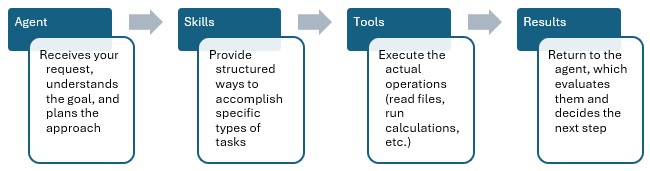

Below is a simple workflow showing how different components work together. For simplicity, not all components are included here.

An agent is the intelligent layer that decides what to do. It's like a project manager who understands the goal, plans the approach, and uses available skills and tools to get the job done.

Skills are packaged capabilities that combine one or more tools with guidance on when and how to use them. Think of a skill as a trained procedure or technique.

Tools are the specific actions an AI agent can perform. They are specialized and do one specific thing reliably. They don't make decisions - they just execute when called.
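The relationship between the three layers can be sketched as data. In this illustration a tool is a plain function, a skill bundles tools with guidance text, and the agent is reduced to the helpers that assemble what its model would see. `Skill`, `skill_guidance`, and `available_tools` are hypothetical names, not Leapfrog's.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Tool = Callable[[str], str]   # a tool does one thing and makes no decisions


def word_count(text: str) -> str:
    return str(len(text.split()))


@dataclass
class Skill:
    """One or more tools plus guidance on when and how to use them."""
    name: str
    guidance: str
    tools: Dict[str, Tool]


text_stats = Skill(
    name="text_stats",
    guidance="Use word_count when the user asks how long a text is.",
    tools={"word_count": word_count},
)


def skill_guidance(skills: List[Skill]) -> str:
    """Text an agent could place in its instructions to decide which tool fits."""
    return "\n".join(f"{skill.name}: {skill.guidance}" for skill in skills)


def available_tools(skills: List[Skill]) -> Dict[str, Tool]:
    """Flatten every skill's tools into one lookup table for execution."""
    merged: Dict[str, Tool] = {}
    for skill in skills:
        merged.update(skill.tools)
    return merged


tools = available_tools([text_stats])
answer = tools["word_count"]("agents call tools")
print(skill_guidance([text_stats]))
print(answer)
```

The decision about which tool to call stays with the agent; the tool itself only runs when invoked.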

As an AI agent works, it produces logs that record the steps it takes, the tools it calls, and a work summary. The AI Response sections are typically the most useful, as they explain the exploration plan, the work done, and the results of the exploration. These sections are generally addressed to the user, while the other sections mostly serve internal processes.

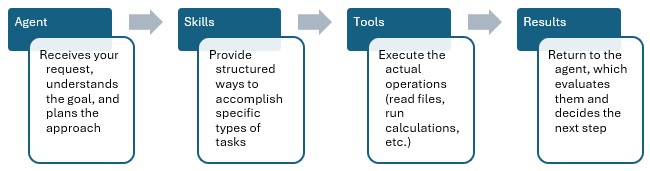

Below is a simple workflow showing how different components work together. For simplicity, not all components are included here.

An agent is the intelligent layer that decides what to do. It's like a project manager who understands the goal, plans the approach, and uses available skills and tools to get the job done.

Skills are packaged capabilities that combine one or more tools with guidance on when and how to use them. Think of a skill as a trained procedure or technique.

Tools are the specific actions an AI agent can perform. They are specialized and do one specific thing reliably. They don't make decisions - they just execute when called.

As an AI Agent works, it produces the logs which include steps that the agent takes, tools it calls, as well as a work summary. The AI Response sections are typically the most useful as they explain the exploration plan, the work it has done, and the results after exploration. This is generally a response to the user. While all others are more for internal processes.